Pedestrian orientation dynamics from high-fidelity measurements


Jan 14, 2020

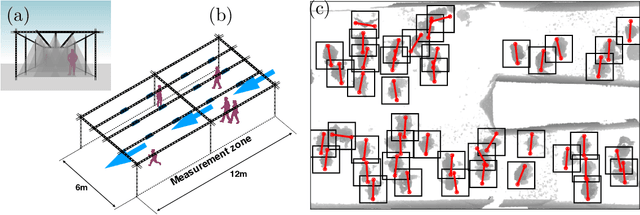

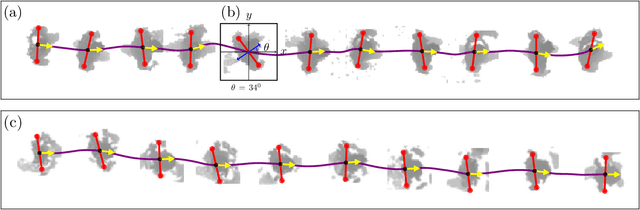

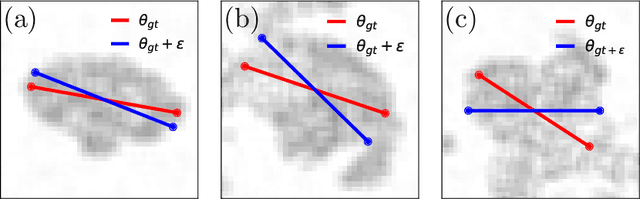

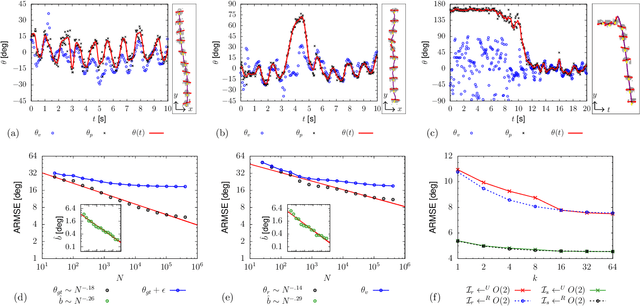

We investigate, in real-life conditions and with very high accuracy, the dynamics of body rotation, or yawing, of walking pedestrians. This is a highly complex task due to the wide variety in shapes, postures and walking gestures. We propose a novel measurement method based on a deep neural architecture that we train on the basis of generic physical properties of pedestrian motion. Specifically, we leverage the strong statistical correlation between individual velocity and body orientation: the velocity direction is typically orthogonal to the shoulder line. We make the reasonable assumption that this approximation, although slightly imperfect instantaneously, is correct on average. This enables us to use velocity data as training labels for a highly accurate point estimator of individual orientation, which we can train with no dedicated annotation labor. We discuss the measurement accuracy and show the error scaling on both synthetic and real-life data: our method is capable of estimating orientation with an error as low as 7.5 degrees. This tool opens up new possibilities in studies of human crowd dynamics where orientation is key. By analyzing the dynamics of body rotation in real-life conditions, we show that the instantaneous velocity direction can be described by the combination of orientation and a random delay, where the randomness is provided by an Ornstein-Uhlenbeck process centered on an average delay of 100 ms. Quantifying these dynamics was only possible thanks to a tool as precise as the one proposed.
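To make the labeling idea concrete, the following is a minimal sketch of how velocity-derived orientation labels could be computed from a tracked trajectory. It is not the authors' pipeline: the function names, the central finite-difference velocity estimate, and the `min_speed` cutoff of 0.3 m/s (used to skip near-stationary frames, where the velocity direction is ill-defined) are all assumptions made for illustration.

```python
import math


def velocity_heading_labels(xs, ys, dt, min_speed=0.3):
    """Per-frame heading angle in degrees, estimated from positions.

    xs, ys    : position samples in metres, one per frame
    dt        : time between frames in seconds
    min_speed : hypothetical cutoff (m/s); slower frames get None because
                the velocity direction is unreliable when nearly standing
    """
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two matching position samples")

    labels = []
    for i in range(n):
        # Central difference inside the track, one-sided at the ends.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        span = (hi - lo) * dt
        vx = (xs[hi] - xs[lo]) / span
        vy = (ys[hi] - ys[lo]) / span
        if math.hypot(vx, vy) < min_speed:
            labels.append(None)
        else:
            labels.append(math.degrees(math.atan2(vy, vx)))
    return labels


def shoulder_line_angle(heading_deg):
    """Angle of the shoulder line, taken as orthogonal to the heading.

    A line has no direction, so the result is folded into [0, 180).
    """
    return (heading_deg + 90.0) % 180.0


def angular_error(a_deg, b_deg):
    """Smallest absolute difference between two angles, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


# Usage: a pedestrian walking diagonally at constant speed.
track_x = [0.0, 0.5, 1.0, 1.5]
track_y = [0.0, 0.5, 1.0, 1.5]
headings = velocity_heading_labels(track_x, track_y, dt=0.5)
shoulders = [shoulder_line_angle(h) for h in headings if h is not None]
```

Labels of this kind are noisy frame by frame, which is consistent with the abstract's assumption that the velocity-orientation relation holds on average rather than instantaneously. The `angular_error` helper wraps differences across the 360 degree boundary, which matters when comparing an estimate against a label.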
