Joris Willems

Benchmarking high-fidelity pedestrian tracking systems for research, real-time monitoring and crowd control

Aug 26, 2021

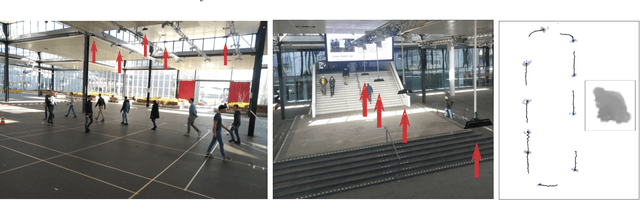

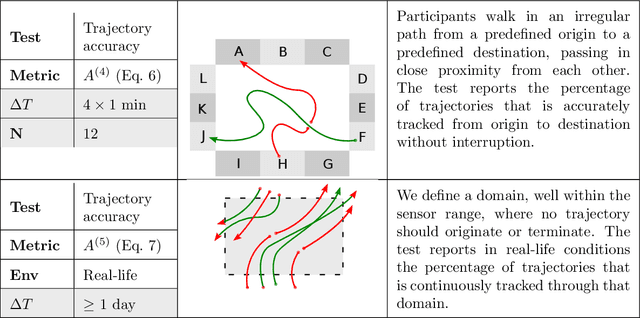

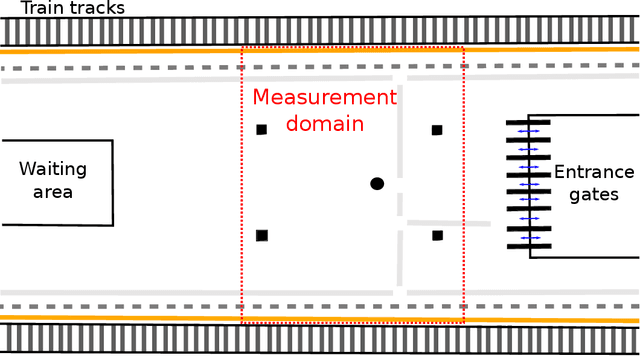

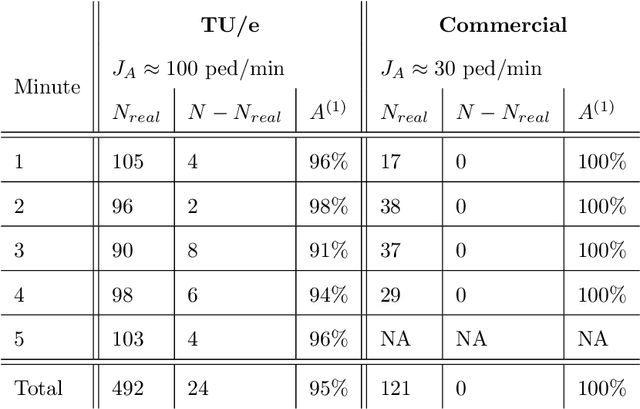

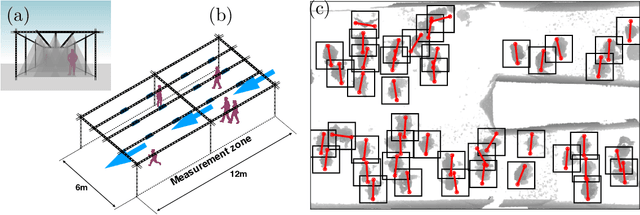

Abstract: High-fidelity pedestrian tracking in real-life conditions has been an important tool in fundamental crowd dynamics research, making it possible to quantify statistics of relevant observables including walking velocities, mutual distances and body orientations. As this technology advances, it is also becoming increasingly useful in society. In fact, continued urbanization is overwhelming existing pedestrian infrastructures such as transportation hubs and stations, generating an urgent need for real-time, highly accurate usage data, aimed at both flow monitoring and dynamics understanding. To successfully employ pedestrian tracking techniques in research and technology, it is crucial to validate and benchmark them for accuracy. This is necessary not only to guarantee data quality, but also to identify systematic errors. In this contribution, we present and discuss a benchmark suite, towards an open standard in the community, for privacy-respectful pedestrian tracking techniques. The suite is technology-independent and is applicable to academic and commercial pedestrian tracking systems, operating both in lab environments and in real-life conditions. The benchmark suite consists of 5 tests addressing specific aspects of pedestrian tracking quality, including accurate crowd flux estimation, density estimation, position detection and trajectory accuracy. The outputs of the tests are quality factors expressed as single numbers. We provide the benchmark results for two tracking systems, both operating in real-life conditions: one commercial, and the other based on overhead depth-maps and developed at TU Eindhoven. We discuss the results on the basis of the quality factors and report on the typical sensor and algorithmic performance. This enables us to highlight the current state of the art and its limitations, and to provide installation recommendations, with specific attention to multi-sensor setups and data stitching.

Pedestrian orientation dynamics from high-fidelity measurements

Jan 14, 2020

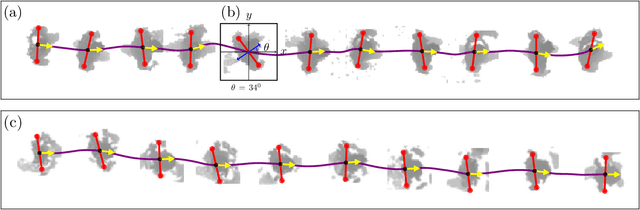

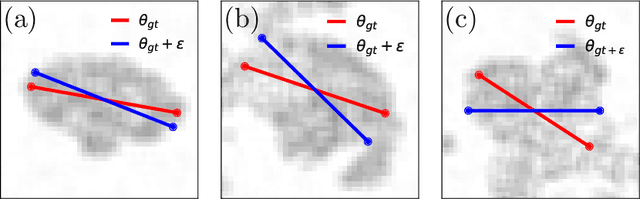

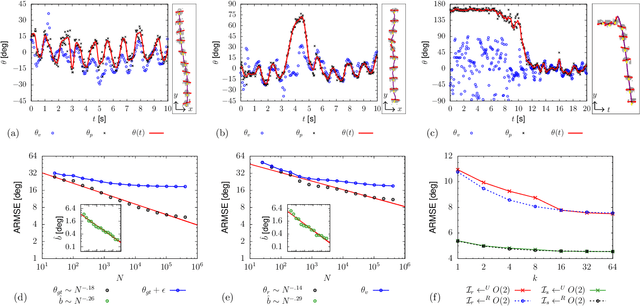

Abstract: We investigate in real-life conditions and with very high accuracy the dynamics of body rotation, or yawing, of walking pedestrians - a highly complex task due to the wide variety in shapes, postures and walking gestures. We propose a novel measurement method based on a deep neural architecture that we train on the basis of generic physical properties of the motion of pedestrians. Specifically, we leverage the strong statistical correlation between individual velocity and body orientation: the velocity direction is typically orthogonal to the shoulder line. We make the reasonable assumption that this approximation, although instantaneously slightly imperfect, is correct on average. This enables us to use velocity data as training labels for a highly accurate point-estimator of individual orientation, which we can train with no dedicated annotation labor. We discuss the measurement accuracy and show the error scaling, both on synthetic and real-life data: we show that our method is capable of estimating orientation with an error as low as 7.5 degrees. This tool opens up new possibilities in the studies of human crowd dynamics where orientation is key. By analyzing the dynamics of body rotation in real-life conditions, we show that the instantaneous velocity direction can be described by the combination of orientation and a random delay, where randomness is provided by an Ornstein-Uhlenbeck process centered on an average delay of 100 ms. Quantifying these dynamics was only possible thanks to a tool as precise as the one proposed.
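The two ideas in this abstract that lend themselves to a small illustration are the velocity-derived orientation labels and the Ornstein-Uhlenbeck delay. The Python sketch below is only a hedged illustration, not the authors' neural-network method: it computes heading labels from a trajectory by central differences, derives a shoulder-line angle under the stated orthogonality assumption, and simulates a delay fluctuating around 100 ms with an Euler-Maruyama scheme. All function names are hypothetical, and the relaxation time `relax_time` and noise amplitude `sigma` are assumed values that the abstract does not report.

```python
import numpy as np


def velocity_heading_labels(xy, dt):
    """Heading angle (radians) of the velocity at each interior sample.

    xy is an (N, 2) array of positions sampled every dt seconds;
    velocities are estimated with central differences.
    """
    xy = np.asarray(xy, dtype=float)
    v = (xy[2:] - xy[:-2]) / (2.0 * dt)
    return np.arctan2(v[:, 1], v[:, 0])


def shoulder_line_angle(heading):
    """Shoulder-line direction, assumed orthogonal to the heading.

    A line has no sign, so the result is wrapped to [0, pi).
    """
    return np.mod(heading + np.pi / 2.0, np.pi)


def simulate_ou_delay(n_steps, dt, mean_delay=0.1, relax_time=1.0,
                      sigma=0.02, seed=0):
    """Euler-Maruyama simulation of an Ornstein-Uhlenbeck delay (seconds).

    mean_delay = 0.1 s follows the abstract; relax_time and sigma are
    illustrative assumptions only.
    """
    rng = np.random.default_rng(seed)
    tau = np.empty(n_steps)
    tau[0] = mean_delay
    for k in range(1, n_steps):
        drift = -(tau[k - 1] - mean_delay) / relax_time * dt
        noise = sigma * np.sqrt(dt) * rng.standard_normal()
        tau[k] = tau[k - 1] + drift + noise
    return tau


if __name__ == "__main__":
    # A pedestrian walking diagonally at constant speed.
    track = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
    headings = velocity_heading_labels(track, dt=1.0)
    print(np.degrees(headings))
    print(np.degrees(shoulder_line_angle(headings)))
    print(simulate_ou_delay(20000, 0.01).mean())
```

In this sketch the heading labels play the role of the "free" training targets described in the abstract, while the simulated delay stays close to its 100 ms mean and relaxes back to it after each random excursion.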
