Albert Wu

One-Shot Transfer of Long-Horizon Extrinsic Manipulation Through Contact Retargeting

Apr 11, 2024

Abstract:Extrinsic manipulation, the use of environment contacts to achieve manipulation objectives, enables strategies that are otherwise impossible with a parallel jaw gripper. However, orchestrating a long-horizon sequence of contact interactions between the robot, object, and environment is notoriously challenging due to the scene diversity, large action space, and difficult contact dynamics. We observe that most extrinsic manipulations are combinations of short-horizon primitives, each of which depends strongly on initializing from a desirable contact configuration to succeed. Therefore, we propose to generalize one extrinsic manipulation trajectory to diverse objects and environments by retargeting contact requirements. We prepare a single library of robust short-horizon, goal-conditioned primitive policies, and design a framework to compose state constraints stemming from the contact specifications of each primitive. Given a test scene and a single demo prescribing the primitive sequence, our method enforces the state constraints on the test scene and finds intermediate goal states using inverse kinematics. The goals are then tracked by the primitive policies. Using a 7+1 DoF robotic arm-gripper system, we achieved an overall success rate of 80.5% on hardware over 4 long-horizon extrinsic manipulation tasks, each with up to 4 primitives. Our experiments cover 10 objects and 6 environment configurations. We further show empirically that our method admits a wide range of demonstrations, and that contact retargeting is indeed the key to successfully combining primitives for long-horizon extrinsic manipulation. Code and additional details are available at stanford-tml.github.io/extrinsic-manipulation.

Synthesize Dexterous Nonprehensile Pregrasp for Ungraspable Objects

May 08, 2023

Abstract:Daily objects embedded in a contextual environment are often ungraspable initially. Whether it is a book sandwiched by other books on a fully packed bookshelf or a piece of paper lying flat on the desk, a series of nonprehensile pregrasp maneuvers is required to manipulate the object into a graspable state. Humans are proficient at utilizing environmental contacts to achieve manipulation tasks that are otherwise impossible, but synthesizing such nonprehensile pregrasp behaviors is challenging for existing methods. We present a novel method that combines graph search, optimal control, and a learning-based objective function to synthesize physically realistic and diverse nonprehensile pregrasp motions that leverage external contacts. Since the "graspability" of an object in the context of its surroundings is difficult to define, we utilize a dataset of dexterous grasps to learn a metric which implicitly takes into account the exposed surface of the object and the fingertip locations. Our method can efficiently discover hand and object trajectories that are certified to be physically feasible by the simulation and kinematically achievable by the dexterous hand. We evaluate our method on eight challenging scenarios where nonprehensile pregrasps are required to succeed. We also show that our method can be applied to unseen objects different from those in the training dataset. Finally, we report quantitative analyses on the generalization and robustness of our method, as well as an ablation study.

* 11 pages, 9 figures, SIGGRAPH Conference Proceedings 2023

Learning Diverse and Physically Feasible Dexterous Grasps with Generative Model and Bilevel Optimization

Jul 01, 2022

Abstract:To fully utilize the versatility of a multi-finger dexterous robotic hand for object grasping, one must satisfy complex physical constraints introduced by hand-object interaction and object geometry during grasp planning. We propose an integrative approach of combining a generative model and a bilevel optimization to compute diverse grasps for novel unseen objects. First, a grasp prediction is obtained from a conditional variational autoencoder trained on merely six YCB objects. The prediction is then projected onto the manifold of kinematically and dynamically feasible grasps by jointly solving collision-aware inverse kinematics, force closure, and friction constraints as one nonconvex bilevel optimization. We demonstrate the effectiveness of our method on hardware by successfully grasping a wide range of unseen household objects, including adversarial shapes challenging to other types of robotic grippers. A video summary of our results is available at https://youtu.be/9DTrImbN99I.

Robust-RRT: Probabilistically-Complete Motion Planning for Uncertain Nonlinear Systems

May 16, 2022

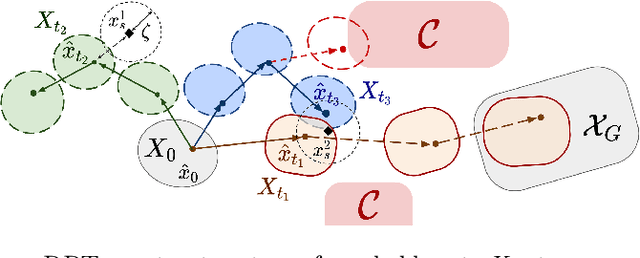

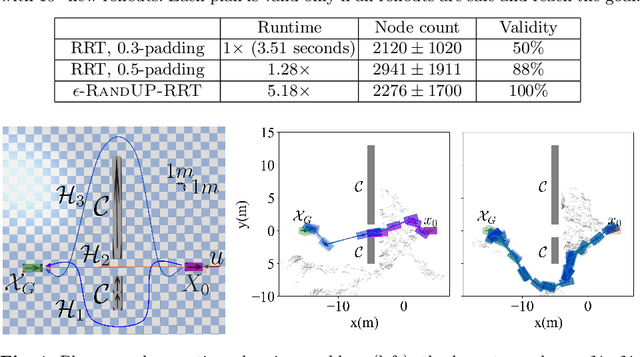

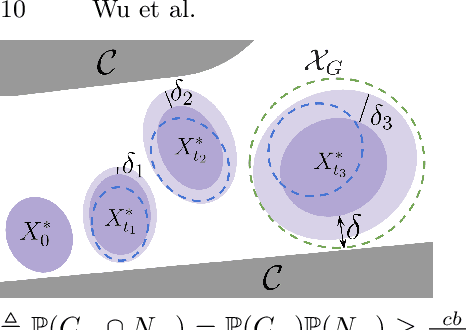

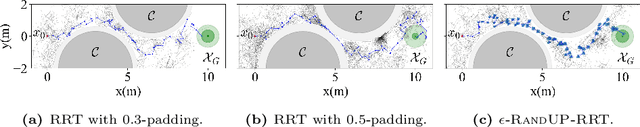

Abstract:Robust motion planning entails computing a global motion plan that is safe under all possible uncertainty realizations, be it in the system dynamics, the robot's initial position, or external disturbances. Current approaches for robust motion planning either lack theoretical guarantees or make restrictive assumptions on the system dynamics and uncertainty distributions. In this paper, we address these limitations by proposing the robust rapidly-exploring random-tree (Robust-RRT) algorithm, which integrates forward reachability analysis directly into sampling-based control trajectory synthesis. We prove that Robust-RRT is probabilistically complete (PC) for nonlinear Lipschitz continuous dynamical systems with bounded uncertainty. In other words, Robust-RRT eventually finds a robust motion plan that is feasible under all possible uncertainty realizations, assuming such a plan exists. Because our analysis explicitly considers the time evolution of reachable sets along control trajectories, it applies even to unstable systems that admit only short-horizon feasible plans, and PC extends to unstabilizable systems. To the best of our knowledge, this is the most general PC proof for robust sampling-based motion planning, in terms of the types of uncertainties and dynamical systems it can handle. Considering that an exact computation of reachable sets can be computationally expensive for some dynamical systems, we incorporate sampling-based reachability analysis into Robust-RRT and demonstrate our robust planner on nonlinear, underactuated, and hybrid systems.
