Alan Williams

Is AI currently capable of identifying wild oysters? A comparison of human annotators against the AI model, ODYSSEE

May 06, 2025

Abstract:Oysters are ecologically and commercially important species that require frequent monitoring to track population demographics (e.g. abundance, growth, mortality). Current methods of monitoring oyster reefs often require destructive sampling methods and extensive manual effort. Therefore, they are suboptimal for small-scale or sensitive environments. A recent alternative, the ODYSSEE model, was developed to use deep learning techniques to identify live oysters using video or images taken in the field of oyster reefs to assess abundance. The validity of this model in identifying live oysters on a reef was compared to expert and non-expert annotators. In addition, we identified potential sources of prediction error. Although the model can make inferences significantly faster than expert and non-expert annotators (39.6 s, $2.34 \pm 0.61$ h, $4.50 \pm 1.46$ h, respectively), the model overpredicted the number of live oysters, achieving lower accuracy (63\%) in identifying live oysters compared to experts (74\%) and non-experts (75\%) alike. Image quality was an important factor in determining the accuracy of the model and the annotators. Better quality images improved human accuracy and worsened model accuracy. Although ODYSSEE was not sufficiently accurate, we anticipate that future training on higher-quality images, utilizing additional live imagery, and incorporating additional annotation training classes will greatly improve the model's predictive power based on the results of this analysis. Future research should address methods that improve the detection of living vs. dead oysters.

Adaptive conditional latent diffusion maps beam loss to 2D phase space projections

Feb 25, 2025

Abstract:Beam loss monitors (BLM) and beam current monitors (BCM) are ubiquitous at particle accelerators around the world. These simple devices provide non-invasive high-level beam measurements, but give no insight into the detailed 6D (x,y,z,px,py,pz) beam phase space distributions or dynamics. We show that generative conditional latent diffusion models can learn intricate patterns to map waveforms of tens of BLMs or BCMs along an accelerator to detailed 2D projections of a charged particle beam's 6D phase space density. This transformational method can be used at any particle accelerator to transform simple non-invasive devices into detailed beam phase space diagnostics. We demonstrate this concept via multi-particle simulations of the high intensity beam in the kilometer-long LANSCE linear proton accelerator.

CBOL-Tuner: Classifier-pruned Bayesian optimization to explore temporally structured latent spaces for particle accelerator tuning

Dec 02, 2024

Abstract:Complex dynamical systems, such as particle accelerators, often require complicated and time-consuming tuning procedures for optimal performance. It may also be required that these procedures estimate the optimal system parameters, which govern the dynamics of a spatiotemporal beam -- this can be a high-dimensional optimization problem. To address this, we propose a Classifier-pruned Bayesian Optimization-based Latent space Tuner (CBOL-Tuner), a framework for efficient exploration within a temporally-structured latent space. The CBOL-Tuner integrates a convolutional variational autoencoder (CVAE) for latent space representation, a long short-term memory (LSTM) network for temporal dynamics, a dense neural network (DNN) for parameter estimation, and a classifier-pruned Bayesian optimizer (C-BO) to adaptively search and filter the latent space for optimal solutions. CBOL-Tuner demonstrates superior performance in identifying multiple optimal settings and outperforms alternative global optimization methods.

3D Water Quality Mapping using Invariant Extended Kalman Filtering for Underwater Robot Localization

Sep 17, 2024

Abstract:Water quality mapping for critical parameters such as temperature, salinity, and turbidity is crucial for assessing an aquaculture farm's health and yield capacity. Traditional approaches involve using boats or human divers, which are time-constrained and lack depth variability. This work presents an innovative approach to 3D water quality mapping in shallow water environments using a BlueROV2 equipped with GPS and a water quality sensor. This system allows for accurate location correction by resurfacing when errors occur. This study is being conducted at an oyster farm in the Chesapeake Bay, USA, providing a more comprehensive and precise water quality analysis in aquaculture settings.

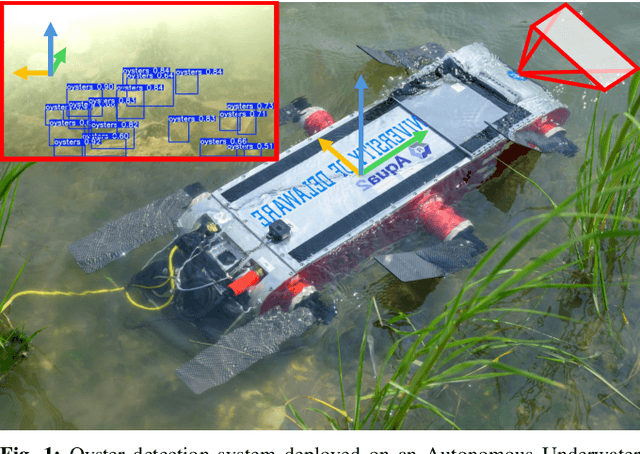

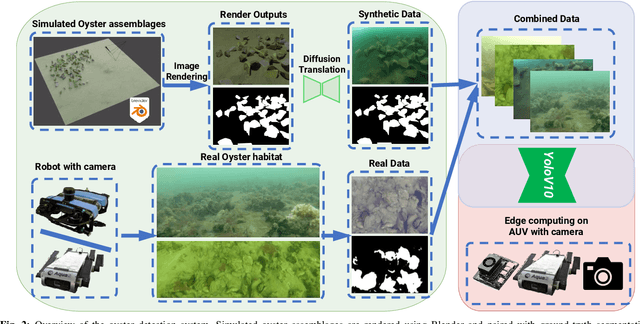

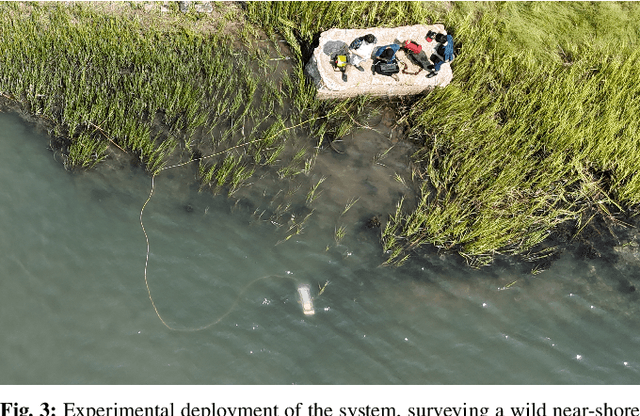

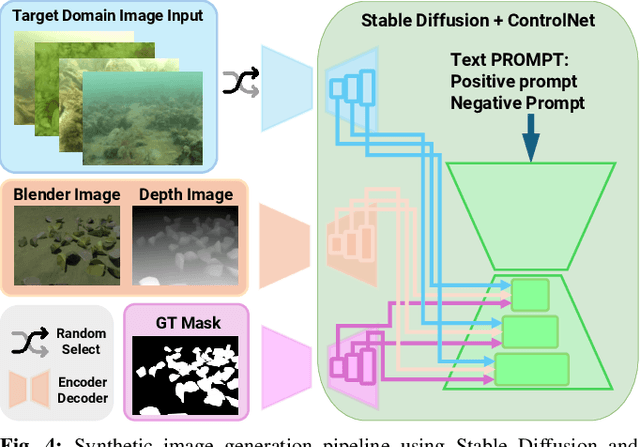

ODYSSEE: Oyster Detection Yielded by Sensor Systems on Edge Electronics

Sep 11, 2024

Abstract:Oysters are a keystone species in coastal ecosystems, offering significant economic, environmental, and cultural benefits. However, current monitoring systems are often destructive, typically involving dredging to physically collect and count oysters. A nondestructive alternative is manual identification from video footage collected by divers, which is time-consuming, labor-intensive, and dependent on expert input. An alternative to human monitoring is the deployment of a system with trained object detection models that performs real-time, on-edge oyster detection in the field. One such platform is the Aqua2 robot. Effective training of these models requires extensive high-quality data, which is difficult to obtain in marine settings. To address these complications, we introduce a novel method that leverages stable diffusion to generate high-quality synthetic data for the marine domain. We exploit diffusion models to create photorealistic marine imagery, using ControlNet inputs to ensure consistency with the segmentation ground-truth mask, the geometry of the scene, and the target domain of real underwater images for oysters. The resulting dataset is used to train a YOLOv10-based vision model, achieving a state-of-the-art 0.657 mAP@50 for oyster detection on the Aqua2 platform. The system we introduce not only improves oyster habitat monitoring, but also paves the way to autonomous surveillance for various tasks in marine contexts, improving aquaculture and conservation efforts.

Time-inversion of spatiotemporal beam dynamics using uncertainty-aware latent evolution reversal

Aug 14, 2024

Abstract:Charged particle dynamics under the influence of electromagnetic fields is a challenging spatiotemporal problem. Many high performance physics-based simulators for predicting behavior in a charged particle beam are computationally expensive, limiting their utility for solving inverse problems online. The problem of estimating upstream six-dimensional phase space given downstream measurements of charged particles in an accelerator is an inverse problem of growing importance. This paper introduces a reverse Latent Evolution Model (rLEM) designed for temporal inversion of forward beam dynamics. In this two-step self-supervised deep learning framework, we utilize a Conditional Variational Autoencoder (CVAE) to project 6D phase space projections of a charged particle beam into a lower-dimensional latent distribution. Subsequently, we autoregressively learn the inverse temporal dynamics in the latent space using a Long Short-Term Memory (LSTM) network. The coupled CVAE-LSTM framework can predict 6D phase space projections across all upstream accelerating sections based on single or multiple downstream phase space measurements as inputs. The proposed model also captures the aleatoric uncertainty of the high-dimensional input data within the latent space. This uncertainty, which reflects potential uncertain measurements at a given module, is propagated through the LSTM to estimate uncertainty bounds for all upstream predictions, demonstrating the robustness of the LSTM against in-distribution variations in the input data.

A conditional latent autoregressive recurrent model for generation and forecasting of beam dynamics in particle accelerators

Mar 19, 2024

Abstract:Particle accelerators are complex systems that focus, guide, and accelerate intense charged particle beams to high energy. Beam diagnostics present a challenging problem due to limited non-destructive measurements, computationally demanding simulations, and inherent uncertainties in the system. We propose a two-step unsupervised deep learning framework named Conditional Latent Autoregressive Recurrent Model (CLARM) for learning the spatiotemporal dynamics of charged particles in accelerators. CLARM consists of a Conditional Variational Autoencoder (CVAE) transforming six-dimensional phase space into a lower-dimensional latent distribution and a Long Short-Term Memory (LSTM) network capturing temporal dynamics in an autoregressive manner. CLARM can generate projections at various accelerator modules by sampling and decoding the latent space representation. The model also forecasts future states (downstream locations) of charged particles from past states (upstream locations). The results demonstrate that the generative and forecasting ability of the proposed approach is promising when tested against a variety of evaluation metrics.
