Aadi Palnitkar

Mil-SCORE: Benchmarking Long-Context Geospatial Reasoning and Planning in Large Language Models

Jan 29, 2026

Abstract: As large language models (LLMs) are applied to increasingly long and complex tasks, there is a growing need for realistic long-context benchmarks that require selective reading and integration of heterogeneous, multi-modal information sources. This need is especially acute for geospatial planning problems, such as those found in planning for large-scale military operations, which demand fast and accurate reasoning over maps, orders, intelligence reports, and other distributed data. To address this gap, we present MilSCORE (Military Scenario Contextual Reasoning), to our knowledge the first scenario-level dataset of expert-authored, multi-hop questions grounded in a complex, simulated military planning scenario used for training. MilSCORE is designed to evaluate high-stakes decision-making and planning, probing LLMs' ability to combine tactical and spatial reasoning across multiple sources and to reason over long-horizon, geospatially rich context. The benchmark includes a diverse set of question types across seven categories targeting both factual recall and multi-step reasoning about constraints, strategy, and spatial analysis. We provide an evaluation protocol and report baseline results for a range of contemporary vision-language models. Our findings highlight substantial headroom on MilSCORE, indicating that current systems struggle with realistic, scenario-level long-context planning, and positioning MilSCORE as a challenging testbed for future work.

Hold Onto That Thought: Assessing KV Cache Compression On Reasoning

Dec 12, 2025

Abstract: Large language models (LLMs) have demonstrated remarkable performance on long-context tasks, but are often bottlenecked by memory constraints. Namely, the KV cache, which is used to significantly speed up attention computations, grows linearly with context length. A suite of compression algorithms has been introduced to alleviate cache growth by evicting unimportant tokens. However, several popular strategies target the prefill phase, i.e., processing long prompt context, and their performance is rarely assessed on reasoning tasks that require long decoding. In particular, short but complex prompts, such as those in benchmarks like GSM8K and MATH500, often benefit from multi-step reasoning and self-reflection, resulting in thinking sequences thousands of tokens long. In this work, we benchmark the performance of several popular compression strategies on long-reasoning tasks. For the non-reasoning Llama-3.1-8B-Instruct, we determine that no single strategy fits all, and that performance is heavily influenced by dataset type. However, we discover that H2O and our decoding-enabled variant of SnapKV are dominant strategies for reasoning models, indicating the utility of heavy-hitter tracking for reasoning traces. We also find that eviction strategies at low budgets can produce longer reasoning traces, revealing a tradeoff between cache size and inference costs.
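To make the linear growth of the KV cache concrete, here is a minimal back-of-the-envelope sketch. It is not taken from the paper. The function name `kv_cache_bytes` and its parameters are hypothetical, and the defaults (32 layers, 8 key/value heads, head dimension 128, 2 bytes per element for fp16) are assumed values for a Llama-3.1-8B-style model. The optional `budget` argument models a fixed token budget of the kind eviction strategies enforce.

```python
def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128,
                   bytes_per_elem=2, budget=None):
    """Approximate KV cache size in bytes for one sequence.

    Defaults are assumed values for a Llama-3.1-8B-style model in fp16;
    they are illustrative and not taken from the abstract.
    """
    # With an eviction budget, at most `budget` tokens stay in the cache.
    cached_tokens = seq_len if budget is None else min(seq_len, budget)
    # Factor of 2: one key vector and one value vector per head, per layer.
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem
    return per_token * cached_tokens


for n in (1_024, 8_192, 32_768):
    full = kv_cache_bytes(n) / 2**20
    capped = kv_cache_bytes(n, budget=1_024) / 2**20
    print(f"{n:>6} tokens: {full:.0f} MiB uncompressed, {capped:.0f} MiB with a 1024-token budget")
```

Under these assumptions each cached token costs 128 KiB, so a reasoning trace of a few thousand tokens already occupies hundreds of MiB per sequence, while a fixed budget keeps the cache constant regardless of trace length.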

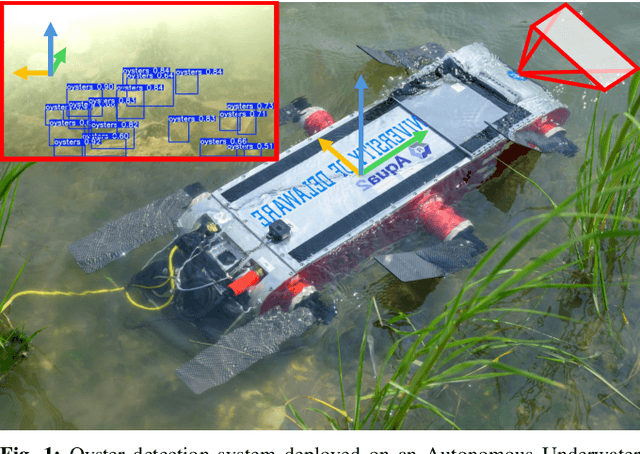

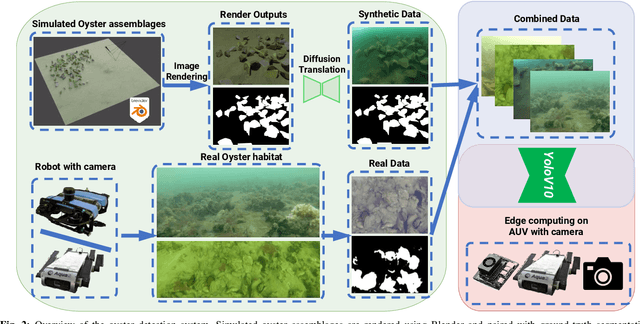

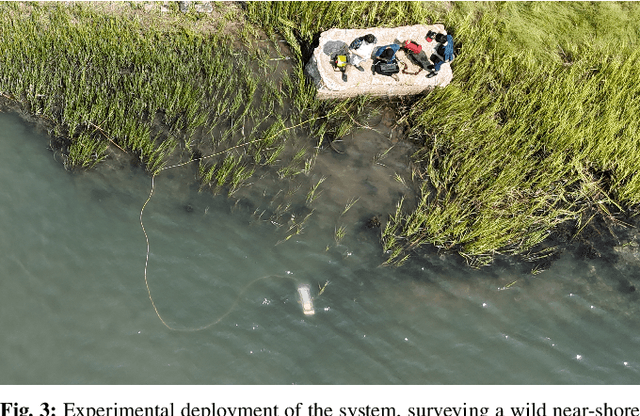

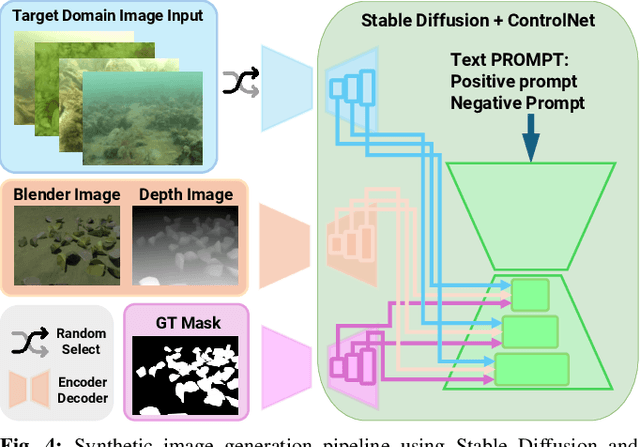

ODYSSEE: Oyster Detection Yielded by Sensor Systems on Edge Electronics

Sep 11, 2024

Abstract: Oysters are a keystone species in coastal ecosystems, offering significant economic, environmental, and cultural benefits. However, current monitoring systems are often destructive, typically involving dredging to physically collect and count oysters. A nondestructive alternative is manual identification from video footage collected by divers, which is time-consuming, labor-intensive, and dependent on expert input. An alternative to human monitoring is the deployment of a system with trained object detection models that performs real-time, on-edge oyster detection in the field. One such platform is the Aqua2 robot. Effective training of these models requires extensive high-quality data, which is difficult to obtain in marine settings. To address these complications, we introduce a novel method that leverages stable diffusion to generate high-quality synthetic data for the marine domain. We exploit diffusion models to create photorealistic marine imagery, using ControlNet inputs to ensure consistency with the segmentation ground-truth mask, the geometry of the scene, and the target domain of real underwater images for oysters. The resulting dataset is used to train a YOLOv10-based vision model, achieving a state-of-the-art 0.657 mAP@50 for oyster detection on the Aqua2 platform. The system we introduce not only improves oyster habitat monitoring, but also paves the way to autonomous surveillance for various tasks in marine contexts, improving aquaculture and conservation efforts.

ChatSim: Underwater Simulation with Natural Language Prompting

Aug 09, 2023

Abstract: Robots are becoming an essential part of many operations, including marine exploration and environmental monitoring. However, the underwater environment presents many challenges, including high pressure, limited visibility, and harsh conditions that can damage equipment. Real-world experimentation can be expensive and difficult to execute. Therefore, it is essential to simulate the performance of underwater robots in comparable environments to ensure their optimal functionality within practical real-world contexts. OysterSim generates photo-realistic images and segmentation masks of objects in marine environments, providing valuable training data for underwater computer vision applications. By integrating ChatGPT into underwater simulations, users can convey their thoughts effortlessly and intuitively create desired underwater environments without intricate coding. The objective of ChatSim is to integrate Large Language Models (LLMs) with a simulation environment (OysterSim), enabling direct control of the simulated environment via natural language input. This advancement can greatly enhance the capabilities of underwater simulation, with far-reaching benefits for marine exploration and broader scientific research endeavors.
