Ajith Suresh

Comments on "Privacy-Enhanced Federated Learning Against Poisoning Adversaries"

Sep 30, 2024

Abstract:In August 2021, Liu et al. (IEEE TIFS'21) proposed a privacy-enhanced framework named PEFL to efficiently detect poisoning behaviours in Federated Learning (FL) using homomorphic encryption. In this article, we show that PEFL does not preserve privacy. In particular, we illustrate that PEFL reveals the entire gradient vector of all users in clear to one of the participating entities, thereby violating privacy. Furthermore, we show that an immediate fix for this issue is still insufficient to achieve privacy by pointing out multiple flaws in the proposed system. Note: Although the privacy issues mentioned in Section II were published in January 2023 (Schneider et al., IEEE TIFS'23), several subsequent papers continued to reference Liu et al. (IEEE TIFS'21) as a potential solution for private federated learning. While a few works have acknowledged the privacy concerns we raised, several subsequent works either propagate these errors or adopt the constructions from Liu et al. (IEEE TIFS'21), thereby unintentionally inheriting the same privacy vulnerabilities. We believe this oversight is partly due to the limited visibility of our comments paper at TIFS'23 (Schneider et al., IEEE TIFS'23). Consequently, to prevent the continued propagation of the flawed algorithms in Liu et al. (IEEE TIFS'21) into future research, we are also posting this article on ePrint.

HyFL: A Hybrid Approach For Private Federated Learning

Feb 20, 2023

Abstract:As a distributed machine learning paradigm, federated learning (FL) conveys a sense of privacy to contributing participants because training data never leaves their devices. However, gradient updates and the aggregated model still reveal sensitive information. In this work, we propose HyFL, a new framework that combines private training and inference with secure aggregation and hierarchical FL to provide end-to-end protection and facilitate large-scale global deployments. Additionally, we show that HyFL strictly limits the attack surface for malicious participants: they are restricted to data-poisoning attacks and cannot significantly reduce accuracy.

ScionFL: Secure Quantized Aggregation for Federated Learning

Oct 13, 2022

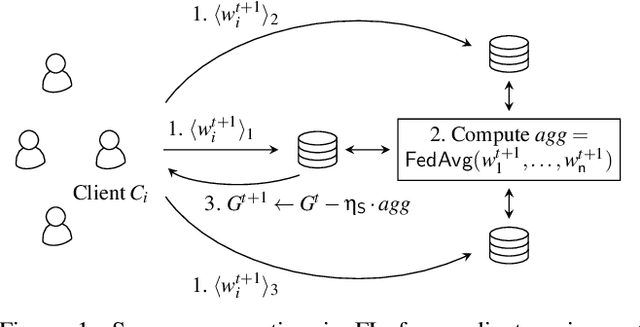

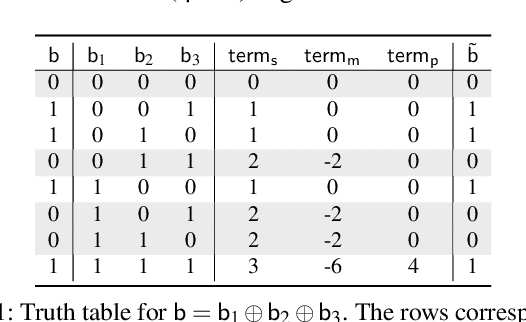

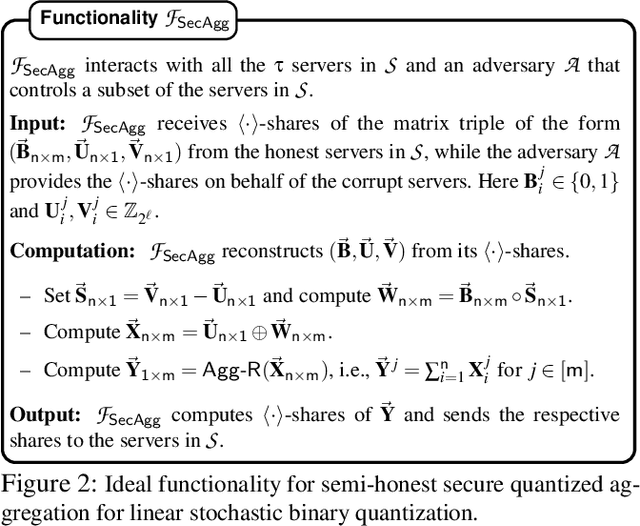

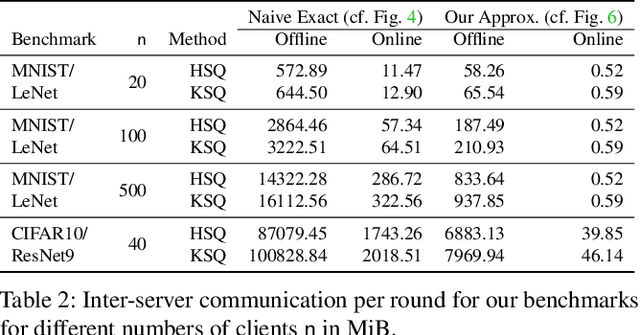

Abstract:Privacy concerns in federated learning (FL) are commonly addressed with secure aggregation schemes that prevent a central party from observing plaintext client updates. However, most such schemes neglect orthogonal FL research that aims at reducing communication between clients and the aggregator and is instrumental in facilitating cross-device FL with thousands and even millions of (mobile) participants. In particular, quantization techniques can typically reduce client-server communication by a factor of 32x. In this paper, we unite both research directions by introducing an efficient secure aggregation framework based on outsourced multi-party computation (MPC) that supports any linear quantization scheme. Specifically, we design a novel approximate version of an MPC-based secure aggregation protocol with support for multiple stochastic quantization schemes, including ones that utilize the randomized Hadamard transform and Kashin's representation. In our empirical performance evaluation, we show that with no additional overhead for clients and moderate inter-server communication, we achieve similar training accuracy as insecure schemes for standard FL benchmarks. Beyond this, we present an efficient extension to our secure quantized aggregation framework that effectively defends against state-of-the-art untargeted poisoning attacks.
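A 32x reduction is what one would expect when each 32-bit floating-point coordinate of an update is replaced by a single bit. The following is a minimal plaintext sketch of such a 1-bit stochastic quantizer, not the ScionFL protocol itself: it involves no MPC, and the function names (`quantize`, `dequantize`, `average_of_quantized`) are hypothetical. It illustrates the property that makes stochastic quantization usable for aggregation: the dequantized value equals the original coordinate in expectation.

```python
import random


def quantize(vec, rng):
    """1-bit stochastic quantization: each coordinate becomes 0 or 1.

    A coordinate x in [lo, hi] maps to 1 with probability
    (x - lo) / (hi - lo), so lo + bit * (hi - lo) equals x in expectation.
    """
    lo, hi = min(vec), max(vec)
    span = hi - lo
    if span == 0:
        # All coordinates are equal; every bit can be 0.
        return [0] * len(vec), lo, hi
    bits = [1 if rng.random() < (x - lo) / span else 0 for x in vec]
    return bits, lo, hi


def dequantize(bits, lo, hi):
    """Map each bit back to one of the two representable values."""
    return [lo + b * (hi - lo) for b in bits]


def average_of_quantized(vec, trials, seed=0):
    """Average many independent quantizations of the same vector."""
    rng = random.Random(seed)
    sums = [0.0] * len(vec)
    for _ in range(trials):
        bits, lo, hi = quantize(vec, rng)
        for i, v in enumerate(dequantize(bits, lo, hi)):
            sums[i] += v
    return [s / trials for s in sums]
```

Averaging many quantized copies of the same vector approaches the original vector, which is the same effect that lets an aggregator recover a good estimate of the mean update from many clients' quantized contributions.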

MPClan: Protocol Suite for Privacy-Conscious Computations

Jun 24, 2022

Abstract:The growing volumes of data being collected and its analysis to provide better services are creating worries about digital privacy. To address privacy concerns and give practical solutions, the literature has relied on secure multiparty computation. However, recent research has mostly focused on the small-party honest-majority setting of up to four parties, noting efficiency concerns. In this work, we extend the strategies to support a larger number of participants in an honest-majority setting with efficiency at the center stage. Cast in the preprocessing paradigm, our semi-honest protocol improves the online complexity of the decade-old state-of-the-art protocol of Damgård and Nielsen (CRYPTO'07). In addition to having an improved online communication cost, we can shut down almost half of the parties in the online phase, thereby saving up to 50% in the system's operational costs. Our maliciously secure protocol also enjoys similar benefits and requires only half of the parties, except for one-time verification, towards the end. To showcase the practicality of the designed protocols, we benchmark popular applications such as deep neural networks, graph neural networks, genome sequence matching, and biometric matching using prototype implementations. Our improved protocols aid in bringing up to 60-80% savings in monetary cost over prior work.

MPCLeague: Robust MPC Platform for Privacy-Preserving Machine Learning

Dec 26, 2021

Abstract:In the modern era of computing, machine learning tools have demonstrated their potential in vital sectors, such as healthcare and finance, to derive proper inferences. The sensitive and confidential nature of the data in such sectors raises genuine concerns for data privacy. This motivated the area of Privacy-preserving Machine Learning (PPML), where privacy of data is guaranteed. In this thesis, we design an efficient platform, MPCLeague, for PPML in the Secure Outsourced Computation (SOC) setting using Secure Multi-party Computation (MPC) techniques. MPC, the holy-grail problem of secure distributed computing, enables a set of n mutually distrusting parties to perform joint computation on their private inputs in a way that no coalition of t parties can learn more information than the output (privacy) or affect the true output of the computation (correctness). While MPC, in general, has been a subject of extensive research, the area of MPC with a small number of parties has drawn popularity of late mainly due to its application to real-time scenarios, efficiency and simplicity. This thesis focuses on designing efficient MPC frameworks for 2, 3 and 4 parties that tolerate at most one corruption and support ring structures. At the heart of this thesis are four frameworks (ASTRA, SWIFT, Tetrad, and ABY2.0), each catering to a different setting. The practicality of our framework is argued through improvements in the benchmarking of widely used ML algorithms: Linear Regression, Logistic Regression, Neural Networks, and Support Vector Machines. We propose two variants for each of our frameworks, with one variant aiming to minimise the execution time while the other focuses on the monetary cost. The concrete efficiency gains of our frameworks coupled with the stronger security guarantee of robustness make our platform an ideal choice for a real-time deployment of PPML techniques.

Tetrad: Actively Secure 4PC for Secure Training and Inference

Jun 08, 2021

Abstract:In this work, we design an efficient mixed-protocol framework, Tetrad, with applications to privacy-preserving machine learning. It is designed for the four-party setting with at most one active corruption and supports rings. Our fair multiplication protocol requires communicating only 5 ring elements, improving over the state-of-the-art protocol of Trident (Chaudhari et al. NDSS'20). The technical highlights of Tetrad include efficient (a) truncation without any overhead, (b) multi-input multiplication protocols for arithmetic and boolean worlds, (c) garbled-world, tailor-made for the mixed-protocol framework, and (d) conversion mechanisms to switch between the computation styles. The fair framework is also extended to provide robustness without inflating the costs. The competence of Tetrad is tested with benchmarks for deep neural networks such as LeNet and VGG16, and for support vector machines. One variant of our framework aims at minimizing the execution time, while the other focuses on the monetary cost. We observe improvements up to 6x over Trident across these parameters.

SWIFT: Super-fast and Robust Privacy-Preserving Machine Learning

May 20, 2020

Abstract:Performing ML computation on private data while maintaining data privacy, also known as Privacy-preserving Machine Learning (PPML), is an emergent field of research. Recently, PPML has seen a visible shift towards the adoption of the Secure Outsourced Computation (SOC) paradigm, due to the heavy computation that it entails. In the SOC paradigm, computation is outsourced to a set of powerful and specially equipped servers that provide service on a pay-per-use basis. In this work, we propose SWIFT, a robust PPML framework for a range of ML algorithms in the SOC setting, that guarantees output delivery to the users irrespective of any adversarial behaviour. Robustness, a highly desirable feature, evokes user participation without the fear of denial of service. At the heart of our framework lies a highly-efficient, maliciously-secure, three-party computation (3PC) over rings that provides guaranteed output delivery (GOD) in the honest-majority setting. To the best of our knowledge, SWIFT is the first robust and efficient PPML framework in the 3PC setting. SWIFT is as fast as the best-known 3PC framework BLAZE (Patra et al. NDSS'20), which only achieves fairness. Fairness ensures either all or none receive the output, whereas GOD ensures guaranteed output delivery no matter what. We extend our 3PC framework for four parties (4PC). In this regime, SWIFT is as fast as the best-known fair 4PC framework Trident (Chaudhari et al. NDSS'20) and twice as fast as the best-known robust 4PC framework FLASH (Byali et al. PETS'20). We demonstrate the practical relevance of our framework by benchmarking two important applications: (i) ML algorithms (Logistic Regression and Neural Network), and (ii) Biometric matching, both over a 64-bit ring in the WAN setting. Our measurements support the claims above.

BLAZE: Blazing Fast Privacy-Preserving Machine Learning

May 18, 2020

Abstract:Machine learning tools have illustrated their potential in many significant sectors such as healthcare and finance, to aid in deriving useful inferences. The sensitive and confidential nature of the data in such sectors raises natural concerns for the privacy of data. This motivated the area of Privacy-preserving Machine Learning (PPML) where privacy of the data is guaranteed. Typically, ML techniques require large computing power, which leads clients with limited infrastructure to rely on the method of Secure Outsourced Computation (SOC). In the SOC setting, the computation is outsourced to a set of specialized and powerful cloud servers and the service is availed on a pay-per-use basis. In this work, we explore PPML techniques in the SOC setting for widely used ML algorithms: Linear Regression, Logistic Regression, and Neural Networks. We propose BLAZE, a blazing fast PPML framework in the three-server setting tolerating one malicious corruption over a ring ($\mathbb{Z}_{2^{\ell}}$). BLAZE achieves the stronger security guarantee of fairness (all honest servers get the output whenever the corrupt server obtains the same). Leveraging an input-independent preprocessing phase, BLAZE has a fast input-dependent online phase relying on efficient PPML primitives such as: (i) A dot product protocol for which the communication in the online phase is independent of the vector size, the first of its kind in the three-server setting; (ii) A method for truncation that shuns evaluating expensive circuit for Ripple Carry Adders (RCA) and achieves a constant round complexity. This improves over the truncation method of ABY3 (Mohassel et al., CCS 2018) that uses RCA and consumes a round complexity that is of the order of the depth of RCA. An extensive benchmarking of BLAZE for the aforementioned ML algorithms over a 64-bit ring in both WAN and LAN settings shows massive improvements over ABY3.
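Truncation comes up in ring-based PPML because real numbers are typically encoded as fixed-point integers, and multiplying two such encodings doubles the number of fractional bits. The sketch below shows this in plaintext over a 64-bit ring. It is not BLAZE's truncation protocol (there is no secret sharing here), and the choice of 13 fractional bits and all function names are assumptions made for illustration.

```python
ELL = 64            # ring is the integers modulo 2**ELL
MOD = 1 << ELL
FRAC = 13           # assumed number of fractional bits


def encode(x):
    """Encode a real number as a fixed-point ring element."""
    return round(x * (1 << FRAC)) % MOD


def to_signed(v):
    """Interpret a ring element as a signed (two's complement) integer."""
    return v - MOD if v >= MOD // 2 else v


def decode(v):
    """Decode a fixed-point ring element back to a float."""
    return to_signed(v) / (1 << FRAC)


def truncate(v):
    """Drop FRAC fractional bits using an arithmetic right shift."""
    return (to_signed(v) >> FRAC) % MOD


def fixed_mul(a, b):
    """Multiply two encodings, then truncate back to FRAC fractional bits."""
    return truncate((a * b) % MOD)
```

For example, `decode(fixed_mul(encode(1.5), encode(-2.25)))` recovers the product of the two inputs, whereas decoding the raw ring product without `truncate` is off by a factor of `2**FRAC`. In a secure protocol the same shift has to be carried out on shared values, which is the step the abstract's truncation method addresses.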

Trident: Efficient 4PC Framework for Privacy Preserving Machine Learning

Dec 05, 2019

Abstract:Machine learning has started to be deployed in fields such as healthcare and finance, which propelled the need for and growth of privacy-preserving machine learning (PPML). We propose an actively secure four-party protocol (4PC), and a framework for PPML, showcasing its applications on four of the most widely-known machine learning algorithms: Linear Regression, Logistic Regression, Neural Networks, and Convolutional Neural Networks. Our 4PC protocol tolerating at most one malicious corruption is practically efficient as compared to the existing works. We use the protocol to build an efficient mixed-world framework (Trident) to switch between the Arithmetic, Boolean, and Garbled worlds. Our framework operates in the offline-online paradigm over rings and is instantiated in an outsourced setting for machine learning. Also, we propose conversions especially relevant to privacy-preserving machine learning. The highlights of our framework include using a minimal number of expensive circuits overall as compared to ABY3. This can be seen in our technique for truncation, which does not affect the online cost of multiplication and removes the need for any circuits in the offline phase. Our B2A conversion has an improvement of 7x in rounds and 18x in the communication complexity. In addition to these, all of the special conversions for machine learning, e.g. Secure Comparison, achieve constant round complexity. The practicality of our framework is argued through improvements in the benchmarking of the aforementioned algorithms when compared with ABY3. All the protocols are implemented over a 64-bit ring in both LAN and WAN settings. Our improvements go up to 187x for the training phase and 158x for the prediction phase when observed over LAN and WAN.

ASTRA: High Throughput 3PC over Rings with Application to Secure Prediction

Dec 05, 2019

Abstract:The concrete efficiency of secure computation has been the focus of many recent works. In this work, we present concretely-efficient protocols for secure 3-party computation (3PC) over a ring of integers modulo $2^{\ell}$ tolerating one corruption, both with semi-honest and malicious security. Owing to the fact that computation over rings emulates computation over real-world system architectures, secure computation over rings has gained momentum of late. Cast in the offline-online paradigm, our constructions present the most efficient online phase in concrete terms. In the semi-honest setting, our protocol requires communication of 2 ring elements per multiplication gate during the online phase, attaining a per-party cost of less than one element. This is achieved for the first time in the regime of 3PC. In the malicious setting, our protocol requires communication of 4 elements per multiplication gate during the online phase, beating the state-of-the-art protocol by 5 elements. Realized with both the security notions of selective abort and fairness, the malicious protocol with fairness involves slightly more communication than its counterpart with abort security for the output gates alone. We apply our techniques from 3PC in the regime of secure server-aided machine-learning (ML) inference for a range of prediction functions: linear regression, linear SVM regression, logistic regression, and linear SVM classification. Our setting considers a model-owner with trained model parameters and a client with a query, with the latter willing to learn the prediction of her query based on the model parameters of the former. The inputs and computation are outsourced to a set of three non-colluding servers. Our constructions, catering to both the semi-honest and the malicious world, invariably perform better than the existing constructions.
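To make the outsourced setting concrete, the sketch below shows plain additive secret sharing over the ring of integers modulo $2^{\ell}$ among three servers. This is a generic textbook construction and not ASTRA's sharing scheme or its multiplication protocol; the function names are hypothetical. It illustrates why additions and multiplications by public constants need no communication, so the cost that protocols in this area count is the number of ring elements sent per multiplication gate.

```python
import secrets

ELL = 64
MOD = 1 << ELL   # ring of integers modulo 2**ELL


def share(x, parties=3):
    """Split x into additive shares that sum to x modulo 2**ELL."""
    shares = [secrets.randbelow(MOD) for _ in range(parties - 1)]
    shares.append((x - sum(shares)) % MOD)
    return shares


def reconstruct(shares):
    """Recombine all shares; any proper subset looks uniformly random."""
    return sum(shares) % MOD


def add_shares(a, b):
    """Each server adds its two shares locally, with no communication."""
    return [(x + y) % MOD for x, y in zip(a, b)]


def scale_shares(a, c):
    """Each server multiplies its share by a public constant c locally."""
    return [(c * x) % MOD for x in a]
```

For instance, `reconstruct(add_shares(share(20), share(22)))` yields 42 without any server ever seeing 20 or 22. Multiplying two shared values is not local in this way, which is where the per-gate communication discussed in the abstract arises.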
