Ahmed Abusnaina

Burning the Adversarial Bridges: Robust Windows Malware Detection Against Binary-level Mutations

Oct 05, 2023

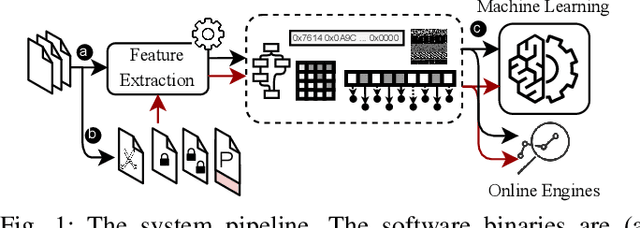

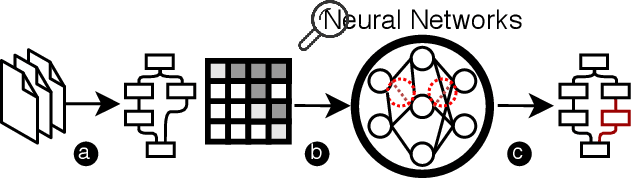

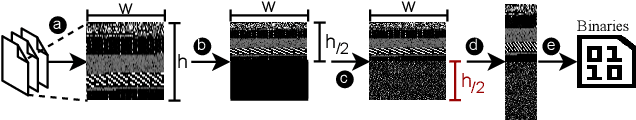

Abstract: Toward robust malware detection, we explore the attack surface of existing malware detection systems. We conduct root-cause analyses of the practical binary-level black-box adversarial malware examples. Additionally, we uncover the sensitivity of volatile features within the detection engines and exhibit their exploitability. Highlighting volatile information channels within the software, we introduce three software pre-processing steps to eliminate the attack surface, namely, padding removal, software stripping, and inter-section information resetting. Further, to counter the emerging section injection attacks, we propose a graph-based section-dependent information extraction scheme for software representation. The proposed scheme leverages aggregated information within various sections in the software to enable robust malware detection and mitigate adversarial settings. Our experimental results show that traditional malware detection models are ineffective against adversarial threats. However, the attack surface can be largely reduced by eliminating the volatile information. Therefore, we propose simple-yet-effective methods to mitigate the impacts of binary manipulation attacks. Overall, our graph-based malware detection scheme can accurately detect malware with an area under the curve score of 88.32% and a score of 88.19% under a combination of binary manipulation attacks, exhibiting the efficiency of our proposed scheme.
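As a rough illustration of the padding-removal idea named in the abstract, the following minimal sketch truncates any bytes that lie past the end of the last declared section. It is not the authors' implementation: the function name `remove_appended_padding`, the `(offset, size)` section list, and the toy byte string are all assumptions made for this example, and a real tool would read the section table from the executable's headers.

```python
from typing import Iterable, Tuple


def remove_appended_padding(data: bytes, sections: Iterable[Tuple[int, int]]) -> bytes:
    """Drop bytes that lie past the end of the last declared section.

    `sections` is a hypothetical list of (raw_offset, raw_size) pairs;
    in practice these would come from the binary's section table.
    """
    # End of the furthest-reaching section; fall back to the full length
    # when no sections are given, so the input is returned unchanged.
    end = max((offset + size for offset, size in sections), default=len(data))
    return data[:min(end, len(data))]


# Toy "binary": a 3-byte header, two 4-byte sections, then 8 appended bytes.
binary = b"HDR" + b"CODE" + b"DATA" + b"\x00" * 8
sections = [(3, 4), (7, 4)]
cleaned = remove_appended_padding(binary, sections)
```

Under these assumptions, bytes appended after the last section never reach the feature extractor, which is the sense in which pre-processing can shrink the attack surface.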

ML-based IoT Malware Detection Under Adversarial Settings: A Systematic Evaluation

Aug 30, 2021

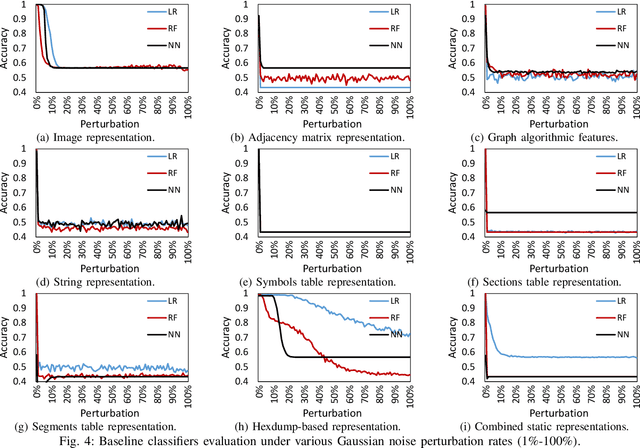

Abstract: The rapid growth of Internet of Things (IoT) devices is paralleled by their being on the front line of malicious attacks. This has led to an explosion in the number of IoT malware samples, with continued mutations, evolution, and sophistication. Such malicious software is detected using machine learning (ML) algorithms alongside the traditional signature-based methods. Although ML-based detectors improve the detection performance, they are susceptible to malware evolution and sophistication, making them limited to the patterns that they have been trained upon. This continuous trend motivates the large body of literature on malware analysis and detection research, with many systems emerging constantly and outperforming their predecessors. In this work, we systematically examine the state-of-the-art malware detection approaches that utilize various representation and learning techniques, under a range of adversarial settings. Our analyses highlight the instability of the proposed detectors in learning patterns that distinguish benign from malicious software. The results show that software mutations with functionality-preserving operations, such as stripping and padding, significantly deteriorate the accuracy of such detectors. Additionally, our analysis of the industry-standard malware detectors shows their instability under malware mutations.

Hate, Obscenity, and Insults: Measuring the Exposure of Children to Inappropriate Comments in YouTube

Mar 03, 2021

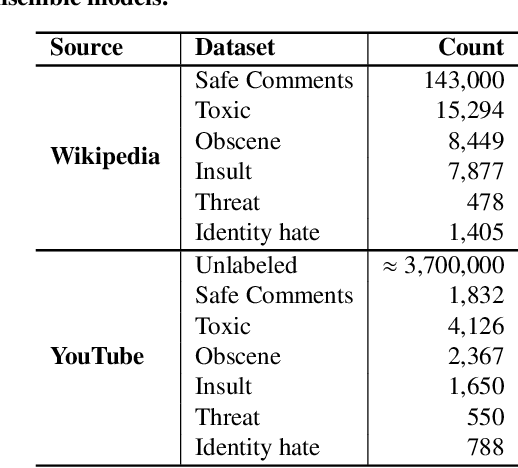

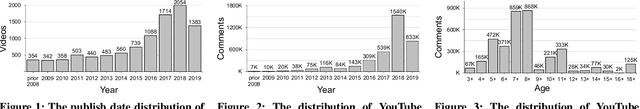

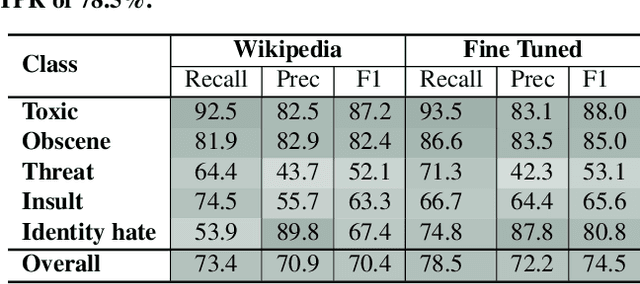

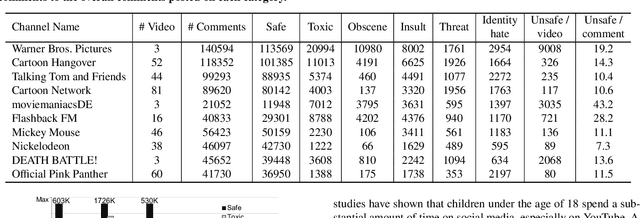

Abstract: Social media has become an essential part of the daily routines of children and adolescents. Moreover, enormous efforts have been made to ensure the psychological and emotional well-being of young users as well as their safety when interacting with various social media platforms. In this paper, we investigate the exposure of those users to inappropriate comments posted on YouTube videos targeting this demographic. We collected a large-scale dataset of approximately four million records and studied the presence of five age-inappropriate categories and the amount of exposure to each category. Using natural language processing and machine learning techniques, we constructed ensemble classifiers that achieved high accuracy in detecting inappropriate comments. Our results show a large percentage of worrisome comments with inappropriate content: we found 11% of the comments on children's videos to be toxic, highlighting the importance of monitoring comments, particularly on children's platforms.

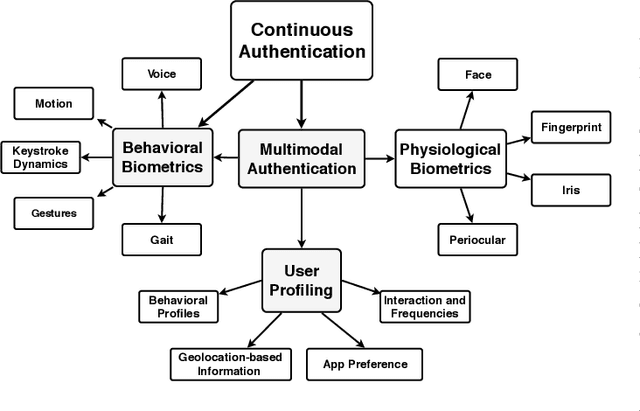

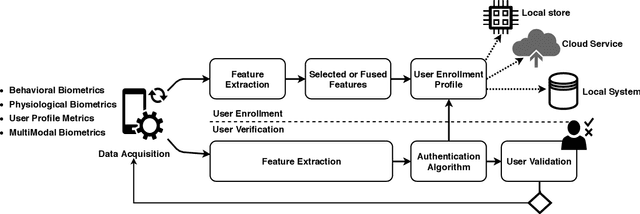

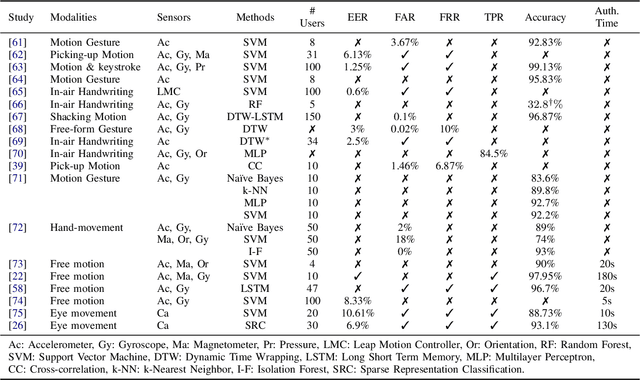

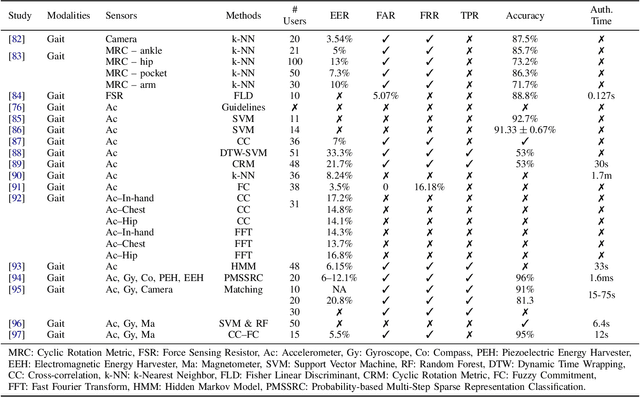

Sensor-based Continuous Authentication of Smartphones' Users Using Behavioral Biometrics: A Survey

Jan 23, 2020

Abstract: Mobile devices and technologies have become increasingly popular, offering storage and computational capabilities comparable to those of desktop computers and allowing users to store and interact with sensitive and private information. The security and protection of such personal information are becoming more and more important since mobile devices are vulnerable to unauthorized access or theft. User authentication is a task of paramount importance that grants access to legitimate users at the point of entry and continuously throughout the usage session. This task is made possible by the embedded sensors of today's smartphones, which enable continuous and implicit user authentication by capturing behavioral biometrics and traits. In this paper, we survey more than 140 recent behavioral biometric-based approaches for continuous user authentication, including motion-based methods (27 studies), gait-based methods (23 studies), keystroke dynamics-based methods (20 studies), touch gesture-based methods (29 studies), voice-based methods (16 studies), and multimodal-based methods (33 studies). The survey provides an overview of the current state-of-the-art approaches for continuous user authentication using behavioral biometrics captured by smartphones' embedded sensors, including insights and open challenges for adoption, usability, and performance.

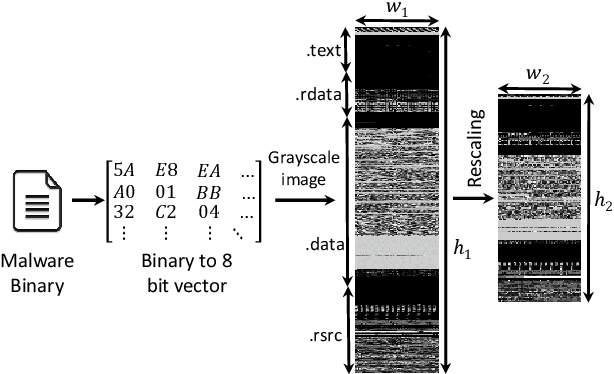

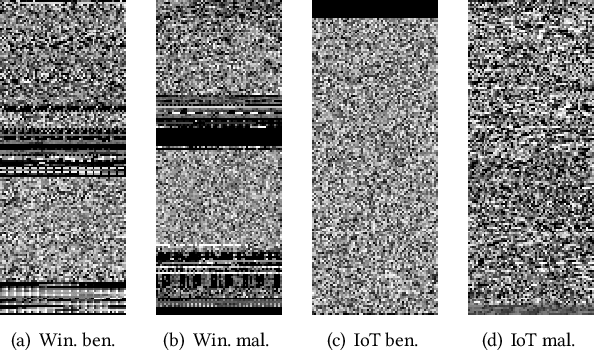

COPYCAT: Practical Adversarial Attacks on Visualization-Based Malware Detection

Sep 20, 2019

Abstract: Despite many attempts, the state of the art in adversarial machine learning on malware detection systems generally yields unexecutable samples. In this work, we set out to examine the robustness of visualization-based malware detection systems against adversarial examples (AEs) that not only are able to fool the model, but also maintain the executability of the original input. As such, we first investigate the application of existing off-the-shelf adversarial attack approaches on malware detection systems, through which we found that those approaches do not necessarily maintain the functionality of the original inputs. Therefore, we propose an approach to generate adversarial examples, COPYCAT, which is specifically designed for malware detection systems considering two main goals: achieving a high misclassification rate and maintaining the executability and functionality of the original input. We designed two main configurations for COPYCAT, namely AE padding and sample injection. While the first configuration results in untargeted misclassification attacks, the sample injection configuration is able to force the model to generate a targeted output, which is highly desirable in the malware attribution setting. We evaluate the performance of COPYCAT through an extensive set of experiments on two malware datasets, and report that we were able to generate adversarial samples that are misclassified at a rate of 98.9% and 96.5% with Windows and IoT binary datasets, respectively, outperforming the misclassification rates in the literature. Most importantly, we report that those AEs were executable, unlike AEs generated by off-the-shelf approaches. Our transferability study demonstrates that the AEs generated through our proposed method can be generalized to other models.
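To make the setting concrete, here is a minimal sketch of how a visualization-based detector might lay out a binary's bytes as pixel intensities, and why bytes appended after the original content leave the original pixels untouched. This is an illustration under stated assumptions, not COPYCAT itself: the helper `bytes_to_grid`, the fixed row width, the absence of any image resizing, and the toy inputs are all hypothetical.

```python
from typing import List


def bytes_to_grid(data: bytes, width: int) -> List[List[int]]:
    """Lay bytes out row by row as 0-255 intensities, zero-filling the last row."""
    rows = []
    for start in range(0, len(data), width):
        row = list(data[start:start + width])
        row += [0] * (width - len(row))  # pad an incomplete final row
        rows.append(row)
    return rows


original = bytes(range(8))            # stand-in for a binary's bytes
padded = original + bytes([255] * 4)  # bytes appended after the original content

grid_original = bytes_to_grid(original, width=4)
grid_padded = bytes_to_grid(padded, width=4)
```

In this toy layout the appended bytes only add rows at the bottom of the grid, so the original content is preserved byte for byte while the input seen by an image-based model changes.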

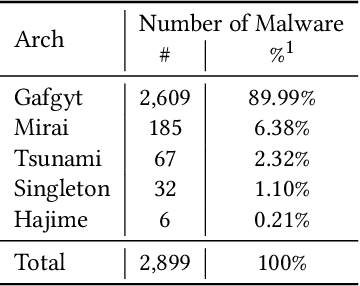

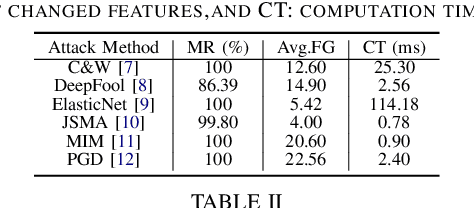

Examining Adversarial Learning against Graph-based IoT Malware Detection Systems

Feb 15, 2019

Abstract: The main goal of this study is to investigate the robustness of graph-based Deep Learning (DL) models used for Internet of Things (IoT) malware classification against Adversarial Learning (AL). We designed two approaches to craft adversarial IoT software: Off-the-Shelf Adversarial Attack (OSAA) methods, using six different AL attack approaches, and Graph Embedding and Augmentation (GEA). The GEA approach aims to preserve the functionality and practicality of the generated adversarial sample through a careful embedding of a benign sample into a malicious one. Our evaluations demonstrate that OSAAs are able to achieve a misclassification rate (MR) of 100%. Moreover, we observed that the GEA approach is able to misclassify all IoT malware samples as benign.
