Songqing Chen

Towards Zero-trust Security for the Metaverse

Feb 17, 2023

Abstract: By focusing on immersive interaction among users, the burgeoning Metaverse can be viewed as a natural extension of existing social media. Similar to traditional online social networks, there are numerous security and privacy issues in the Metaverse (e.g., attacks on user authentication and impersonation). In this paper, we develop a holistic research agenda for zero-trust user authentication in social virtual reality (VR), an early prototype of the Metaverse. Our proposed research includes four concrete steps: investigating biometrics-based authentication that is suitable for continuously authenticating VR users, leveraging federated learning (FL) for protecting user privacy in biometric data, improving the accuracy of continuous VR authentication with multimodal data, and boosting the usability of zero-trust security with adaptive VR authentication. Our preliminary study demonstrates that conventional FL algorithms are not well suited for biometrics-based authentication of VR users, leading to an accuracy of less than 10%. We discuss the root cause of this problem, the associated open challenges, and several future directions for realizing our research vision.
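The abstract does not say which FL algorithms were evaluated. As a rough illustration of what a conventional FL aggregation step can look like, the sketch below assumes FedAvg-style weighted averaging, in which a server combines client model parameters in proportion to each client's local sample count. The function name `fedavg` and the flat-list parameter representation are hypothetical choices made for this example, not details from the paper.

```python
from typing import List, Sequence


def fedavg(client_weights: Sequence[Sequence[float]],
           client_sizes: Sequence[int]) -> List[float]:
    """Average client parameter vectors, weighted by local sample count.

    Assumption: each client's model is flattened into a list of floats.
    Raw biometric data stays on the client; only parameters are shared.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same parameter shape")
    # Weighted mean of each parameter across clients.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical clients: the second holds three times as much data.
global_model = fedavg([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

In a setting where each client holds data from only one user, local updates can diverge strongly from one another, which is one commonly discussed difficulty for this kind of averaging.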

Computer Systems Have 99 Problems, Let's Not Make Machine Learning Another One

Nov 28, 2019

Abstract: Machine learning techniques are finding many applications in computer systems, including many tasks that require decision making: network optimization, quality of service assurance, and security. We believe machine learning systems are here to stay, and to realize their potential we advocate a fresh look at key issues that need further attention, including security as a requirement and system complexity, and how machine learning systems affect them. We also discuss reproducibility as a key requirement for sustainable machine learning systems, along with leads for pursuing it.

COPYCAT: Practical Adversarial Attacks on Visualization-Based Malware Detection

Sep 20, 2019

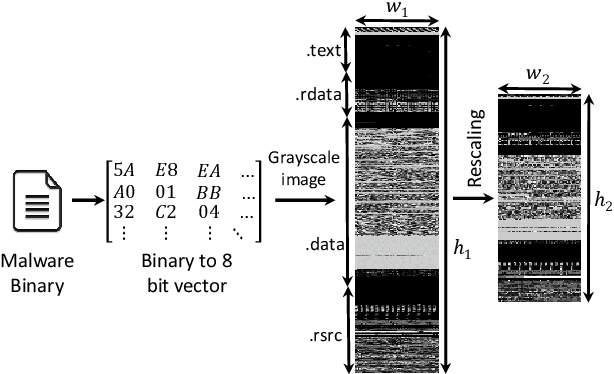

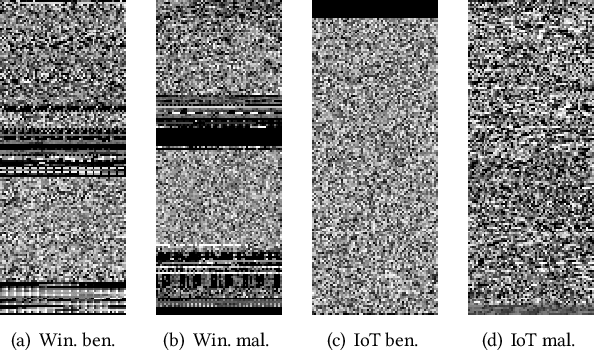

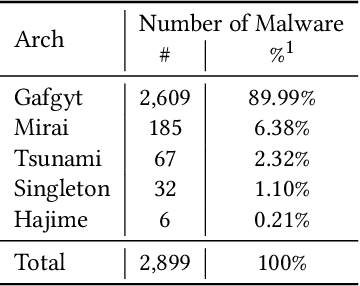

Abstract: Despite many attempts, state-of-the-art adversarial machine learning attacks on malware detection systems generally yield unexecutable samples. In this work, we set out to examine the robustness of visualization-based malware detection systems against adversarial examples (AEs) that not only fool the model but also maintain the executability of the original input. We first investigate the application of existing off-the-shelf adversarial attack approaches to malware detection systems and find that those approaches do not necessarily maintain the functionality of the original inputs. We therefore propose COPYCAT, an approach to generating adversarial examples that is specifically designed for malware detection systems with two main goals: achieving a high misclassification rate and maintaining the executability and functionality of the original input. We designed two main configurations for COPYCAT, namely AE padding and sample injection. While the first configuration results in untargeted misclassification attacks, the sample injection configuration is able to force the model to generate a targeted output, which is highly desirable in the malware attribution setting. We evaluate the performance of COPYCAT through an extensive set of experiments on two malware datasets, and report that we were able to generate adversarial samples that are misclassified at a rate of 98.9% and 96.5% with Windows and IoT binary datasets, respectively, outperforming the misclassification rates in the literature. Most importantly, we report that those AEs were executable, unlike AEs generated by off-the-shelf approaches. Our transferability study demonstrates that the AEs generated by our proposed method can be generalized to other models.
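The abstract does not describe how COPYCAT builds its padding or injected samples. The sketch below only illustrates the general idea behind visualization-based detection and padding-style changes: a binary's bytes are laid out as a grayscale image, and bytes appended after the original content alter that image while leaving the original bytes untouched. The helper names `bytes_to_image` and `pad_with_payload`, the row width, and the sample data are all hypothetical, and whether a real executable still runs after appending data depends on its file format.

```python
from typing import List


def bytes_to_image(data: bytes, width: int = 16, fill: int = 0) -> List[List[int]]:
    """Lay out raw bytes as rows of grayscale pixel values (0-255).

    Assumption: a fixed row width; the last row is filled with `fill`.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    rows = []
    for start in range(0, len(data), width):
        row = list(data[start:start + width])
        row += [fill] * (width - len(row))  # complete the final row
        rows.append(row)
    return rows


def pad_with_payload(binary: bytes, payload: bytes) -> bytes:
    """Append payload bytes after the original content without modifying it."""
    return binary + payload


# Stand-in data, not a real executable.
original = bytes(range(20))
padded = pad_with_payload(original, b"\xff" * 12)

before = bytes_to_image(original, width=8)  # 3 rows
after = bytes_to_image(padded, width=8)     # 4 rows, last one all 255
```

Here the first 20 bytes of `padded` are identical to `original`, so only the image region past the original content changes. An actual attack would choose the appended bytes to mislead a specific model rather than use a constant value.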
