Ahmad Ridley

Interpreting Agent Behaviors in Reinforcement-Learning-Based Cyber-Battle Simulation Platforms

Jun 09, 2025

Abstract:We analyze two open-source deep reinforcement learning agents submitted to the CAGE Challenge 2 cyber defense challenge, where each competitor submitted an agent to defend a simulated network against each of several provided rules-based attack agents. We demonstrate that one can gain interpretability of agent successes and failures by simplifying the complex state and action spaces and by tracking important events, shedding light on the fine-grained behavior of both the defense and attack agents in each experimental scenario. By analyzing important events within an evaluation episode, we identify patterns in infiltration and clearing events that tell us how well the attacker and defender played their respective roles; for example, defenders were generally able to clear infiltrations within one or two timesteps of a host being exploited. By examining transitions in the environment's state caused by the various possible actions, we determine which actions tended to be effective and which did not, showing that certain important actions are between 40% and 99% ineffective. We examine how decoy services affect exploit success, concluding for instance that decoys block up to 94% of exploits that would directly grant privileged access to a host. Finally, we discuss the realism of the challenge and ways that CAGE Challenge 4 has addressed some of our concerns.

Proceedings of the 2nd International Workshop on Adaptive Cyber Defense

Sep 06, 2023

Abstract:The 2nd International Workshop on Adaptive Cyber Defense was held at the Florida Institute of Technology, Florida. This workshop was organized to share research that explores unique applications of Artificial Intelligence (AI) and Machine Learning (ML) as foundational capabilities for the pursuit of adaptive cyber defense. The cyber domain cannot currently be reliably and effectively defended without extensive reliance on human experts. Skilled cyber defenders are in short supply and often cannot respond fast enough to cyber threats. Building on recent advances in AI and ML, the cyber defense research community has been motivated to develop new dynamic and sustainable defenses by applying AI and ML techniques to cyber settings. Bridging critical gaps between AI and cyber researchers and practitioners can accelerate efforts to create semi-autonomous cyber defenses that can learn to recognize and respond to cyber attacks or discover and mitigate weaknesses in cooperation with other cyber operation systems and human experts. Furthermore, these defenses are expected to be adaptive and able to evolve over time to thwart changes in attacker behavior, changes in system health and readiness, and natural shifts in user behavior. The workshop comprised invited keynote talks, technical presentations, and a panel discussion about how AI/ML can enable autonomous mitigation of current and future cyber attacks. Workshop submissions were peer reviewed by a panel of domain experts, and the proceedings consist of six technical articles exploring challenging problems of critical importance to national and global security. Participation in this workshop offered new opportunities to stimulate research and innovation in the emerging domain of adaptive and autonomous cyber defense.

Proceedings of TDA: Applications of Topological Data Analysis to Data Science, Artificial Intelligence, and Machine Learning Workshop at SDM 2022

Apr 14, 2022

Abstract:Topological Data Analysis (TDA) is a rigorous framework that borrows techniques from geometric and algebraic topology, category theory, and combinatorics in order to study the "shape" of complex high-dimensional data. Research in this area has grown significantly over the last several years, bringing a deeply rooted theory to bear on practical applications in areas such as genomics, natural language processing, medicine, cybersecurity, energy, and climate change. Within some of these areas, TDA has also been used to augment AI and ML techniques. We believe there is further utility to be gained in this space that can be facilitated by a workshop bringing together experts (both theorists and practitioners) and non-experts. Currently there is an active community of pure mathematicians with research interests in developing and exploring the theoretical and computational aspects of TDA. Applied mathematicians and other practitioners are also present in the community but do not represent a majority. This speaks to the primary aim of this workshop, which is to grow a wider community of interest in TDA. By fostering meaningful exchanges between these groups, from across government, academia, and industry, we hope to create new synergies that can only come through building a mutual comprehensive awareness of the problem and solution spaces.

Proceedings of the Artificial Intelligence for Cyber Security (AICS) Workshop at AAAI 2022

Mar 01, 2022

Abstract:The workshop will focus on the application of AI to problems in cyber security. Cyber systems generate large volumes of data, and utilizing this data effectively is beyond human capabilities. Additionally, adversaries continue to develop new attacks. Hence, AI methods are required to understand and protect the cyber domain. These challenges are widely studied in enterprise networks, but there are many gaps in research and practice as well as novel problems in other domains. In general, AI techniques are still not widely adopted in the real world. Reasons include: (1) a lack of certification of AI for security, (2) a lack of formal study of the implications of practical constraints (e.g., power, memory, storage) for AI systems in the cyber domain, (3) known vulnerabilities such as evasion and poisoning attacks, (4) a lack of meaningful explanations for security analysts, and (5) a lack of analyst trust in AI solutions. There is a need for the research community to develop novel solutions for these practical issues.

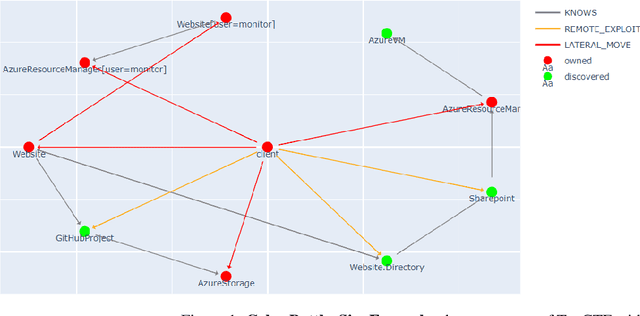

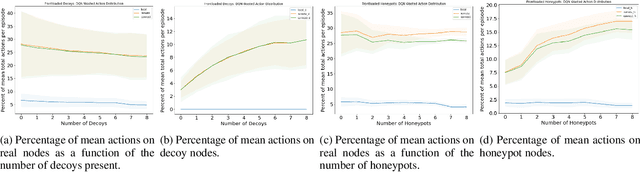

Incorporating Deception into CyberBattleSim for Autonomous Defense

Aug 31, 2021

Abstract:Deceptive elements, including honeypots and decoys, were incorporated into the Microsoft CyberBattleSim experimentation and research platform. The defensive capabilities of the deceptive elements were tested using reinforcement-learning-based attackers in the provided capture-the-flag environment. The attacker's progress was found to depend on the number and location of the deceptive elements. This is a promising step toward reproducibly testing attack and defense algorithms in a simulated enterprise network with deceptive defensive elements.

Proceedings - AI/ML for Cybersecurity: Challenges, Solutions, and Novel Ideas at SIAM Data Mining 2021

Apr 27, 2021

Abstract:Malicious cyber activity is ubiquitous and its harmful effects have dramatic and often irreversible impacts on society. Given the shortage of cybersecurity professionals, the ever-evolving adversary, the massive amounts of data which could contain evidence of an attack, and the speed at which defensive actions must be taken, innovations which enable autonomy in cybersecurity must continue to expand, in order to move away from a reactive defense posture and towards a more proactive one. The challenges in this space are quite different from those associated with applying AI in other domains such as computer vision. The environment suffers from an incredibly high degree of uncertainty, stemming from the intractability of ingesting all the available data, as well as the possibility that malicious actors are manipulating the data. Another unique challenge in this space is that the dynamism of the adversary causes the indicators of compromise to change frequently and without warning. In spite of these challenges, machine learning has been applied to this domain and has achieved some success in the realm of detection. While this aspect of the problem is far from solved, a growing part of the commercial sector is providing ML-enhanced capabilities as a service. Many of these entities also provide platforms which facilitate the deployment of these automated solutions. Academic research in this space is growing and continues to influence current solutions, as well as strengthen foundational knowledge which will make autonomous agents in this space a possibility.

Network Defense is Not a Game

Apr 20, 2021

Abstract:Research seeks to apply Artificial Intelligence (AI) to scale and extend the capabilities of human operators to defend networks. A fundamental problem that hinders the generalization of successful AI approaches -- i.e., beating humans at playing games -- is that network defense cannot be defined as a single game with a fixed set of rules. Our position is that network defense is better characterized as a collection of games with uncertain and possibly drifting rules. Hence, we propose to define network defense tasks as distributions of network environments, to: (i) enable research to apply modern AI techniques, such as unsupervised curriculum learning and reinforcement learning, for network defense; and (ii) facilitate the design of well-defined challenges that can be used to compare approaches for autonomous cyberdefense. To demonstrate that an approach for autonomous network defense is practical, it is important to be able to reason about the boundaries of its applicability. Hence, we need to be able to define network defense tasks that capture sets of adversarial tactics, techniques, and procedures (TTPs); quality of service (QoS) requirements; and TTPs available to defenders. Furthermore, the abstractions to define these tasks must be extensible; must be backed by well-defined semantics that allow us to reason about distributions of environments; and should enable the generation of data and experiences from which an agent can learn. Our approach, named Network Environment Design for Autonomous Cyberdefense, inspired the architecture of FARLAND, a Framework for Advanced Reinforcement Learning for Autonomous Network Defense, which we use at MITRE to develop RL network defenders that perform blue actions from the MITRE Shield matrix against attackers with TTPs that drift from MITRE ATT&CK TTPs.
