Kimberly Ferguson-Walter

Informing Autonomous Deception Systems with Cyber Expert Performance Data

Aug 31, 2021

Abstract:The performance of artificial intelligence (AI) algorithms in practice depends on the realism and correctness of the data, models, and feedback (labels or rewards) provided to the algorithm. This paper discusses methods for improving the realism and ecological validity of AI used for autonomous cyber defense by exploring the potential to use Inverse Reinforcement Learning (IRL) to gain insight into attacker actions, utilities of those actions, and ultimately decision points which cyber deception could thwart. The Tularosa study, as one example, provides experimental data of real-world techniques and tools commonly used by attackers, from which core data vectors can be leveraged to inform an autonomous cyber defense system.

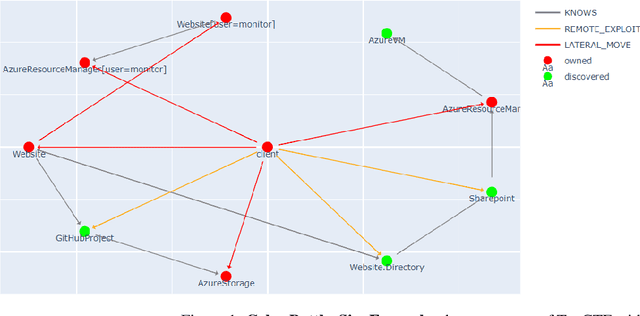

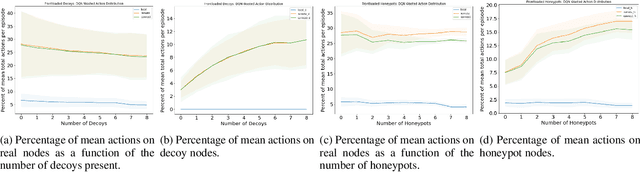

Incorporating Deception into CyberBattleSim for Autonomous Defense

Aug 31, 2021

Abstract:Deceptive elements, including honeypots and decoys, were incorporated into the Microsoft CyberBattleSim experimentation and research platform. The defensive capabilities of the deceptive elements were tested using reinforcement learning based attackers in the provided capture the flag environment. The attacker's progress was found to be dependent on the number and location of the deceptive elements. This is a promising step toward reproducibly testing attack and defense algorithms in a simulated enterprise network with deceptive defensive elements.

Proceedings of the 1st International Workshop on Adaptive Cyber Defense

Aug 19, 2021Abstract:The 1st International Workshop on Adaptive Cyber Defense was held as part of the 2021 International Joint Conference on Artificial Intelligence. This workshop was organized to share research that explores unique applications of Artificial Intelligence (AI) and Machine Learning (ML) as foundational capabilities for the pursuit of adaptive cyber defense. The cyber domain cannot currently be reliably and effectively defended without extensive reliance on human experts. Skilled cyber defenders are in short supply and often cannot respond fast enough to cyber threats. Building on recent advances in AI and ML the Cyber defense research community has been motivated to develop new dynamic and sustainable defenses through the adoption of AI and ML techniques to both cyber and non-cyber settings. Bridging critical gaps between AI and Cyber researchers and practitioners can accelerate efforts to create semi-autonomous cyber defenses that can learn to recognize and respond to cyber attacks or discover and mitigate weaknesses in cooperation with other cyber operation systems and human experts. Furthermore, these defenses are expected to be adaptive and able to evolve over time to thwart changes in attacker behavior, changes in the system health and readiness, and natural shifts in user behavior over time. The Workshop (held on August 19th and 20th 2021 in Montreal-themed virtual reality) was comprised of technical presentations and a panel discussion focused on open problems and potential research solutions. Workshop submissions were peer reviewed by a panel of domain experts with a proceedings consisting of 10 technical articles exploring challenging problems of critical importance to national and global security. Participation in this workshop offered new opportunities to stimulate research and innovation in the emerging domain of adaptive and autonomous cyber defense.

Proceedings - AI/ML for Cybersecurity: Challenges, Solutions, and Novel Ideas at SIAM Data Mining 2021

Apr 27, 2021Abstract:Malicious cyber activity is ubiquitous and its harmful effects have dramatic and often irreversible impacts on society. Given the shortage of cybersecurity professionals, the ever-evolving adversary, the massive amounts of data which could contain evidence of an attack, and the speed at which defensive actions must be taken, innovations which enable autonomy in cybersecurity must continue to expand, in order to move away from a reactive defense posture and towards a more proactive one. The challenges in this space are quite different from those associated with applying AI in other domains such as computer vision. The environment suffers from an incredibly high degree of uncertainty, stemming from the intractability of ingesting all the available data, as well as the possibility that malicious actors are manipulating the data. Another unique challenge in this space is the dynamism of the adversary causes the indicators of compromise to change frequently and without warning. In spite of these challenges, machine learning has been applied to this domain and has achieved some success in the realm of detection. While this aspect of the problem is far from solved, a growing part of the commercial sector is providing ML-enhanced capabilities as a service. Many of these entities also provide platforms which facilitate the deployment of these automated solutions. Academic research in this space is growing and continues to influence current solutions, as well as strengthen foundational knowledge which will make autonomous agents in this space a possibility.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge