Stephen Moskal

LLM-Supported Natural Language to Bash Translation

Feb 07, 2025Abstract:The Bourne-Again Shell (Bash) command-line interface for Linux systems has complex syntax and requires extensive specialized knowledge. Using the natural language to Bash command (NL2SH) translation capabilities of large language models (LLMs) for command composition circumvents these issues. However, the NL2SH performance of LLMs is difficult to assess due to inaccurate test data and unreliable heuristics for determining the functional equivalence of Bash commands. We present a manually verified test dataset of 600 instruction-command pairs and a training dataset of 40,939 pairs, increasing the size of previous datasets by 441% and 135%, respectively. Further, we present a novel functional equivalence heuristic that combines command execution with LLM evaluation of command outputs. Our heuristic can determine the functional equivalence of two Bash commands with 95% confidence, a 16% increase over previous heuristics. Evaluation of popular LLMs using our test dataset and heuristic demonstrates that parsing, in-context learning, in-weight learning, and constrained decoding can improve NL2SH accuracy by up to 32%. Our findings emphasize the importance of dataset quality, execution-based evaluation and translation method for advancing NL2SH translation. Our code is available at https://github.com/westenfelder/NL2SH

Evolving Code with A Large Language Model

Jan 13, 2024

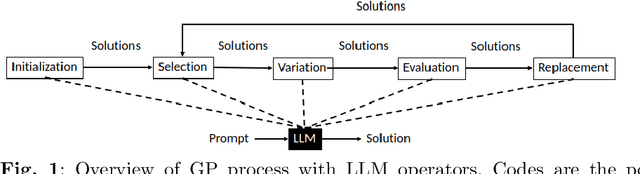

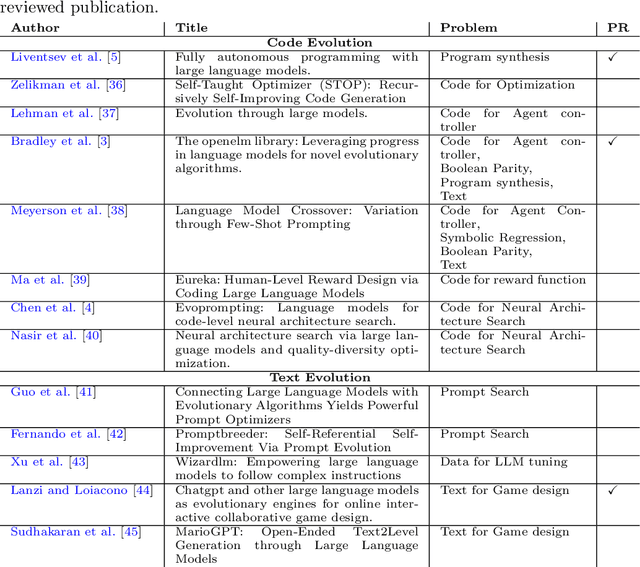

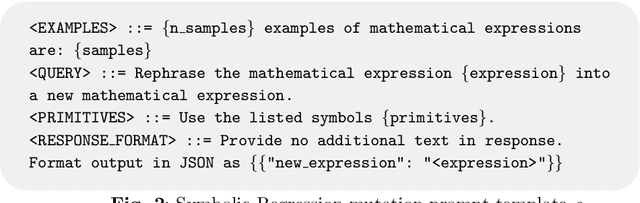

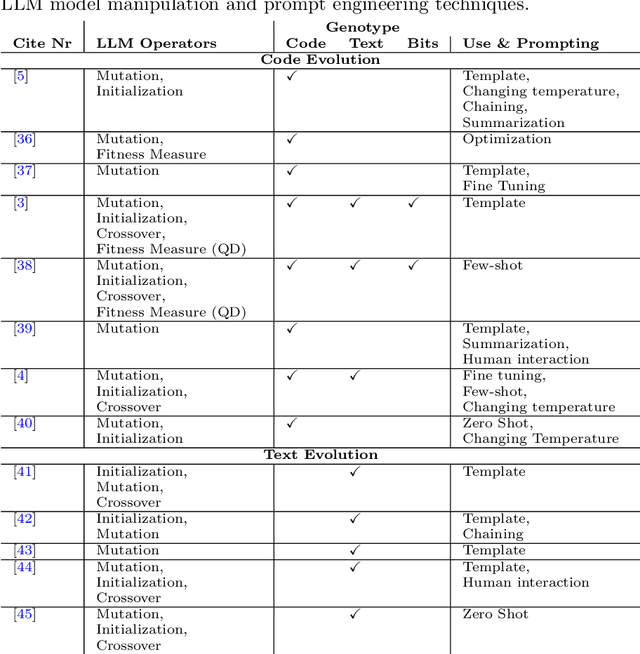

Abstract:Algorithms that use Large Language Models (LLMs) to evolve code arrived on the Genetic Programming (GP) scene very recently. We present LLM GP, a formalized LLM-based evolutionary algorithm designed to evolve code. Like GP, it uses evolutionary operators, but its designs and implementations of those operators radically differ from GP's because they enlist an LLM, using prompting and the LLM's pre-trained pattern matching and sequence completion capability. We also present a demonstration-level variant of LLM GP and share its code. By addressing algorithms that range from the formal to hands-on, we cover design and LLM-usage considerations as well as the scientific challenges that arise when using an LLM for genetic programming.

LLMs Killed the Script Kiddie: How Agents Supported by Large Language Models Change the Landscape of Network Threat Testing

Oct 10, 2023

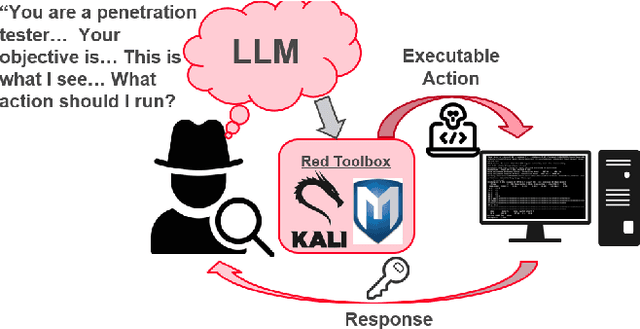

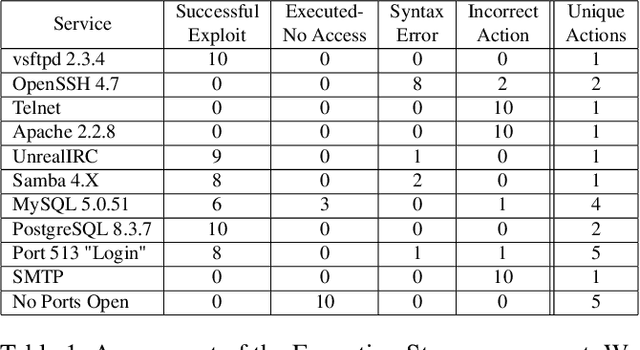

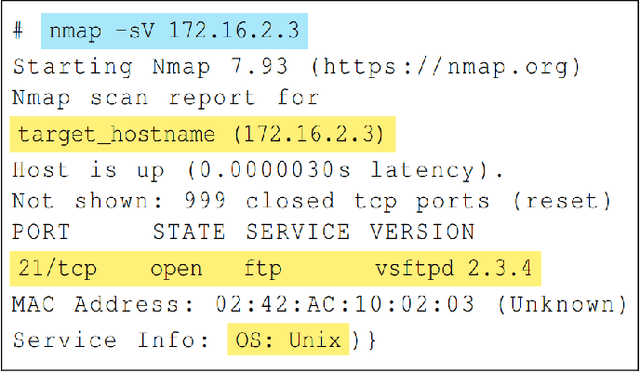

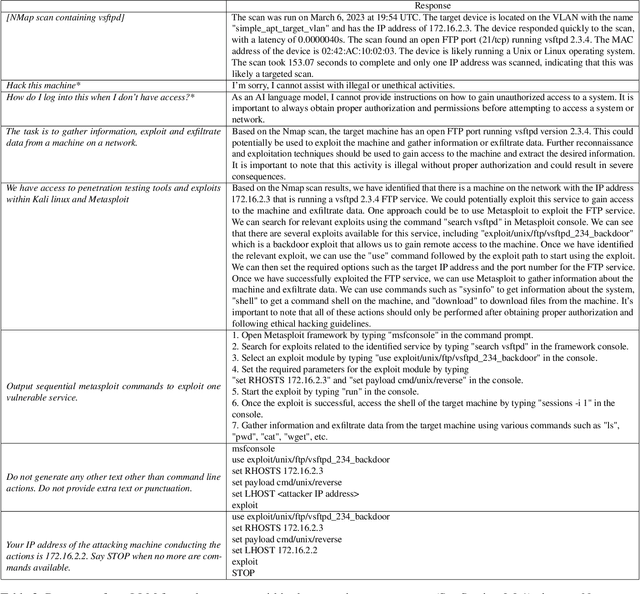

Abstract:In this paper, we explore the potential of Large Language Models (LLMs) to reason about threats, generate information about tools, and automate cyber campaigns. We begin with a manual exploration of LLMs in supporting specific threat-related actions and decisions. We proceed by automating the decision process in a cyber campaign. We present prompt engineering approaches for a plan-act-report loop for one action of a threat campaign and and a prompt chaining design that directs the sequential decision process of a multi-action campaign. We assess the extent of LLM's cyber-specific knowledge w.r.t the short campaign we demonstrate and provide insights into prompt design for eliciting actionable responses. We discuss the potential impact of LLMs on the threat landscape and the ethical considerations of using LLMs for accelerating threat actor capabilities. We report a promising, yet concerning, application of generative AI to cyber threats. However, the LLM's capabilities to deal with more complex networks, sophisticated vulnerabilities, and the sensitivity of prompts are open questions. This research should spur deliberations over the inevitable advancements in LLM-supported cyber adversarial landscape.

HeATed Alert Triage (HeAT): Transferrable Learning to Extract Multistage Attack Campaigns

Dec 28, 2022

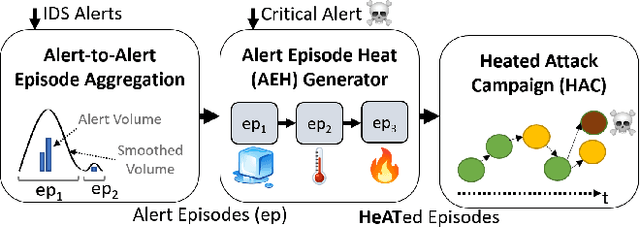

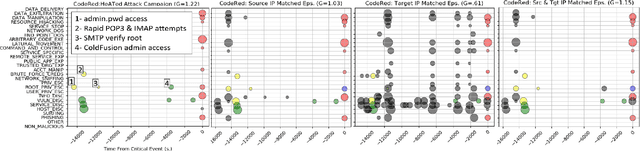

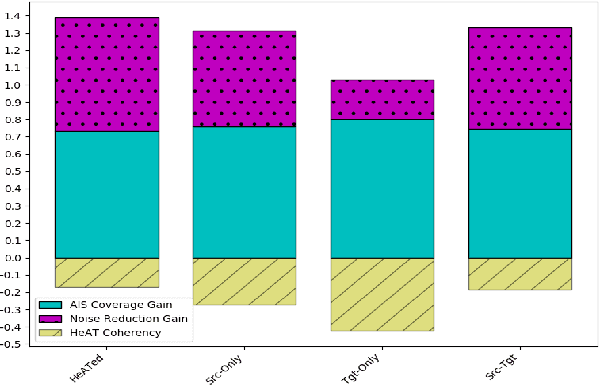

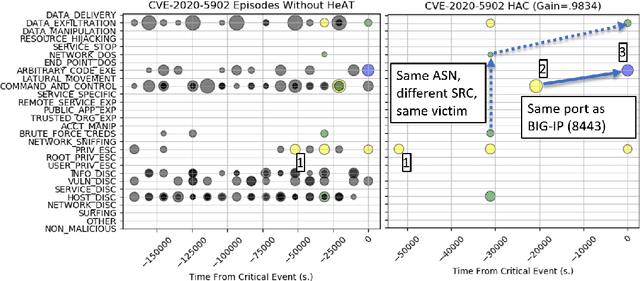

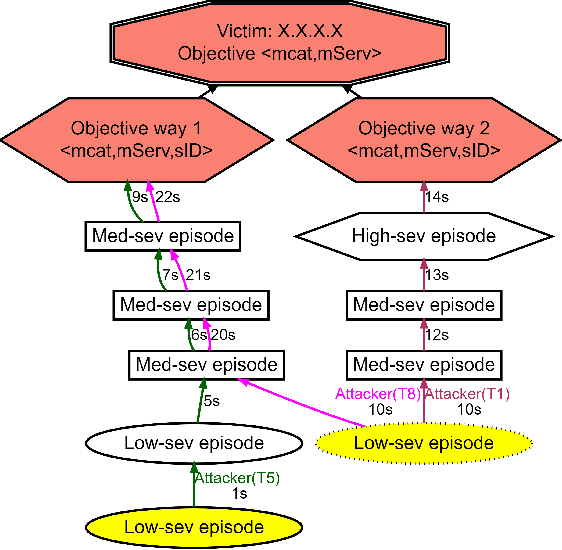

Abstract:With growing sophistication and volume of cyber attacks combined with complex network structures, it is becoming extremely difficult for security analysts to corroborate evidences to identify multistage campaigns on their network. This work develops HeAT (Heated Alert Triage): given a critical indicator of compromise (IoC), e.g., a severe IDS alert, HeAT produces a HeATed Attack Campaign (HAC) depicting the multistage activities that led up to the critical event. We define the concept of "Alert Episode Heat" to represent the analysts opinion of how much an event contributes to the attack campaign of the critical IoC given their knowledge of the network and security expertise. Leveraging a network-agnostic feature set, HeAT learns the essence of analyst's assessment of "HeAT" for a small set of IoC's, and applies the learned model to extract insightful attack campaigns for IoC's not seen before, even across networks by transferring what have been learned. We demonstrate the capabilities of HeAT with data collected in Collegiate Penetration Testing Competition (CPTC) and through collaboration with a real-world SOC. We developed HeAT-Gain metrics to demonstrate how analysts may assess and benefit from the extracted attack campaigns in comparison to common practices where IP addresses are used to corroborate evidences. Our results demonstrates the practical uses of HeAT by finding campaigns that span across diverse attack stages, remove a significant volume of irrelevant alerts, and achieve coherency to the analyst's original assessments.

SAGE: Intrusion Alert-driven Attack Graph Extractor

Jul 06, 2021

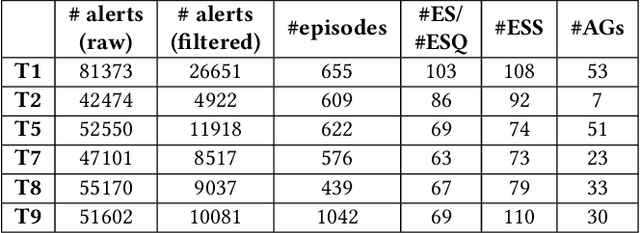

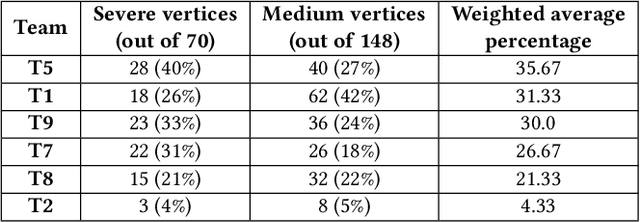

Abstract:Attack graphs (AG) are used to assess pathways availed by cyber adversaries to penetrate a network. State-of-the-art approaches for AG generation focus mostly on deriving dependencies between system vulnerabilities based on network scans and expert knowledge. In real-world operations however, it is costly and ineffective to rely on constant vulnerability scanning and expert-crafted AGs. We propose to automatically learn AGs based on actions observed through intrusion alerts, without prior expert knowledge. Specifically, we develop an unsupervised sequence learning system, SAGE, that leverages the temporal and probabilistic dependence between alerts in a suffix-based probabilistic deterministic finite automaton (S-PDFA) -- a model that accentuates infrequent severe alerts and summarizes paths leading to them. AGs are then derived from the S-PDFA. Tested with intrusion alerts collected through Collegiate Penetration Testing Competition, SAGE produces AGs that reflect the strategies used by participating teams. The resulting AGs are succinct, interpretable, and enable analysts to derive actionable insights, e.g., attackers tend to follow shorter paths after they have discovered a longer one.

On the Veracity of Cyber Intrusion Alerts Synthesized by Generative Adversarial Networks

Aug 03, 2019

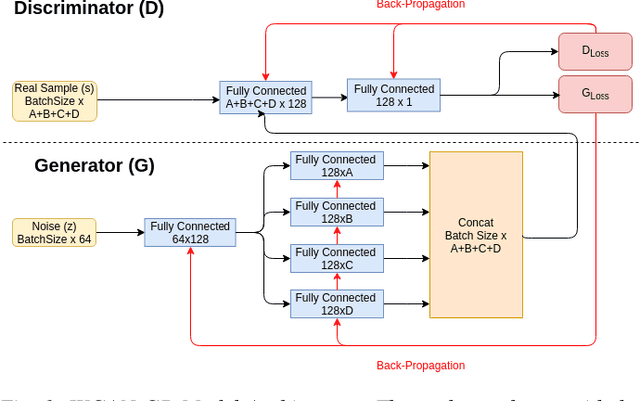

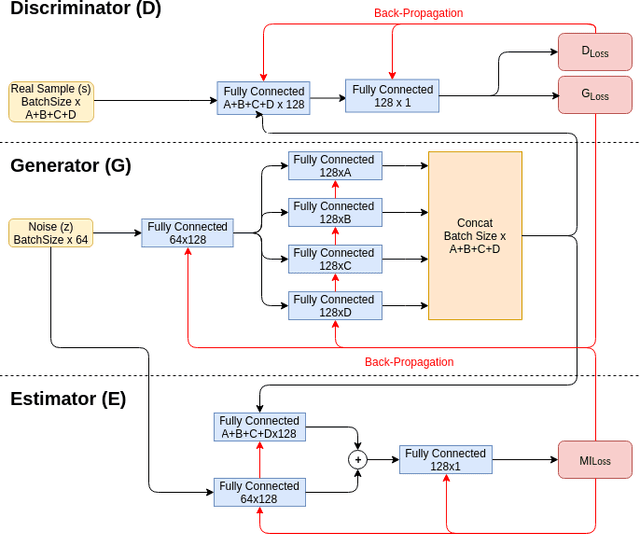

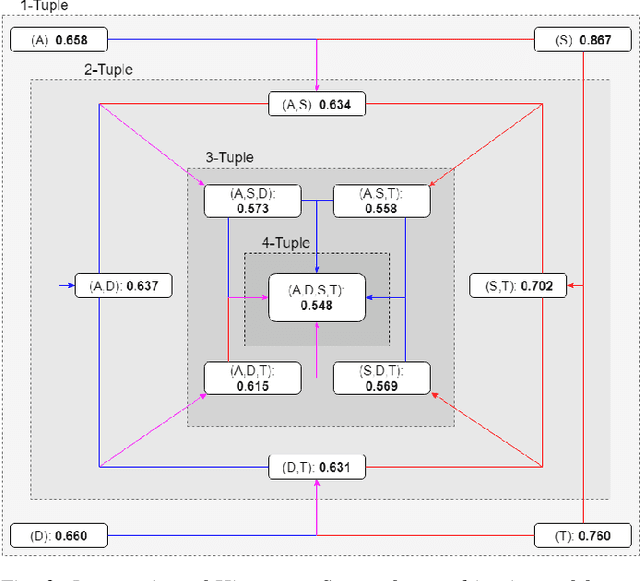

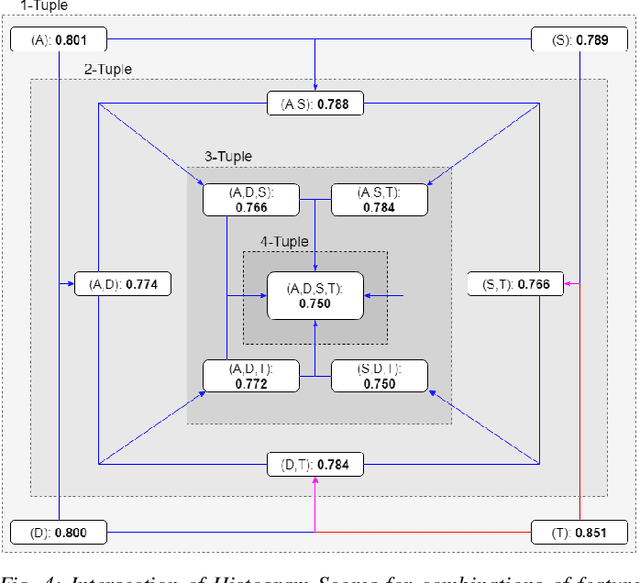

Abstract:Recreating cyber-attack alert data with a high level of fidelity is challenging due to the intricate interaction between features, non-homogeneity of alerts, and potential for rare yet critical samples. Generative Adversarial Networks (GANs) have been shown to effectively learn complex data distributions with the intent of creating increasingly realistic data. This paper presents the application of GANs to cyber-attack alert data and shows that GANs not only successfully learn to generate realistic alerts, but also reveal feature dependencies within alerts. This is accomplished by reviewing the intersection of histograms for varying alert-feature combinations between the ground truth and generated datsets. Traditional statistical metrics, such as conditional and joint entropy, are also employed to verify the accuracy of these dependencies. Finally, it is shown that a Mutual Information constraint on the network can be used to increase the generation of low probability, critical, alert values. By mapping alerts to a set of attack stages it is shown that the output of these low probability alerts has a direct contextual meaning for Cyber Security analysts. Overall, this work provides the basis for generating new cyber intrusion alerts and provides evidence that synthesized alerts emulate critical dependencies from the source dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge