Adrian F. Clark

A Generic Framework for Assessing the Performance Bounds of Image Feature Detectors

May 19, 2016

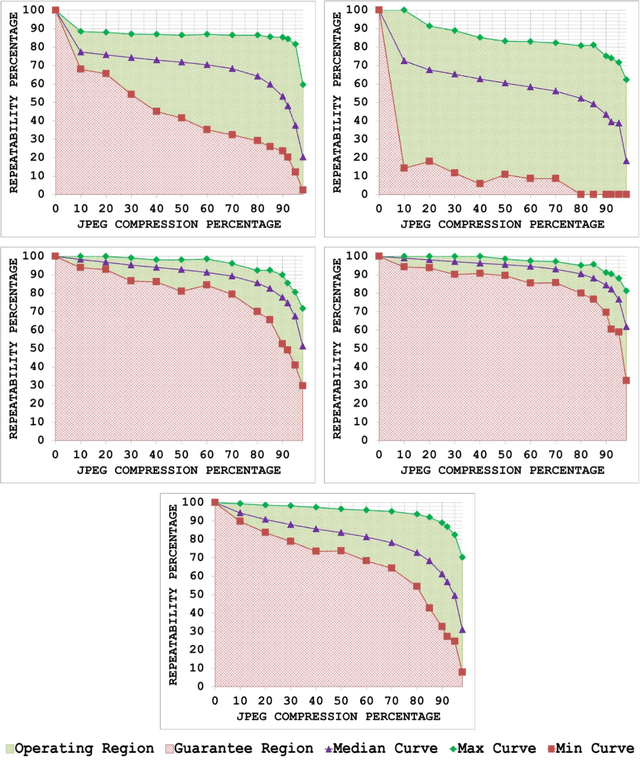

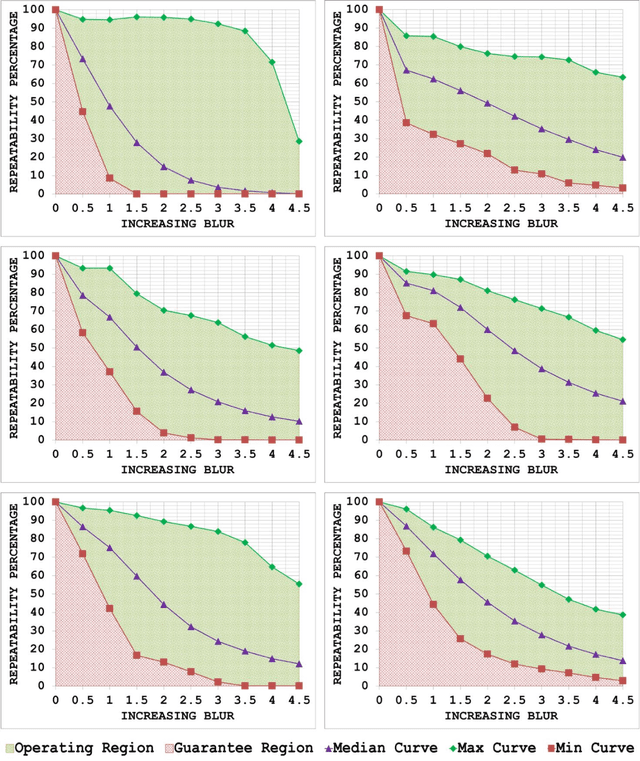

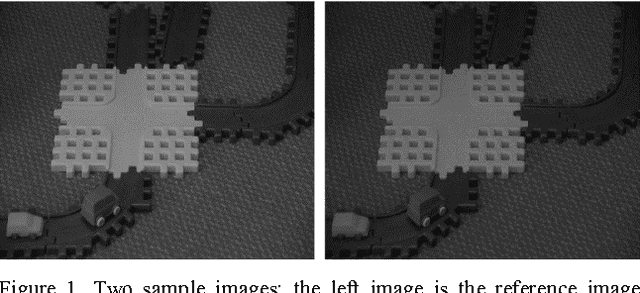

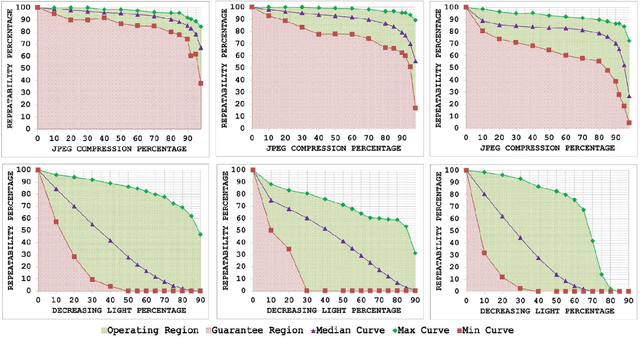

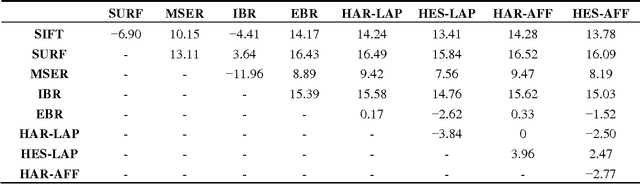

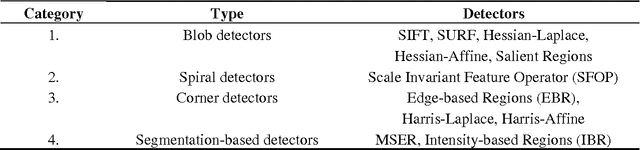

Abstract: Local feature detection has been one of the most active research areas in computer vision during the last decade, and a large number of detectors have been proposed. As interest in feature-based applications continues to grow, characterizing the performance of feature detection methods has become an important issue in vision research. Inspired by the good practices of electronic system design, this paper presents a generic framework, based on the repeatability measure, that allows assessment of the upper and lower bounds of detector performance. By introducing a new variant of McNemar's test, the framework also finds statistically significant performance differences between detectors as a function of image transformation amount, in an effort to design more reliable and effective vision systems. The proposed framework is then employed to establish operating and guarantee regions for several state-of-the-art detectors and to identify their statistical performance differences for three specific image transformations: JPEG compression, uniform light changes and blurring. The results are obtained using a newly acquired, large image database (20482 images) with 539 different scenes. These results provide new insights into the behaviour of detectors and are also useful from the vision systems design perspective.
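For orientation, the classical McNemar's test for comparing two detectors on the same images can be sketched as follows. This is the textbook form with a continuity correction, not the new variant the paper introduces, which the abstract does not specify. The function name `mcnemar_z` and the per-image pass/fail criterion (for example, repeatability above some chosen threshold) are assumptions made for illustration.

```python
import math


def mcnemar_z(a_pass, b_pass):
    """Classical McNemar's test on paired pass/fail outcomes.

    a_pass, b_pass: equal-length sequences of booleans, one entry per image,
    saying whether detector A (resp. B) "passed" on that image. What counts
    as a pass is a hypothetical criterion chosen by the user.
    Returns (z, two_sided_p).
    """
    if len(a_pass) != len(b_pass):
        raise ValueError("both detectors must be scored on the same images")

    # Only discordant pairs carry information about a performance difference.
    n_sf = sum(1 for a, b in zip(a_pass, b_pass) if a and not b)  # A pass, B fail
    n_fs = sum(1 for a, b in zip(a_pass, b_pass) if b and not a)  # A fail, B pass
    discordant = n_sf + n_fs
    if discordant == 0:
        return 0.0, 1.0  # the detectors never disagree

    # Continuity-corrected z statistic, clamped at zero.
    z = max(abs(n_sf - n_fs) - 1, 0) / math.sqrt(discordant)
    # Two-sided p-value under the standard normal approximation.
    p = math.erfc(z / math.sqrt(2))
    return z, p


# Made-up outcomes for 75 images: A alone passes 20, B alone passes 5.
a = [True] * 20 + [False] * 5 + [True] * 50
b = [False] * 20 + [True] * 5 + [True] * 50
z, p = mcnemar_z(a, b)
```

With these made-up outcomes, the statistic depends only on the 25 images where the detectors disagree; the 50 images where both pass do not affect it.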

Sensor Fusion of Camera, GPS and IMU using Fuzzy Adaptive Multiple Motion Models

Dec 09, 2015

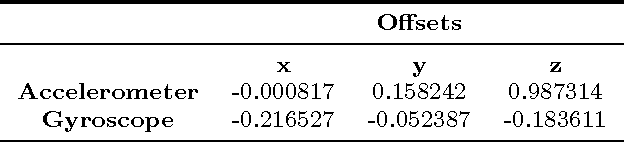

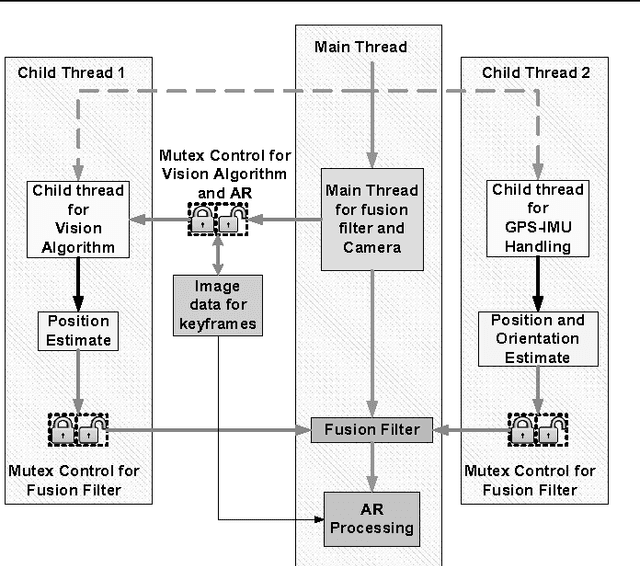

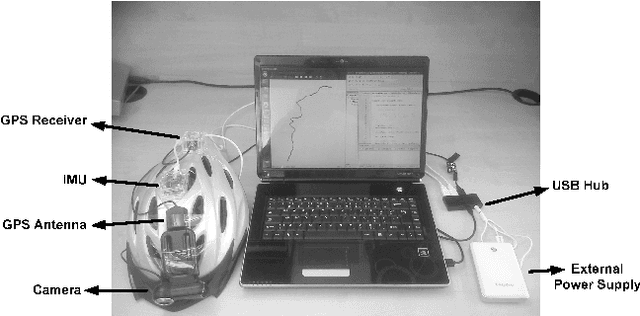

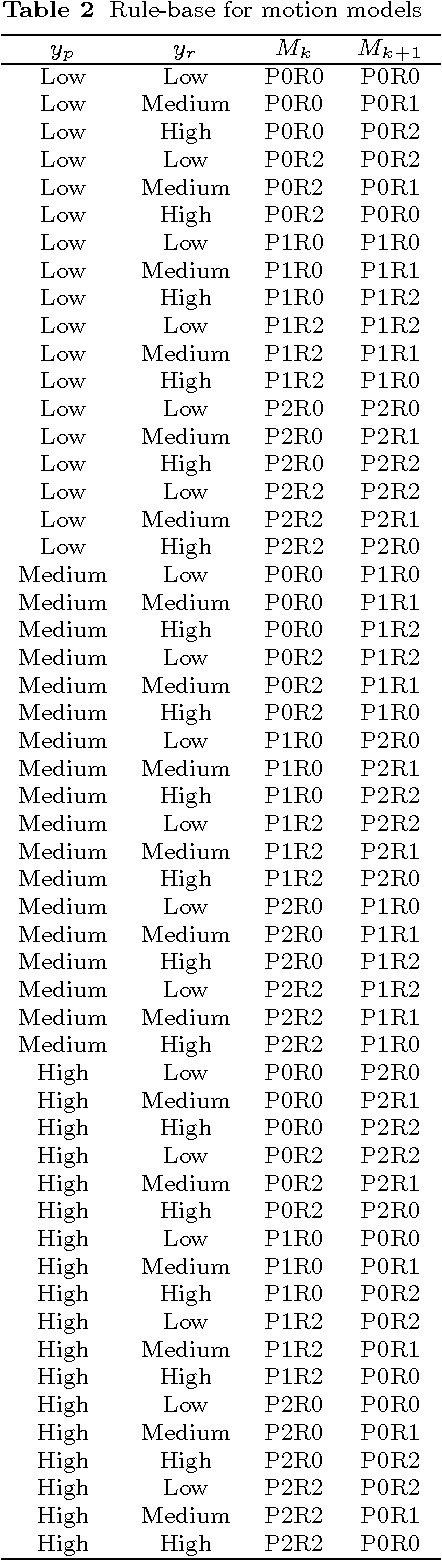

Abstract: A tracking system that will be used for Augmented Reality (AR) applications has two main requirements: accuracy and frame rate. The first requirement is related to the performance of the pose estimation algorithm and how accurately the tracking system can find the position and orientation of the user in the environment. Because current tracking devices are low-cost, their limited accuracy causes static errors during this motion estimation process. The second requirement is related to dynamic errors (the end-to-end system delay), which occur because of the delay in estimating the motion of the user and displaying images based on this estimate. This paper investigates combining the vision-based estimates with measurements from other sensors, GPS and IMU, in order to improve the tracking accuracy in outdoor environments. The idea of using Fuzzy Adaptive Multiple Models (FAMM) was investigated using a novel fuzzy rule-based approach to decide on the model that results in improved accuracy and faster convergence for the fusion filter. Results show that the developed tracking system is more accurate than a conventional GPS-IMU fusion approach due to additional estimates from a camera and fuzzy motion models. The paper also presents an application in a cultural heritage context.

Assessing The Performance Bounds Of Local Feature Detectors: Taking Inspiration From Electronics Design Practices

Oct 17, 2015

Abstract: Since local feature detection has been one of the most active research areas in computer vision, a large number of detectors have been proposed. This has rendered the task of characterizing the performance of various feature detection methods an important issue in vision research. Inspired by the good practices of electronic system design, a generic framework based on the improved repeatability measure is presented in this paper that allows assessment of the upper and lower bounds of detector performance in an effort to design more reliable and effective vision systems. This framework is then employed to establish operating and guarantee regions for several state-of-the-art detectors for JPEG compression and uniform light changes. The results are obtained using a newly acquired, large image database (15092 images) with 539 different scenes. These results provide new insights into the behavior of detectors and are also useful from the vision systems design perspective.

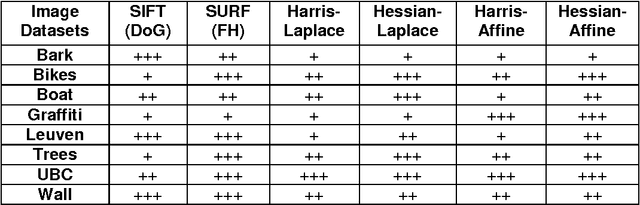

Rapid Online Analysis of Local Feature Detectors and Their Complementarity

Oct 17, 2015

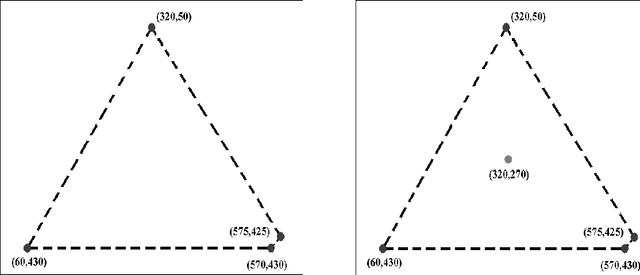

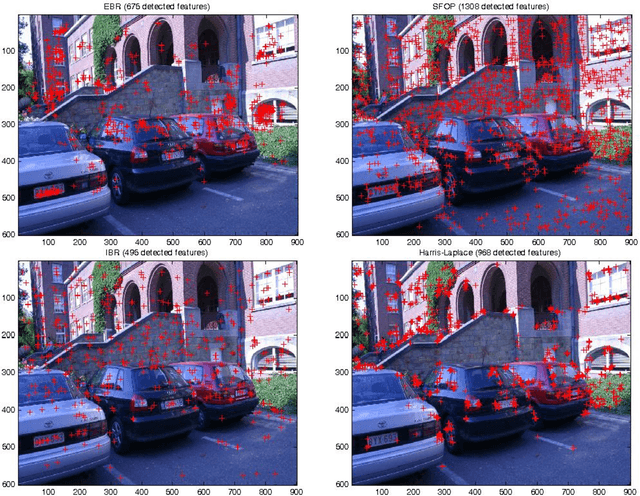

Abstract: A vision system that can assess its own performance and take appropriate actions online to maximize its effectiveness would be a step towards achieving the long-cherished goal of imitating humans. This paper proposes a method for performing an online performance analysis of local feature detectors, the primary stage of many practical vision systems. It advocates the spatial distribution of local image features as a good performance indicator and presents a metric that can be calculated rapidly, concurs with human visual assessments and is complementary to existing offline measures such as repeatability. The metric is shown to provide a measure of complementarity for combinations of detectors, correctly reflecting the underlying principles of individual detectors. Qualitative results on well-established datasets for several state-of-the-art detectors are presented based on the proposed measure. Using a hypothesis testing approach and a newly acquired, larger image database, statistically significant performance differences are identified. Different detector pairs and triplets are examined quantitatively and the results provide a useful guideline for combining detectors in applications that require a reasonable spatial distribution of image features. A principled framework for combining feature detectors in these applications is also presented. Timing results reveal the potential of the metric for online applications.

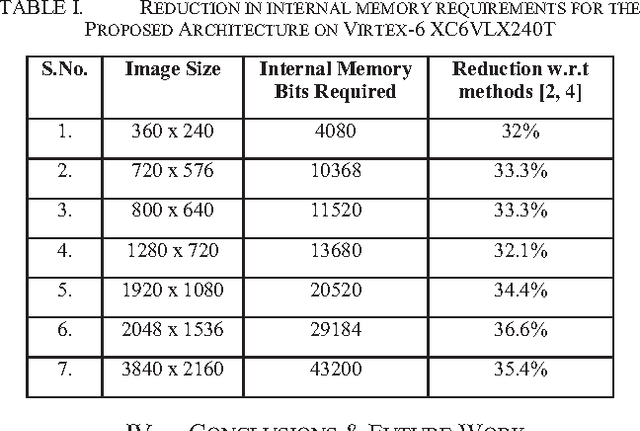

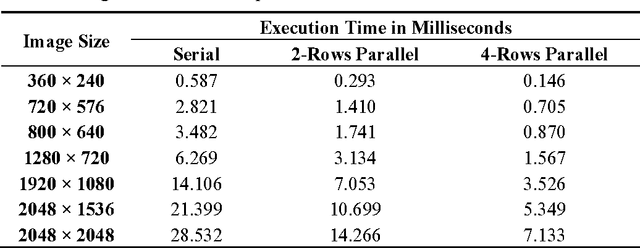

Memory-Efficient Design Strategy for a Parallel Embedded Integral Image Computation Engine

Oct 17, 2015

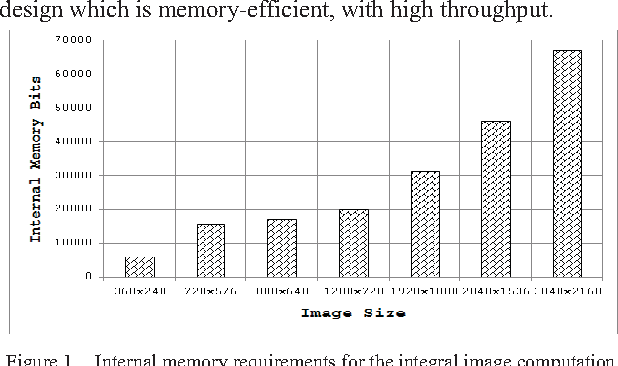

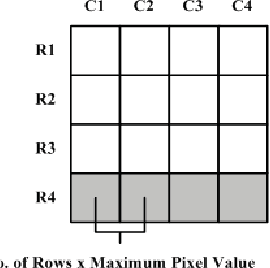

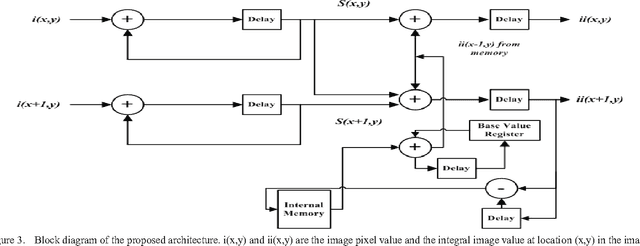

Abstract: In embedded vision systems, parallel computation of the integral image presents several design challenges in terms of hardware resources, speed and power consumption. Although recursive equations significantly reduce the number of operations for computing the integral image, the internal memory required by an embedded integral image computation engine becomes prohibitively large as image sizes increase. With the objective of achieving high throughput with minimum hardware resources, this paper proposes a memory-efficient design strategy for a parallel embedded integral image computation engine. Results show that the design achieves a nearly 35% reduction in memory for common HD video.

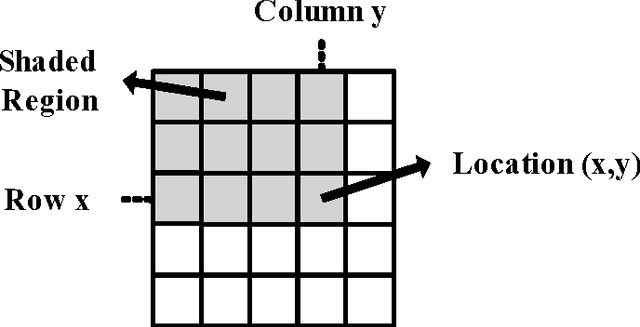

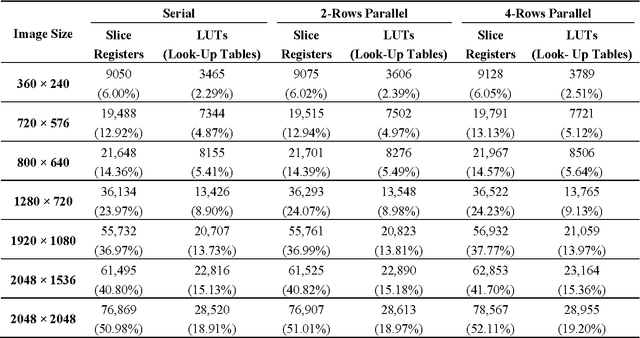

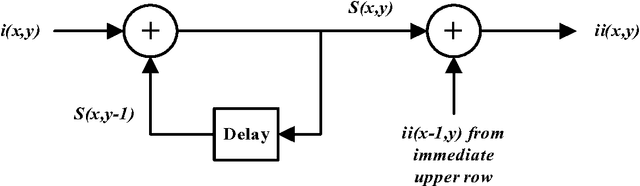

Integral Images: Efficient Algorithms for Their Computation and Storage in Resource-Constrained Embedded Vision Systems

Oct 17, 2015

Abstract: The integral image, an intermediate image representation, has found extensive use in multi-scale local feature detection algorithms, such as Speeded-Up Robust Features (SURF), allowing fast computation of rectangular features at constant speed, independent of filter size. For resource-constrained real-time embedded vision systems, computation and storage of the integral image present several design challenges due to strict timing and hardware limitations. Although calculation of the integral image consists only of simple addition operations, the total number of operations is large owing to the generally large size of image data. Recursive equations allow a substantial decrease in the number of operations but require calculation in a serial fashion. This paper presents two new hardware algorithms that are based on the decomposition of these recursive equations, allowing calculation of up to four integral image values in a row-parallel way without significantly increasing the number of operations. An efficient design strategy is also proposed for a parallel integral image computation unit to reduce the size of the required internal memory (by nearly 35% for common HD video). Addressing the storage problem of the integral image in embedded vision systems, the paper presents two algorithms which allow a substantial decrease (at least 44.44%) in the memory requirements. Finally, the paper provides a case study that highlights the utility of the proposed architectures in embedded vision systems.
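As background, the serial recursive computation that the abstract refers to, and the constant-time rectangle sum it enables, can be sketched in software as below. This is the standard textbook recursion only; it is not the paper's row-parallel hardware decomposition or its storage-reduction algorithms. The function names `integral_image` and `box_sum` are illustrative choices.

```python
def integral_image(img):
    """Serial recursive integral image of a 2-D list of numbers.

    ii[y][x] holds the sum of img over all rows <= y and columns <= x.
    Each value needs one addition for the running row sum and one for the
    value directly above, which is why the computation is inherently serial.
    """
    h = len(img)
    w = len(img[0]) if h else 0
    ii = [[0] * w for _ in range(h)]
    for y in range(h):
        row_sum = 0  # cumulative sum along the current row
        for x in range(w):
            row_sum += img[y][x]
            ii[y][x] = row_sum + (ii[y - 1][x] if y > 0 else 0)
    return ii


def box_sum(ii, top, left, bottom, right):
    """Sum of the original image over an inclusive rectangle.

    Uses at most four lookups regardless of rectangle size, which is the
    "constant speed, independent of filter size" property.
    """
    total = ii[bottom][right]
    if top > 0:
        total -= ii[top - 1][right]
    if left > 0:
        total -= ii[bottom][left - 1]
    if top > 0 and left > 0:
        total += ii[top - 1][left - 1]
    return total


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
ii = integral_image(img)
lower_right = box_sum(ii, 1, 1, 2, 2)  # 5 + 6 + 8 + 9
```

Note that the integral image values grow with image size, so each stored entry needs more bits than an input pixel; this is the storage pressure the abstract describes.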

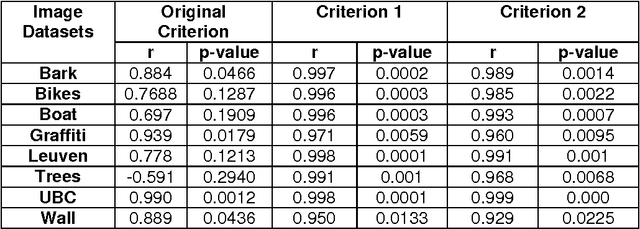

Improved repeatability measures for evaluating performance of feature detectors

Apr 29, 2015

Abstract: The most frequently employed measure for performance characterisation of local feature detectors is repeatability, but it has been observed that this does not necessarily mirror actual performance. Presented are improved repeatability formulations which correlate much better with the true performance of feature detectors. Comparative results for several state-of-the-art feature detectors are presented using these measures; it is found that Hessian-based detectors are generally superior at identifying features when images are subject to various geometric and photometric transformations.
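For readers unfamiliar with the baseline, the conventional repeatability score is commonly defined as the number of features repeated across two images divided by the smaller of the two feature counts. The sketch below is a simplified version of that baseline, not the improved formulations the paper presents, which the abstract does not detail. It assumes point features, a known image-to-image mapping supplied as a hypothetical `transform` callable, and a pixel distance tolerance `tol` in place of the region-overlap criterion often used in practice.

```python
import math


def repeatability(points_ref, points_test, transform, tol=1.5):
    """Simplified conventional repeatability for point features.

    points_ref, points_test: lists of (x, y) detections in the reference
    and transformed images. transform maps a reference point into the
    transformed image (assumed known). A reference feature counts as
    repeated if its mapped position lies within tol pixels of a
    not-yet-matched test feature (greedy one-to-one matching).
    """
    if not points_ref or not points_test:
        return 0.0

    unmatched = list(points_test)
    repeated = 0
    for p in points_ref:
        qx, qy = transform(p)
        best = None  # (distance, index) of the closest unmatched test point
        for i, (tx, ty) in enumerate(unmatched):
            d = math.hypot(tx - qx, ty - qy)
            if d <= tol and (best is None or d < best[0]):
                best = (d, i)
        if best is not None:
            repeated += 1
            unmatched.pop(best[1])  # each test feature can match only once

    return repeated / min(len(points_ref), len(points_test))


# Made-up detections; the second image is the first shifted 5 pixels right.
ref = [(10, 10), (20, 20), (30, 30), (40, 40)]
test = [(15, 10), (25, 20), (60, 60)]
score = repeatability(ref, test, lambda p: (p[0] + 5, p[1]))
```

In this made-up case two of the four reference features reappear, and the smaller feature count is three, so the score is two thirds.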

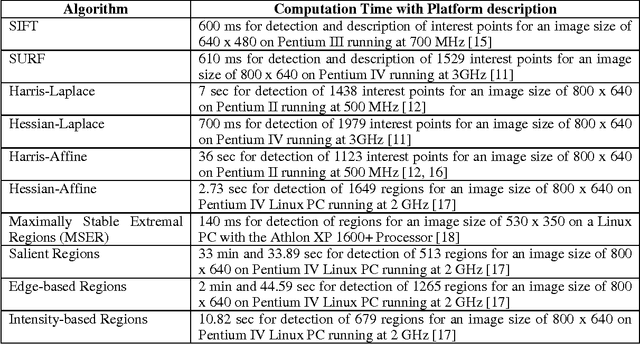

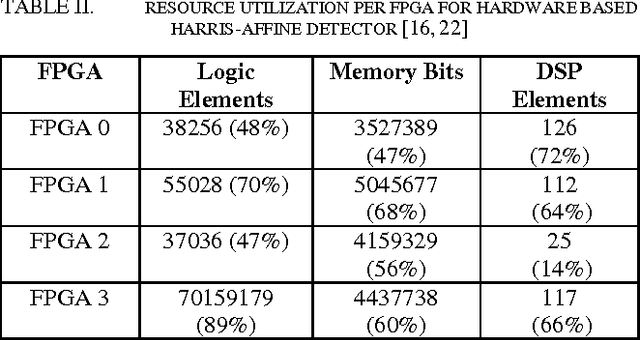

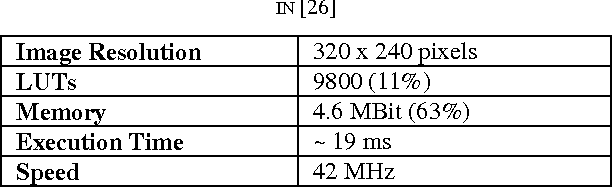

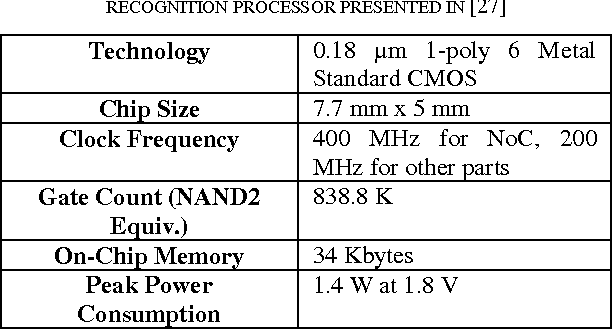

Hardware based Scale- and Rotation-Invariant Feature Extraction: A Retrospective Analysis and Future Directions

Apr 29, 2015

Abstract: Computer vision techniques represent a class of algorithms that are highly computation- and data-intensive in nature. Generally, the performance of these algorithms in terms of execution speed on desktop computers is far from real-time. Since real-time performance is desirable in many applications, special-purpose hardware is required in most cases to achieve this goal. Scale- and rotation-invariant local feature extraction is a low-level computer vision task with very high computational complexity. The state-of-the-art algorithms that currently exist in this domain, like SIFT and SURF, suffer from slow execution speeds and at best can only achieve rates of 2-3 Hz on modern desktop computers. Hardware-based scale- and rotation-invariant local feature extraction is an emerging trend enabling real-time performance for these computationally complex algorithms. This paper takes a retrospective look at the advances made so far in this field, discusses the hardware design strategies employed and results achieved, identifies current research gaps and suggests future research directions.
