Towards Long-term Fairness in Recommendation

Paper and Code

Jan 10, 2021

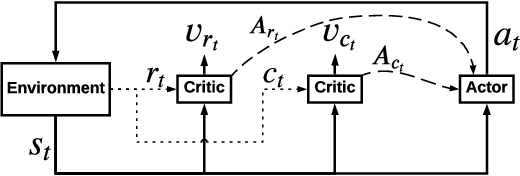

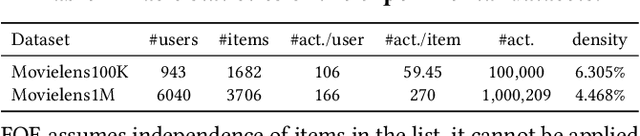

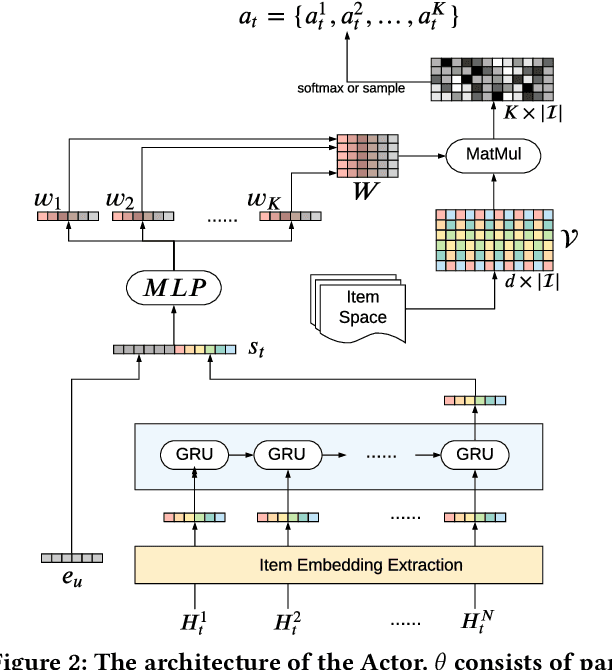

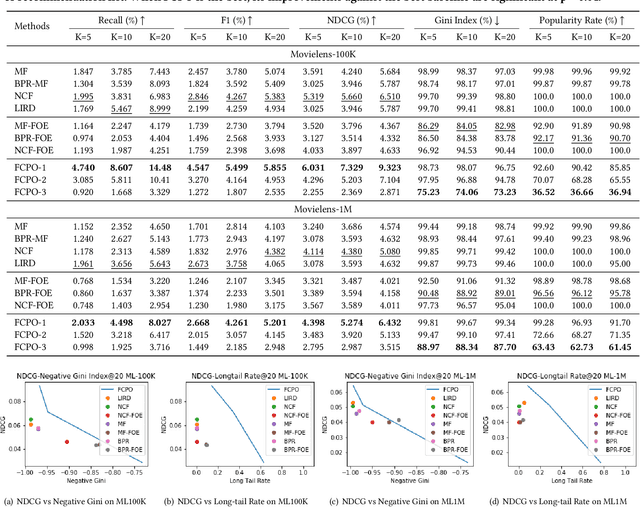

As Recommender Systems (RS) influence more and more people in their daily lives, fairness in recommendation is becoming increasingly important. Most prior approaches to fairness-aware recommendation are situated in a static or one-shot setting, where the protected groups of items are fixed and the model provides a one-time fairness solution based on fairness-constrained optimization. This fails to consider the dynamic nature of recommender systems, where attributes such as item popularity may change over time as a result of the recommendation policy and user engagement. For example, products that were once popular may lose their popularity, and vice versa. As a result, a system that aims to maintain long-term fairness of item exposure across popularity groups must accommodate this change in a timely fashion. Novel to this work, we explore the problem of long-term fairness in recommendation and address it through dynamic fairness learning. We focus on the fairness of exposure of items in different groups, where the groups are defined by item popularity, which changes dynamically during the recommendation process. We tackle this problem by proposing a fairness-constrained reinforcement learning algorithm for recommendation, which models the recommendation problem as a Constrained Markov Decision Process (CMDP), so that the model can dynamically adjust its recommendation policy to keep the fairness requirement satisfied as the environment changes. Experiments on several real-world datasets verify our framework's superiority in terms of recommendation performance, short-term fairness, and long-term fairness.
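To make the dynamic setting concrete, here is a minimal Python sketch of popularity groups that are recomputed as engagement changes, together with a per-list exposure check. It is an illustration under stated assumptions, not the paper's implementation: the function names, the `num_popular` cutoff, the ratio form of the constraint, and the `alpha` parameter are all hypothetical choices made for this example.

```python
def split_by_popularity(click_counts, num_popular):
    """Return the ids of the `num_popular` most-clicked items.

    Assumption: popularity is measured by raw click counts and the
    popular group has a fixed size; ties are broken by item id.
    """
    ranked = sorted(click_counts, key=lambda item: (-click_counts[item], item))
    return set(ranked[:num_popular])


def popular_exposure(recommended, popular_items):
    """Count how many slots of a recommendation list went to popular items."""
    return sum(1 for item in recommended if item in popular_items)


def satisfies_constraint(recommended, popular_items, alpha=1.0):
    """Check exposure(popular) <= alpha * exposure(long tail) for one list.

    Assumption: this ratio form is only one possible way to express a
    fairness-of-exposure requirement.
    """
    popular = popular_exposure(recommended, popular_items)
    long_tail = len(recommended) - popular
    return popular <= alpha * long_tail


# Group membership shifts once user engagement changes the click counts.
clicks = {"a": 10, "b": 5, "c": 1, "d": 1}
groups_before = split_by_popularity(clicks, num_popular=1)
clicks["b"] += 10  # item "b" overtakes item "a"
groups_after = split_by_popularity(clicks, num_popular=1)
```

Because the group split is recomputed from current click counts, the same recommendation list can satisfy the check at one time step and violate it at a later one. That shift is what a one-shot fairness solution cannot track, and what a policy trained under a constraint is meant to adapt to.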
