Leveraging Symmetry in RL-based Legged Locomotion Control

Paper and Code

Mar 27, 2024

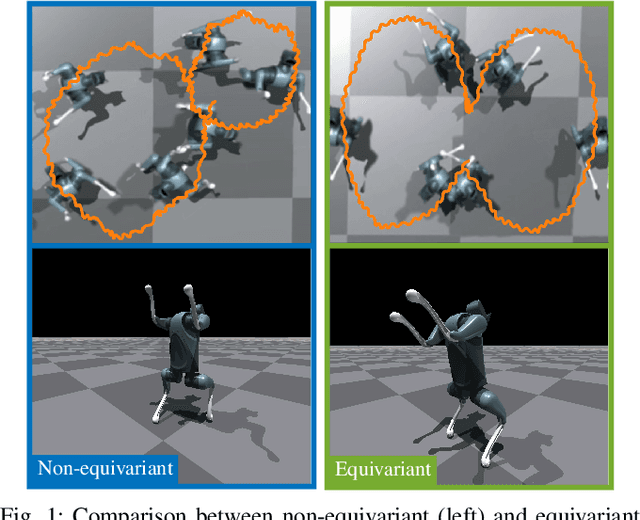

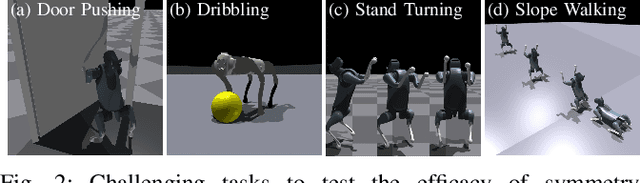

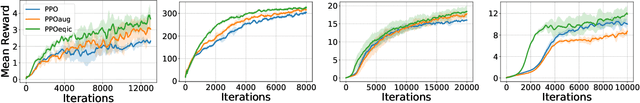

Model-free reinforcement learning is a promising approach for autonomously solving challenging robotics control problems, but faces exploration difficulty without information of the robot's kinematics and dynamics morphology. The under-exploration of multiple modalities with symmetric states leads to behaviors that are often unnatural and sub-optimal. This issue becomes particularly pronounced in the context of robotic systems with morphological symmetries, such as legged robots for which the resulting asymmetric and aperiodic behaviors compromise performance, robustness, and transferability to real hardware. To mitigate this challenge, we can leverage symmetry to guide and improve the exploration in policy learning via equivariance/invariance constraints. In this paper, we investigate the efficacy of two approaches to incorporate symmetry: modifying the network architectures to be strictly equivariant/invariant, and leveraging data augmentation to approximate equivariant/invariant actor-critics. We implement the methods on challenging loco-manipulation and bipedal locomotion tasks and compare with an unconstrained baseline. We find that the strictly equivariant policy consistently outperforms other methods in sample efficiency and task performance in simulation. In addition, symmetry-incorporated approaches exhibit better gait quality, higher robustness and can be deployed zero-shot in real-world experiments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge