Zongxin Liu

Training Verification-Friendly Neural Networks via Neuron Behavior Consistency

Dec 17, 2024

Abstract: Formal verification provides critical security assurances for neural networks, yet its practical application is hampered by long verification times. This work introduces a novel method for training verification-friendly neural networks, which are robust, easy to verify, and relatively accurate. Our method integrates neuron behavior consistency into the training process, making neuron activation states consistent across different inputs in a local neighborhood. This reduces the number of unstable neurons and tightens the bounds of neurons, thereby enhancing neural network verifiability. We evaluated our method using the MNIST, Fashion-MNIST, and CIFAR-10 datasets across various network architectures. The experimental results demonstrate that networks trained using our method are verification-friendly across different radii and different model architectures, whereas other tools fail to maintain verifiability as the radius increases. We also show that our method can be combined with existing methods to further improve the verifiability of networks.
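To make the notion of an "unstable neuron" concrete, the following is a minimal sketch and not the paper's training method. It assumes a single affine layer followed by ReLU and an L-infinity ball of radius `eps` around the input, and it uses plain interval arithmetic to bound each pre-activation. A neuron counts as unstable when its lower bound is negative and its upper bound is positive, so its activation state can differ between inputs in the neighborhood. The function names and the toy weights are hypothetical.

```python
def interval_affine(lower, upper, weights, bias):
    """Propagate the box [lower, upper] through y = W x + b with interval arithmetic."""
    out_lo, out_hi = [], []
    for row, b in zip(weights, bias):
        lo = hi = b
        for w, l, u in zip(row, lower, upper):
            if w >= 0:
                lo += w * l
                hi += w * u
            else:
                # A negative weight swaps which input bound yields the extreme.
                lo += w * u
                hi += w * l
        out_lo.append(lo)
        out_hi.append(hi)
    return out_lo, out_hi


def count_unstable(x, eps, weights, bias):
    """Count ReLU neurons whose pre-activation bounds straddle zero on the eps-ball around x."""
    lower = [v - eps for v in x]
    upper = [v + eps for v in x]
    lo, hi = interval_affine(lower, upper, weights, bias)
    return sum(1 for l, u in zip(lo, hi) if l < 0 < u)


# Toy layer (hypothetical values): 2 inputs, 3 neurons.
W = [[1.0, -1.0], [2.0, 1.0], [-1.0, -1.0]]
b = [0.0, 1.0, -0.5]
x = [0.5, 0.4]

small_radius = count_unstable(x, 0.01, W, b)
large_radius = count_unstable(x, 0.1, W, b)
print(small_radius, large_radius)
```

In this toy layer the first neuron has a pre-activation close to zero, so it is stable at the small radius and becomes unstable at the larger one. Each unstable neuron is a case a verifier may need to split on, which is why reducing their number tends to shorten verification.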

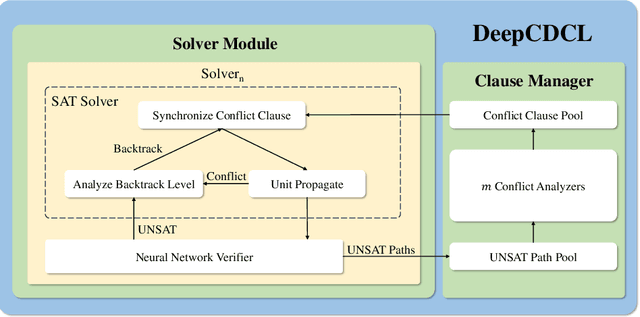

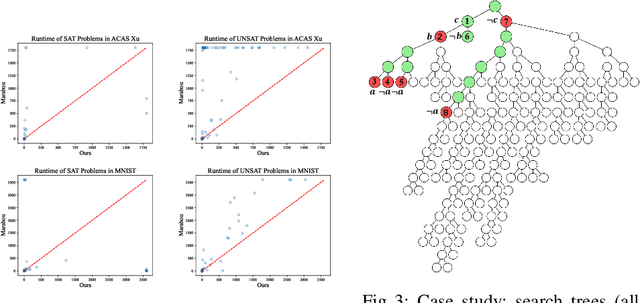

DeepCDCL: An CDCL-based Neural Network Verification Framework

Mar 12, 2024

Abstract: Neural networks in safety-critical applications face increasing safety and security concerns due to their susceptibility to small perturbations. In this paper, we propose DeepCDCL, a novel neural network verification framework based on the Conflict-Driven Clause Learning (CDCL) algorithm. We introduce an asynchronous clause learning and management structure, which reduces redundant time consumption compared to a direct application of the CDCL framework. We also provide a detailed evaluation of the performance of our approach on the ACAS Xu and MNIST datasets, showing that a significant speed-up is achieved in most cases.

Incremental Satisfiability Modulo Theory for Verification of Deep Neural Networks

Feb 10, 2023

Abstract: Constraint solving is an elementary approach to the verification of deep neural networks (DNNs). In the domain of AI safety, a DNN might be modified in its structure and parameters for repair or attack. For such situations, we propose the incremental DNN verification problem, which asks whether a safety property still holds after the DNN is modified. To solve the problem, we present an incremental satisfiability modulo theory (SMT) algorithm based on the Reluplex framework. We simulate the most important features of the configurations that infer the verification results of the search branches in the old solving procedure (with respect to the original network), and heuristically check whether the proofs are still valid for the modified DNN. We implement our algorithm as an incremental solver called DeepInc, and experimental results show that DeepInc is more efficient in most cases. For cases in which the property holds both before and after modification, the speed-up can reach several orders of magnitude, showing that DeepInc is outstanding in incrementally searching for counterexamples. Moreover, based on the framework, we propose the multi-objective DNN repair problem and give an algorithm based on our incremental SMT solving algorithm. Our repair method preserves more potential safety properties on the repaired DNNs compared with the state of the art.
