Ziqian Shao

Deep Video Restoration for Under-Display Camera

Sep 09, 2023

Abstract: Images or videos captured by the Under-Display Camera (UDC) suffer from severe degradation, such as saturation degeneration and color shift. While restoration for UDC has been a critical task, existing work on UDC restoration focuses only on images. UDC video restoration (UDC-VR) has not been explored in the community. In this work, we first propose a GAN-based generation pipeline to simulate the realistic UDC degradation process. With the pipeline, we build the first large-scale UDC video restoration dataset, called PexelsUDC, which includes two subsets, PexelsUDC-T and PexelsUDC-P, corresponding to different displays for UDC. Using the proposed dataset, we conduct extensive benchmark studies on existing video restoration methods and observe their limitations on the UDC-VR task. To address these limitations, we propose a novel transformer-based baseline method that adaptively enhances degraded videos. The key components of the method are a spatial branch with local-aware transformers, a temporal branch with embedded temporal transformers, and a spatial-temporal fusion module. These components drive the model to fully exploit spatial and temporal information for UDC-VR. Extensive experiments show that our method achieves state-of-the-art performance on PexelsUDC. The benchmark and the baseline method, which will be made public, are expected to promote the progress of UDC-VR in the community.

LLDiffusion: Learning Degradation Representations in Diffusion Models for Low-Light Image Enhancement

Jul 27, 2023

Abstract: Current deep learning methods for low-light image enhancement (LLIE) typically rely on pixel-wise mapping learned from paired data. However, these methods often overlook degradation representations, which can lead to sub-optimal outcomes. In this paper, we address this limitation by proposing a degradation-aware learning scheme for LLIE using diffusion models, which effectively integrates degradation and image priors into the diffusion process, resulting in improved image enhancement. Our proposed degradation-aware learning scheme is based on the understanding that degradation representations play a crucial role in accurately modeling and capturing the specific degradation patterns present in low-light images. To this end, we first present a joint learning framework for both image generation and image enhancement to learn the degradation representations. Second, to leverage the learned degradation representations, we develop a Low-Light Diffusion model (LLDiffusion) with a well-designed dynamic diffusion module. This module takes into account both the color map and the latent degradation representations to guide the diffusion process. By incorporating these conditioning factors, the proposed LLDiffusion can effectively enhance low-light images, considering both the inherent degradation patterns and the desired color fidelity. Finally, we evaluate our proposed method on several well-known benchmark datasets, including synthetic and real-world unpaired datasets. Extensive experiments on public benchmarks demonstrate that our LLDiffusion outperforms state-of-the-art LLIE methods both quantitatively and qualitatively. The source code and pre-trained models are available at https://github.com/TaoWangzj/LLDiffusion.

GridFormer: Residual Dense Transformer with Grid Structure for Image Restoration in Adverse Weather Conditions

May 29, 2023

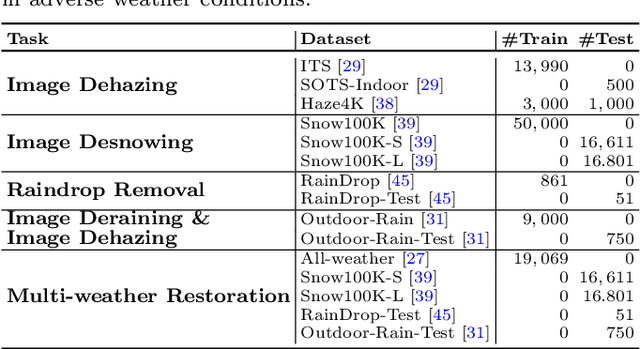

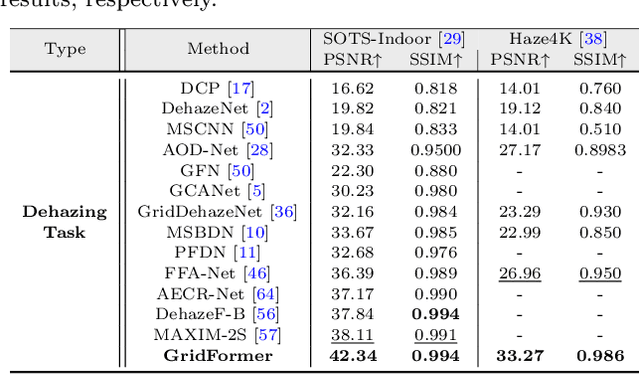

Abstract: Image restoration in adverse weather conditions is a difficult task in computer vision. In this paper, we propose a novel transformer-based framework called GridFormer, which serves as a backbone for image restoration under adverse weather conditions. GridFormer is designed in a grid structure using a residual dense transformer block, and it introduces two core designs. First, it uses an enhanced attention mechanism in the transformer layer. The mechanism comprises a sampler stage and a compact self-attention stage to improve efficiency, and a local enhancement stage to strengthen local information. Second, we introduce a residual dense transformer block (RDTB) as the final GridFormer layer. This design further improves the network's ability to learn effective features from both preceding and current local features. The GridFormer framework achieves state-of-the-art results on five diverse image restoration tasks in adverse weather conditions, including image deraining, dehazing, deraining & dehazing, desnowing, and multi-weather restoration. The source code and pre-trained models will be released.
