Zhuangzhuang Dai

Multi-Head Adaptive Graph Convolution Network for Sparse Point Cloud-Based Human Activity Recognition

Apr 03, 2025

Abstract: Human activity recognition is increasingly vital for supporting independent living, particularly for the elderly and those in need of assistance. Domestic service robots with monitoring capabilities can enhance safety and provide essential support. Although image-based methods have advanced considerably in the past decade, their adoption remains limited by concerns over privacy and sensitivity to low-light or dark conditions. As an alternative, millimetre-wave (mmWave) radar can produce point cloud data which is privacy-preserving. However, processing the sparse and noisy point clouds remains a long-standing challenge. While graph-based methods and attention mechanisms show promise, they predominantly rely on "fixed" kernels, that is, kernels applied uniformly across all neighbourhoods. This highlights the need for adaptive approaches that can dynamically adjust their kernels to the specific geometry of each local neighbourhood in point cloud data. To overcome this limitation, we introduce an adaptive approach within the graph convolutional framework. Instead of a single shared weight function, our Multi-Head Adaptive Kernel (MAK) module generates multiple dynamic kernels, each capturing different aspects of the local feature space. By progressively refining local features while maintaining global spatial context, our method enables convolution kernels to adapt to varying local features. Experimental results on benchmark datasets confirm the effectiveness of our approach, achieving state-of-the-art performance in human activity recognition. Our source code is made publicly available at: https://github.com/Gbouna/MAK-GCN

Action Recognition in Real-World Ambient Assisted Living Environment

Mar 29, 2025

Abstract: The growing ageing population and their preference to maintain independence by living in their own homes require proactive strategies to ensure safety and support. Ambient Assisted Living (AAL) technologies have emerged to facilitate ageing in place by offering continuous monitoring and assistance within the home. Within AAL technologies, action recognition plays a crucial role in interpreting human activities and detecting incidents like falls, mobility decline, or unusual behaviours that may signal worsening health conditions. However, action recognition in practical AAL applications presents challenges, including occlusions, noisy data, and the need for real-time performance. While advancements have been made in accuracy, robustness to noise, and computational efficiency, achieving a balance among all three remains a challenge. To address this challenge, this paper introduces the Robust and Efficient Temporal Convolution network (RE-TCN), which comprises three main elements: Adaptive Temporal Weighting (ATW), Depthwise Separable Convolutions (DSC), and data augmentation techniques. These elements aim to enhance the model's accuracy, robustness against noise and occlusion, and computational efficiency within real-world AAL contexts. RE-TCN outperforms existing models in accuracy and in robustness to noise and occlusion, and has been validated on four benchmark datasets: NTU RGB+D 60, Northwestern-UCLA, SHREC'17, and DHG-14/28. The code is publicly available at: https://github.com/Gbouna/RE-TCN
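To illustrate why depthwise separable convolutions are usually associated with computational efficiency, the following minimal sketch compares weight counts for a standard 1D convolution and a depthwise-plus-pointwise pair. The function names and the channel and kernel sizes are illustrative assumptions, not values taken from RE-TCN.

```python
def standard_conv1d_params(c_in: int, c_out: int, kernel_size: int) -> int:
    """Weight count of a standard 1D convolution (bias terms omitted)."""
    return c_in * c_out * kernel_size


def depthwise_separable_conv1d_params(c_in: int, c_out: int, kernel_size: int) -> int:
    """Weight count of a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = c_in * kernel_size  # one temporal filter per input channel
    pointwise = c_in * c_out        # 1x1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes chosen only for illustration.
standard = standard_conv1d_params(64, 64, 9)
separable = depthwise_separable_conv1d_params(64, 64, 9)
print(f"standard={standard}, separable={separable}")
```

With these assumed sizes the separable variant needs roughly one eighth of the weights; the actual saving depends on the channel counts and kernel size of the layer in question.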

SoundCount: Sound Counting from Raw Audio with Dyadic Decomposition Neural Network

Dec 26, 2023

Abstract: In this paper, we study an underexplored, yet important and challenging problem: counting the number of distinct sounds in raw audio characterized by a high degree of polyphonicity. We do so by proposing a novel end-to-end trainable neural network, DyDecNet, which consists of a dyadic decomposition front-end and a backbone network, and by quantifying the difficulty of counting as a function of sound polyphonicity. The dyadic decomposition front-end progressively decomposes the raw waveform dyadically along the frequency axis to obtain a time-frequency representation in a multi-stage, coarse-to-fine manner. Each intermediate waveform convolved by a parent filter is further processed by a pair of child filters that evenly split the parent filter's carried frequency response, with the higher-half child filter encoding the detail and the lower-half child filter encoding the approximation. We further introduce an energy gain normalization to normalize sound loudness variance and spectrum overlap, and apply it to each intermediate parent waveform before feeding it to the two child filters. To better quantify the difficulty of sound counting, we further design three polyphony-aware metrics: polyphony ratio, max polyphony and mean polyphony. We test DyDecNet on various datasets to show its superiority, and we further show that the dyadic decomposition network can be used as a general front-end to tackle other acoustic tasks.
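The even parent-to-child band splitting described in this abstract can be sketched as a simple recursion over frequency intervals. This is only an illustration of the dyadic band layout, not the DyDecNet filters themselves; the function name `dyadic_bands` and the 0 to 8000 Hz range are assumptions made for the example.

```python
from typing import List, Tuple

Band = Tuple[float, float]  # (lower edge, upper edge) in Hz


def dyadic_bands(f_low: float, f_high: float, stages: int) -> List[List[Band]]:
    """Return the frequency bands at every stage of an even dyadic split.

    Stage 0 holds the full band; each later stage splits every parent band
    into a lower half (approximation) and an upper half (detail).
    """
    levels: List[List[Band]] = [[(f_low, f_high)]]
    for _ in range(stages):
        children: List[Band] = []
        for lo, hi in levels[-1]:
            mid = (lo + hi) / 2.0
            children.append((lo, mid))  # lower-half child: approximation
            children.append((mid, hi))  # upper-half child: detail
        levels.append(children)
    return levels


# Hypothetical example: three stages over an assumed 0-8000 Hz range.
for stage, bands in enumerate(dyadic_bands(0.0, 8000.0, 3)):
    print(stage, bands)
```

Each stage doubles the number of bands and halves their width, which is the coarse-to-fine structure the abstract refers to.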

Detecting Worker Attention Lapses in Human-Robot Interaction: An Eye Tracking and Multimodal Sensing Study

Apr 20, 2023

Abstract: The advent of industrial robotics and autonomous systems enables human-robot collaboration on a massive scale. However, current industrial robots are restricted in working alongside humans in close proximity because they cannot interpret human agents' attention. Studying human attention is non-trivial since it involves multiple aspects of the mind: perception, memory, problem solving, and consciousness. Human attention lapses are particularly problematic and potentially catastrophic in industrial workplaces, from assembling electronics to operating machines. Attention is indeed complex and cannot be easily measured with single-modality sensors. Eye state, head pose, posture, and manifold environmental stimuli could all play a part in attention lapses. To this end, we propose a pipeline to annotate a multimodal dataset of human attention tracking, including eye tracking, fixation detection, third-person surveillance camera, and sound. We produce a pilot dataset containing two fully annotated phone assembly sequences in a realistic manufacturing environment. We evaluate existing fatigue and drowsiness prediction methods for attention lapse detection. Experimental results show that human attention lapses in production scenarios are more subtle and imperceptible than well-studied fatigue and drowsiness.

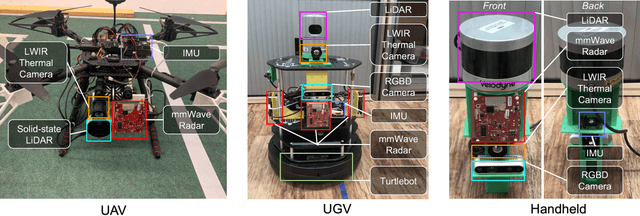

OdomBeyondVision: An Indoor Multi-modal Multi-platform Odometry Dataset Beyond the Visible Spectrum

Jun 03, 2022

Abstract: This paper presents a multimodal indoor odometry dataset, OdomBeyondVision, featuring multiple sensors across different spectra and collected with different mobile platforms. Not only does OdomBeyondVision contain traditional navigation sensors such as IMUs, mechanical LiDAR, and an RGBD camera, it also includes several emerging sensors such as single-chip mmWave radar, an LWIR thermal camera, and solid-state LiDAR. With the above sensors on UAV, UGV and handheld platforms, we recorded multimodal odometry data and the corresponding movement trajectories in various indoor scenes and under different illumination conditions. We release exemplar radar, radar-inertial and thermal-inertial odometry implementations to demonstrate their results for future work to compare against and improve upon. The full dataset including toolkit and documentation is publicly available at: https://github.com/MAPS-Lab/OdomBeyondVision.

Deep Odometry Systems on Edge with EKF-LoRa Backend for Real-Time Positioning in Adverse Environment

Dec 10, 2021

Abstract: Ubiquitous positioning for pedestrians in adverse environments has been a long-standing challenge. Despite dramatic progress made by deep learning, multi-sensor deep odometry systems still incur a high computational cost and suffer from cumulative drift errors over time. Thanks to the increasing computational power of edge devices, we propose a novel ubiquitous positioning solution by integrating state-of-the-art deep odometry models on edge with an EKF (Extended Kalman Filter)-LoRa backend. We carefully compare and select three sensor modalities, i.e., an Inertial Measurement Unit (IMU), a millimetre-wave (mmWave) radar, and a thermal infrared camera, and realise their deep odometry inference engines, which run in real time. A pipeline for deploying deep odometry that considers accuracy, complexity, and edge platform is proposed. We design a LoRa link for positional data backhaul and for projecting aggregated positions of deep odometry into the global frame. We find that a simple EKF-based fusion module is sufficient for generic positioning calibration, with over 34% accuracy gains against any standalone deep odometry system. Extensive tests in different environments validate the efficiency and efficacy of our proposed positioning system.
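As a rough illustration of how filter-based fusion can limit cumulative drift, the sketch below implements a scalar linear Kalman filter, a deliberately simplified stand-in for the EKF named in the abstract. The class name, the one-dimensional state, and the availability of an absolute position fix are all assumptions made for this example; the abstract does not specify the filter's state or measurement model.

```python
from dataclasses import dataclass


@dataclass
class ScalarPositionFilter:
    """1D linear Kalman filter: a simplified, hypothetical stand-in for an EKF."""

    x: float  # position estimate
    p: float  # variance of the estimate

    def predict(self, delta: float, q: float) -> None:
        """Dead-reckon with an odometry increment; uncertainty grows by q."""
        self.x += delta
        self.p += q

    def update(self, z: float, r: float) -> None:
        """Correct with an assumed absolute position fix z of variance r."""
        gain = self.p / (self.p + r)
        self.x += gain * (z - self.x)
        self.p *= 1.0 - gain


# Hypothetical numbers: one odometry step followed by one absolute fix.
f = ScalarPositionFilter(x=0.0, p=1.0)
f.predict(delta=1.0, q=0.5)
f.update(z=2.0, r=1.5)
print(f.x, f.p)
```

The prediction step accumulates uncertainty, which models drift, while each update pulls the estimate towards the fix and shrinks the variance. A full EKF would additionally linearise a nonlinear motion or measurement model around the current estimate.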

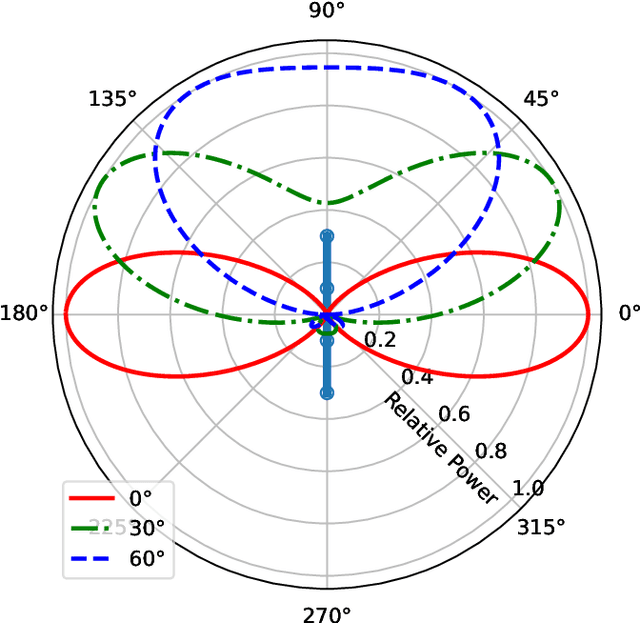

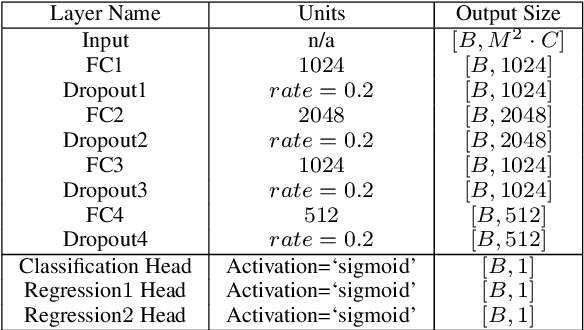

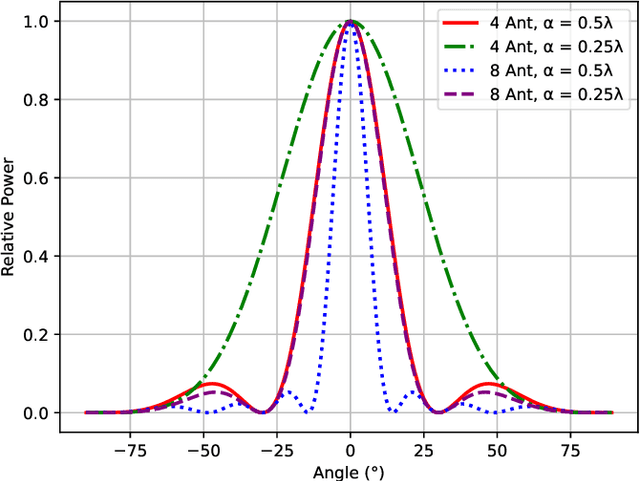

DeepAoANet: Learning Angle of Arrival from Software Defined Radios with Deep Neural Networks

Dec 09, 2021

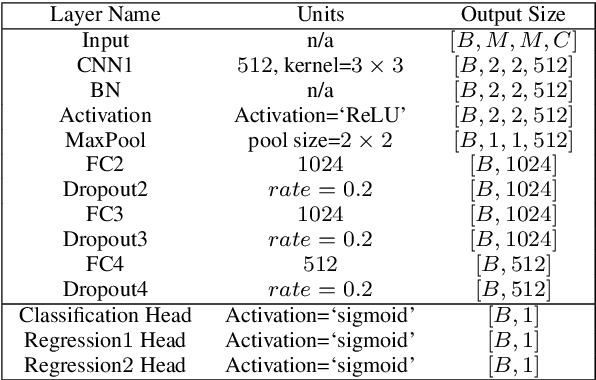

Abstract: Direction finding and positioning systems based on RF signals are significantly impacted by multipath propagation, particularly in indoor environments. Existing algorithms (e.g., MUSIC) perform poorly in resolving Angle of Arrival (AoA) in the presence of multipath or when operating in a weak signal regime. We note that digitally sampled RF frontends allow for the easy analysis of signals and their delayed components. Low-cost Software-Defined Radio (SDR) modules enable Channel State Information (CSI) extraction across a wide spectrum, motivating the design of an enhanced AoA solution. We propose a deep learning approach to deriving AoA from a single snapshot of the SDR multichannel data. We compare and contrast deep-learning-based angle classification and regression models to estimate up to two AoAs accurately. We have implemented the inference engines on different platforms to extract AoAs in real time, demonstrating the computational tractability of our approach. To demonstrate the utility of our approach, we have collected IQ (In-phase and Quadrature components) samples from a four-element Uniform Linear Array (ULA) in various Line-of-Sight (LOS) and Non-Line-of-Sight (NLOS) environments, and published the dataset. Our proposed method demonstrates excellent reliability in determining the number of impinging signals and achieves mean absolute AoA errors of less than $2^{\circ}$.
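For context on what an AoA estimator has to recover, the sketch below shows the classical narrowband, single-path relation between arrival angle and the phase difference across two adjacent elements of a uniform linear array. It is a textbook model, not the deep learning method proposed in the paper; the function names and the half-wavelength element spacing are assumptions for this example.

```python
import math


def phase_difference(theta_deg: float, spacing_wavelengths: float = 0.5) -> float:
    """Phase lag (radians) between adjacent elements for a plane wave arriving at theta_deg.

    The angle is measured from broadside; spacing is given in wavelengths.
    """
    return 2.0 * math.pi * spacing_wavelengths * math.sin(math.radians(theta_deg))


def aoa_from_phase(delta_phi: float, spacing_wavelengths: float = 0.5) -> float:
    """Invert the relation above to recover the arrival angle in degrees."""
    s = delta_phi / (2.0 * math.pi * spacing_wavelengths)
    s = max(-1.0, min(1.0, s))  # clamp to guard against rounding or noise
    return math.degrees(math.asin(s))


# Round trip for an assumed 30 degree arrival angle.
print(aoa_from_phase(phase_difference(30.0)))
```

This closed-form inversion only holds for a single clean path. Under multipath the measured phase mixes several arrivals, which is the regime the abstract says motivates a learned estimator.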

Demo Abstract: Indoor Positioning System in Visually-Degraded Environments with Millimetre-Wave Radar and Inertial Sensors

Oct 26, 2020

Abstract: Positional estimation is of great importance in the public safety sector. Emergency responders such as firefighters, medical rescue teams, and the police will all benefit from a resilient positioning system to deliver safe and effective emergency services. Unfortunately, satellite navigation (e.g., GPS) offers limited coverage in indoor environments. It is also not possible to rely on infrastructure-based solutions. To this end, wearable sensor-aided navigation techniques, such as those based on cameras and Inertial Measurement Units (IMUs), have recently emerged as an accurate, infrastructure-free solution. Together with an increase in the computational capabilities of mobile devices, motion estimation can be performed in real time. In this demonstration, we present a real-time indoor positioning system which fuses millimetre-wave (mmWave) radar and IMU data via deep sensor fusion. We employ mmWave radar rather than an RGB camera as it provides better robustness to visual degradation (e.g., smoke, darkness) while at the same time requiring lower computational resources to enable runtime computation. We implemented the sensor system on a handheld device and a mobile computer running at 10 FPS to track a user inside an apartment. Good accuracy and resilience were exhibited even in poorly illuminated scenes.
