Zhixing Du

Cost-Effective RF Fingerprinting Based on Hybrid CVNN-RF Classifier with Automated Multi-Dimensional Early-Exit Strategy

Jun 21, 2024

Abstract: While Internet of Things (IoT) technology is booming and offers huge opportunities for information exchange, it also faces unprecedented security challenges. As an important complement to physical-layer security technologies for IoT, radio frequency fingerprinting (RFF) is of great interest because it is difficult to counterfeit. Recently, many machine learning (ML)-based RFF algorithms have emerged. In particular, deep learning (DL) has shown great benefits in automatically extracting complex and subtle features from raw data with high classification accuracy. However, DL algorithms face a computational cost problem, as both the difficulty of the RFF task and the size of the deep neural network (DNN) have increased dramatically. To address this challenge, this paper proposes a novel cost-effective early-exit neural network consisting of a complex-valued neural network (CVNN) backbone with multiple random forest branches, called hybrid CVNN-RF. Unlike conventional studies that use a single fixed DL model to process all RF samples, our hybrid CVNN-RF considers differences in the recognition difficulty of RF samples and introduces an early-exit mechanism to process the samples dynamically. When processing "easy" samples that can be classified with high confidence, the hybrid CVNN-RF can exit early at a random forest branch to reduce computational cost. For harder samples, subsequent network layers are activated to preserve accuracy. To further improve the early-exit rate, an automated multi-dimensional early-exit strategy is proposed that controls exit scheduling along two dimensions: network depth and classification category. Finally, our experiments on the public ADS-B dataset show that the proposed algorithm can reduce the computational cost by 83% while improving the accuracy by 1.6% on a classification task with 100 categories.
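The early-exit control flow described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `early_exit_predict`, the callable stand-ins for the CVNN stages and random forest branches, and the hand-supplied `thresholds[depth][label]` table are all assumptions made for illustration. The abstract says the exit strategy is automated, but it does not say how the thresholds are found, so that search is omitted here.

```python
from typing import Callable, List, Sequence, Tuple

Features = List[float]


def early_exit_predict(
    x: Features,
    stages: Sequence[Callable[[Features], Features]],
    branches: Sequence[Callable[[Features], List[float]]],
    thresholds: Sequence[Sequence[float]],
) -> Tuple[int, int]:
    """Return (predicted_label, exit_depth) for one sample.

    stages[d]   : hypothetical stand-in for the d-th backbone block.
    branches[d] : hypothetical stand-in for the branch classifier at depth d,
                  returning one probability per class.
    thresholds  : thresholds[d][c] is the confidence required to exit at
                  depth d when the branch predicts class c (assumed layout).
    """
    if not stages or len(stages) != len(branches):
        raise ValueError("need one branch per stage and at least one stage")

    features = x
    last = len(stages) - 1
    for depth, (stage, branch) in enumerate(zip(stages, branches)):
        features = stage(features)          # run only as deep as needed
        probs = branch(features)
        label = max(range(len(probs)), key=probs.__getitem__)
        # Exit when confident enough for this depth and class,
        # or unconditionally at the final branch.
        if depth == last or probs[label] >= thresholds[depth][label]:
            return label, depth
    raise AssertionError("unreachable: the final branch always exits")
```

Indexing the threshold by both depth and predicted class is one plausible reading of "multi-dimensional": a class that is easy to recognize can be given a lower exit threshold at shallow depths, which raises the early-exit rate without relaxing the bar for harder classes.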

Distilling Object Detectors with Feature Richness

Nov 02, 2021

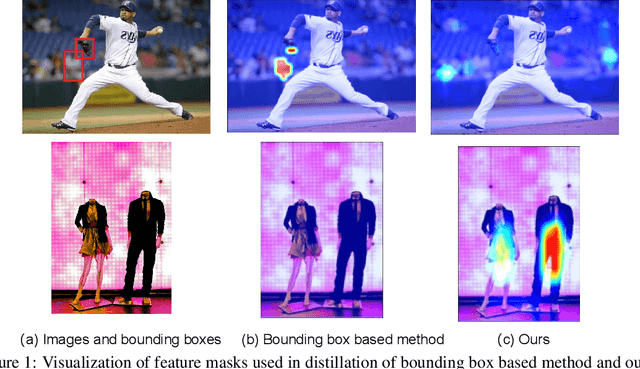

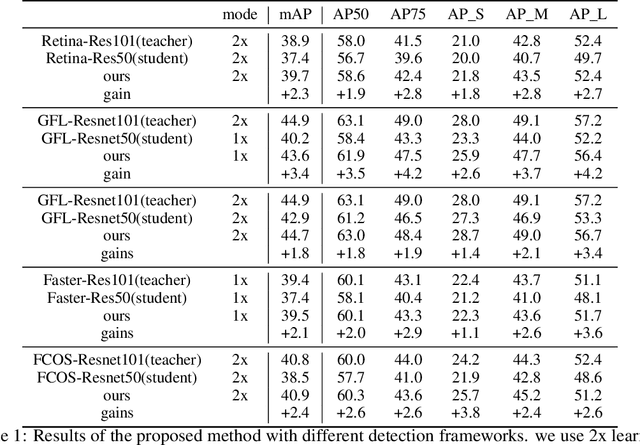

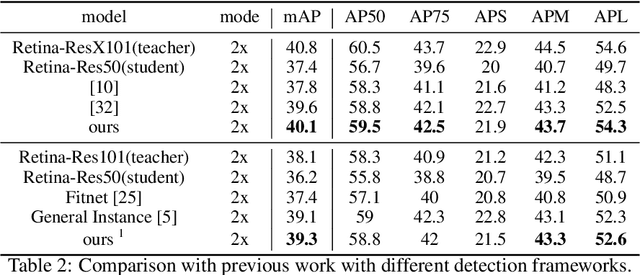

Abstract: In recent years, large-scale deep models have achieved great success, but their huge computational complexity and massive storage requirements make them very challenging to deploy on resource-limited devices. As a model compression and acceleration method, knowledge distillation effectively improves the performance of small models by transferring dark knowledge from a teacher model. However, most existing distillation-based detection methods mainly imitate features near bounding boxes, which leads to two limitations. First, they ignore the beneficial features outside the bounding boxes. Second, they imitate some features that the teacher detector mistakenly regards as background. To address these issues, we propose a novel Feature-Richness Score (FRS) method that selects important features that improve generalized detectability during distillation. The proposed method effectively retrieves the important features outside the bounding boxes and removes the detrimental features within the bounding boxes. Extensive experiments show that our method achieves excellent performance on both anchor-based and anchor-free detectors. For example, RetinaNet with ResNet-50 achieves 39.7% mAP on the COCO2017 dataset, surpassing the ResNet-101-based teacher detector (38.9%) by 0.8%.
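The idea of weighting feature imitation by a per-location richness score, rather than by a bounding-box mask, can be sketched as follows. The abstract does not define how the score is computed, so this sketch assumes it is the teacher's maximum class probability at each spatial location. The function name `richness_weighted_mse`, the flat list-of-locations layout, and the normalization by the sum of scores are likewise illustrative assumptions.

```python
from typing import List, Sequence


def richness_weighted_mse(
    student: Sequence[Sequence[float]],
    teacher: Sequence[Sequence[float]],
    teacher_cls_probs: Sequence[Sequence[float]],
) -> float:
    """Imitation loss weighted by an assumed per-location richness score.

    student, teacher  : one feature vector per spatial location.
    teacher_cls_probs : the teacher's class probabilities at each location.
    The score max(probs) is an assumption, not taken from the abstract.
    """
    total = 0.0
    weight_sum = 0.0
    for s, t, probs in zip(student, teacher, teacher_cls_probs):
        score = max(probs)  # high where the teacher sees object evidence
        sq_err = sum((a - b) ** 2 for a, b in zip(s, t)) / len(s)
        total += score * sq_err
        weight_sum += score
    # Normalizing by the total score is a design choice of this sketch.
    return total / weight_sum if weight_sum > 0 else 0.0
```

Under this assumption, a location outside every box still contributes if the teacher responds strongly there, and a location inside a box contributes little if the teacher treats it as background, which matches the two limitations the abstract sets out to address.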
