Ming Chang

3D-EffiViTCaps: 3D Efficient Vision Transformer with Capsule for Medical Image Segmentation

Mar 25, 2024

Abstract: Medical image segmentation (MIS) aims to finely segment various organs. It requires capturing global information from both local parts and the entire image for better segmentation, and clinical use often imposes requirements on segmentation efficiency. Convolutional neural networks (CNNs) have made considerable achievements in MIS. However, they struggle to fully capture global context information, and their pooling layers may cause information loss. Capsule networks, which combine the benefits of CNNs while taking into account additional information such as relative location that CNNs do not, have lately demonstrated some advantages in MIS. Vision Transformer (ViT) applies transformers to visual tasks. Transformers, based on the attention mechanism, have excellent global inductive modeling capabilities and are expected to capture long-range information. Moreover, there have been recent studies on making ViT more lightweight to minimize model complexity and increase efficiency. In this paper, we propose a U-shaped 3D encoder-decoder network named 3D-EffiViTCaps, which combines 3D capsule blocks with 3D EfficientViT blocks for MIS. Our encoder uses capsule blocks and EfficientViT blocks to jointly capture local and global semantic information more effectively and efficiently with less information loss, while the decoder employs CNN blocks and EfficientViT blocks to capture finer details for segmentation. We conduct experiments on various datasets, including iSeg-2017, Hippocampus, and Cardiac, to verify the performance and efficiency of 3D-EffiViTCaps, which performs better than previous 3D CNN-based, 3D capsule-based, and 3D Transformer-based models. We further conduct a series of ablation experiments on the main blocks. Our code is available at: https://github.com/HidNeuron/3D-EffiViTCaps.

Distilling Object Detectors with Feature Richness

Nov 02, 2021

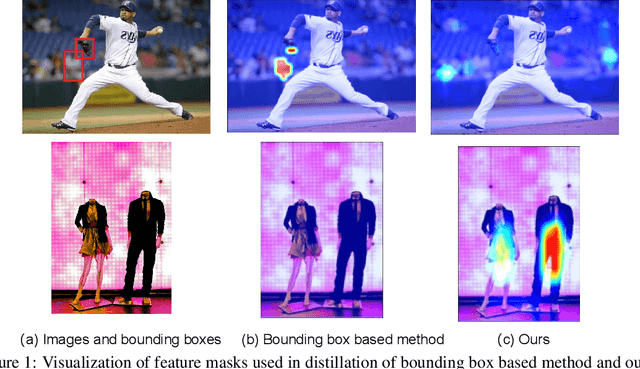

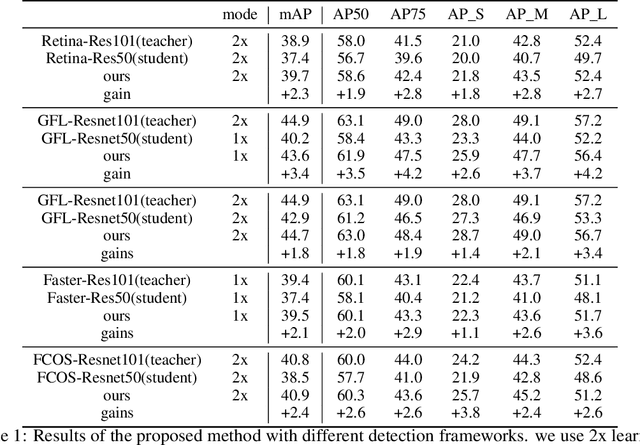

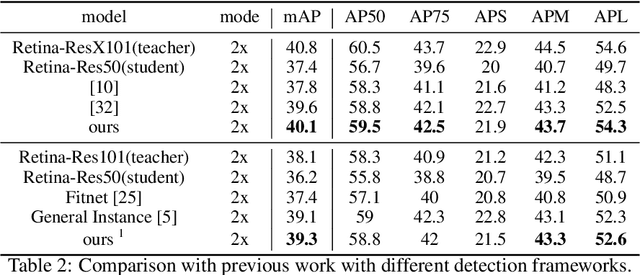

Abstract: In recent years, large-scale deep models have achieved great success, but their huge computational complexity and massive storage requirements make it a great challenge to deploy them on resource-limited devices. As a model compression and acceleration method, knowledge distillation effectively improves the performance of small models by transferring the dark knowledge from the teacher detector. However, most existing distillation-based detection methods mainly imitate features near bounding boxes, which suffers from two limitations. First, they ignore the beneficial features outside the bounding boxes. Second, these methods imitate some features that are mistakenly regarded as background by the teacher detector. To address these issues, we propose a novel Feature-Richness Score (FRS) method to choose important features that improve generalized detectability during distillation. The proposed method effectively retrieves the important features outside the bounding boxes and removes the detrimental features within the bounding boxes. Extensive experiments show that our method achieves excellent performance on both anchor-based and anchor-free detectors. For example, RetinaNet with ResNet-50 achieves 39.7% mAP on the COCO2017 dataset, which even surpasses the ResNet-101-based teacher detector (38.9%) by 0.8%.
