Zhiqiang Zhuang

Bridging the Gap Between Estimated and True Regret: Towards Reliable Regret Estimation in Deep Learning based Mechanism Design

Jan 20, 2026

Abstract: Recent advances, such as RegretNet, ALGnet, RegretFormer, and CITransNet, use deep learning to approximate optimal multi-item auctions by relaxing incentive compatibility (IC) and measuring its violation via ex post regret. However, the true accuracy of these regret estimates remains unclear. Computing exact regret is computationally intractable, and current models rely on gradient-based optimizers whose outcomes depend heavily on hyperparameter choices. Through extensive experiments, we reveal that existing methods systematically underestimate actual regret (in some models, the true regret is several hundred times larger than the reported regret), leading to overstated claims of IC and revenue. To address this issue, we derive a lower bound on regret and introduce an efficient item-wise regret approximation. Building on this, we propose a guided refinement procedure that substantially improves regret estimation accuracy while reducing computational cost. Our method provides a more reliable foundation for evaluating incentive compatibility in deep learning-based auction mechanisms and highlights the need to reassess prior performance claims in this area.
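A toy sketch may help show why a search-based regret estimate can understate true regret. It is not the paper's method: the single-item first-price auction, the function names, and the numbers are all illustrative assumptions. Ex post regret is the most utility a bidder could gain by misreporting instead of bidding truthfully, so an estimate that searches only some misreports can never exceed the true value and may fall well short of it.

```python
def utility(true_value: float, bid: float, rival_bid: float) -> float:
    """Toy first-price auction: the higher bid wins and pays its own bid."""
    return (true_value - bid) if bid > rival_bid else 0.0


def estimated_regret(true_value: float, rival_bid: float, misreports) -> float:
    """Largest utility gain over truthful bidding among the candidate misreports."""
    truthful = utility(true_value, true_value, rival_bid)
    best = max(utility(true_value, b, rival_bid) for b in misreports)
    return max(0.0, best - truthful)


def grid(n: int):
    """n + 1 evenly spaced candidate bids in [0, 1] (hypothetical search space)."""
    return [i / n for i in range(n + 1)]


true_value, rival_bid = 1.0, 0.3

# A coarse search only tries bids 0, 0.5, and 1.
coarse = estimated_regret(true_value, rival_bid, grid(2))
# A finer search gets close to the supremum of 0.7 (bid just above 0.3).
fine = estimated_regret(true_value, rival_bid, grid(1000))

print(f"coarse estimate: {coarse:.3f}, fine estimate: {fine:.3f}")
```

In this toy case the coarse search misses the best misreport, so its estimate sits below the finer one. Gradient-based misreport search in learned auctions can fall short in a similar way when it stops at a poor local optimum.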

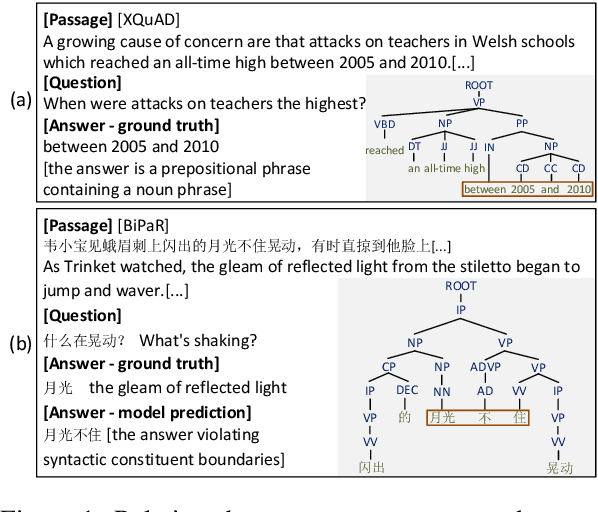

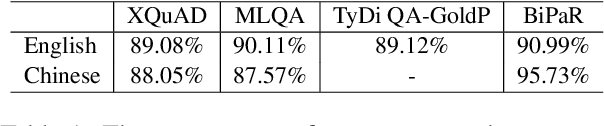

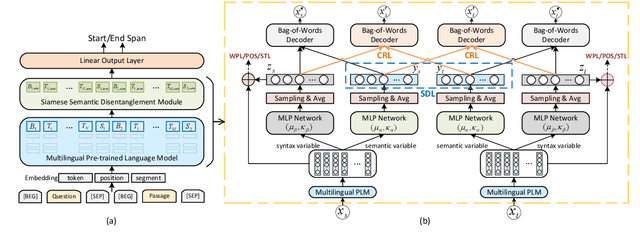

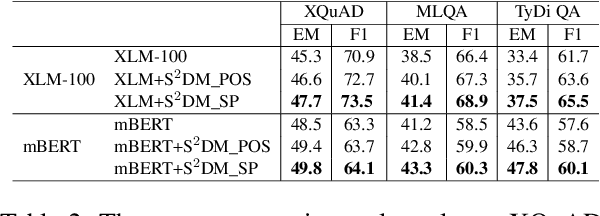

Learning Disentangled Semantic Representations for Zero-Shot Cross-Lingual Transfer in Multilingual Machine Reading Comprehension

Apr 03, 2022

Abstract: Multilingual pre-trained models are able to zero-shot transfer knowledge from rich-resource to low-resource languages in machine reading comprehension (MRC). However, inherent linguistic discrepancies in different languages could make answer spans predicted by zero-shot transfer violate syntactic constraints of the target language. In this paper, we propose a novel multilingual MRC framework equipped with a Siamese Semantic Disentanglement Model (SSDM) to disassociate semantics from syntax in representations learned by multilingual pre-trained models. To explicitly transfer only semantic knowledge to the target language, we propose two groups of losses tailored for semantic and syntactic encoding and disentanglement. Experimental results on three multilingual MRC datasets (i.e., XQuAD, MLQA, and TyDi QA) demonstrate the effectiveness of our proposed approach over models based on mBERT and XLM-100. Code is available at: https://github.com/wulinjuan/SSDM_MRC.

Syntax-Preserving Belief Change Operators for Logic Programs

Mar 17, 2017

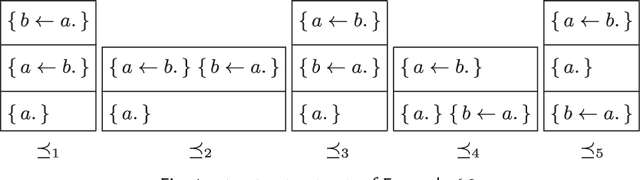

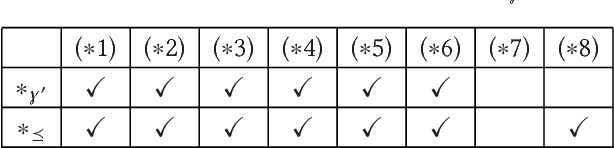

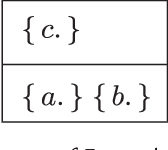

Abstract: Recent methods have adapted the well-established AGM and belief base frameworks for belief change to cover belief revision in logic programs. In this study, we present two new sets of belief change operators for logic programs. They focus on preserving the explicit relationships expressed in the rules of a program, a feature that is missing in purely semantic approaches that consider programs only in their entirety. In particular, operators of the latter class fail to satisfy preservation and support, two important properties for belief change in logic programs required to ensure intuitive results. We address this shortcoming of existing approaches by introducing partial meet and ensconcement constructions for logic program belief change, which allow us to define syntax-preserving operators that satisfy preservation and support. Our work is novel in that our constructions not only preserve more information from a logic program during a change operation than existing ones, but they also facilitate natural definitions of contraction operators, the first in the field to the best of our knowledge. In order to evaluate the rationality of our operators, we translate the revision and contraction postulates from the AGM and belief base frameworks to the logic programming setting. We show that our operators fully comply with the belief base framework and formally state the interdefinability between our operators. We further propose an algorithm that is based on modularising a logic program to reduce partial meet and ensconcement revisions or contractions to performing the operation only on the relevant modules of that program. Finally, we compare our approach to two state-of-the-art logic program revision methods and demonstrate that our operators address the shortcomings of one and generalise the other method.

A New Approach for Revising Logic Programs

Mar 31, 2016

Abstract: Belief revision has been studied mainly with respect to background logics that are monotonic in character. In this paper we study belief revision when the underlying logic is non-monotonic instead, an inherently interesting problem that is underexplored. In particular, we will focus on the revision of a body of beliefs that is represented as a logic program under the answer set semantics, while the new information is also similarly represented as a logic program. Our approach is driven by the observation that unlike in a monotonic setting where, when necessary, consistency in a revised body of beliefs is maintained by jettisoning some old beliefs, in a non-monotonic setting consistency can be restored by adding new beliefs as well. We will define a syntactic revision function and subsequently provide a representation theorem for characterising it.
