Abhaya Nayak

On Conforming and Conflicting Values

Jul 08, 2019

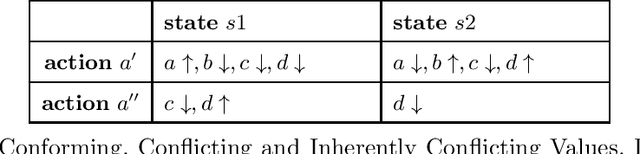

Abstract: Values are things that are important to us. Actions activate values - they either go against our values or they promote our values. Values themselves can either be conforming or conflicting depending on the action that is taken. In this short paper, we argue that values may be classified as one of two types - conflicting and inherently conflicting values. They are distinguished by the fact that the latter in some sense can be thought of as being independent of actions. This allows us to do two things: i) check whether a set of values is consistent and ii) check whether it is in conflict with other sets of values.

A Value-based Trust Assessment Model for Multi-agent Systems

May 31, 2019

Abstract: An agent's assessment of its trust in another agent is commonly taken to be a measure of the reliability/predictability of the latter's actions. It is based on the trustor's past observations of the behaviour of the trustee and requires no knowledge of the inner workings of the trustee. However, in situations that are new or unfamiliar, past observations are of little help in assessing trust. In such cases, knowledge about the trustee can help. A particular type of knowledge is that of values - things that are important to the trustor and the trustee. In this paper, based on the premise that the more values two agents share, the more they should trust one another, we propose a simple approach to trust assessment between agents based on values, taking into account whether agents trust cautiously or boldly, and whether they depend on others in carrying out a task.

Maximizing Expected Impact in an Agent Reputation Network -- Technical Report

May 14, 2018

Abstract: Many multi-agent systems (MASs) are situated in stochastic environments. Some such systems that are based on the partially observable Markov decision process (POMDP) do not take the benevolence of other agents for granted. We propose a new POMDP-based framework which is general enough for the specification of a variety of stochastic MAS domains involving the impact of agents on each other's reputations. A unique feature of this framework is that actions are specified as either undirected (regular) or directed (towards a particular agent), and a new directed transition function is provided for modeling the effects of reputation in interactions. Assuming that an agent must maintain a good enough reputation to survive in the network, a planning algorithm is developed for an agent to select optimal actions in stochastic MASs. Preliminary evaluation is provided via an example specification and by determining the algorithm's complexity.

A New Approach for Revising Logic Programs

Mar 31, 2016

Abstract: Belief revision has been studied mainly with respect to background logics that are monotonic in character. In this paper we study belief revision when the underlying logic is non-monotonic instead, an inherently interesting problem that is underexplored. In particular, we will focus on the revision of a body of beliefs that is represented as a logic program under the answer set semantics, while the new information is also similarly represented as a logic program. Our approach is driven by the observation that, unlike in a monotonic setting where, when necessary, consistency in a revised body of beliefs is maintained by jettisoning some old beliefs, in a non-monotonic setting consistency can be restored by adding new beliefs as well. We will define a syntactic revision function and subsequently provide a representation theorem for characterising it.
