Zhiji Yang

A Safe Screening Rule with Bi-level Optimization of $\nu$ Support Vector Machine

Mar 04, 2024

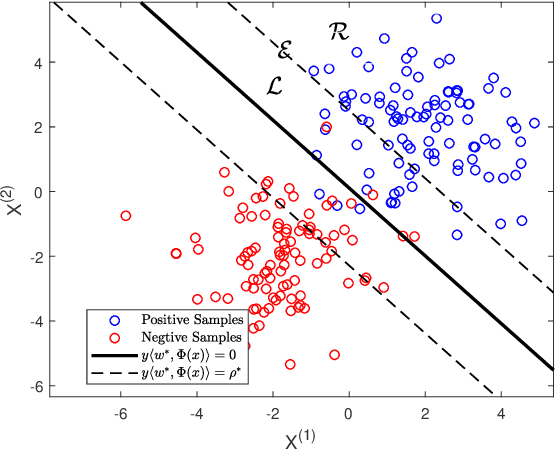

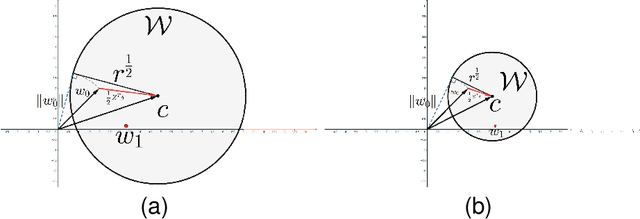

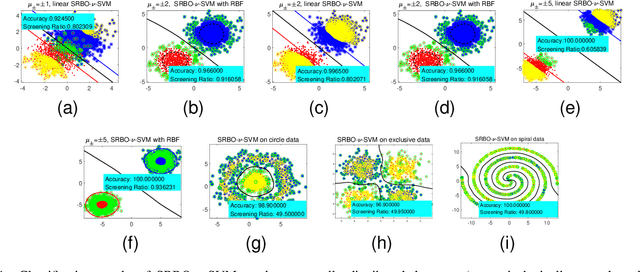

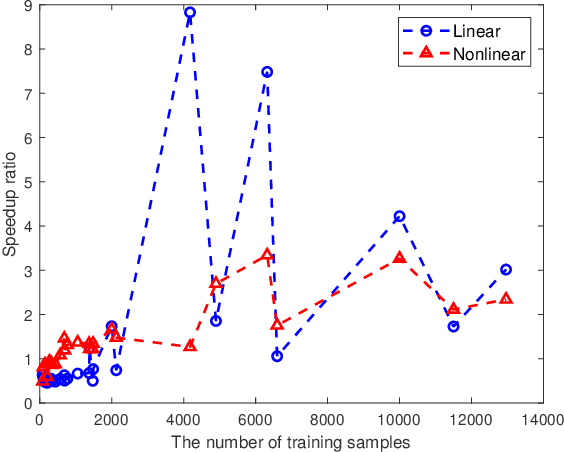

Abstract: Support vector machines (SVMs) have achieved many successes in machine learning, especially on small-sample problems. As a well-known extension of the traditional SVM, the $\nu$ support vector machine ($\nu$-SVM) has shown outstanding performance owing to its model interpretability. However, it still incurs high training overhead on large-scale problems. To address this issue, we propose a safe screening rule with bi-level optimization for $\nu$-SVM (SRBO-$\nu$-SVM), which screens out inactive samples before training and reduces the computational cost without sacrificing prediction accuracy. Our SRBO-$\nu$-SVM is rigorously derived by integrating the Karush-Kuhn-Tucker (KKT) conditions, the variational inequalities of convex problems, and the $\nu$-property. Furthermore, we develop an efficient dual coordinate descent method (DCDM) to further improve computational speed. Finally, a unified framework for SRBO is proposed to accelerate many SVM-type models, and it is successfully applied to one-class SVM. Experimental results on 6 artificial data sets and 30 benchmark data sets verify the effectiveness and safety of the proposed methods in supervised and unsupervised tasks.
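For orientation, the textbook $\nu$-SVM dual and the KKT facts that make sample screening possible can be written as follows. This is the standard formulation with $l$ training samples and kernel $k$, given here as an assumption; the paper's exact formulation and notation may differ.

$$
\min_{\alpha}\ \frac{1}{2}\sum_{i=1}^{l}\sum_{j=1}^{l}\alpha_i\alpha_j y_i y_j\,k(x_i,x_j)
\quad \text{s.t.}\quad 0\le\alpha_i\le\frac{1}{l},\qquad \sum_{i=1}^{l}\alpha_i y_i=0,\qquad \sum_{i=1}^{l}\alpha_i\ge\nu .
$$

With decision function $f$ and margin parameter $\rho$, the KKT conditions at the optimum give

$$
y_i f(x_i)>\rho \;\Rightarrow\; \alpha_i=0,
\qquad
y_i f(x_i)<\rho \;\Rightarrow\; \alpha_i=\frac{1}{l}.
$$

A sample whose $\alpha_i$ is known in advance to sit at one of these bounds does not need to be optimized over, which is the kind of sample a screening rule aims to identify before training. The $\nu$-property states that $\nu$ is an upper bound on the fraction of margin errors and a lower bound on the fraction of support vectors.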

Regularized Bilinear Discriminant Analysis for Multivariate Time Series Data

Feb 26, 2022

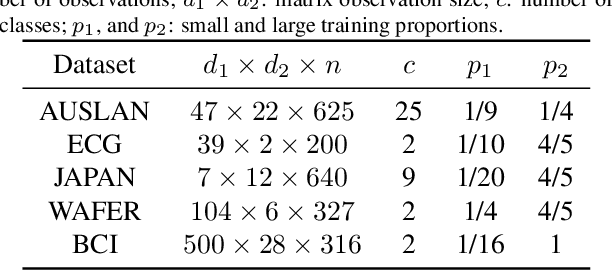

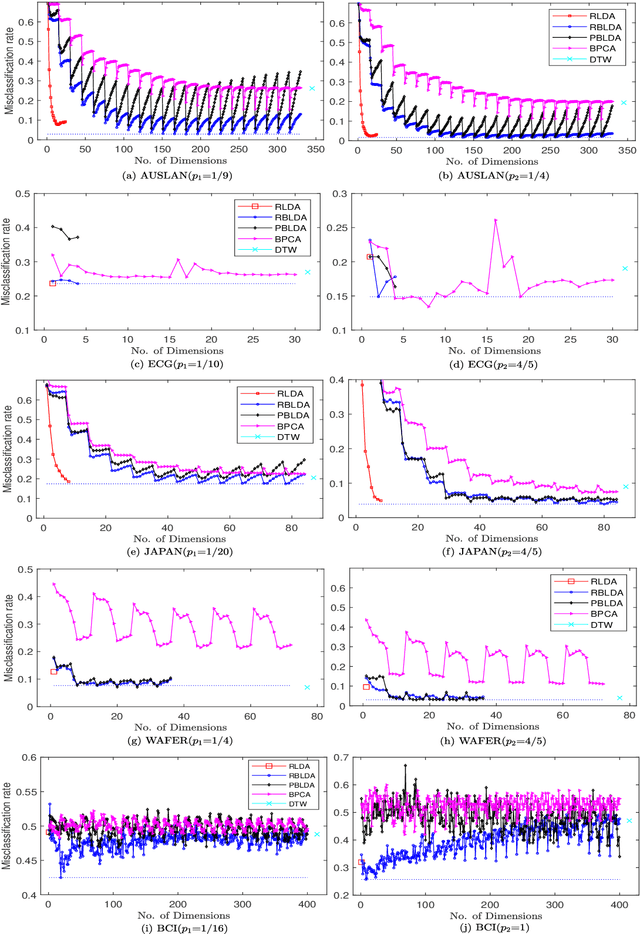

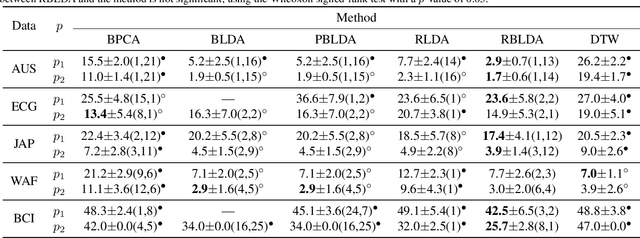

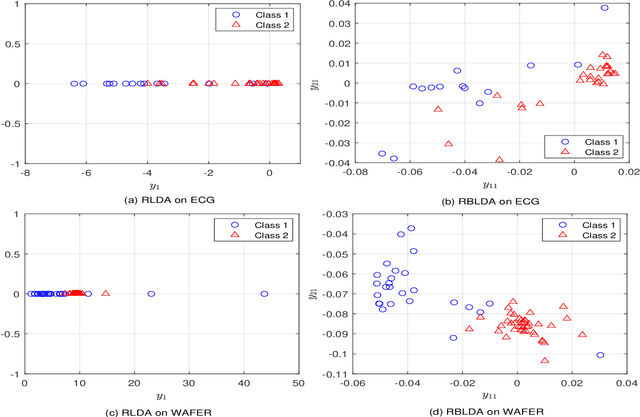

Abstract: In recent years, matrix-based or bilinear discriminant analysis (BLDA) methods have received much attention. Despite their advantages, it has been reported that the traditional vector-based regularized LDA (RLDA) is still quite competitive and can outperform BLDA on some benchmark datasets. However, this finding is mainly limited to image data. In this paper, we propose regularized BLDA (RBLDA) and further explore the comparison between RLDA and RBLDA on another type of matrix data, namely multivariate time series (MTS). Unlike image data, MTS typically consists of multiple variables measured at different time points. Although many methods for MTS data classification exist in the literature, relatively little work explores the matrix structure of MTS data. Moreover, the existing BLDA cannot be performed when one of its within-class matrices is singular. To address these two problems, we propose RBLDA for MTS data classification, where each of the two within-class matrices is regularized via one parameter. We develop an efficient implementation of RBLDA and a model selection algorithm that makes the cross-validation procedure for RBLDA efficient. Experiments on a number of real MTS data sets are conducted to evaluate the proposed algorithm and compare RBLDA with several closely related methods, including RLDA and BLDA. The results reveal that RBLDA achieves the best overall recognition performance and that the proposed model selection algorithm is efficient. Moreover, RBLDA can produce better visualization of MTS data than RLDA.
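As a rough illustration of why a single regularization parameter removes the singularity problem, the sketch below shrinks a singular scatter matrix toward a scaled identity. The shrinkage form, the function names `regularize` and `det2`, and the value `gamma = 0.1` are assumptions made for this example; the abstract does not specify the form RBLDA actually uses.

```python
def regularize(S, gamma):
    """Shrink square matrix S toward (trace(S)/d) * I.

    Assumed ridge-type form for illustration only; not taken from the paper.
    gamma in (0, 1] controls the amount of shrinkage.
    """
    d = len(S)
    mu = sum(S[i][i] for i in range(d)) / d  # average eigenvalue of S
    return [[(1 - gamma) * S[i][j] + (gamma * mu if i == j else 0.0)
             for j in range(d)] for i in range(d)]


def det2(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


# A rank-one (hence singular) 2x2 within-class scatter built from one deviation vector.
v = [1.0, 2.0]
S = [[v[i] * v[j] for j in range(2)] for i in range(2)]

S_reg = regularize(S, 0.1)

print(det2(S))          # zero: S cannot be inverted
print(det2(S_reg) > 0)  # True: the regularized matrix is invertible
```

In a bilinear setting the same operation would be applied separately to the row-wise and column-wise within-class matrices, each with its own parameter.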
