Zhicong Lin

CLIP-driven Outliers Synthesis for few-shot OOD detection

Mar 30, 2024

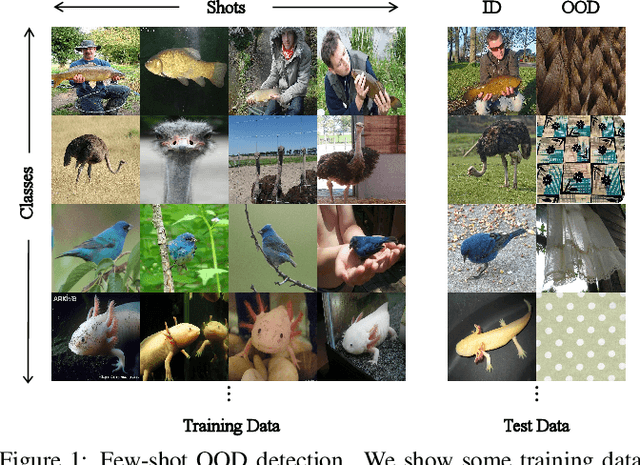

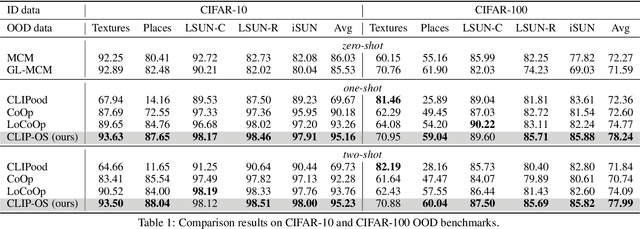

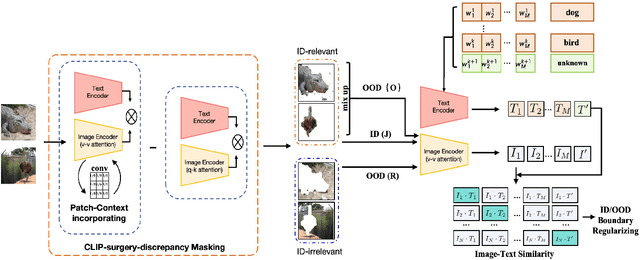

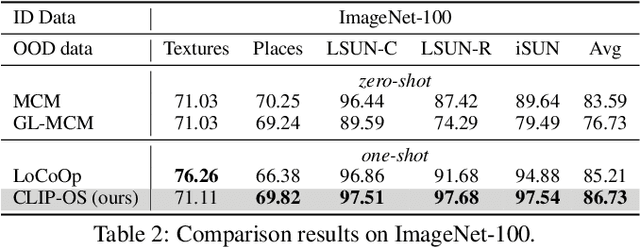

Abstract: Few-shot OOD detection focuses on recognizing out-of-distribution (OOD) images that belong to classes unseen during training, using only a small number of labeled in-distribution (ID) images. To date, a mainstream strategy builds on large-scale vision-language models such as CLIP. However, these methods overlook a crucial issue: the lack of reliable OOD supervision information, which can lead to biased boundaries between ID and OOD data. To tackle this problem, we propose CLIP-driven Outliers Synthesis (CLIP-OS). First, CLIP-OS enhances the perception of patch-level features with a newly proposed patch uniform convolution, and adaptively obtains the proportion of ID-relevant information by employing CLIP-surgery-discrepancy, thus separating ID-relevant from ID-irrelevant information. Next, CLIP-OS synthesizes reliable OOD data by mixing up ID-relevant features from different classes to provide OOD supervision information. Finally, CLIP-OS leverages the synthetic OOD samples through unknown-aware prompt learning to enhance the separability of ID and OOD. Extensive experiments across multiple benchmarks demonstrate that CLIP-OS achieves superior few-shot OOD detection capability.
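
The synthesis step described in the abstract (mixing up ID-relevant features from different classes) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `synthesize_outliers`, the plain-list feature vectors, and the mixing-coefficient range of 0.3 to 0.7 are all assumptions made for illustration, and the abstract does not specify how the mixing is actually weighted.

```python
import random


def synthesize_outliers(features, labels, num_outliers, seed=0):
    """Mixup-style sketch: blend feature vectors drawn from two different classes.

    `features` is a list of equal-length lists of floats and `labels` gives the
    class of each vector. All names and the lambda range are illustrative
    assumptions, not details taken from the paper.
    """
    rng = random.Random(seed)

    # Group feature vectors by their class label.
    by_class = {}
    for feat, label in zip(features, labels):
        by_class.setdefault(label, []).append(feat)

    classes = sorted(by_class)
    if len(classes) < 2:
        raise ValueError("need at least two classes to mix")

    outliers = []
    for _ in range(num_outliers):
        class_a, class_b = rng.sample(classes, 2)  # two distinct classes
        feat_a = rng.choice(by_class[class_a])
        feat_b = rng.choice(by_class[class_b])
        # Assumed range: keep the blend away from either pure class.
        lam = rng.uniform(0.3, 0.7)
        outliers.append([lam * a + (1.0 - lam) * b for a, b in zip(feat_a, feat_b)])
    return outliers
```

Each synthetic vector is a convex combination of two vectors from different classes, so it lies between class clusters in feature space, which is where this sketch assumes useful pseudo-OOD samples would be placed.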

A Novel Low-cost FPGA-based Real-time Object Tracking System

Apr 22, 2018

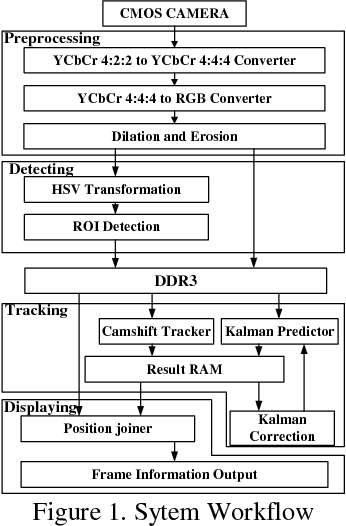

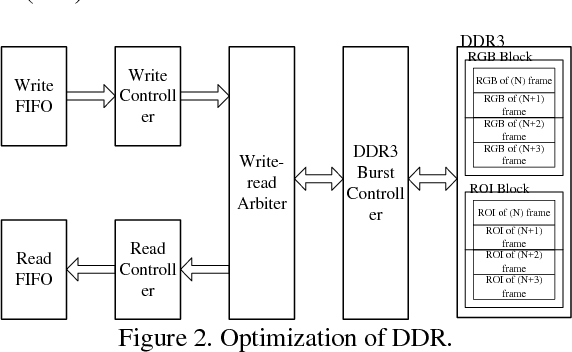

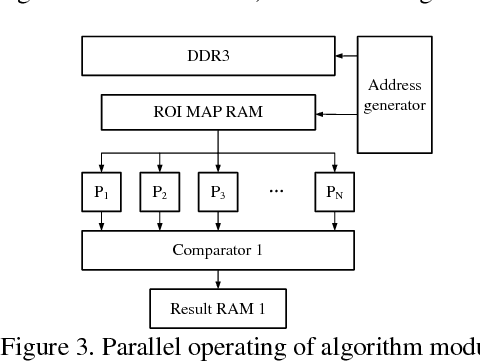

Abstract: Current CPU- or GPU-based visual object tracking systems have high computational cost and consume a prohibitive amount of power. Therefore, in this paper, to reduce the computational burden of the Camshift algorithm, we propose a novel visual object tracking algorithm that exploits the properties of a binary classifier and a Kalman predictor. Moreover, we present a low-cost FPGA-based real-time object tracking hardware architecture. Extensive evaluations on the OTB benchmark demonstrate that the proposed system offers compelling real-time performance, stability, and robustness. The evaluation results show that the accuracy of our algorithm is about 48%, and the average speed is about 309 frames per second.
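
To make the role of a Kalman predictor in tracking concrete, here is a minimal sketch of a one-dimensional constant-velocity Kalman filter that predicts the next position of a target from past measurements. The abstract does not give the paper's motion model, noise settings, or how the predictor interacts with Camshift and the binary classifier, so the class name `ConstantVelocityKalman`, the constant-velocity assumption, and every parameter value below are hypothetical.

```python
class ConstantVelocityKalman:
    """1-D constant-velocity Kalman filter (illustrative sketch only).

    State is (position, velocity). The noise values q and r and the initial
    covariance are assumed defaults, not values from the paper.
    """

    def __init__(self, dt=1.0, q=1e-3, r=1.0, init_var=1000.0):
        self.dt, self.q, self.r = dt, q, r
        self.pos, self.vel = 0.0, 0.0
        # 2x2 state covariance stored as four scalars.
        self.p00, self.p01, self.p10, self.p11 = init_var, 0.0, 0.0, init_var

    def predict(self):
        """Advance the state by one time step and return the predicted position."""
        dt = self.dt
        self.pos += dt * self.vel
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = q * I.
        a = self.p00 + dt * self.p10
        b = self.p01 + dt * self.p11
        self.p00 = a + dt * b + self.q
        self.p01 = b
        self.p10 = self.p10 + dt * self.p11
        self.p11 = self.p11 + self.q
        return self.pos

    def update(self, z):
        """Correct the state with a measured position z."""
        innovation = z - self.pos
        s = self.p00 + self.r                 # innovation variance
        k0, k1 = self.p00 / s, self.p10 / s   # Kalman gain
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P <- (I - K H) P, with H = [1, 0].
        p00, p01 = self.p00, self.p01
        self.p00 = (1.0 - k0) * p00
        self.p01 = (1.0 - k0) * p01
        self.p10 = self.p10 - k1 * p00
        self.p11 = self.p11 - k1 * p01
```

In a tracker, a call to `predict()` would typically supply the centre of the search window for the next frame, and `update()` would be called once the target has been located in that frame; one such filter per image axis is a common arrangement.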
