Zhenya Yang

GenieDrive: Towards Physics-Aware Driving World Model with 4D Occupancy Guided Video Generation

Dec 14, 2025

Abstract: A physics-aware driving world model is essential for driving planning, out-of-distribution data synthesis, and closed-loop evaluation. However, existing methods often rely on a single diffusion model to map driving actions directly to videos, which makes learning difficult and leads to physically inconsistent outputs. To overcome these challenges, we propose GenieDrive, a novel framework for physics-aware driving video generation. Our approach starts by generating 4D occupancy, which serves as a physics-informed foundation for subsequent video generation. 4D occupancy contains rich physical information, including high-resolution 3D structures and dynamics. To compress such high-resolution occupancy effectively, we propose a VAE that encodes occupancy into a latent tri-plane representation, reducing the latent size to only 58% of that used in previous methods. We further introduce Mutual Control Attention (MCA) to accurately model the influence of control on occupancy evolution, and we jointly train the VAE and the subsequent prediction module end-to-end to maximize forecasting accuracy. Together, these designs yield a 7.2% improvement in forecasting mIoU at an inference speed of 41 FPS, while using only 3.47 M parameters. Additionally, we introduce Normalized Multi-View Attention in the video generation model to generate multi-view driving videos guided by our 4D occupancy, significantly improving video quality with a 20.7% reduction in FVD. Experiments demonstrate that GenieDrive enables highly controllable, multi-view consistent, and physics-aware driving video generation.
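
For readers unfamiliar with the forecasting metric quoted above, the following is a minimal sketch of how mean intersection-over-union (mIoU) is commonly computed on labeled voxels. It is not taken from GenieDrive; the function name `mean_iou`, the flat list layout, and the class indices are assumptions made for illustration.

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Average per-class IoU over flattened voxel labels.

    Hypothetical helper: classes absent from both prediction and target
    are skipped so they do not distort the mean.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for c in range(num_classes):
        # Voxels where both agree on class c.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Voxels where either one says class c.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with three hypothetical classes (0, 1, 2) on four voxels.
score = mean_iou([0, 1, 1, 2], [0, 1, 2, 2], num_classes=3)
```

In this toy case the per-class IoUs are 1, 0.5, and 0.5, so the mean is 2/3.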

ClipGS: Clippable Gaussian Splatting for Interactive Cinematic Visualization of Volumetric Medical Data

Jul 09, 2025

Abstract: The visualization of volumetric medical data is crucial for enhancing diagnostic accuracy and improving surgical planning and education. Cinematic rendering techniques significantly enrich this process by providing high-quality visualizations that convey intricate anatomical details, thereby facilitating better understanding and decision-making in medical contexts. However, high computing cost and low rendering speed hinder the interactive visualization required in practical applications. In this paper, we introduce ClipGS, an innovative Gaussian splatting framework with clipping-plane support for interactive cinematic visualization of volumetric medical data. To address the challenges posed by dynamic interactions, we propose a learnable truncation scheme that automatically adjusts the visibility of Gaussian primitives in response to the clipping plane. We also design an adaptive adjustment model to dynamically adjust the deformation of Gaussians and refine the rendering performance. We validate our method on five volumetric medical datasets (including CT and anatomical slice data) and reach an average rendering quality of 36.635 PSNR at 156 FPS with a 16.1 MB model size, outperforming state-of-the-art methods in rendering quality and efficiency.
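
To make the role of the clipping plane concrete, here is a minimal sketch of a plain geometric visibility test on Gaussian centers. It is a hard half-space cut, not the learnable truncation scheme described in the abstract; the names `signed_distance` and `visible_mask` and the convention that the normal points toward the kept side are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def signed_distance(point: Vec3, plane_point: Vec3, plane_normal: Vec3) -> float:
    """Signed distance from a point to a plane (positive on the normal side)."""
    length = math.sqrt(sum(n * n for n in plane_normal))
    if length == 0.0:
        raise ValueError("plane_normal must be non-zero")
    offset = sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal))
    return offset / length


def visible_mask(centers: Sequence[Vec3], plane_point: Vec3, plane_normal: Vec3) -> List[bool]:
    """Keep a primitive if its center lies on or in front of the plane.

    Assumption: a hard cut on centers only. A real renderer would also
    have to handle primitives whose extent straddles the plane.
    """
    return [signed_distance(c, plane_point, plane_normal) >= 0.0 for c in centers]


# Plane through the origin with a (non-unit) normal along +z.
mask = visible_mask(
    [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (0.0, 0.0, 0.0)],
    plane_point=(0.0, 0.0, 0.0),
    plane_normal=(0.0, 0.0, 2.0),
)
```

A hard cut like this ignores Gaussians that overlap the plane, which is presumably part of what a learned visibility adjustment is meant to address.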

Efficient Data-driven Scene Simulation using Robotic Surgery Videos via Physics-embedded 3D Gaussians

May 02, 2024

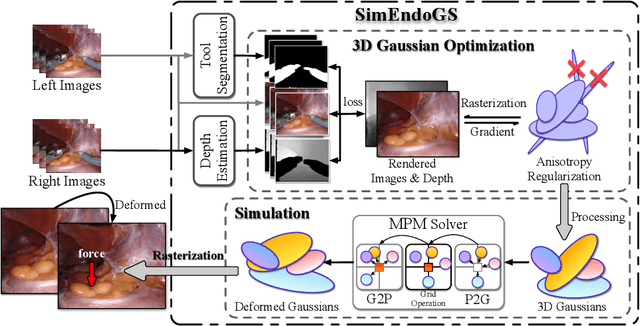

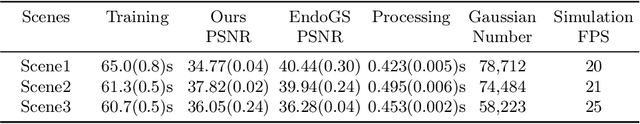

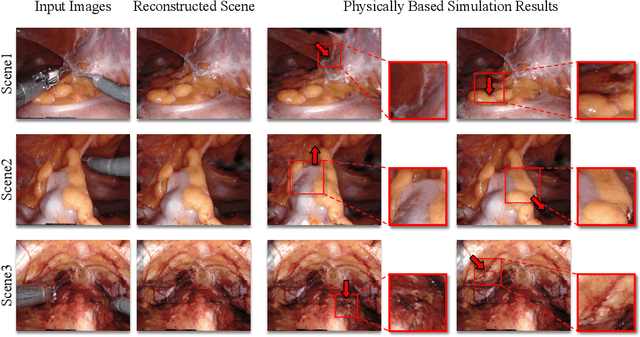

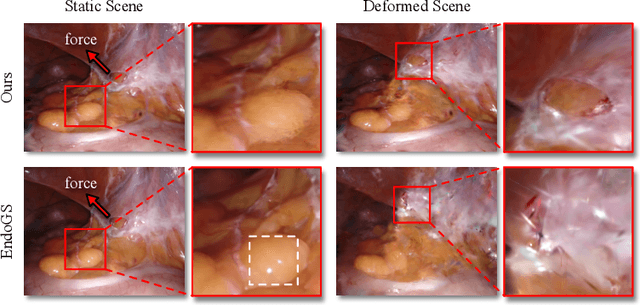

Abstract: Surgical scene simulation plays a crucial role in surgical education and simulator-based robot learning. Traditional approaches to creating surgical scene environments involve a labor-intensive process in which designers hand-craft tissue models with textures and geometries for soft body simulations. This manual approach is not only time-consuming but also limited in scalability and realism. In contrast, data-driven simulation offers a compelling alternative. It has the potential to automatically reconstruct 3D surgical scenes from real-world surgical video data, followed by the application of soft body physics. This area, however, is relatively uncharted. In our research, we introduce 3D Gaussians as a learnable representation for surgical scenes, learned from stereo endoscopic video. To prevent over-fitting and ensure the geometrical correctness of these scenes, we incorporate depth supervision and anisotropy regularization into the Gaussian learning process. Furthermore, we apply the Material Point Method, integrated with physical properties, to the 3D Gaussians to achieve realistic scene deformations. Our method was evaluated on our in-house and public surgical video datasets. Results show that it can reconstruct and simulate surgical scenes from endoscopic videos efficiently, taking only a few minutes to reconstruct a surgical scene, and produce both visually and physically plausible deformations at a speed approaching real-time. The results demonstrate the great potential of our proposed method to enhance the efficiency and variety of simulations available for surgical education and robot learning.
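
The abstract does not give the form of its anisotropy regularization. As an illustration only, the sketch below uses one form that appears in Gaussian-splatting work: penalize each Gaussian whose longest-to-shortest axis ratio exceeds a threshold. The function name `anisotropy_penalty` and the default threshold `max_ratio=2.0` are assumptions, not values from the paper.

```python
from typing import Sequence, Tuple

Scale3 = Tuple[float, float, float]


def anisotropy_penalty(scales: Sequence[Scale3], max_ratio: float = 2.0) -> float:
    """Mean of max(longest/shortest axis, max_ratio) - max_ratio over all Gaussians.

    Assumptions: every scale is strictly positive, and max_ratio is a
    hypothetical threshold chosen for this example.
    """
    if not scales:
        return 0.0
    total = 0.0
    for s in scales:
        ratio = max(s) / min(s)
        # Zero penalty while the Gaussian is no more elongated than max_ratio.
        total += max(ratio, max_ratio) - max_ratio
    return total / len(scales)


# One isotropic Gaussian (no penalty) and one needle-like Gaussian (ratio 4).
penalty = anisotropy_penalty([(1.0, 1.0, 1.0), (4.0, 1.0, 1.0)], max_ratio=2.0)
```

A term of this kind discourages thin, needle-like Gaussians, which tend to produce spiky artifacts once the scene is deformed.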

Efficient Physically-based Simulation of Soft Bodies in Embodied Environment for Surgical Robot

Feb 02, 2024

Abstract: Surgical robot simulation platforms play a crucial role in enhancing training efficiency and advancing research on robot learning. Much effort has been made by scholars to develop open-source surgical robot simulators to facilitate research. We previously developed SurRoL, an open-source, da Vinci Research Kit (dVRK) compatible, interactive embodied environment for robot learning. Despite these advancements, the simulation of soft bodies remains a major challenge for the open-source platforms available for surgical robotics. To this end, we develop an interactive, physically based soft body simulation framework and integrate it into SurRoL. Specifically, we use a high-performance adaptation of the Material Point Method (MPM) along with the Neo-Hookean model to represent the deformable tissue. Lagrangian particles track the motion and deformation of the soft body throughout the simulation, and Eulerian grids discretize space and facilitate the calculation of forces, velocities, and other physical quantities. We also employ an efficient collision detection and handling strategy to simulate the interaction between the soft body and the rigid tool of the surgical robot. By using the Taichi programming language, our implementation harnesses parallel computing to boost simulation speed. Experimental results show that our platform can simulate soft bodies efficiently with strong physical interpretability and plausible visual effects. These new features in SurRoL enable the efficient simulation of surgical tasks involving soft tissue manipulation and pave the way for further investigation of surgical robot learning. The code will be released in a new branch of the SurRoL GitHub repo.
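
As a rough illustration of the constitutive step inside an MPM solver, the sketch below evaluates a compressible Neo-Hookean stress for a single particle in 2D, using the widely used form P = mu (F - F^{-T}) + lambda ln(J) F^{-T}. The abstract does not say which Neo-Hookean variant the authors use, so this form, the 2D setting, and the helper names `lame_parameters` and `neo_hookean_pk1` are all assumptions. It is written in plain Python rather than Taichi to keep it dependency-free.

```python
import math
from typing import List, Tuple

Mat2 = List[List[float]]


def lame_parameters(youngs_modulus: float, poisson_ratio: float) -> Tuple[float, float]:
    """Convert Young's modulus E and Poisson's ratio nu to Lame parameters (mu, lambda)."""
    mu = youngs_modulus / (2.0 * (1.0 + poisson_ratio))
    lam = youngs_modulus * poisson_ratio / ((1.0 + poisson_ratio) * (1.0 - 2.0 * poisson_ratio))
    return mu, lam


def neo_hookean_pk1(F: Mat2, mu: float, lam: float) -> Mat2:
    """First Piola-Kirchhoff stress for a 2x2 deformation gradient F.

    Assumed model: P = mu * (F - F^{-T}) + lam * ln(J) * F^{-T}, J = det(F).
    """
    (a, b), (c, d) = F
    J = a * d - b * c
    if J <= 0.0:
        raise ValueError("deformation gradient must have positive determinant")
    # Inverse transpose of a 2x2 matrix, written out explicitly.
    F_inv_T = [[d / J, -c / J], [-b / J, a / J]]
    log_J = math.log(J)
    return [
        [mu * (F[i][j] - F_inv_T[i][j]) + lam * log_J * F_inv_T[i][j] for j in range(2)]
        for i in range(2)
    ]


mu, lam = lame_parameters(youngs_modulus=1.0, poisson_ratio=0.25)
# An undeformed particle (F = identity) should carry zero stress.
rest_stress = neo_hookean_pk1([[1.0, 0.0], [0.0, 1.0]], mu, lam)
# A particle stretched to twice its length along x.
stretched_stress = neo_hookean_pk1([[2.0, 0.0], [0.0, 1.0]], mu, lam)
```

In a full MPM step, a stress like this would be evaluated per Lagrangian particle and scattered to the Eulerian grid as forces before the grid velocities are updated and gathered back to the particles.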
