Zhentai Lu

Unsupervised Deformable Medical Image Registration via Pyramidal Residual Deformation Fields Estimation

Apr 16, 2020

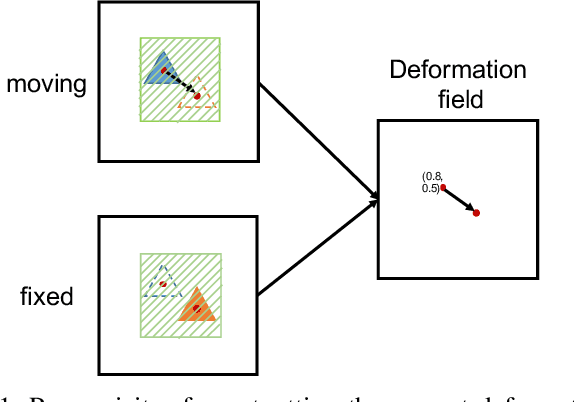

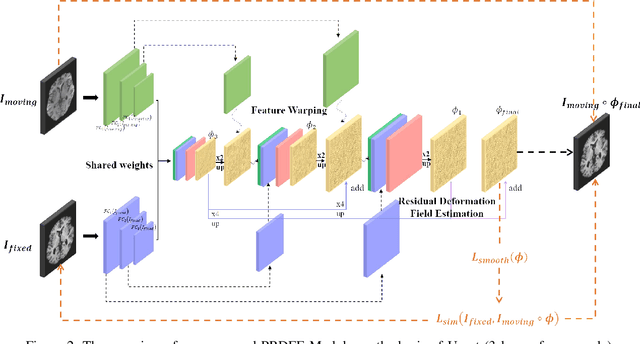

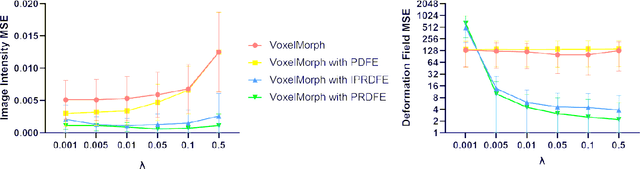

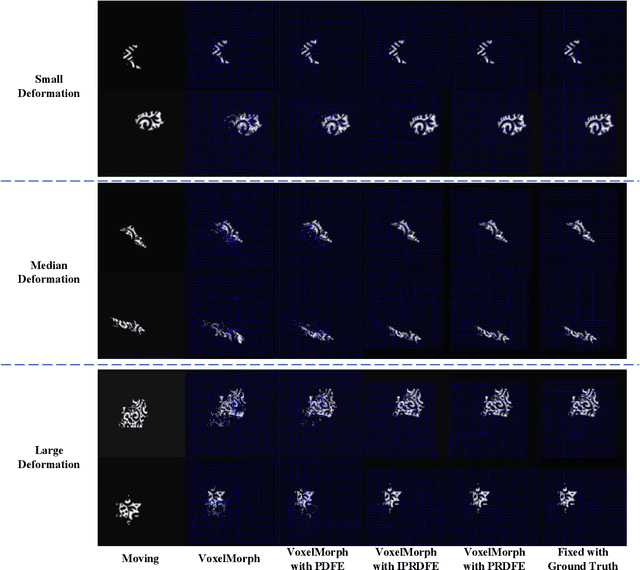

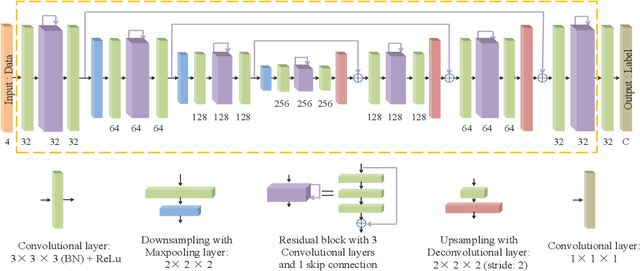

Abstract: Deformation field estimation is an important and challenging problem in many medical image registration applications. In recent years, deep learning has become a promising approach for simplifying registration problems and has gradually been applied to medical image registration. However, most existing deep learning registration methods overlook one problem: when the receptive field cannot cover the corresponding features in the moving image and the fixed image, the network cannot output accurate displacement values. In fact, because of the limited receptive field, a 3 x 3 kernel has difficulty covering the corresponding features at high/original resolution. Multi-resolution and multi-convolution techniques can mitigate this problem but do not avoid it. In this study, we construct pyramidal feature sets on the moving and fixed images and use the warped moving features and the fixed features to estimate a "residual" deformation field at each scale; we call this the Pyramidal Residual Deformation Field Estimation module (PRDFE-Module). The "total" deformation field at each scale is computed by upsampling and weighted summing of the "residual" deformation fields from all previous scales. This effectively and accurately transfers the deformation fields from low resolution to high resolution, and the total field is used to warp the moving features at each scale. Results on simulated and real brain data show that our method improves registration accuracy and the plausibility of the deformation field.
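The accumulation of "residual" fields into a "total" field described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `upsample_field` and `total_fields`, the single scalar `weight`, the factor-of-2 pyramid, nearest-neighbour upsampling, and the rescaling of pixel displacements on upsampling are all assumptions made for illustration.

```python
import numpy as np

def upsample_field(field, factor):
    """Nearest-neighbour upsample a (2, H, W) displacement field by an integer factor.

    Assumption: displacements are measured in pixels, so their magnitudes
    are multiplied by the same factor when the grid gets finer.
    """
    up = np.repeat(np.repeat(field, factor, axis=1), factor, axis=2)
    return up * factor

def total_fields(residuals, weight=1.0):
    """Accumulate residual fields, coarsest scale first.

    residuals: list of (2, H_k, W_k) arrays, each level doubling the resolution.
    weight:    hypothetical scalar weight applied to the upsampled coarser field.
    Returns one total field per scale.
    """
    totals = []
    total = None
    for res in residuals:
        if total is None:
            total = res.copy()  # coarsest scale: total equals the residual
        else:
            # Carry the coarser total up one level, then add this scale's residual.
            total = weight * upsample_field(total, 2) + res
        totals.append(total)
    return totals

# Usage: three scales of a toy pyramid (2x2, 4x4, 8x8).
residuals = [np.ones((2, 2, 2)), np.ones((2, 4, 4)), np.ones((2, 8, 8))]
totals = total_fields(residuals)
print([t.shape for t in totals])
```

In a full pipeline, each total field would be used to warp the moving features at its scale before the next residual is estimated; that warping step is omitted here.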

One-pass Multi-task Networks with Cross-task Guided Attention for Brain Tumor Segmentation

Jun 05, 2019

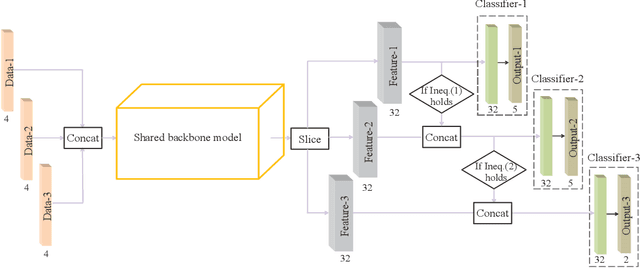

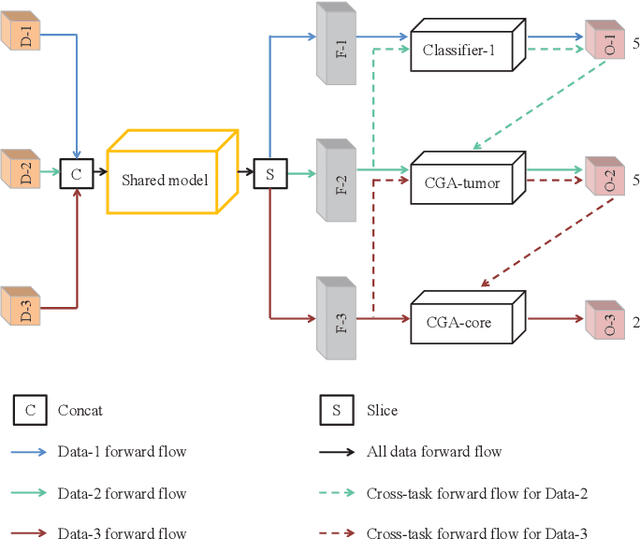

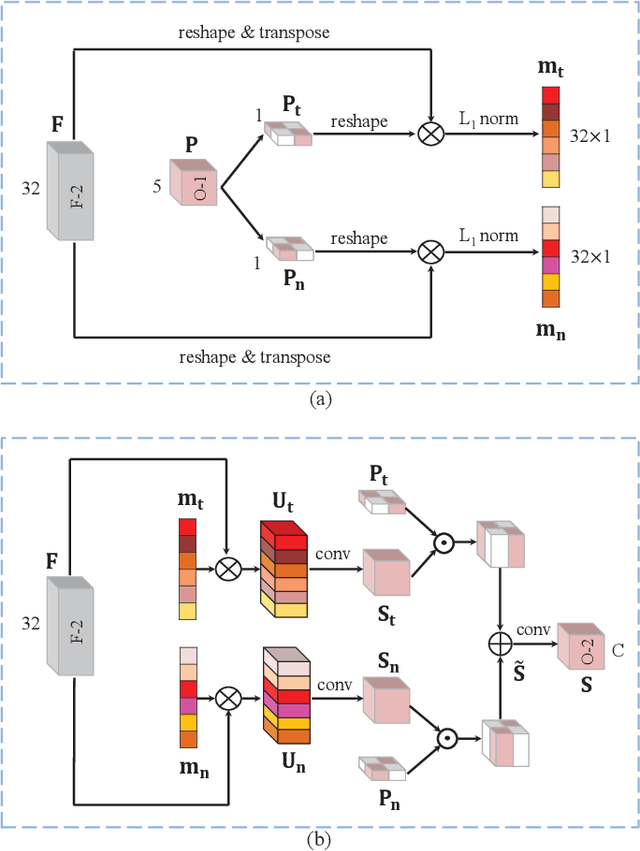

Abstract: Class imbalance has been one of the major challenges in medical image segmentation. The model cascade (MC) strategy significantly alleviates the class imbalance issue. Despite its outstanding performance, this method leads to undesired system complexity and ignores the correlation among the models. To address these flaws of MC, we propose a lightweight deep model, the One-pass Multi-task Network (OM-Net), which handles class imbalance better than MC and requires only one-pass computation for brain tumor segmentation. First, OM-Net integrates the separate segmentation tasks into one deep model. Second, to optimize OM-Net more effectively, we exploit the correlation among tasks to design an online training data transfer strategy and a curriculum learning-based training strategy. Third, we further propose sharing prediction results between tasks, which enables us to design a cross-task guided attention (CGA) module. Guided by the prediction results of the previous task, CGA adaptively recalibrates channel-wise feature responses based on category-specific statistics. Finally, a simple yet effective post-processing method is introduced to refine the segmentation results of the proposed attention network. Extensive experiments are performed to justify the effectiveness of the proposed techniques. Most impressively, we achieve state-of-the-art performance on the BraTS 2015 and BraTS 2017 datasets. With the proposed approaches, we also won joint third place in the BraTS 2018 challenge among 64 participating teams. We will make the code publicly available at https://github.com/chenhong-zhou/OM-Net.
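The idea behind CGA, recalibrating channels using statistics gathered per predicted category, can be sketched roughly as follows. This is a simplified illustration and not the OM-Net code: the function name `category_guided_recalibration` is hypothetical, only two categories (inside and outside the previous task's predicted mask) are assumed, and a plain sigmoid of the channel means stands in for whatever learned layers the actual module uses.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def category_guided_recalibration(features, prev_mask):
    """Rescale channels separately for each category predicted by a previous task.

    features:  (C, H, W) float array of feature maps.
    prev_mask: (H, W) boolean array, the previous task's prediction (assumed binary).
    Returns an array of the same shape as features.
    """
    out = np.empty_like(features)
    # The two regions partition the image, so every pixel is written exactly once.
    for region in (prev_mask, ~prev_mask):
        if not region.any():
            continue
        # Category-specific statistics: per-channel mean over this region only.
        stats = features[:, region].mean(axis=1)  # shape (C,)
        # Assumption: a sigmoid gate replaces the learned excitation layers.
        gate = sigmoid(stats)
        out[:, region] = features[:, region] * gate[:, None]
    return out

# Usage: 3 channels on a 4x4 grid, previous task marks the top half.
feats = np.random.rand(3, 4, 4)
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :] = True
print(category_guided_recalibration(feats, mask).shape)
```

The point of the sketch is only that pooling is restricted to each predicted category rather than taken over the whole feature map, so channels can be weighted differently inside and outside the predicted region.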
