Zhenping Xia

Two-level Graph Network for Few-Shot Class-Incremental Learning

Mar 24, 2023

Abstract: Few-shot class-incremental learning (FSCIL) aims to design machine learning algorithms that can continually learn new concepts from a few data points without forgetting knowledge of old classes. The difficulty is that limited data from new classes not only leads to significant overfitting but also exacerbates the notorious catastrophic forgetting problem. However, existing FSCIL methods ignore the semantic relationships at the sample level and the class level. In this paper, we design a two-level graph network for FSCIL named Sample-level and Class-level Graph Neural Network (SCGN). Specifically, a pseudo-incremental learning paradigm is designed in SCGN, which synthesizes virtual few-shot tasks as new tasks to optimize the SCGN model parameters in advance. The sample-level graph network uses the relationships among a few samples to aggregate similar samples and obtain refined class-level features. The class-level graph network aims to mitigate the semantic conflict between the prototype features of new classes and old classes. SCGN builds these two-level graph networks so that the latent semantics of each few-shot class can be effectively represented in FSCIL. Experiments on three popular benchmark datasets show that our method significantly outperforms the baselines and sets new state-of-the-art results by a remarkable margin.

Centroid Distance Distillation for Effective Rehearsal in Continual Learning

Mar 06, 2023

Abstract: Rehearsal, that is, retraining on a small stored subset of data from old tasks, has proven effective against catastrophic forgetting in continual learning. However, because the sampled data may be strongly biased relative to the original dataset, retraining on it is susceptible to driving continual domain drift of old tasks in feature space, resulting in forgetting. In this paper, we focus on tackling the continual domain drift problem with centroid distance distillation. First, we propose a centroid caching mechanism that samples data points based on constructed centroids to reduce the sample bias in rehearsal. Then, we present a centroid distance distillation that stores only the centroid distances to reduce the continual domain drift. Experiments on four continual learning datasets show the superiority of the proposed method and that the continual domain drift can be reduced.
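The idea of storing only centroid distances and penalizing their change can be illustrated with a minimal sketch. It is not the paper's implementation: the per-class mean as centroid, the Euclidean metric, the mean-squared penalty, and all function names (`centroid`, `pairwise_distances`, `distance_distillation_loss`) are assumptions made here for illustration.

```python
import math


def centroid(features):
    """Mean vector of a list of equal-length feature vectors (assumed centroid definition)."""
    dim = len(features[0])
    return [sum(f[i] for f in features) / len(features) for i in range(dim)]


def pairwise_distances(centroids):
    """Matrix of Euclidean distances between every pair of centroids."""
    n = len(centroids)
    return [[math.dist(centroids[i], centroids[j]) for j in range(n)]
            for i in range(n)]


def distance_distillation_loss(stored, current):
    """Mean squared difference between stored and current distance matrices.

    `stored` would be cached when a task is first learned; `current` is
    recomputed from the drifting feature space. Only the distances are
    kept, not the centroids or the original features.
    """
    n = len(stored)
    total = sum((stored[i][j] - current[i][j]) ** 2
                for i in range(n) for j in range(n))
    return total / (n * n)


# Hypothetical usage: two classes whose features drift after a later task.
old = [centroid([[0.0, 0.0], [2.0, 0.0]]), centroid([[3.0, 4.0], [3.0, 4.0]])]
new = [centroid([[0.0, 0.0], [2.0, 2.0]]), centroid([[3.0, 4.0], [5.0, 4.0]])]
loss = distance_distillation_loss(pairwise_distances(old), pairwise_distances(new))
print(loss)
```

In a training loop, a term like this would be added to the task loss so that the relative geometry of old-task centroids is preserved while new tasks are learned.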

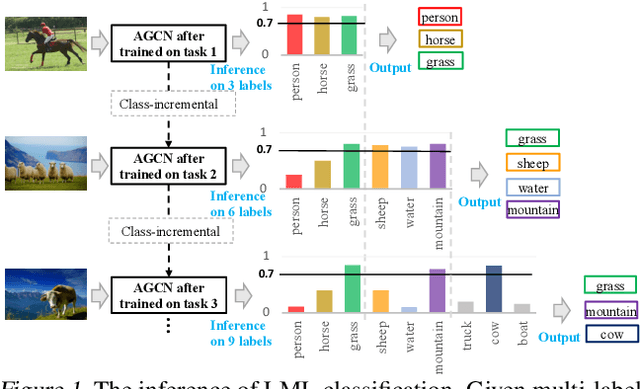

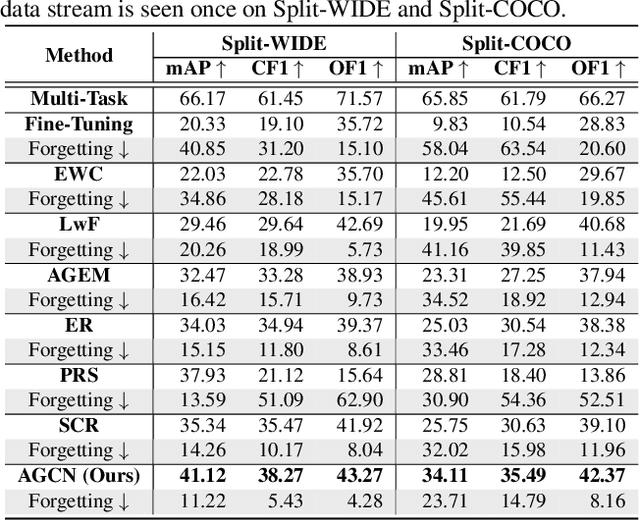

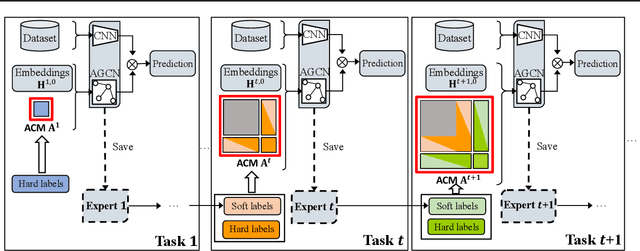

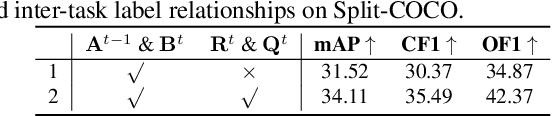

Class-Incremental Lifelong Learning in Multi-Label Classification

Jul 16, 2022

Abstract: Existing class-incremental lifelong learning studies consider only single-label data, which limits their adaptation to multi-label data. This paper studies Lifelong Multi-Label (LML) classification, which builds an online class-incremental classifier on a sequential multi-label classification data stream. Training on data with partial labels in LML classification may result in more serious catastrophic forgetting of old classes. To solve this problem, the study proposes an Augmented Graph Convolutional Network (AGCN) with an Augmented Correlation Matrix (ACM) built across sequential partial-label tasks. Results on two benchmarks show that the method is effective for LML classification and reduces forgetting.

Associating Multi-Scale Receptive Fields for Fine-grained Recognition

May 19, 2020

Abstract: Extracting and fusing part features has become the key to fine-grained image recognition. Recently, the Non-local (NL) module has shown excellent improvement in image recognition. However, it lacks a mechanism to model the interactions between multi-scale part features, which is vital for fine-grained recognition. In this paper, we propose a novel cross-layer non-local (CNL) module that associates multi-scale receptive fields through two operations. First, CNL computes correlations between the features of a query layer and those of all response layers. Second, all response features are weighted according to the correlations and added to the query features. Owing to the interactions of cross-layer features, our model builds spatial dependencies among multi-level layers and learns more discriminative features. In addition, the aggregation cost can be reduced by setting a low-dimensional deep layer as the query layer. Experiments show that our model matches or surpasses state-of-the-art results on three benchmark datasets for fine-grained classification. Our code can be found at github.com/FouriYe/CNL-ICIP2020.
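The two CNL operations described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the released code: features are treated as lists of position vectors that already share one channel dimension, correlations are taken as a softmax over dot products, and the learned embeddings a real module would use are omitted. The function name `cross_layer_non_local` is hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_layer_non_local(query, responses):
    """Aggregate response-layer features into query-layer features.

    query:     list of position vectors from the query layer
    responses: list of layers, each a list of position vectors
    Assumes every vector has the same length (no learned projections).
    """
    out = []
    for q in query:
        updated = list(q)
        for layer in responses:
            # Step 1: correlations between this query position and
            # every position of the response layer.
            weights = softmax([dot(q, r) for r in layer])
            # Step 2: weight the response features by the correlations
            # and add them to the query feature.
            for w, r in zip(weights, layer):
                for i, v in enumerate(r):
                    updated[i] += w * v
        out.append(updated)
    return out


# Hypothetical usage: one deep query layer with 2 positions and
# two shallower response layers with 3 and 4 positions.
query = [[1.0, 0.0], [0.0, 1.0]]
responses = [
    [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]],
    [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0], [0.0, 0.0]],
]
print(cross_layer_non_local(query, responses))
```

The cost of the correlation step scales with the number of query positions times the number of response positions, which is consistent with the abstract's remark that choosing a low-dimensional deep layer as the query layer reduces the aggregation cost.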
