Zhengze Zhou

Examining spatial heterogeneity of ridesourcing demand determinants with explainable machine learning

Sep 16, 2022

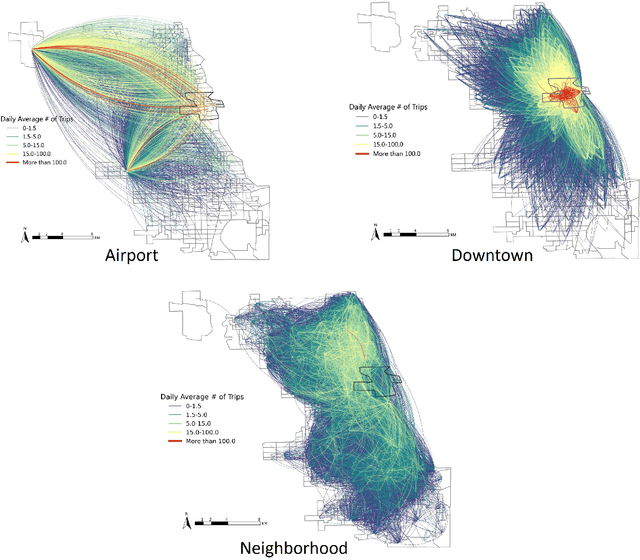

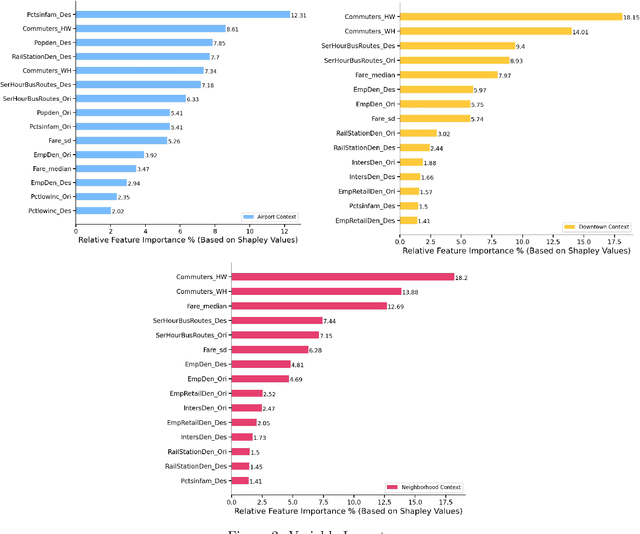

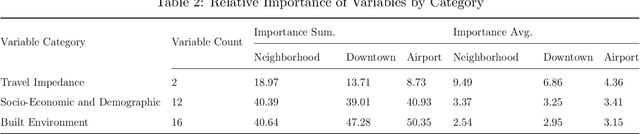

Abstract: The growing significance of ridesourcing services in recent years suggests a need to examine the key determinants of ridesourcing demand. However, little is known regarding the nonlinear effects and spatial heterogeneity of ridesourcing demand determinants. This study applies an explainable-machine-learning-based analytical framework to identify the key factors that shape ridesourcing demand and to explore their nonlinear associations across various spatial contexts (airport, downtown, and neighborhood). We use ridesourcing-trip data from Chicago for empirical analysis. The results reveal that the importance of the built environment varies across spatial contexts, and that built-environment variables collectively contribute the most to predicting ridesourcing demand for airport trips. Additionally, the nonlinear effects of the built environment on ridesourcing demand show strong spatial variations. Ridesourcing demand is usually most responsive to built-environment changes for downtown trips, followed by neighborhood trips and airport trips. These findings offer transportation professionals nuanced insights for managing ridesourcing services.

S-LIME: Stabilized-LIME for Model Explanation

Jun 15, 2021

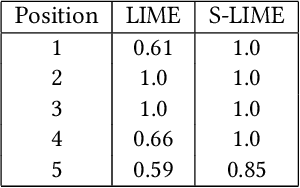

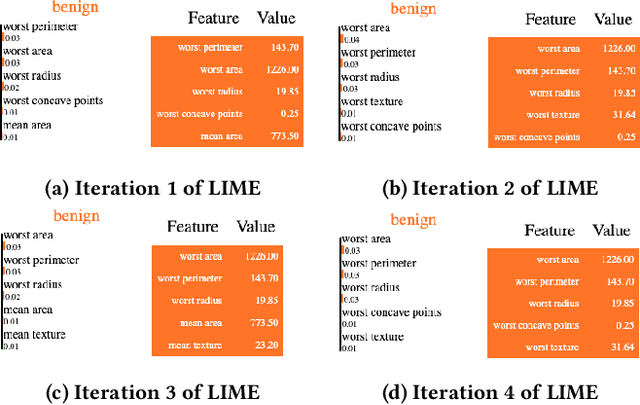

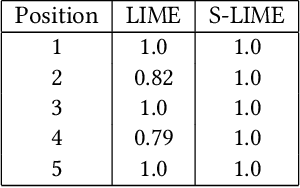

Abstract: An increasing number of machine learning models have been deployed in high-stakes domains such as finance and healthcare. Despite their superior performance, many models are black boxes by nature and hard to explain. Researchers are making growing efforts to develop methods to interpret these black-box models. Post hoc explanations based on perturbations, such as LIME, are widely used approaches to interpret a machine learning model after it has been built. This class of methods has been shown to exhibit large instability, posing serious challenges to the effectiveness of the method itself and harming user trust. In this paper, we propose S-LIME, which utilizes a hypothesis testing framework based on the central limit theorem to determine the number of perturbation points needed to guarantee stability of the resulting explanation. Experiments on both simulated and real-world data sets are provided to demonstrate the effectiveness of our method.
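As a rough illustration of the idea of using a central-limit-theorem test to decide how many perturbation points are enough, the sketch below grows a sample until a z-test on a mean difference is significant. It is not the S-LIME algorithm: the abstract does not state the test statistic, so `diffs` is a hypothetical stand-in for a per-point score difference between two candidate features, and `points_needed` and `grow_until_stable` are names invented here.

```python
import math
import random
import statistics

def points_needed(diffs, z_crit=1.96):
    """Estimate how many points make mean(diffs) significant.

    With z = mean / (sd / sqrt(n)), |z| >= z_crit needs
    n >= (z_crit * sd / mean) ** 2 under a normal approximation.
    """
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    if mean == 0:
        return math.inf
    return math.ceil((z_crit * sd / mean) ** 2)

def grow_until_stable(draw_diff, n0=20, z_crit=1.96, max_n=100_000):
    """Add perturbation points until the z-test passes or max_n is reached."""
    diffs = [draw_diff() for _ in range(n0)]
    while True:
        n = len(diffs)
        mean = statistics.fmean(diffs)
        sd = statistics.stdev(diffs)
        if sd > 0:
            z = mean / (sd / math.sqrt(n))
        else:
            z = math.inf if mean != 0 else 0.0
        if abs(z) >= z_crit or n >= max_n:
            return n, z
        # Jump to the estimated requirement, always adding at least one point.
        target = min(max_n, max(n + 1, points_needed(diffs, z_crit)))
        diffs.extend(draw_diff() for _ in range(target - n))

# Hypothetical score differences: small true gap (0.3) with unit noise.
rng = random.Random(0)
n, z = grow_until_stable(lambda: rng.gauss(0.3, 1.0))
print(f"stopped at n={n} with z={z:.2f}")
```

The loop stops either when the comparison is statistically clear or when the budget `max_n` is exhausted, so a caller can tell the two cases apart by checking `abs(z)` against `z_crit`.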

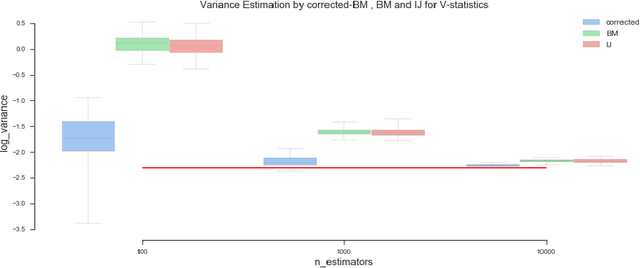

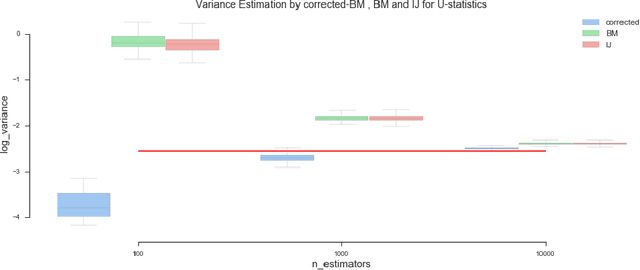

Asymptotic Normality and Variance Estimation For Supervised Ensembles

Dec 02, 2019

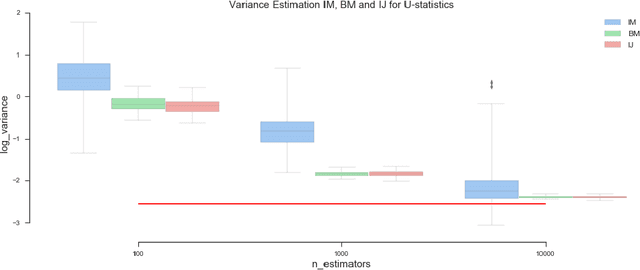

Abstract: Ensemble methods based on bootstrapping have improved the predictive accuracy of base learners, but fail to provide a framework in which formal statistical inference can be conducted. Recent theoretical developments suggest taking subsamples without replacement and analyzing the resulting estimator in the context of a U-statistic, thus demonstrating asymptotic normality properties. However, we observe that current methods for variance estimation exhibit severe bias when the number of base learners is not large enough, compromising the validity of the resulting confidence intervals or hypothesis tests. This paper shows that similar asymptotics can be achieved by means of V-statistics, corresponding to taking subsamples with replacement. Further, we develop a bias correction algorithm for estimating variance in the limiting distribution, which yields satisfactory results with a moderate number of base learners.

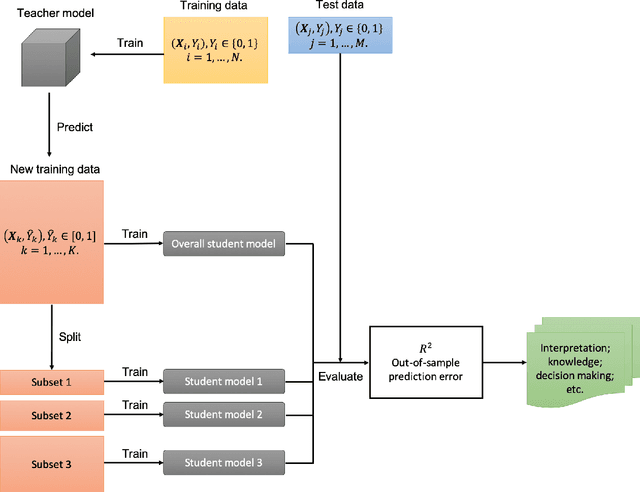

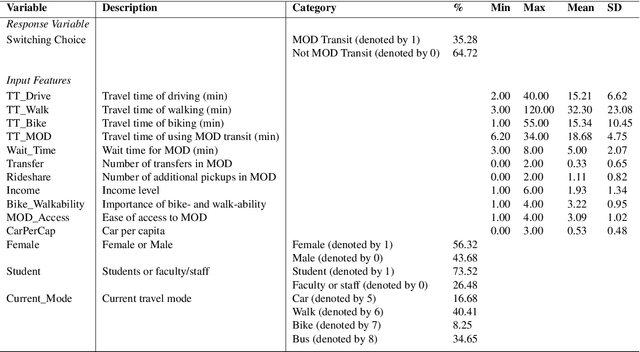

Distilling Black-Box Travel Mode Choice Model for Behavioral Interpretation

Oct 30, 2019

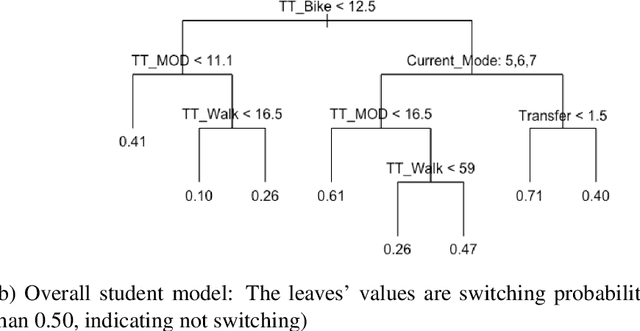

Abstract: Machine learning has proved to be very successful for making predictions in travel behavior modeling. However, most machine-learning models have complex model structures and offer little or no explanation as to how they arrive at these predictions. Interpretations about travel behavior models are essential for decision makers to understand travelers' preferences and plan policy interventions accordingly. Therefore, this paper proposes to apply and extend the model distillation approach, a model-agnostic machine-learning interpretation method, to explain how a black-box travel mode choice model makes predictions for the entire population and subpopulations of interest. Model distillation aims at compressing knowledge from a complex model (teacher) into an understandable and interpretable model (student). In particular, the paper integrates model distillation with market segmentation to generate more insights by accounting for heterogeneity. Furthermore, the paper provides a comprehensive comparison of student models with the benchmark model (decision tree) and the teacher model (gradient boosting trees) to quantify the fidelity and accuracy of the students' interpretations.
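The teacher/student idea can be sketched in a few lines. This is a minimal, generic illustration and not the paper's models: `teacher` is a made-up black-box function standing in for a trained model, the student is a one-split regression tree, and fidelity is measured as the R² between student and teacher predictions on fresh inputs.

```python
import math
import random

def teacher(x):
    """Hypothetical black-box model (assumption): a smooth nonlinear score."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 1.5))) + 0.1 * x[1]

def fit_stump(X, y):
    """Fit a depth-1 regression tree by minimizing squared error."""
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            ml, mr = sum(left) / len(left), sum(right) / len(right)
            sse = sum((v - ml) ** 2 for v in left) + sum((v - mr) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, ml, mr)
    _, j, t, ml, mr = best
    return {"feature": j, "threshold": t, "left": ml, "right": mr}

def predict_stump(stump, x):
    """Return the leaf mean on the side of the split that x falls into."""
    if x[stump["feature"]] <= stump["threshold"]:
        return stump["left"]
    return stump["right"]

def r_squared(y_true, y_pred):
    """Share of variance in y_true explained by y_pred."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot

rng = random.Random(0)
X_train = [[rng.random(), rng.random()] for _ in range(200)]
soft_labels = [teacher(x) for x in X_train]  # student learns from teacher outputs
student = fit_stump(X_train, soft_labels)

# Fidelity: how closely the student tracks the teacher on unseen inputs.
X_new = [[rng.random(), rng.random()] for _ in range(200)]
fidelity = r_squared([teacher(x) for x in X_new],
                     [predict_stump(student, x) for x in X_new])
print(student, round(fidelity, 3))
```

The student is trained on the teacher's predictions rather than on observed outcomes, which is what separates distillation from simply fitting a small model to the data. Fitting one student per market segment would be a natural extension in the direction the abstract describes.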

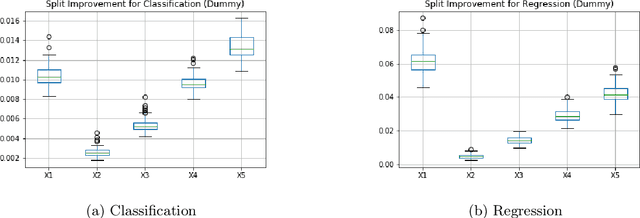

Unbiased Measurement of Feature Importance in Tree-Based Methods

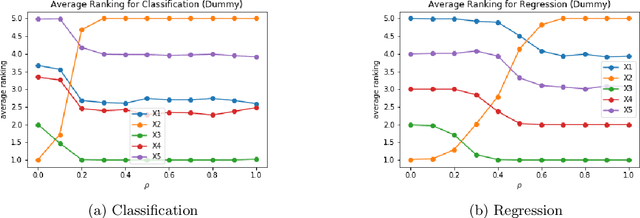

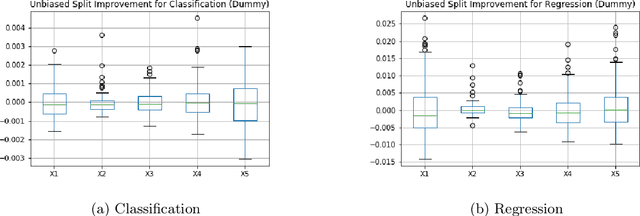

Mar 12, 2019

Abstract: We propose a modification that corrects the bias of split-improvement variable importance measures in Random Forests and other tree-based methods. These measures have been shown to be biased towards increasing the importance of features with more potential splits. We show that by appropriately incorporating split-improvement as measured on out-of-sample data, this bias can be corrected, yielding better summaries and screening tools.
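The bias described above can be seen in a small simulation. The sketch below is a simplified held-out check, not the estimator proposed in the paper: it picks the best split on a pure-noise feature using training data, then scores that same split on separate data. The function names and the simulation settings are assumptions made for illustration.

```python
import random

def mean(values):
    return sum(values) / len(values)

def sse(values, center):
    """Sum of squared errors around a fixed center."""
    return sum((v - center) ** 2 for v in values)

def best_split(x, y):
    """Return (threshold, gain) for the split that most reduces in-sample SSE."""
    parent = sse(y, mean(y))
    best_t, best_gain = None, 0.0
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2.0
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        gain = parent - sse(left, mean(left)) - sse(right, mean(right))
        if best_t is None or gain > best_gain:
            best_t, best_gain = t, gain
    return best_t, best_gain

def held_out_gain(x_tr, y_tr, x_te, y_te, t):
    """SSE reduction on held-out data, using means fitted on training data."""
    parent_mean = mean(y_tr)
    left_mean = mean([yi for xi, yi in zip(x_tr, y_tr) if xi <= t])
    right_mean = mean([yi for xi, yi in zip(x_tr, y_tr) if xi > t])
    before = sse(y_te, parent_mean)
    after = sum((yi - (left_mean if xi <= t else right_mean)) ** 2
                for xi, yi in zip(x_te, y_te))
    return before - after

rng = random.Random(0)
reps, n = 200, 40
in_gains, out_gains = [], []
for _ in range(reps):
    # The feature carries no signal: y is independent of x.
    x_tr = [rng.random() for _ in range(n)]
    y_tr = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x_te = [rng.random() for _ in range(n)]
    y_te = [rng.gauss(0.0, 1.0) for _ in range(n)]
    t, gain = best_split(x_tr, y_tr)
    in_gains.append(gain)
    out_gains.append(held_out_gain(x_tr, y_tr, x_te, y_te, t))

avg_in, avg_out = mean(in_gains), mean(out_gains)
print(f"avg in-sample gain: {avg_in:.3f}, avg held-out gain: {avg_out:.3f}")
```

The in-sample gain of the best split is positive by construction even though the feature is uninformative, and a feature with more candidate thresholds has more chances to find a spuriously good split. Scoring the split on held-out data removes that optimism.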

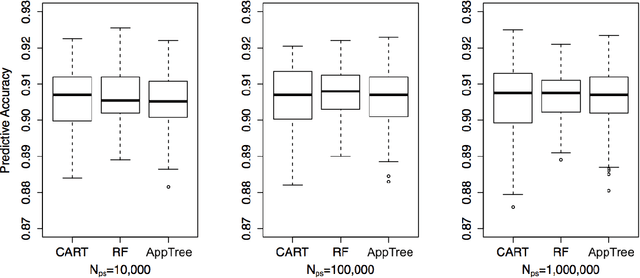

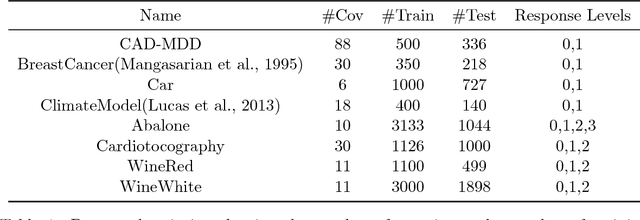

Approximation Trees: Statistical Stability in Model Distillation

Aug 22, 2018

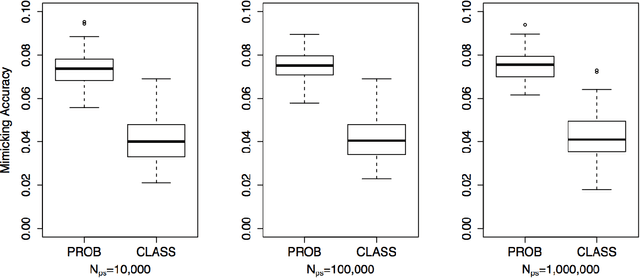

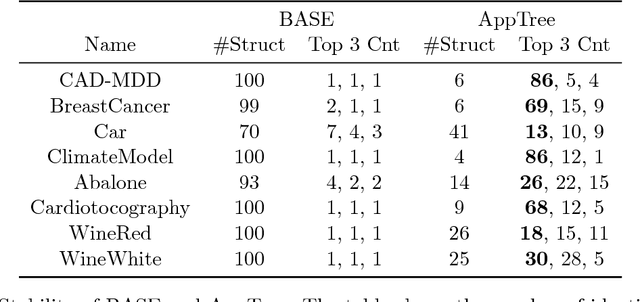

Abstract: This paper examines the stability of learned explanations for black-box predictions via model distillation with decision trees. One approach to intelligibility in machine learning is to use an understandable 'student' model to mimic the output of an accurate 'teacher'. Here, we consider the use of regression trees as a student model, in which nodes of the tree can be used as 'explanations' for particular predictions, and the whole structure of the tree can be used as a global representation of the resulting function. However, individual trees are sensitive to the particular data sets used to train them, and an interpretation of a student model may be suspect if small changes in the training data have a large effect on it. In this context, access to outcomes from a teacher helps to stabilize the greedy splitting strategy by generating a much larger corpus of training examples than was originally available. We develop tests to ensure that enough examples are generated at each split so that the same splitting rule would be chosen with high probability were the tree to be retrained. Further, we develop a stopping rule to indicate how deep the tree should be built based on recent results on the variability of Random Forests when these are used as the teacher. We provide concrete examples of these procedures on the CAD-MDD and COMPAS data sets.
