Zhanteng Xie

Semantic2D: A Semantic Dataset for 2D Lidar Semantic Segmentation

Sep 15, 2024

Abstract:This paper presents a 2D lidar semantic segmentation dataset to enhance the semantic scene understanding for mobile robots in different indoor robotics applications. While most existing lidar semantic datasets focus on 3D lidar sensors and autonomous driving scenarios, the proposed 2D lidar semantic dataset is the first public dataset for 2D lidar sensors and mobile robots. It contains data collected in six different indoor environments and has nine categories of typical objects in indoor environments. A novel semi-automatic semantic labeling framework is proposed to provide point-wise annotation for the dataset with minimal human effort. Based on this 2D lidar dataset, a hardware-friendly stochastic semantic segmentation benchmark is proposed to enable 2D lidar sensors to have semantic scene understanding capabilities. A series of segmentation tests are performed to demonstrate that the proposed learning-based segmentation benchmark can achieve more accurate and richer segmentation for each lidar point compared to traditional geometry-based extraction algorithms.

SCOPE: Stochastic Cartographic Occupancy Prediction Engine for Uncertainty-Aware Dynamic Navigation

Jun 28, 2024

Abstract:This article presents a family of Stochastic Cartographic Occupancy Prediction Engines (SCOPEs) that enable mobile robots to predict the future states of complex dynamic environments. They do this by accounting for the motion of the robot itself, the motion of dynamic objects, and the geometry of static objects in the scene, and they generate a range of possible future states of the environment. These prediction algorithms are software-optimized for real-time performance for navigation in crowded dynamic scenes, achieving 10 times faster inference speed and 3 times less memory usage than the original engines. Three simulated and real-world datasets collected by different robot models are used to demonstrate that these proposed prediction algorithms are able to achieve more accurate and robust stochastic prediction performance than other algorithms. Furthermore, a series of simulation and hardware navigation experiments demonstrate that the proposed predictive uncertainty-aware navigation framework with these stochastic prediction engines is able to improve the safe navigation performance of current state-of-the-art model- and learning-based control policies.

Towards Predicting Collective Performance in Multi-Robot Teams

May 02, 2024

Abstract:The increased deployment of multi-robot systems (MRS) in various fields has led to the need for analysis of system-level performance. However, creating consistent metrics for MRS is challenging due to the wide range of system and environmental factors, such as team size and environment size. This paper presents a new analytical framework for MRS based on dimensionless variable analysis, a mathematical technique typically used to simplify complex physical systems. This approach effectively condenses the complex parameters influencing MRS performance into a manageable set of dimensionless variables. We form dimensionless variables which encapsulate key parameters of the robot team and task. Then we use these dimensionless variables to fit a parametric model of team performance. Our model successfully identifies critical performance determinants and their interdependencies, providing insight for MRS design and optimization. The application of dimensionless variable analysis to MRS offers a promising method for MRS analysis that effectively reduces complexity, enhances comprehension of system behaviors, and informs the design and management of future MRS deployments.
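As a rough illustration of the idea in this abstract, the sketch below forms one dimensionless variable and fits a power-law model to it. The abstract does not list the paper's actual variables or model form, so the names `dimensionless_density`, `sensing_radius`, and `fit_power_law`, and the power-law shape itself, are assumptions made only for this example.

```python
import math


def dimensionless_density(team_size, sensing_radius, env_area):
    """Hypothetical dimensionless group: sensed area relative to environment area.

    The units cancel (count * m^2 / m^2), so the value does not change when
    every length in the problem is rescaled by the same factor.
    """
    return team_size * sensing_radius ** 2 / env_area


def fit_power_law(xs, ys):
    """Fit y = c * x**a by ordinary least squares in log-log space.

    Assumes all xs and ys are strictly positive and xs are not all equal.
    """
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((u - mean_x) ** 2 for u in log_x)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(log_x, log_y))
    a = sxy / sxx
    c = math.exp(mean_y - a * mean_x)
    return c, a


# Synthetic, noise-free data generated from a known model (not real results).
pis = [dimensionless_density(n, 1.0, 100.0) for n in (2, 4, 8, 16)]
performance = [2.0 * p ** -0.5 for p in pis]
c, a = fit_power_law(pis, performance)
```

Because the data here are generated from the model being fitted, the recovered `c` and `a` only confirm that the fitting routine works; they say nothing about real multi-robot teams.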

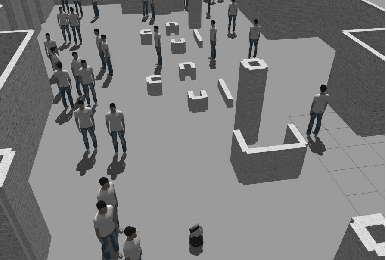

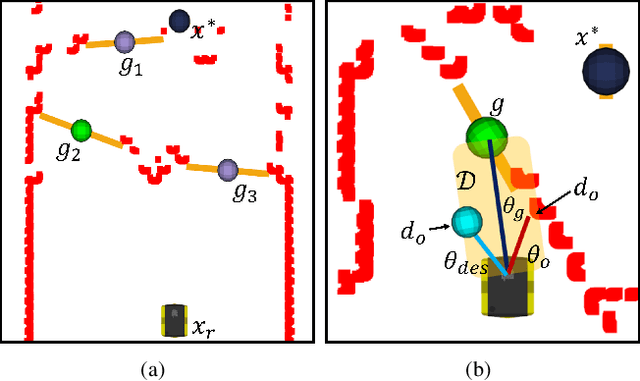

DRL-VO: Learning to Navigate Through Crowded Dynamic Scenes Using Velocity Obstacles

Jan 16, 2023

Abstract:This paper proposes a novel learning-based control policy with strong generalizability to new environments that enables a mobile robot to navigate autonomously through spaces filled with both static obstacles and dense crowds of pedestrians. The policy uses a unique combination of input data to generate the desired steering angle and forward velocity: a short history of lidar data, kinematic data about nearby pedestrians, and a sub-goal point. The policy is trained in a reinforcement learning setting using a reward function that contains a novel term based on velocity obstacles to guide the robot to actively avoid pedestrians and move towards the goal. Through a series of 3D simulated experiments with up to 55 pedestrians, this control policy is able to achieve a better balance between collision avoidance and speed (i.e. higher success rate and faster average speed) than state-of-the-art model-based and learning-based policies, and it also generalizes better to different crowd sizes and unseen environments. An extensive series of hardware experiments demonstrate the ability of this policy to directly work in different real-world environments with different crowd sizes with zero retraining. Furthermore, a series of simulated and hardware experiments show that the control policy also works in highly constrained static environments on a different robot platform without any additional training. Lastly, we summarize several important lessons that can be applied to other robot learning systems. Multimedia demonstrations are available at https://www.youtube.com/watch?v=eCcNYSbgCv8&list=PLouWbAcP4zIvPgaARrV223lf2eiSR-eSS.
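The abstract does not spell out the form of the velocity-obstacle reward term. As background only, the classical velocity obstacle test can be sketched as follows: a robot velocity lies inside the velocity obstacle of a pedestrian if holding both velocities constant would eventually bring the two within a combined radius. The function name `in_velocity_obstacle` and its parameters are hypothetical and are not taken from the paper's code.

```python
import math


def in_velocity_obstacle(p_robot, v_robot, p_ped, v_ped, combined_radius):
    """Return True if constant velocities lead to a collision (2D, disk-shaped agents).

    p_* and v_* are (x, y) tuples; combined_radius is the sum of both radii.
    This is the unbounded-time-horizon test, an assumption of this sketch.
    """
    # Pedestrian position relative to the robot.
    px, py = p_ped[0] - p_robot[0], p_ped[1] - p_robot[1]
    # Robot velocity relative to the pedestrian.
    vx, vy = v_robot[0] - v_ped[0], v_robot[1] - v_ped[1]

    if math.hypot(px, py) <= combined_radius:
        return True  # the disks already overlap

    speed = math.hypot(vx, vy)
    if speed == 0.0:
        return False  # no relative motion, so the gap never closes

    if px * vx + py * vy <= 0.0:
        return False  # the robot is moving away from the pedestrian

    # Perpendicular distance from the pedestrian to the relative-velocity ray.
    miss_distance = abs(px * vy - py * vx) / speed
    return miss_distance < combined_radius


head_on = in_velocity_obstacle((0, 0), (1, 0), (5, 0), (0, 0), 0.5)
passes_by = in_velocity_obstacle((0, 0), (1, 0), (5, 2), (0, 0), 0.5)
```

A learning-based policy could, for example, be penalized whenever such a test returns True for a nearby pedestrian, but that use is a guess at the general idea rather than a description of the reward in the paper.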

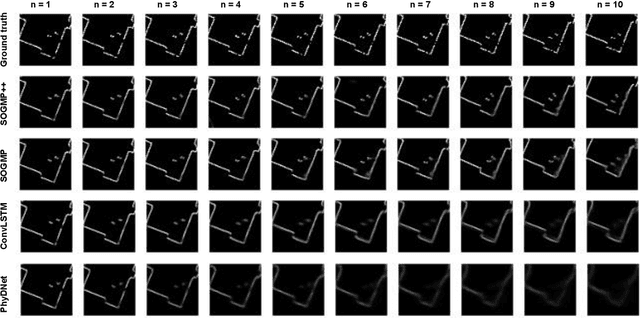

Stochastic Occupancy Grid Map Prediction in Dynamic Scenes

Oct 16, 2022

Abstract:This paper presents two variations of a novel stochastic prediction algorithm that enables mobile robots to accurately and robustly predict the future state of complex dynamic scenes, such as environments full of people. The proposed algorithm uses a variational autoencoder-based neural network to predict a range of possible future states of the environment. The algorithm takes full advantage of the motion of the robot itself, the motion of dynamic objects, and the geometry of static objects in the scene to improve prediction accuracy. Three different datasets collected by different robot models are used to demonstrate that the proposed algorithm is able to achieve smaller absolute error, higher structure similarity, and higher tracking accuracy than state-of-the-art prediction algorithms for video prediction tasks. Implementations of both proposed stochastic prediction algorithms are available open source at https://github.com/TempleRAIL/SOGMP.
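The abstract says the network predicts a range of possible future states but does not say how those samples are summarized. One common option, assumed here purely for illustration, is to take the per-cell mean as the predicted occupancy and the per-cell variance as a measure of uncertainty. The function `summarize_samples` is hypothetical and is not part of the SOGMP repository.

```python
def summarize_samples(samples):
    """Per-cell mean and population variance over equally sized 2D grids.

    samples: non-empty list of grids, each a list of rows of floats in [0, 1].
    Returns (mean_grid, variance_grid) with the same shape as one input grid.
    """
    k = len(samples)
    rows, cols = len(samples[0]), len(samples[0][0])
    mean = [
        [sum(s[r][c] for s in samples) / k for c in range(cols)]
        for r in range(rows)
    ]
    variance = [
        [sum((s[r][c] - mean[r][c]) ** 2 for s in samples) / k for c in range(cols)]
        for r in range(rows)
    ]
    return mean, variance


# Two toy 1x2 "predicted" grids standing in for samples drawn from a model.
toy_samples = [[[0.0, 1.0]], [[1.0, 1.0]]]
mean_grid, variance_grid = summarize_samples(toy_samples)
```

Cells where the samples disagree get a high variance, while cells on which all samples agree get a variance of zero.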

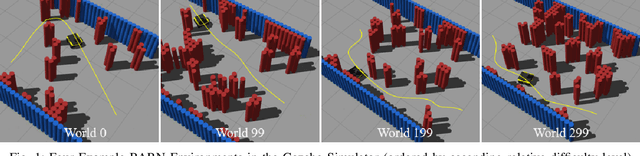

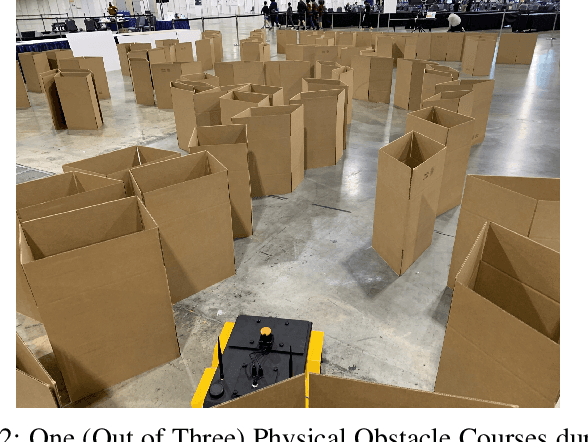

Autonomous Ground Navigation in Highly Constrained Spaces: Lessons learned from The BARN Challenge at ICRA 2022

Aug 22, 2022

Abstract:The BARN (Benchmark Autonomous Robot Navigation) Challenge took place at the 2022 IEEE International Conference on Robotics and Automation (ICRA 2022) in Philadelphia, PA. The aim of the challenge was to evaluate state-of-the-art autonomous ground navigation systems for moving robots through highly constrained environments in a safe and efficient manner. Specifically, the task was to navigate a standardized, differential-drive ground robot from a predefined start location to a goal location as quickly as possible without colliding with any obstacles, both in simulation and in the real world. Five teams from all over the world participated in the qualifying simulation competition, three of which were invited to compete with each other at a set of physical obstacle courses at the conference center in Philadelphia. The competition results suggest that autonomous ground navigation in highly constrained spaces, despite seeming ostensibly simple even for experienced roboticists, is actually far from being a solved problem. In this article, we discuss the challenge, the approaches used by the top three winning teams, and lessons learned to direct future research.
