Zhanghao Sun

Scalable Low-latency Optical Phase Sensor Array

May 03, 2023

Abstract: Optical phase measurement is critical for many applications, and traditional approaches often suffer from mechanical instability, temporal latency, and computational complexity. In this paper, we describe compact phase sensor arrays based on integrated photonics, which enable accurate and scalable reference-free phase sensing in a few measurement steps. This is achieved by connecting multiple two-port phase sensors into a graph to measure relative phases between neighboring and distant spatial locations. We propose an efficient post-processing algorithm, as well as circuit design rules, to reduce the accumulation of random and biased errors. We demonstrate the effectiveness of our system in both simulations and experiments with photonic integrated circuits. The proposed system measures the optical phase directly without the need for external references or spatial light modulators, thus providing significant benefits for applications including microscope imaging and optical phased arrays.
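As a rough illustration of the idea of recovering phases from relative measurements, the sketch below integrates neighbor-to-neighbor phase differences along a simple chain. This is not the paper's post-processing algorithm: the chain topology, the function names `wrap` and `chain_reconstruct`, and the sample values are all assumptions made for the example.

```python
import math

def wrap(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def chain_reconstruct(relative_phases):
    """Integrate neighbor-to-neighbor phase differences along a chain.

    Returns the phase at each location relative to the first one,
    which is fixed at 0 because no external reference is available.
    """
    phases = [0.0]
    for delta in relative_phases:
        phases.append(phases[-1] + delta)
    return phases

# Hypothetical true phases at five spatial locations (radians).
true_phases = [0.3, 0.9, 1.4, 2.6, 2.2]

# Each two-port sensor is modeled as reporting a wrapped phase difference.
measured = [wrap(b - a) for a, b in zip(true_phases, true_phases[1:])]

recovered = chain_reconstruct(measured)
```

In a pure chain like this one, an error in any single measurement shifts every downstream phase, which hints at why links between distant locations and careful circuit design can help limit error accumulation.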

Consistent Direct Time-of-Flight Video Depth Super-Resolution

Nov 16, 2022

Abstract: Direct time-of-flight (dToF) sensors are promising for next-generation on-device 3D sensing. However, to achieve a sufficient signal-to-noise ratio (SNR) in a compact module, the dToF data has limited spatial resolution (e.g., ~20x30 for iPhone dToF), and it requires a super-resolution step before being passed to downstream tasks. In this paper, we solve this super-resolution problem by fusing the low-resolution dToF data with the corresponding high-resolution RGB guidance. Unlike conventional RGB-guided depth enhancement approaches, which perform the fusion in a per-frame manner, we propose the first multi-frame fusion scheme to mitigate the spatial ambiguity resulting from the low-resolution dToF imaging. In addition, dToF sensors provide unique depth histogram information for each local patch, and we incorporate this dToF-specific feature in our network design to further alleviate spatial ambiguity. To evaluate our models on complex dynamic indoor environments and to provide a large-scale dToF sensor dataset, we introduce DyDToF, the first synthetic RGB-dToF video dataset that features dynamic objects and a realistic dToF simulator following the physical imaging process. We believe the methods and dataset are beneficial to a broad community as dToF depth sensing is becoming mainstream on mobile devices.

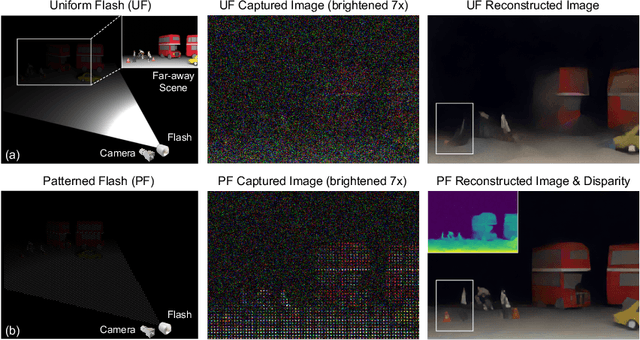

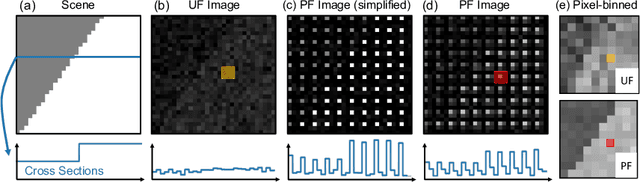

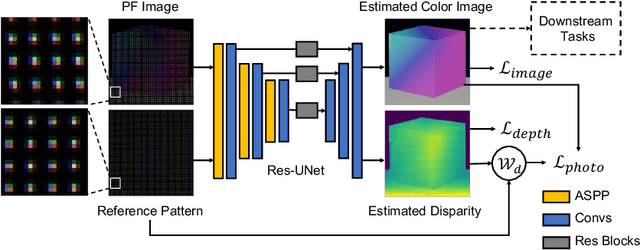

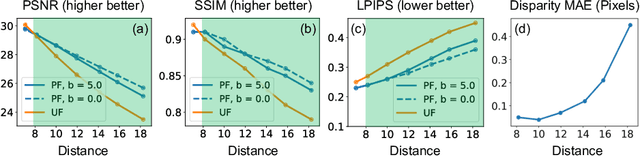

Seeing Far in the Dark with Patterned Flash

Jul 25, 2022

Abstract: Flash illumination is widely used in imaging under low-light environments. However, illumination intensity falls off quadratically with propagation distance, which poses significant challenges for flash imaging at a long distance. We propose a new flash technique, named "patterned flash", for flash imaging at a long distance. Patterned flash concentrates optical power into a dot array. Compared with the conventional uniform flash, where the signal is overwhelmed by the noise everywhere, patterned flash provides stronger signals at sparsely distributed points across the field of view to ensure the signals at those points stand out from the sensor noise. This enables post-processing to resolve important objects and details. Additionally, the patterned flash projects texture onto the scene, which can be treated as a structured light system for depth perception. Given the novel system, we develop a joint image reconstruction and depth estimation algorithm with a convolutional neural network. We build a hardware prototype and test the proposed flash technique on various scenes. The experimental results demonstrate that our patterned flash has significantly better performance at long distances in low-light environments.
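The quadratic falloff and the benefit of concentrating power can be made concrete with a back-of-the-envelope calculation. The sketch below is only an idealized model, not the paper's analysis: it assumes a fixed total flash power, a pure 1/d² falloff, and a hypothetical parameter `lit_fraction` for the share of the field of view covered by dots.

```python
def relative_signal(distance_m, lit_fraction=1.0):
    """Relative signal at an illuminated scene point.

    Assumes irradiance falls off as 1/d^2 and that a fixed total power
    is spread evenly over `lit_fraction` of the field of view
    (1.0 corresponds to a uniform flash).
    """
    if not 0.0 < lit_fraction <= 1.0:
        raise ValueError("lit_fraction must be in (0, 1]")
    return 1.0 / (lit_fraction * distance_m ** 2)

# Uniform flash versus a dot pattern covering 1% of the field of view,
# both evaluated at a hypothetical distance of 10 m.
uniform = relative_signal(10.0)
patterned = relative_signal(10.0, lit_fraction=0.01)
gain = patterned / uniform  # signal boost at the dots under this model
```

Under these assumptions the boost at each dot is simply the inverse of the lit fraction, while doubling the distance cuts the signal to a quarter in both cases.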

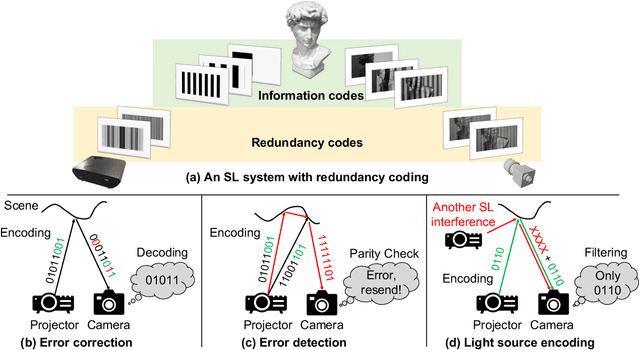

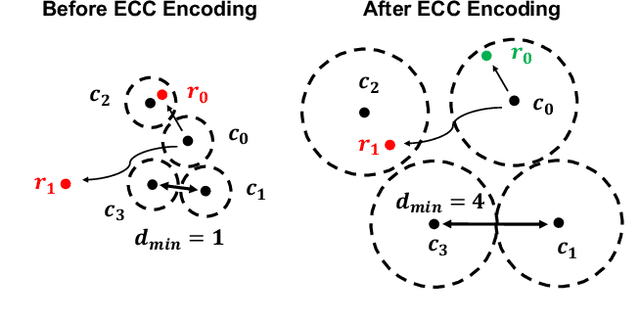

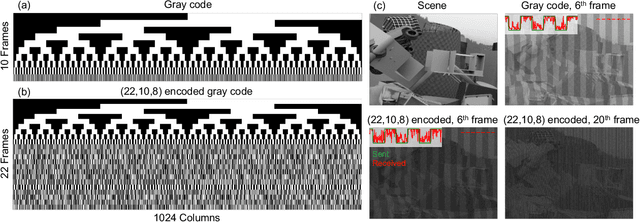

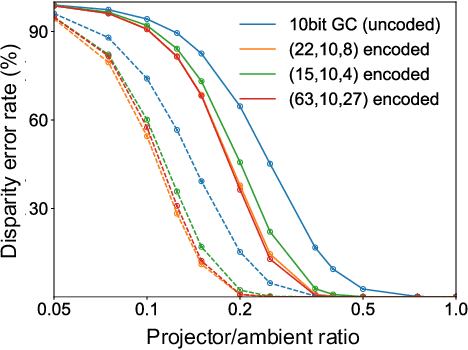

Structured Light with Redundancy Codes

Jun 18, 2022

Abstract: Structured light (SL) systems acquire high-fidelity 3D geometry with active illumination projection. Conventional systems exhibit challenges when working in environments with strong ambient illumination, global illumination, and cross-device interference. This paper proposes a general-purpose technique to improve the robustness of SL by projecting redundant optical signals in addition to the native SL patterns. In this way, projected signals become more distinguishable from errors. Thus, the geometry information can be more easily recovered using simple signal processing, and a "coding gain" in performance is obtained. We propose three applications using our redundancy codes: (1) self error-correction for SL imaging under strong ambient light, (2) error detection for adaptive reconstruction under global illumination, and (3) interference filtering with device-specific projection sequence encoding, especially for event camera-based SL and light curtain devices. We systematically analyze the design rules and signal processing algorithms in these applications. Corresponding hardware prototypes are built for evaluations on real-world complex scenes. Experimental results on synthetic and real data demonstrate the significant performance improvements in SL systems with our redundancy codes.
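To give a feel for how redundancy makes errors detectable, the sketch below appends a single even-parity bit to a pixel's binary code word. This is the simplest textbook redundancy code, used here purely as an assumed stand-in; the abstract does not specify the codes the paper actually uses, and the names `encode` and `has_error` are hypothetical.

```python
def encode(bits):
    """Append one even-parity bit to a pixel's temporal code word."""
    return bits + [sum(bits) % 2]

def has_error(received):
    """Return True when the parity check fails.

    A single parity bit detects any odd number of flipped bits,
    but it cannot locate or correct them.
    """
    return sum(received) % 2 != 0

# A hypothetical 4-bit pattern code, projected over 5 frames instead of 4.
codeword = encode([1, 0, 1, 1])

# Simulate one decoding error, e.g. caused by ambient light.
corrupted = codeword.copy()
corrupted[2] ^= 1
```

Here `has_error(codeword)` is false and `has_error(corrupted)` is true, so a pixel with a failed check could be flagged for separate handling. Correcting errors rather than only detecting them requires more redundant frames than a single parity bit provides.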

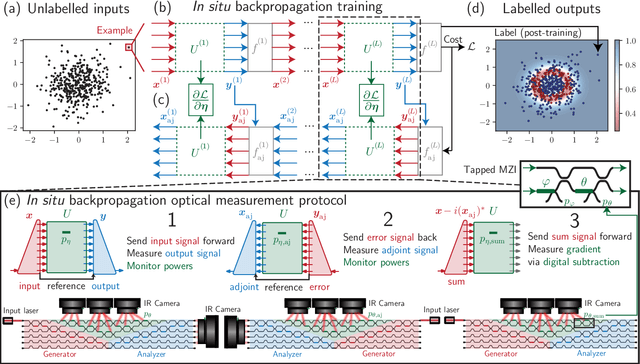

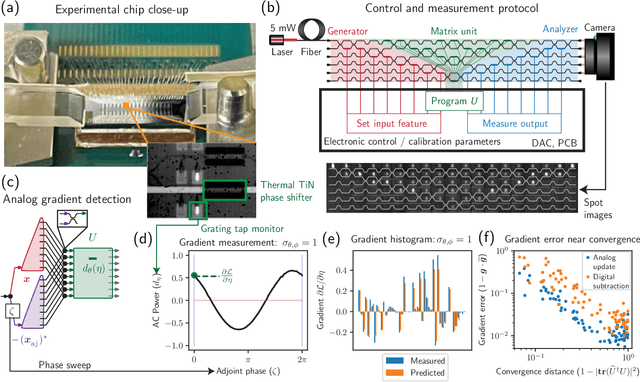

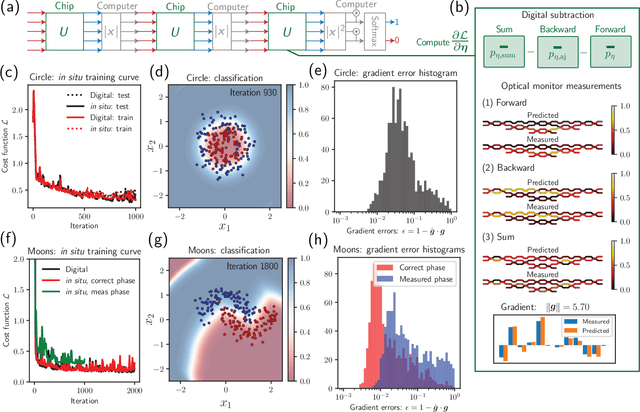

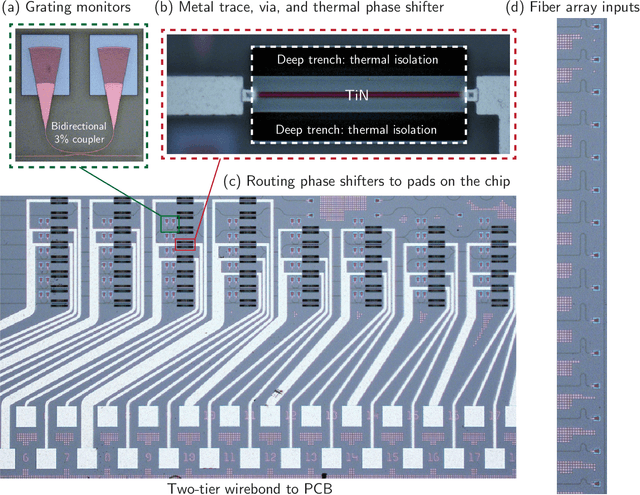

Experimentally realized in situ backpropagation for deep learning in nanophotonic neural networks

May 17, 2022

Abstract: Neural networks are widely deployed models across many scientific disciplines and commercial endeavors ranging from edge computing and sensing to large-scale signal processing in data centers. The most efficient and well-entrenched method to train such networks is backpropagation, or reverse-mode automatic differentiation. To counter an exponentially increasing energy budget in the artificial intelligence sector, there has been recent interest in analog implementations of neural networks, specifically nanophotonic neural networks for which no analog backpropagation demonstration exists. We design mass-manufacturable silicon photonic neural networks that alternately cascade our custom-designed "photonic mesh" accelerator with digitally implemented nonlinearities. These reconfigurable photonic meshes program computationally intensive arbitrary matrix multiplication by setting physical voltages that tune the interference of optically encoded input data propagating through integrated Mach-Zehnder interferometer networks. Here, using our packaged photonic chip, we demonstrate in situ backpropagation for the first time to solve classification tasks and evaluate a new protocol to keep the entire gradient measurement and update of physical device voltages in the analog domain, improving on past theoretical proposals. Our method is made possible by introducing three changes to typical photonic meshes: (1) measurements at optical "grating tap" monitors, (2) bidirectional optical signal propagation automated by a fiber switch, and (3) universal generation and readout of optical amplitude and phase. After training, our classification achieves accuracies similar to digital equivalents even in the presence of systematic error. Our findings suggest a new training paradigm for photonics-accelerated artificial intelligence based entirely on a physical analog of the popular backpropagation technique.

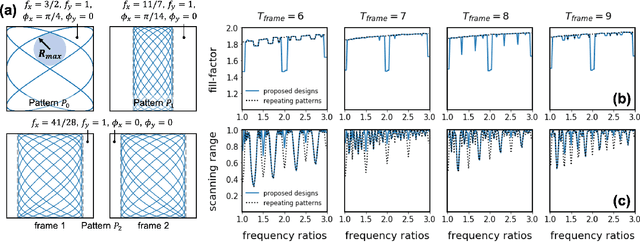

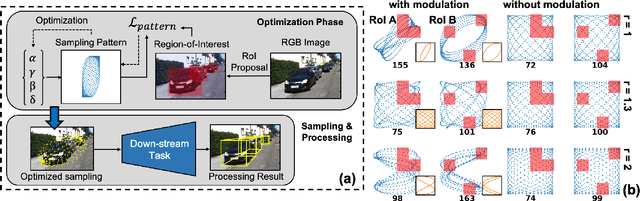

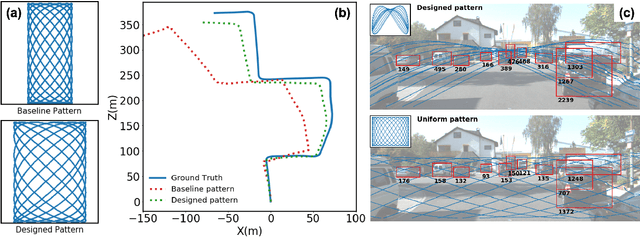

Resonant Scanning Design and Control for Fast Spatial Sampling

Mar 24, 2021

Abstract: Two-dimensional resonant scanners have been utilized in a large variety of imaging modules due to their compact form, low power consumption, large angular range, and high speed. However, resonant scanners have problems with non-optimal and inflexible scanning patterns and inherent phase uncertainty, which limit practical applications. Here we propose methods for optimized design and control of the scanning trajectory of two-dimensional resonant scanners under various physical constraints, including high frame rate and limited actuation amplitude. First, we propose an analytical design rule for uniform spatial sampling. We demonstrate theoretically and experimentally that by including non-repeating scanning patterns, the proposed designs outperform previous designs in terms of scanning range and fill factor. Second, we show that we can create flexible scanning patterns that allow focusing on user-defined Regions-of-Interest (RoI) by modulation of the scanning parameters. The scanning parameters are found by an optimization algorithm. In simulations, we demonstrate the benefits of these designs with standard metrics and higher-level computer vision tasks (LiDAR odometry and 3D object detection). Finally, we experimentally implement and verify both unmodulated and modulated scanning modes using a two-dimensional resonant MEMS scanner. Central to the implementations is high-bandwidth monitoring of the phase of the angular scans in both dimensions. This task is carried out with a position-sensitive photodetector combined with high-bandwidth electronics, enabling fast spatial sampling at a frame rate of ~100 Hz.
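For background, a two-axis resonant scanner is commonly modeled as tracing a Lissajous trajectory, with each axis oscillating sinusoidally at its own frequency. The sketch below shows that standard model and the repetition rate of the classic repeating case with integer frequencies; it does not implement the paper's design rule or its non-repeating patterns, and the function names and example frequencies are assumptions.

```python
import math

def lissajous(t, fx_hz, fy_hz, phase_x=0.0, phase_y=0.0):
    """Normalized scan position (x, y) at time t for sinusoidal axes."""
    x = math.sin(2.0 * math.pi * fx_hz * t + phase_x)
    y = math.sin(2.0 * math.pi * fy_hz * t + phase_y)
    return x, y

def repeat_rate_hz(fx_hz, fy_hz):
    """Rate at which the trajectory repeats for integer axis frequencies.

    Both axes return to the same state after 1 / gcd(fx, fy) seconds,
    so the pattern repeats gcd(fx, fy) times per second.
    """
    return math.gcd(fx_hz, fy_hz)

# Hypothetical axis frequencies, chosen only for illustration.
fx, fy = 1000, 1100
rate = repeat_rate_hz(fx, fy)

# The position at time t matches the position one repeat period later.
t = 0.00123
p0 = lissajous(t, fx, fy)
p1 = lissajous(t + 1.0 / rate, fx, fy)
```

In this repeating regime, frame rate and sampling density trade off against each other: a larger common divisor means faster repeats but fewer distinct scan lines per frame.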
