Zekun Yao

A Tale of Two Graphs: Separating Knowledge Exploration from Outline Structure for Open-Ended Deep Research

Feb 14, 2026

Abstract: Open-Ended Deep Research (OEDR) pushes LLM agents beyond short-form QA toward long-horizon workflows that iteratively search, connect, and synthesize evidence into structured reports. However, existing OEDR agents largely follow either linear "search-then-generate" accumulation or outline-centric planning. The former suffers from lost-in-the-middle failures as evidence grows, while the latter relies on the LLM to implicitly infer knowledge gaps from the outline alone, providing weak supervision for identifying missing relations and triggering targeted exploration. We present DualGraph memory, an architecture that separates what the agent knows from how it writes. DualGraph maintains two co-evolving graphs: an Outline Graph (OG) and a Knowledge Graph (KG), a semantic memory that stores fine-grained knowledge units, including core entities, concepts, and their relations. By analyzing the KG topology together with structural signals from the OG, DualGraph generates targeted search queries, enabling more efficient and comprehensive iterative knowledge-driven exploration and refinement. Across DeepResearch Bench, DeepResearchGym, and DeepConsult, DualGraph consistently outperforms state-of-the-art baselines in report depth, breadth, and factual grounding; for example, it reaches a 53.08 RACE score on DeepResearch Bench with GPT-5. Moreover, ablation studies confirm the central role of the dual-graph design.

Improving Factual Consistency of Text Summarization by Adversarially Decoupling Comprehension and Embellishment Abilities of LLMs

Nov 01, 2023

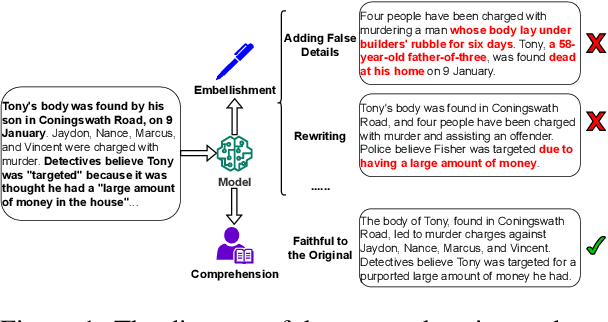

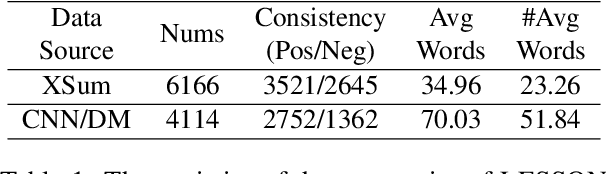

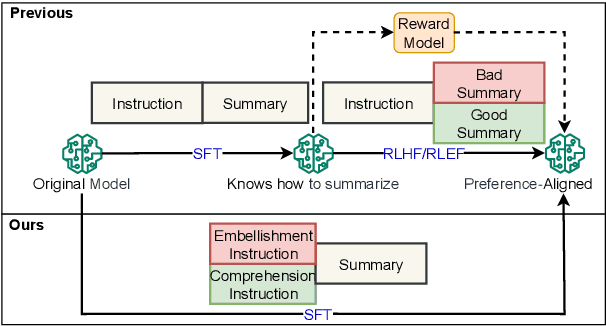

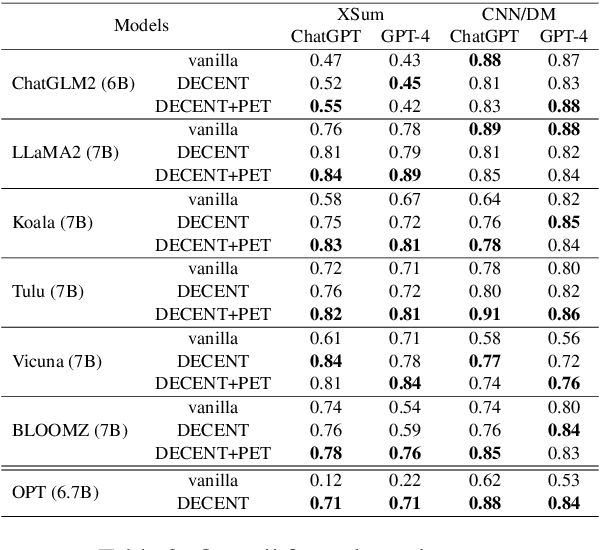

Abstract: Despite the recent progress in text summarization made by large language models (LLMs), they often generate summaries that are factually inconsistent with the original articles, known as "hallucinations" in text generation. Unlike previous small models (e.g., BART, T5), current LLMs make fewer silly mistakes but more sophisticated ones, such as imposing cause and effect, adding false details, and overgeneralizing. These hallucinations are challenging to detect through traditional methods, which poses great challenges for improving the factual consistency of text summarization. In this paper, we propose an adversarially DEcoupling method to disentangle the Comprehension and EmbellishmeNT abilities of LLMs (DECENT). Furthermore, we adopt a probing-based parameter-efficient technique to compensate for the lack of sensitivity to true and false in the training process of LLMs. In this way, LLMs are less confused about embellishing and understanding, and can thus execute instructions more accurately and better distinguish hallucinations. Experimental results show that DECENT significantly improves the reliability of text summarization based on LLMs.

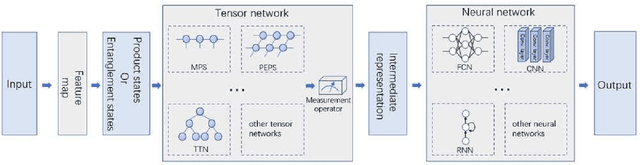

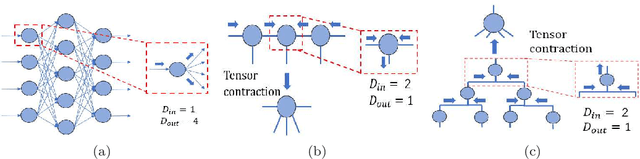

Quantum-Classical Machine learning by Hybrid Tensor Networks

May 15, 2020

Abstract: Tensor networks (TN) have found wide use in machine learning, and in particular, TN and deep learning bear striking similarities. In this work, we propose quantum-classical hybrid tensor networks (HTN), which combine tensor networks with classical neural networks in a uniform deep learning framework to overcome the limitations of regular tensor networks in machine learning. We first analyze the limitations of regular tensor networks in machine learning applications, in terms of representation power and architecture scalability. We conclude that regular tensor networks are in fact not competent to be the basic building blocks of deep learning. Then, we discuss the performance of HTN, which overcomes the deficiencies of regular tensor networks for machine learning. In this sense, we are able to train HTN in the standard deep learning way, using a combination of algorithms such as backpropagation and stochastic gradient descent. We finally provide two applicable cases to show the potential applications of HTN, including quantum state classification and a quantum-classical autoencoder. These cases also demonstrate the great potential for designing various HTNs in the deep learning way.
