Zardad Khan

Centroid Decision Forest

Mar 25, 2025

Abstract: This paper introduces the centroid decision forest (CDF), a novel ensemble learning framework that redefines the splitting strategy and tree building of ordinary decision trees for high-dimensional classification. The splitting approach in CDF differs from that of traditional decision trees in that the class separability score (CSS) determines the selection of the most discriminative features at each node to construct centroids of the partitions (daughter nodes). The splitting criterion uses Euclidean distance measurements from each class centroid to achieve a splitting mechanism that is more flexible and robust. Centroids are constructed by computing the mean feature values of the selected features for each class, ensuring a class-representative division of the feature space. This centroid-driven approach enables CDF to capture complex class structures while maintaining interpretability and scalability. To evaluate CDF, 23 high-dimensional datasets are used to assess its performance against different state-of-the-art classifiers through classification accuracy and Cohen's kappa statistic. The experimental results show that CDF outperforms the conventional methods, establishing its effectiveness and flexibility for high-dimensional classification problems.
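As a rough illustration of the centroid-driven split described in this abstract, the sketch below computes per-class centroids as mean feature values and routes each observation to the nearest centroid by Euclidean distance. The function names (`class_centroids`, `nearest_centroid`) and the toy data are hypothetical, and the CSS-based feature selection step is not shown; this is a minimal sketch under those assumptions, not the authors' implementation.

```python
import math
from collections import defaultdict


def class_centroids(X, y, features):
    """Mean of the selected features for each class label."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append([row[j] for j in features])
    return {
        label: [sum(col) / len(rows) for col in zip(*rows)]
        for label, rows in groups.items()
    }


def nearest_centroid(row, centroids, features):
    """Label of the centroid closest to `row` in Euclidean distance."""
    point = [row[j] for j in features]
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


# Toy data (assumed): two classes, three features. Features 0 and 2 are
# treated as the selected ones; how CSS would pick them is not modelled here.
X = [[1.0, 9.0, 1.2], [0.8, 3.0, 1.0], [5.0, 4.0, 5.5], [5.2, 8.0, 4.9]]
y = ["a", "a", "b", "b"]
selected = [0, 2]

centroids = class_centroids(X, y, selected)
# Each observation goes to the daughter node of its nearest class centroid.
partition = [nearest_centroid(row, centroids, selected) for row in X]
```

In this toy case the noisy middle feature is ignored, so every observation falls into the partition of its own class.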

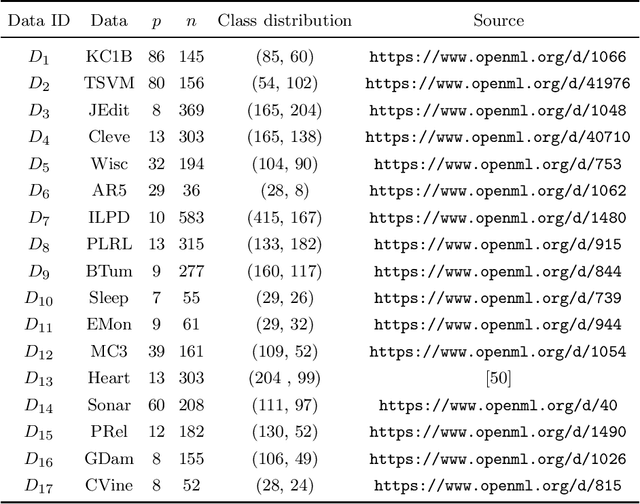

Feature Selection via Robust Weighted Score for High Dimensional Binary Class-Imbalanced Gene Expression Data

Jan 23, 2024

Abstract: In this paper, a robust weighted score for unbalanced data (ROWSU) is proposed for selecting the most discriminative features for high-dimensional gene expression binary classification with a class-imbalance problem. The method addresses one of the most challenging problems in gene expression datasets: highly skewed class distributions that adversely affect the performance of classification algorithms. First, the training dataset is balanced by synthetically generating data points from minority class observations. Second, a minimum subset of genes is selected using a greedy search approach. Third, a novel weighted robust score, where the weights are computed by support vectors, is introduced to obtain a refined set of genes. The highest-scoring genes based on this approach are combined with the minimum subset of genes selected by the greedy search approach to form the final set of genes. The novel method ensures the selection of the most discriminative genes, even in the presence of skewed class distribution, thus improving the performance of the classifiers. The performance of the proposed ROWSU method is evaluated on 6 gene expression datasets. Classification accuracy and sensitivity are used as performance metrics to compare the proposed ROWSU algorithm with several other state-of-the-art methods. Boxplots and stability plots are also constructed for a better understanding of the results. The results show that the proposed method outperforms the existing feature selection procedures based on classification performance from k nearest neighbours (kNN) and random forest (RF) classifiers.

A Random Projection k Nearest Neighbours Ensemble for Classification via Extended Neighbourhood Rule

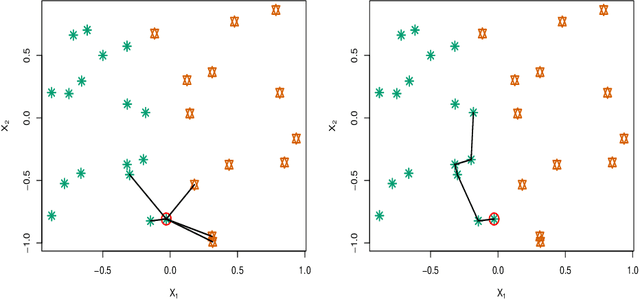

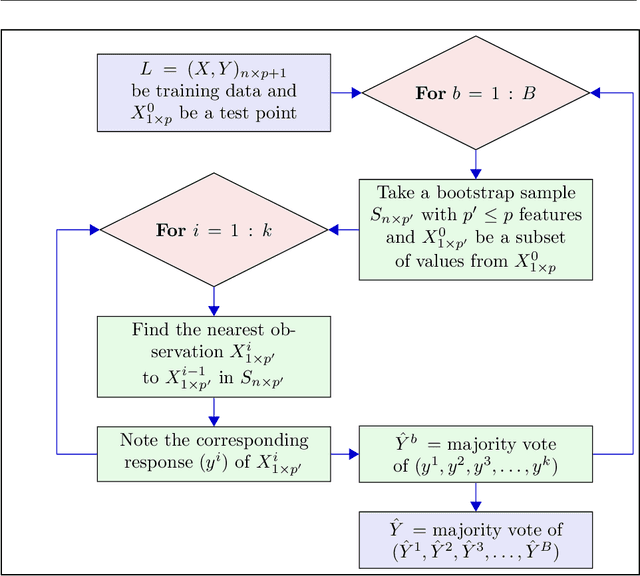

Mar 21, 2023

Abstract: Ensembles based on k nearest neighbours (kNN) combine a large number of base learners, each constructed on a sample taken from the given training data. Typical kNN based ensembles predict the class of a test sample point from the k closest training observations, which lie within a spherical region around that point. In this paper, a novel random projection extended neighbourhood rule (RPExNRule) ensemble is proposed where bootstrap samples from the given training data are randomly projected into lower dimensions for additional randomness in the base models and to preserve feature information. It uses the extended neighbourhood rule (ExNRule) to fit kNN as base learners on randomly projected bootstrap samples.
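To make the "randomly projected bootstrap sample" idea concrete, here is a minimal sketch of preparing the data for one base learner. The abstract does not state which projection scheme is used, so the Gaussian matrix scaled by 1/sqrt(d) is an assumption, and all function names and the toy data are hypothetical.

```python
import random


def bootstrap_indices(n, rng):
    """Sample n row indices with replacement."""
    return [rng.randrange(n) for _ in range(n)]


def random_projection_matrix(p, d, rng):
    """p x d matrix of Gaussian entries scaled by 1/sqrt(d) (assumed scheme)."""
    scale = d ** -0.5
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(d)] for _ in range(p)]


def project(X, R):
    """Map each p-dimensional row of X to d dimensions (matrix product X R)."""
    return [[sum(x * r for x, r in zip(row, col)) for col in zip(*R)] for row in X]


rng = random.Random(0)
X = [[float(i + j) for j in range(6)] for i in range(5)]  # 5 rows, 6 features
idx = bootstrap_indices(len(X), rng)
R = random_projection_matrix(p=6, d=2, rng=rng)
Z = project([X[i] for i in idx], R)  # projected bootstrap sample, 5 rows x 2 columns
```

Each base learner would draw its own `idx` and `R`, which is where the additional randomness across the ensemble comes from; a test point has to be projected with the same `R` before its neighbours are searched.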

An Optimal k Nearest Neighbours Ensemble for Classification Based on Extended Neighbourhood Rule with Features subspace

Nov 21, 2022

Abstract: To minimize the effect of outliers, kNN ensembles identify a set of closest observations to a new sample point to estimate its unknown class by majority voting over the labels of the training instances in the neighbourhood. Ordinary kNN based procedures determine the k closest training observations in the neighbourhood region (enclosed by a sphere) by using a distance formula. The k nearest neighbours procedure may not work in a situation where sample points in the test data follow the pattern of the nearest observations that lie on a certain path not contained in the given sphere of nearest neighbours. Furthermore, these methods combine hundreds of base kNN learners, and many of them might have high classification errors, thereby resulting in poor ensembles. To overcome these problems, an optimal extended neighbourhood rule based ensemble is proposed where the neighbours are determined in k steps. It starts from the first nearest sample point to the unseen observation. The second nearest data point is then identified as the one closest to the previously selected data point. This process is continued until the required number of k observations is obtained. Each base model in the ensemble is constructed on a bootstrap sample in conjunction with a random subset of features. After building a sufficiently large number of base models, the optimal models are then selected based on their performance on out-of-bag (OOB) data.
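The k-step neighbour search can be sketched as a chain: the first neighbour is nearest to the query, and each later neighbour is nearest to the one chosen just before it. The sketch below is a single base learner without bootstrapping, feature subsets or OOB-based model selection. Excluding already-selected points and breaking distance ties by index are assumptions, and the function names and toy data are hypothetical.

```python
import math
from collections import Counter


def extended_neighbours(X, query, k):
    """Indices of up to k training points chosen as a chain from the query."""
    remaining = set(range(len(X)))
    current = query
    chain = []
    for _ in range(min(k, len(X))):
        # Nearest unused point to the most recently selected point.
        nxt = min(remaining, key=lambda i: (math.dist(current, X[i]), i))
        chain.append(nxt)
        remaining.remove(nxt)
        current = X[nxt]
    return chain


def predict(X, y, query, k):
    """Majority vote over the labels of the chained neighbours."""
    labels = [y[i] for i in extended_neighbours(X, query, k)]
    return Counter(labels).most_common(1)[0][0]


# Toy data (assumed): class "a" lies along a path leading away from the query.
X = [[1.0, 0.0], [2.0, 0.0], [3.0, 0.0], [0.0, 2.5], [0.0, -2.5]]
y = ["a", "a", "a", "b", "b"]
query = [0.0, 0.0]

chain = extended_neighbours(X, query, k=3)
label = predict(X, y, query, k=3)
```

Here the chain follows the path through the three "a" points, whereas an ordinary 3-nearest-neighbour sphere around the query would include one of the "b" points at distance 2.5 instead of the third "a" point at distance 3.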

A k nearest neighbours classifiers ensemble based on extended neighbourhood rule and features subsets

May 30, 2022

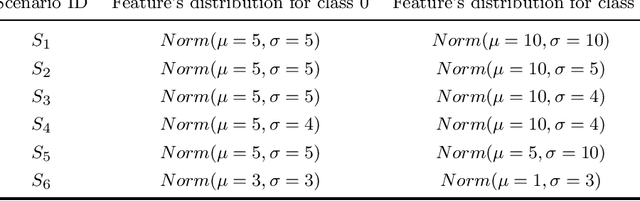

Abstract: kNN based ensemble methods minimise the effect of outliers by identifying a set of data points in the given feature space that are nearest to an unseen observation in order to predict its response by using majority voting. The ordinary ensembles based on kNN find the k nearest observations in a region (bounded by a sphere) based on a predefined value of k. This scenario, however, might not work in situations when the test observation follows the pattern of the closest data points with the same class that lie on a certain path not contained in the given sphere. This paper proposes a k nearest neighbour ensemble where the neighbours are determined in k steps. Starting from the first nearest observation of the test point, the algorithm identifies a single observation that is closest to the observation at the previous step. At each base learner in the ensemble, this search is extended to k steps on a random bootstrap sample with a random subset of features selected from the feature space. The final predicted class of the test point is determined by using a majority vote in the predicted classes given by all base models. This new ensemble method is applied to 17 benchmark datasets and compared with other classical methods, including kNN based models, in terms of classification accuracy, kappa and Brier score as performance metrics. Boxplots are also utilised to illustrate the difference in the results given by the proposed and other state-of-the-art methods. The proposed method outperformed the rest of the classical methods in the majority of cases. The paper gives a detailed simulation study for further assessment.

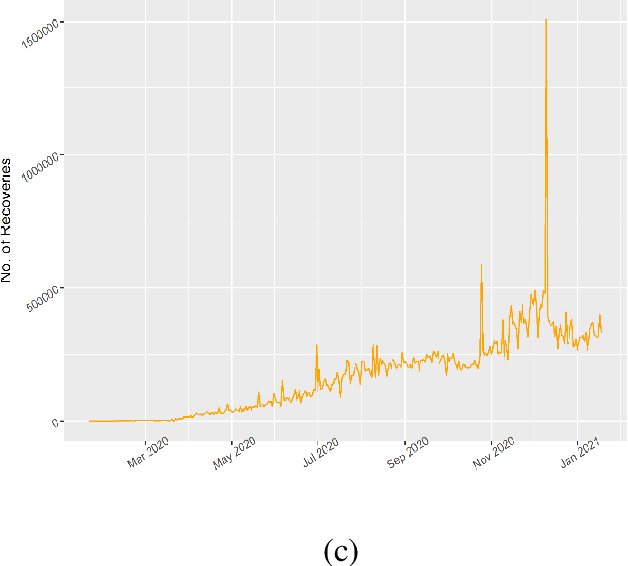

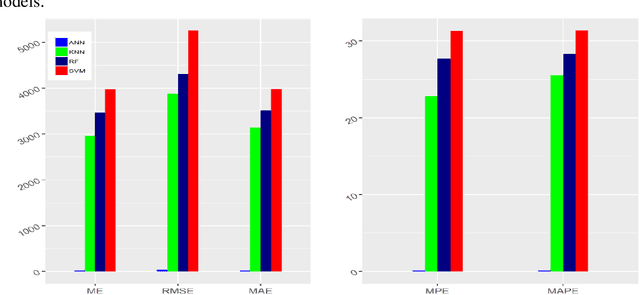

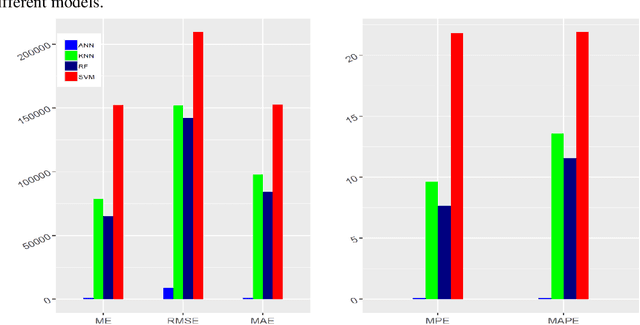

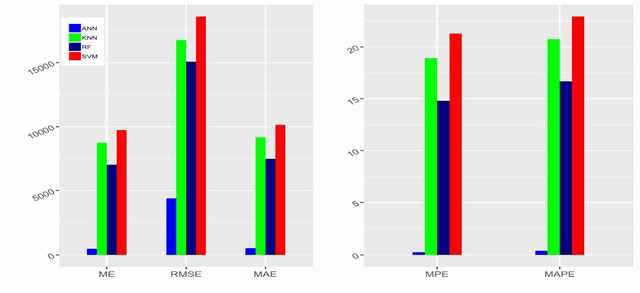

Comparative Analysis of Machine Learning Approaches to Analyze and Predict the Covid-19 Outbreak

Feb 11, 2021

Abstract: Background. Forecasting the timing of a forthcoming pandemic reduces the impact of disease by enabling precautionary steps such as public health messaging and raising awareness among doctors. With the continuous and rapid increase in the cumulative incidence of COVID-19, statistical and outbreak prediction models, including various machine learning (ML) models, are being used by the research community to track and predict the trend of the epidemic, and also to develop appropriate strategies to combat and manage its spread. Methods. In this paper, we present a comparative analysis of various ML approaches, including Support Vector Machine, Random Forest, K-Nearest Neighbor and Artificial Neural Network, in predicting the COVID-19 outbreak in the epidemiological domain. We first apply the autoregressive distributed lag (ARDL) method to identify and model the short- and long-run relationships of the time-series COVID-19 datasets. That is, we determine the lags between a response variable and its respective explanatory time series variables as independent variables. Then, the resulting significant variables, together with their lags, are used in the regression model selected by the ARDL for predicting and forecasting the trend of the epidemic. Results. Statistical measures, namely Root Mean Square Error (RMSE), Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE), are used to assess model accuracy. The values of MAPE for the best selected models for confirmed, recovered and death cases are 0.407, 0.094 and 0.124 respectively, which fall under the category of highly accurate forecasts. In addition, we computed fifteen-day-ahead forecasts for daily deaths, recovered and confirmed cases, and the cases fluctuated across time in all three categories. Furthermore, the results reveal the advantages of ML algorithms for supporting decision making on evolving short-term policies.
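For reference, the three error measures named in this abstract can be computed as in the sketch below. The abstract does not say whether MAPE is reported as a fraction or a percentage, so the percent form used here is an assumption, and the toy values are made up purely for illustration.

```python
import math


def rmse(actual, predicted):
    """Root mean square error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be non-zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative values only (not taken from the paper).
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 400.0]

scores = {
    "rmse": rmse(actual, predicted),
    "mae": mae(actual, predicted),
    "mape": mape(actual, predicted),
}
```

RMSE penalises large errors more heavily than MAE, while MAPE is scale-free, which makes it convenient for comparing series of very different magnitudes such as confirmed cases and deaths.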

Optimal trees selection for classification via out-of-bag assessment and sub-bagging

Dec 30, 2020

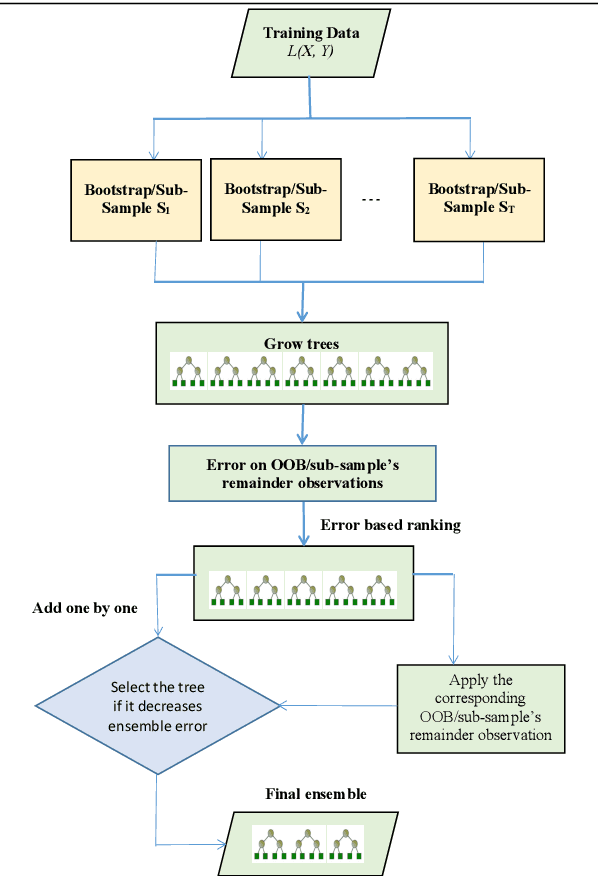

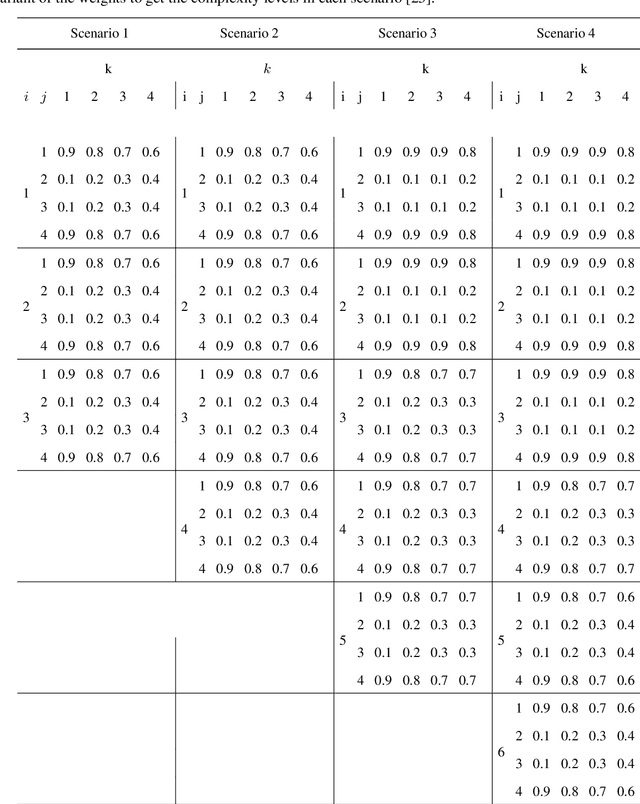

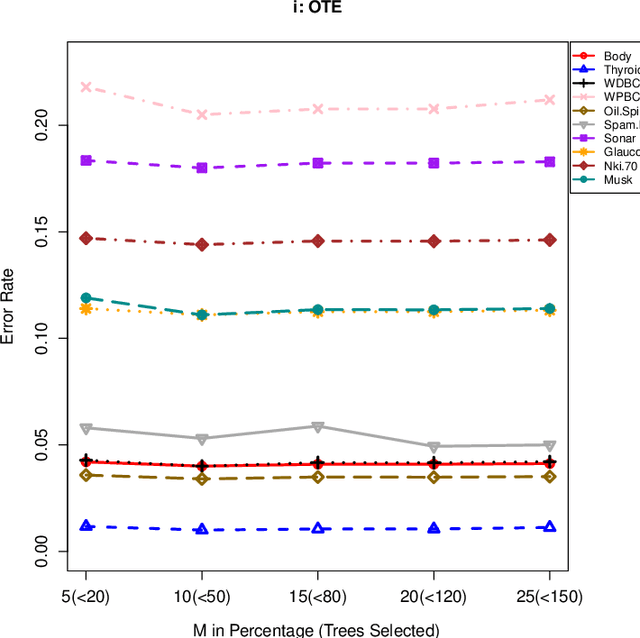

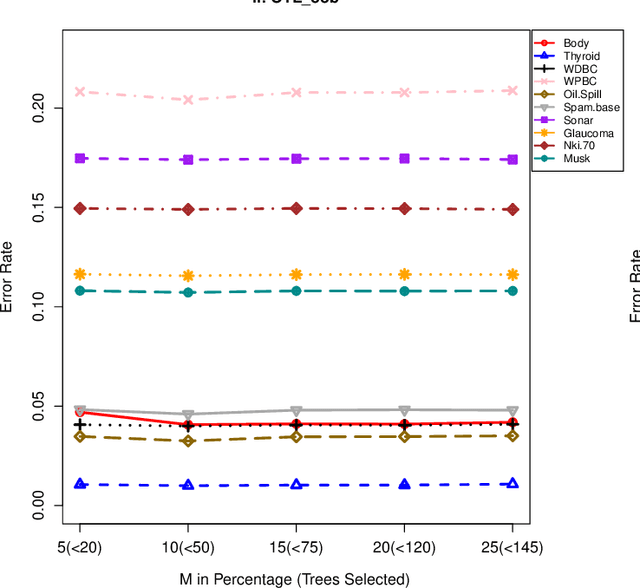

Abstract: The effect of training data size on machine learning methods has been well investigated over the past two decades. The predictive performance of tree based machine learning methods, in general, improves at a decreasing rate as the size of training data increases. We investigate this in the optimal trees ensemble (OTE), where the method fails to learn from some of the training observations due to internal validation. Modified tree selection methods are thus proposed for OTE to cater for the loss of training observations in internal validation. In the first method, corresponding out-of-bag (OOB) observations are used in both individual and collective performance assessment for each tree. Trees are ranked based on their individual performance on the OOB observations. A certain number of top ranked trees is selected and, starting from the most accurate tree, subsequent trees are added one by one and their impact is recorded by using the OOB observations left out from the bootstrap sample taken for the tree being added. A tree is selected if it improves the predictive accuracy of the ensemble. In the second approach, trees are grown on random subsets of the training data taken without replacement (known as sub-bagging) instead of bootstrap samples (taken with replacement). The remaining observations from each sample are used in both individual and collective assessments for each corresponding tree, similar to the first method. Analysis on 21 benchmark datasets and simulation studies show improved performance of the modified methods in comparison to OTE and other state-of-the-art methods.
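The first selection method can be sketched as a rank-then-add loop. In the sketch below each tree is a hypothetical record holding its class-1 probability for every training observation (`prob`) and the indices left out of its bootstrap sample (`oob`). Using the Brier score of averaged probabilities as the performance measure is an assumption made for illustration (the abstract speaks only of predictive accuracy), and the toy numbers are made up.

```python
def brier(trees, indices, y):
    """Brier score of the averaged class-1 probabilities on `indices`."""
    total = 0.0
    for i in indices:
        p = sum(t["prob"][i] for t in trees) / len(trees)
        total += (p - y[i]) ** 2
    return total / len(indices)


def select_trees(trees, y, top):
    """Rank trees by their own OOB score, then add them greedily if they help."""
    ranked = sorted(trees, key=lambda t: brier([t], t["oob"], y))[:top]
    ensemble = [ranked[0]]  # start from the best individual tree
    for cand in ranked[1:]:
        # Assess the impact on the candidate's own OOB observations.
        before = brier(ensemble, cand["oob"], y)
        after = brier(ensemble + [cand], cand["oob"], y)
        if after < before:  # lower Brier score is better
            ensemble.append(cand)
    return ensemble


# Toy binary problem (assumed): four training observations, three trees.
y = [0, 1, 0, 1]
trees = [
    {"name": "A", "prob": [0.1, 0.9, 0.6, 0.8], "oob": [0, 1]},
    {"name": "B", "prob": [0.2, 0.7, 0.2, 0.9], "oob": [2, 3]},
    {"name": "C", "prob": [0.9, 0.2, 0.8, 0.3], "oob": [0, 3]},
]

chosen = [t["name"] for t in select_trees(trees, y, top=3)]
```

In this toy case tree B corrects tree A on observation 2 and is kept, while tree C worsens the ensemble on its OOB observations and is dropped. For the sub-bagging variant, `oob` would simply hold the observations not drawn into each tree's subsample.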

Optimal survival trees ensemble

May 18, 2020

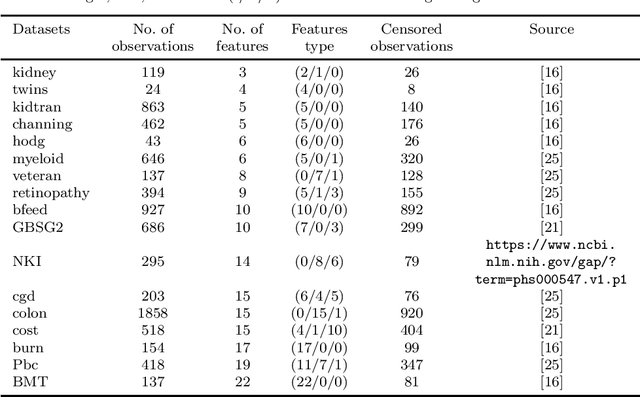

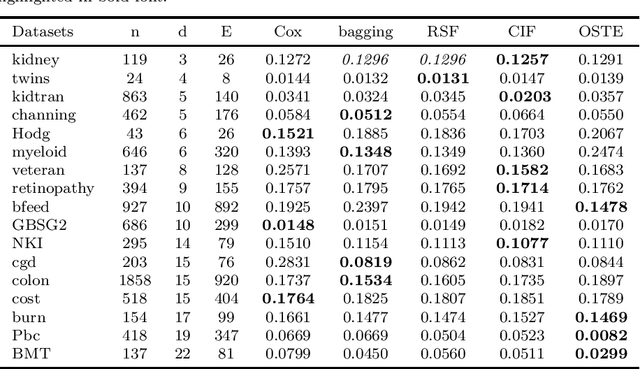

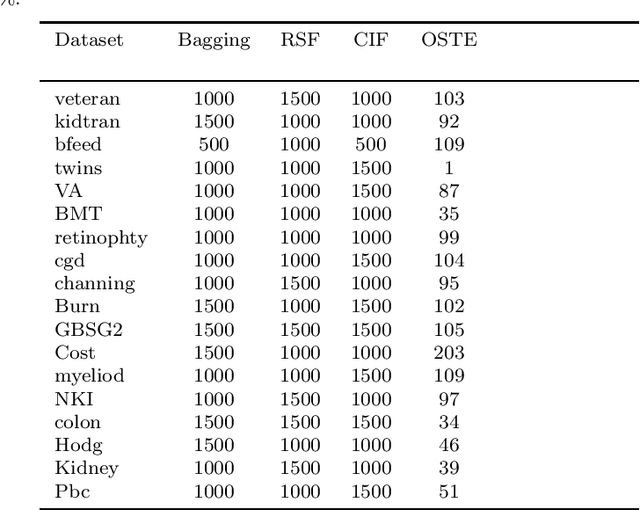

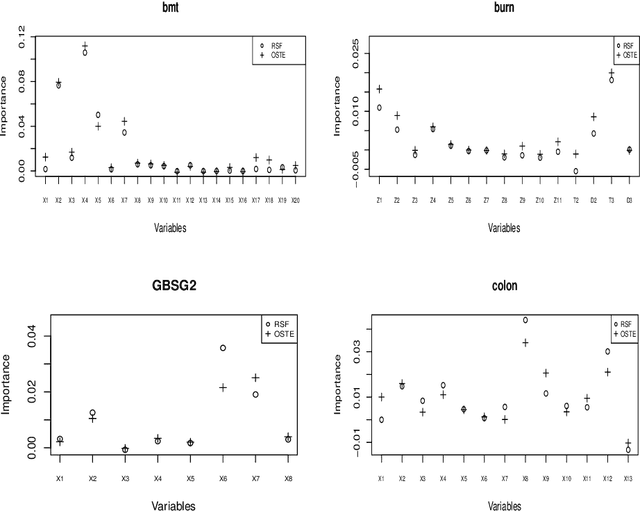

Abstract: Recent studies have adopted an approach of selecting accurate and diverse trees based on individual or collective performance within an ensemble for classification and regression problems. This work follows in the wake of these investigations and considers the possibility of growing a forest of optimal survival trees. Initially, a large set of survival trees is grown using the method of random survival forest. The grown trees are then ranked from lowest to highest prediction error using out-of-bag observations for each respective survival tree. The top ranked survival trees are then assessed for their collective performance as an ensemble. This ensemble is initiated with the survival tree which stands first in rank, then further trees are tested one by one by adding them to the ensemble in order of rank. A survival tree is selected for the resultant ensemble if the performance improves after an assessment using independent training data. This ensemble is called an optimal survival trees ensemble (OSTE). The proposed method is assessed using 17 benchmark datasets and the results are compared with those of random survival forest, conditional inference forest, bagging and a non-tree-based method, the Cox proportional hazard model. In addition to improving predictive performance, the proposed method reduces the number of survival trees in the ensemble as compared to the other tree based methods. The method is implemented in an R package called "OSTE".
