Nosheen Faiz

Optimal trees selection for classification via out-of-bag assessment and sub-bagging

Dec 30, 2020

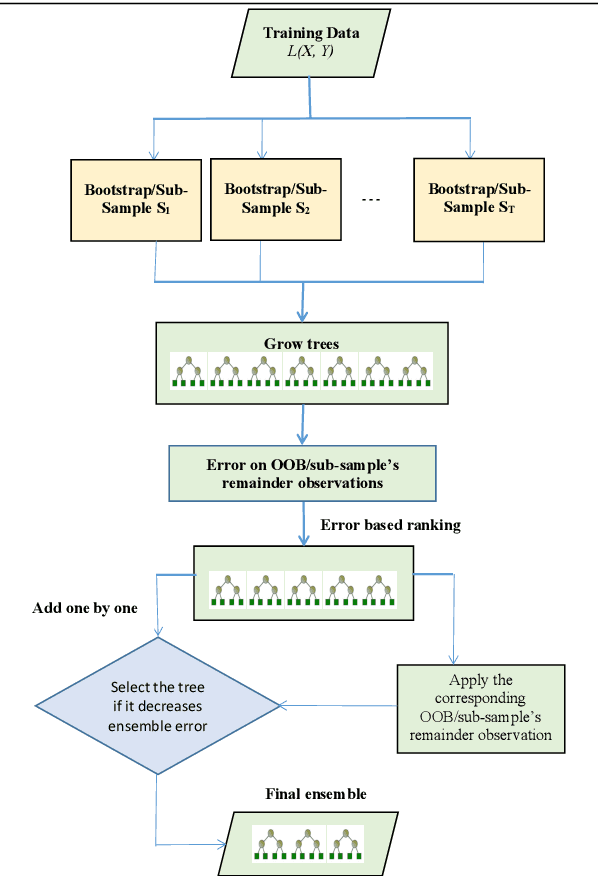

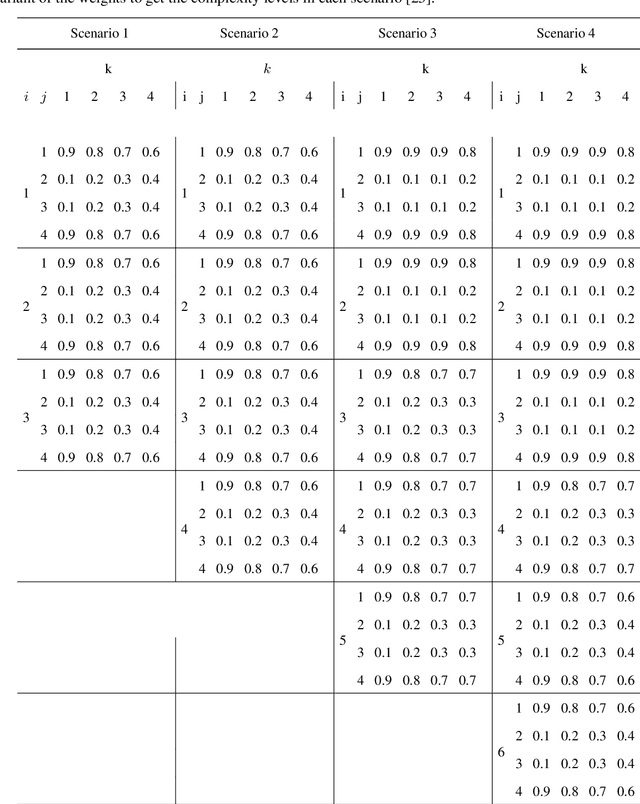

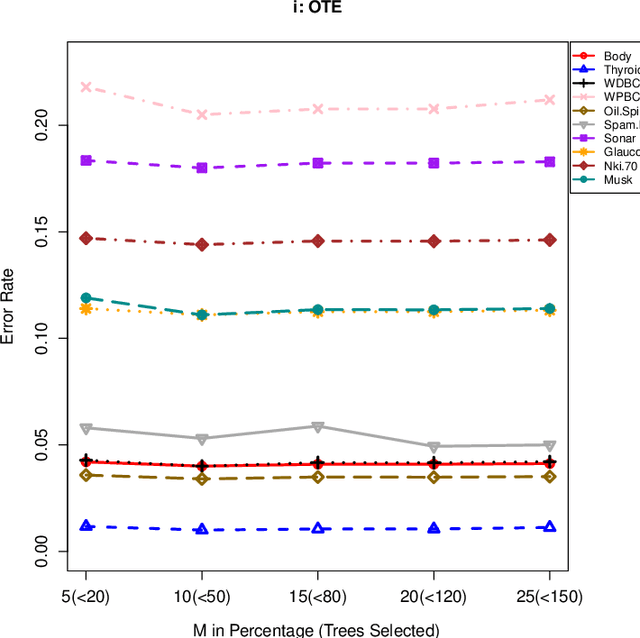

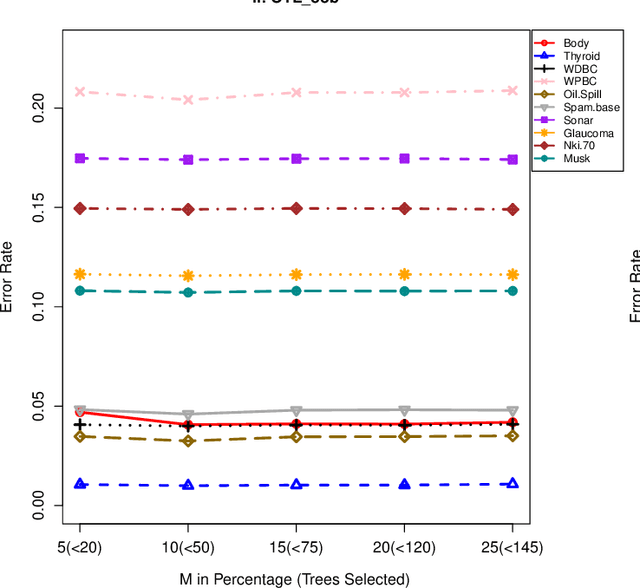

Abstract: The effect of training data size on machine learning methods has been well investigated over the past two decades. The predictive performance of tree-based machine learning methods generally improves, at a decreasing rate, as the size of the training data increases. We investigate this in the optimal trees ensemble (OTE), where the method fails to learn from some of the training observations because they are held out for internal validation. Modified tree selection methods are therefore proposed for OTE to compensate for the loss of training observations in internal validation. In the first method, the corresponding out-of-bag (OOB) observations are used in both individual and collective performance assessment for each tree. Trees are ranked by their individual performance on the OOB observations. A certain number of top-ranked trees is selected and, starting from the most accurate tree, subsequent trees are added one by one; the impact of each is recorded using the OOB observations left out of the bootstrap sample taken for the tree being added. A tree is selected if it improves the predictive accuracy of the ensemble. In the second approach, trees are grown on random subsets of the training data taken without replacement (known as sub-bagging) instead of on bootstrap samples (taken with replacement). The observations left out of each subset are used in both individual and collective assessment of the corresponding tree, as in the first method. Analysis of 21 benchmark datasets and simulation studies shows improved performance of the modified methods in comparison to OTE and other state-of-the-art methods.
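The selection procedure described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function names `majority_vote` and `select_trees_oob`, the `top_fraction` parameter, majority voting as the ensemble rule, and the input layout (a matrix of per-tree class predictions plus a boolean in-bag mask) are all assumptions made for the example. The same code applies to the sub-bagging variant, since only the way the in-bag mask is drawn changes.

```python
import numpy as np

def majority_vote(votes, n_classes):
    """Column-wise majority vote over a (n_trees, n_obs) array of integer labels.
    Ties go to the lowest class label (an arbitrary choice for this sketch)."""
    counts = np.stack([(votes == c).sum(axis=0) for c in range(n_classes)])
    return counts.argmax(axis=0)

def select_trees_oob(preds, inbag, y, top_fraction=0.2):
    """Hypothetical sketch of OOB-based tree selection.

    preds : (n_trees, n_obs) int array, class predicted by each tree for each observation
    inbag : (n_trees, n_obs) bool array, True where the observation was used to grow the tree
            (bootstrap sample or sub-bagging subset)
    y     : (n_obs,) int array of true labels coded 0..K-1
    Assumes every tree has at least one out-of-bag observation.
    """
    n_trees = preds.shape[0]
    n_classes = int(y.max()) + 1
    oob = ~inbag

    # Individual assessment: error of each tree on its own OOB observations.
    errors = np.array([np.mean(preds[t, oob[t]] != y[oob[t]]) for t in range(n_trees)])

    # Rank trees and keep the top-ranked candidates.
    order = np.argsort(errors, kind="stable")
    n_top = max(1, int(round(top_fraction * n_trees)))
    candidates = order[:n_top]

    # Collective assessment: add trees one by one, judged on the OOB
    # observations of the tree being added.
    selected = [int(candidates[0])]
    for t in candidates[1:]:
        idx = oob[t]
        current = majority_vote(preds[selected][:, idx], n_classes)
        trial = majority_vote(preds[selected + [int(t)]][:, idx], n_classes)
        if np.mean(trial != y[idx]) < np.mean(current != y[idx]):
            selected.append(int(t))
    return selected
```

In practice the prediction matrix would come from trees grown on the in-bag observations; it is passed in directly here to keep the sketch independent of any particular tree library.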

Optimal survival trees ensemble

May 18, 2020

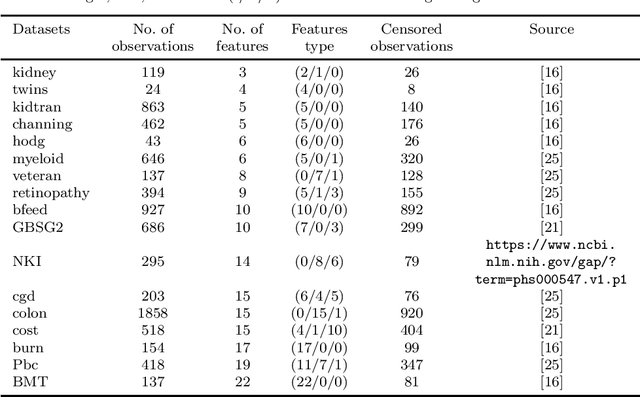

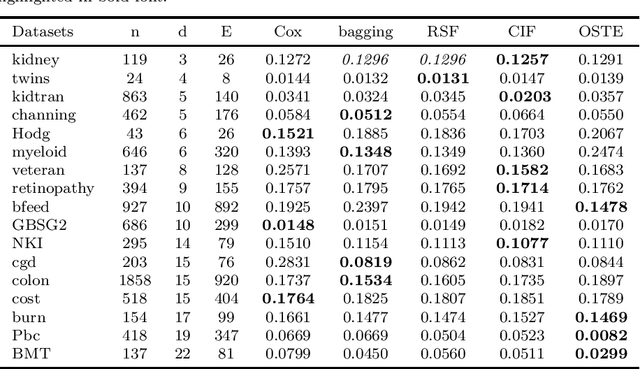

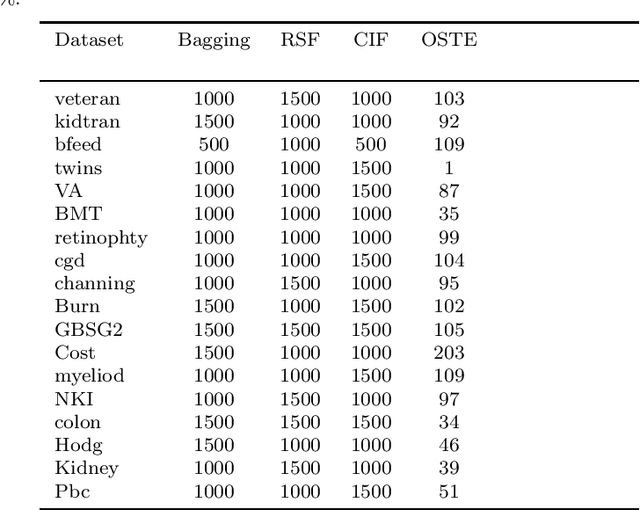

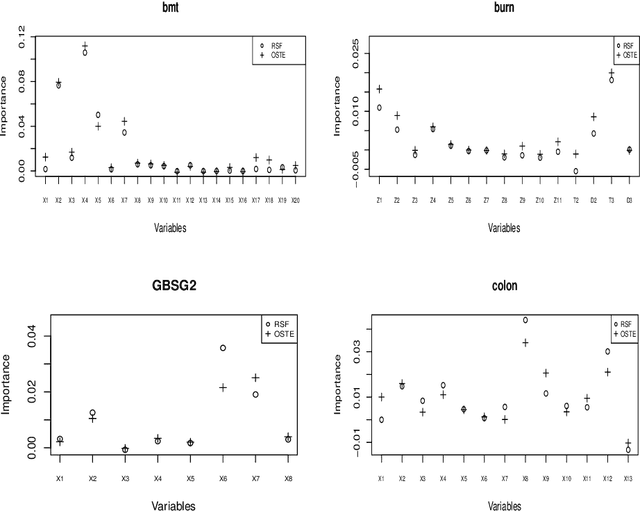

Abstract: Recent studies have adopted an approach of selecting accurate and diverse trees based on individual or collective performance within an ensemble for classification and regression problems. This work follows in the wake of these investigations and considers the possibility of growing a forest of optimal survival trees. Initially, a large set of survival trees is grown using the random survival forest method. The grown trees are then ranked from smallest to largest prediction error, computed on the out-of-bag observations of each respective survival tree. The top-ranked survival trees are then assessed for their collective performance as an ensemble. The ensemble is initiated with the first-ranked survival tree, and further trees are then tested one by one by adding them to the ensemble in order of rank. A survival tree is selected for the resultant ensemble if performance improves when assessed on independent training data. This ensemble is called an optimal survival trees ensemble (OSTE). The proposed method is assessed using 17 benchmark datasets, and the results are compared with those of random survival forest, conditional inference forest, bagging, and a non-tree-based method, the Cox proportional hazards model. In addition to improving predictive performance, the proposed method reduces the number of survival trees in the ensemble compared to the other tree-based methods. The method is implemented in an R package called "OSTE".
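The rank-then-add procedure for survival trees might look roughly like the sketch below. It is an illustration under stated assumptions rather than the OSTE package's code: the abstract does not name the error measure, so one minus Harrell's concordance index is swapped in here; the ensemble prediction is assumed to be the mean of per-tree risk scores; and the names `concordance_index`, `select_survival_trees`, `oob_error`, `val_risk`, and `n_top` are hypothetical.

```python
import numpy as np

def concordance_index(time, event, risk):
    """Harrell's C: share of comparable pairs in which the subject who fails
    earlier has the higher risk score (ties in risk count one half).
    Assumes at least one comparable pair exists."""
    n_conc, n_pairs = 0.0, 0
    for i in range(len(time)):
        if not event[i]:
            continue  # a censored subject cannot anchor a comparable pair
        later = time > time[i]
        n_pairs += int(later.sum())
        n_conc += np.sum(risk[i] > risk[later]) + 0.5 * np.sum(risk[i] == risk[later])
    return n_conc / n_pairs

def select_survival_trees(oob_error, val_risk, val_time, val_event, n_top):
    """Hypothetical sketch of ranking and forward selection.

    oob_error : (n_trees,) prediction error of each tree on its own OOB observations
    val_risk  : (n_trees, n_val) risk score of each tree for each independent observation
    val_time, val_event : follow-up times and event indicators of the independent data
    """
    # Rank trees from smallest to largest OOB error and keep the top n_top.
    order = np.argsort(oob_error, kind="stable")[:n_top]

    # Start with the best tree, then add a tree only if the ensemble error drops.
    selected = [int(order[0])]
    best = 1.0 - concordance_index(val_time, val_event, val_risk[selected].mean(axis=0))
    for t in order[1:]:
        trial = selected + [int(t)]
        err = 1.0 - concordance_index(val_time, val_event, val_risk[trial].mean(axis=0))
        if err < best:
            selected, best = trial, err
    return selected
```

Because a tree is kept only when it lowers the ensemble error, the final ensemble is typically smaller than the candidate pool, which matches the reduction in ensemble size reported in the abstract.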
