Zailei Zhou

Faster Video Moment Retrieval with Point-Level Supervision

May 23, 2023

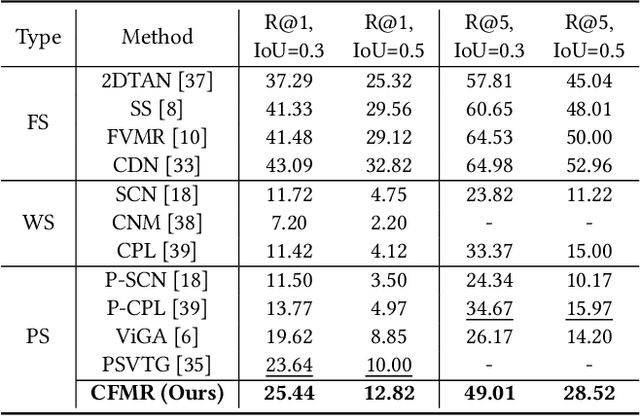

Abstract: Video Moment Retrieval (VMR) aims to retrieve the most relevant events from an untrimmed video given natural language queries. Existing VMR methods suffer from two defects: (1) large numbers of expensive temporal annotations are required to obtain satisfactory performance; (2) complicated cross-modal interaction modules are deployed, which leads to high computational cost and low efficiency in the retrieval process. To address these issues, we propose a novel method termed Cheaper and Faster Moment Retrieval (CFMR), which balances retrieval accuracy, efficiency, and annotation cost for VMR. Specifically, our proposed CFMR method learns from point-level supervision, where each annotation is a single frame randomly located within the target moment. This is 6 times cheaper than conventional annotation of event boundaries. Furthermore, we design a concept-based multimodal alignment mechanism that bypasses cross-modal interaction modules during inference, remarkably improving retrieval efficiency. Experimental results on three widely used VMR benchmarks demonstrate that the proposed CFMR method establishes a new state of the art with point-level supervision. Moreover, it significantly accelerates retrieval, requiring more than 100 times fewer FLOPs than existing approaches with point-level supervision.
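The efficiency argument in this abstract, skipping cross-modal interaction at inference time, can be illustrated with a generic sketch. This is not the CFMR concept-based alignment itself, whose details the abstract does not give. It only assumes that candidate moments and the query have already been embedded independently into one shared space, so ranking reduces to a similarity lookup. The names `cosine`, `rank_moments`, and `moment_index`, and the toy vectors, are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_moments(query_vec, moment_index):
    """Rank candidate moments by similarity to the query embedding.

    moment_index maps (start_sec, end_sec) spans to embeddings that are
    assumed to be precomputed offline, so no joint video-text module has
    to run when a query arrives.
    """
    scored = [(cosine(query_vec, vec), span) for span, vec in moment_index.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [span for _, span in scored]


# Toy 2-d embeddings, purely illustrative.
moment_index = {
    (0.0, 5.0): [1.0, 0.0],
    (5.0, 12.0): [0.0, 1.0],
    (12.0, 20.0): [0.7, 0.7],
}
ranking = rank_moments([0.1, 0.9], moment_index)
```

Because the moment embeddings do not depend on the query, the per-query cost is one text encoding plus a similarity computation per candidate, which is the general reason such designs are cheaper than running an interaction module on every video-query pair.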

Balancing Multi-level Interactions for Session-based Recommendation

Oct 29, 2019

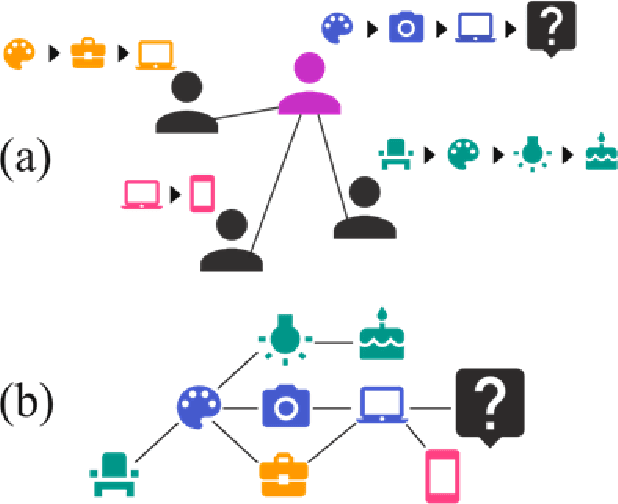

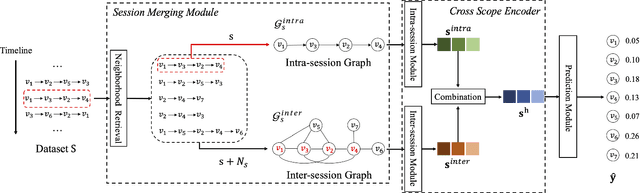

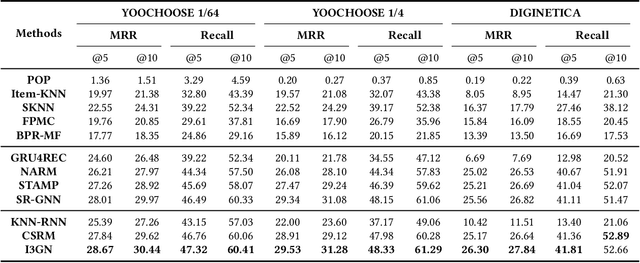

Abstract: Predicting user actions based on anonymous sessions is a challenge for general recommendation systems because the lack of user profiles heavily limits data-driven models. Recently, session-based recommendation methods have achieved remarkable results on this task. However, the upper bound of performance can still be raised through innovative exploration of the limited data. In this paper, we propose a novel method, namely the Intra- and Inter-session Interaction-aware Graph-enhanced Network, to take inter-session item-level interactions into account. Unlike existing intra-session item-level interactions and session-level collaborative information, the data we introduce represents complex item-level interactions between different sessions. To mine the new data without breaking the model's equilibrium between different interactions, we construct an intra-session graph and an inter-session graph for the current session. The former focuses on item-level interactions within a single session, and the latter models those between items among neighborhood sessions. Different approaches are then employed to encode the information of the two graphs according to their different structures, and the generated latent vectors are combined to balance the model across different scopes. Experiments on real-world datasets verify that our method outperforms other state-of-the-art methods.
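The two graphs described in this abstract can be pictured with a minimal sketch. The abstract does not specify how edges are weighted or how neighborhood sessions are chosen, so everything here is an assumption: edges are counted transitions between consecutive items, neighbors are picked by Jaccard overlap of item sets, and the function names and the parameter `k` are hypothetical.

```python
from collections import defaultdict


def build_intra_session_graph(session):
    """Directed edges between consecutive items of one session, with counts."""
    edges = defaultdict(int)
    for src, dst in zip(session, session[1:]):
        edges[(src, dst)] += 1
    return dict(edges)


def find_neighbor_sessions(session, history, k=2):
    """Pick up to k past sessions with the highest Jaccard overlap (assumed rule)."""
    current = set(session)
    scored = []
    for other in history:
        other_set = set(other)
        union = current | other_set
        overlap = len(current & other_set) / len(union) if union else 0.0
        if overlap > 0:
            scored.append((overlap, other))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [other for _, other in scored[:k]]


def build_inter_session_graph(session, neighbors):
    """Transitions inside neighbor sessions that touch an item of the current session."""
    current = set(session)
    edges = defaultdict(int)
    for other in neighbors:
        for src, dst in zip(other, other[1:]):
            if src in current or dst in current:
                edges[(src, dst)] += 1
    return dict(edges)


session = [1, 2, 3, 2]
history = [[2, 3, 4], [5, 6], [1, 2, 5]]
intra = build_intra_session_graph(session)
neighbors = find_neighbor_sessions(session, history)
inter = build_inter_session_graph(session, neighbors)
```

In this toy run the inter-session graph contains edges such as `(3, 4)` and `(2, 5)`, which link items of the current session to items it never visited. That is the kind of cross-session, item-level signal the abstract says the intra-session graph alone cannot provide.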
