Zachary Kaden

In-training Matrix Factorization for Parameter-frugal Neural Machine Translation

Sep 27, 2019

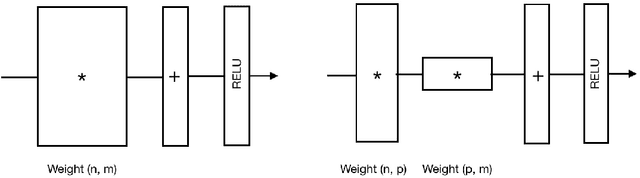

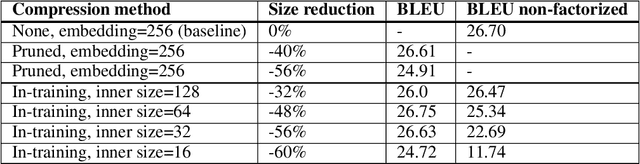

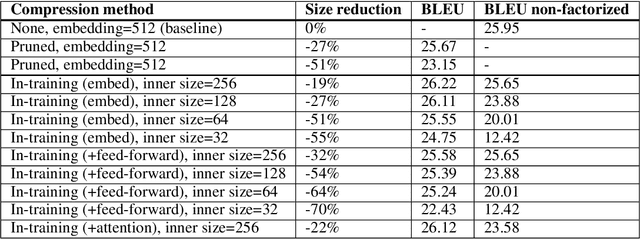

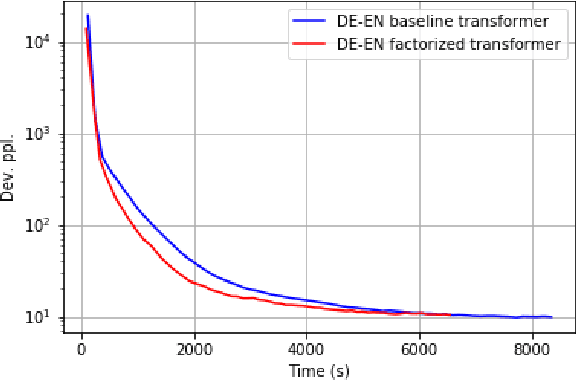

Abstract: In this paper, we propose the use of in-training matrix factorization to reduce the model size for neural machine translation. Using in-training matrix factorization, parameter matrices may be decomposed into the products of smaller matrices, which can compress large machine translation architectures by vastly reducing the number of learnable parameters. We apply in-training matrix factorization to different layers of standard neural architectures and show that in-training factorization is capable of reducing nearly 50% of learnable parameters without any associated loss in BLEU score. Further, we find that in-training matrix factorization is especially powerful on embedding layers, providing a simple and effective method to curtail the number of parameters with minimal impact on model performance, and, at times, an increase in performance.
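To make the parameter savings concrete, here is a minimal sketch of how factorizing an embedding matrix changes the parameter count. The vocabulary size, embedding dimension, and rank below are hypothetical values chosen for illustration, not figures from the paper, and the helper names are assumptions of this sketch rather than the authors' code.

```python
# Illustrative sketch only: sizes and names are assumptions, not the paper's setup.

def dense_params(rows: int, cols: int) -> int:
    """Parameters in a single rows x cols matrix."""
    return rows * cols


def factorized_params(rows: int, cols: int, rank: int) -> int:
    """Parameters when the matrix is stored as (rows x rank) @ (rank x cols)."""
    return rows * rank + rank * cols


def embed(token_id: int, a: list[list[float]], b: list[list[float]]) -> list[float]:
    """Look up one embedding from the factors: row `token_id` of A, times B."""
    row = a[token_id]
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(row))) for j in range(cols)]


# Hypothetical sizes: 32,000-word vocabulary, 512-dim embeddings, rank 128.
vocab, dim, rank = 32_000, 512, 128
full = dense_params(vocab, dim)
small = factorized_params(vocab, dim, rank)
print(f"dense: {full:,}  factorized: {small:,}  ratio: {small / full:.3f}")

# Tiny worked lookup with a 3 x 2 factor A and a 2 x 4 factor B.
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
B = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
vector = embed(2, A, B)
```

The factorized form only saves parameters when the rank is small relative to both matrix dimensions, which is why a tall embedding matrix (large vocabulary, modest embedding size) is a natural candidate.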

Tartan: A retrieval-based socialbot powered by a dynamic finite-state machine architecture

Dec 04, 2018

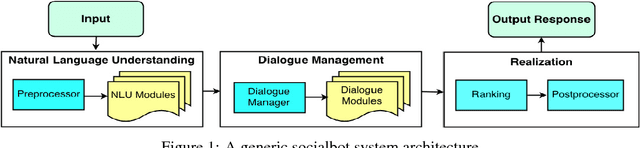

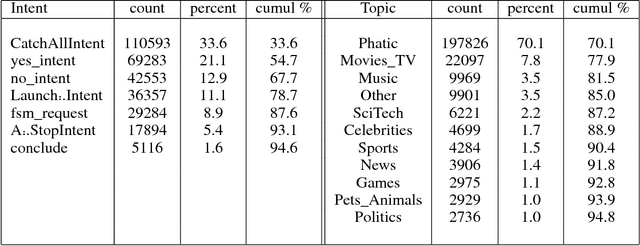

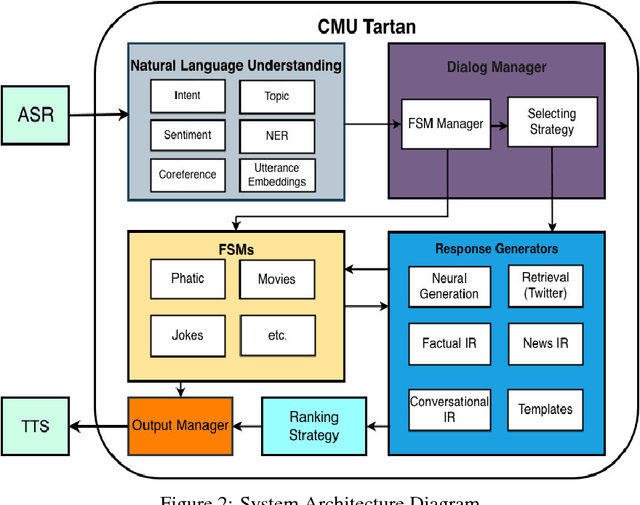

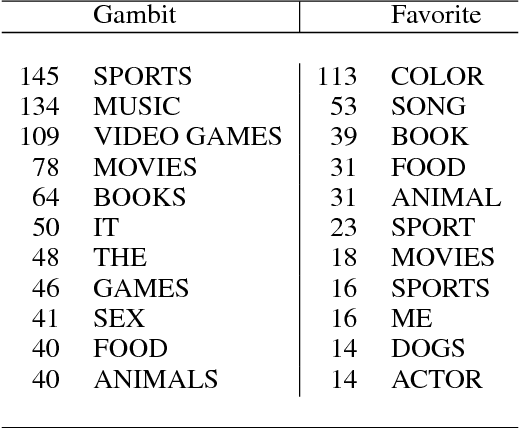

Abstract: This paper describes the Tartan conversational agent built for the 2018 Alexa Prize Competition. Tartan is a non-goal-oriented socialbot focused on providing users with an engaging and fluent casual conversation. Tartan's key features include an emphasis on structured conversation based on flexible finite-state models and an approach focused on understanding and using conversational acts. To provide engaging conversations, Tartan blends script-like yet dynamic responses with data-based generative and retrieval models. Unique to Tartan is that our dialog manager is modeled as a dynamic Finite State Machine. To our knowledge, no other conversational agent implementation has followed this specific structure.

Horizon: Facebook's Open Source Applied Reinforcement Learning Platform

Nov 01, 2018

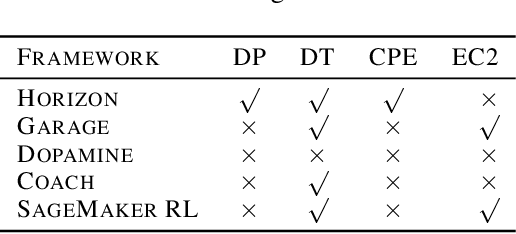

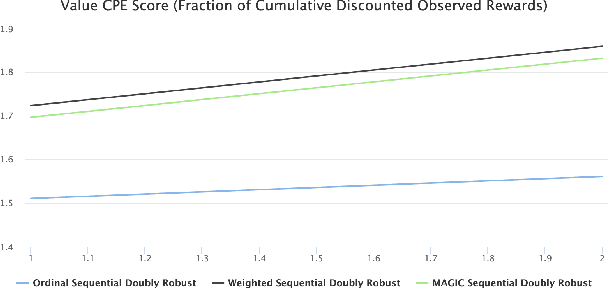

Abstract: In this paper we present Horizon, Facebook's open source applied reinforcement learning (RL) platform. Horizon is an end-to-end platform designed to solve industry applied RL problems where datasets are large (millions to billions of observations), the feedback loop is slow (vs. a simulator), and experiments must be done with care because they don't run in a simulator. Unlike other RL platforms, which are often designed for fast prototyping and experimentation, Horizon is designed with production use cases top of mind. The platform contains workflows to train popular deep RL algorithms and includes data preprocessing, feature transformation, distributed training, counterfactual policy evaluation, and optimized serving. We also showcase real examples of where models trained with Horizon significantly outperformed and replaced supervised learning systems at Facebook.

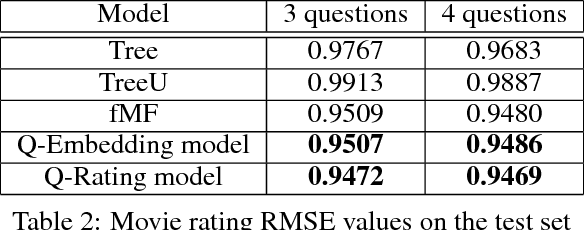

Handling Cold-Start Collaborative Filtering with Reinforcement Learning

Jun 16, 2018

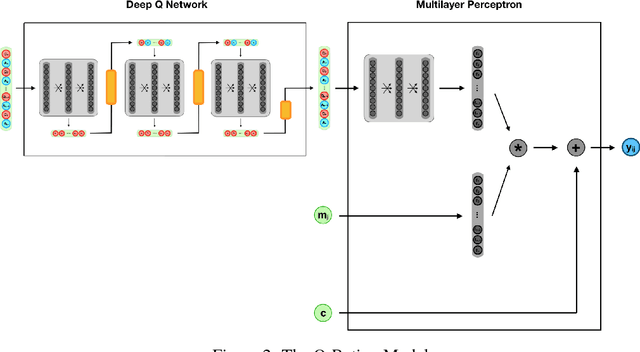

Abstract: A major challenge in recommender systems is handling new users, who are also called $\textit{cold-start}$ users. In this paper, we propose a novel approach for learning an optimal series of questions with which to interview cold-start users for movie recommender systems. We propose learning interview questions using Deep Q Networks to create user profiles to make better recommendations to cold-start users. While our proposed system is trained using a movie recommender system, our Deep Q Network model should generalize across various types of recommender systems.
