Yuval Pinter

Ben-Gurion University of the Negev

The Effect of Scripts and Formats on LLM Numeracy

Jan 21, 2026

Abstract:Large language models (LLMs) have achieved impressive proficiency in basic arithmetic, rivaling human-level performance on standard numerical tasks. However, little attention has been given to how these models perform when numerical expressions deviate from the prevailing conventions present in their training corpora. In this work, we investigate numerical reasoning across a wide range of numeral scripts and formats. We show that LLM accuracy drops substantially when numerical inputs are rendered in underrepresented scripts or formats, despite the underlying mathematical reasoning being identical. We further demonstrate that targeted prompting strategies, such as few-shot prompting and explicit numeral mapping, can greatly narrow this gap. Our findings highlight an overlooked challenge in multilingual numerical reasoning and provide actionable insights for working with LLMs to reliably interpret, manipulate, and generate numbers across diverse numeral scripts and formatting styles.
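
As a rough illustration of the digit correspondence that an explicit numeral mapping would spell out, the sketch below converts decimal digits from other scripts to ASCII digits using Python's standard `unicodedata` module. The function name `to_ascii_digits` is hypothetical, and this is not the prompting method from the paper, whose prompts are not reproduced here. It covers only positional decimal digits, not alternative number formats or non-positional numeral systems.

```python
import unicodedata


def to_ascii_digits(text: str) -> str:
    """Replace every Unicode decimal digit with its ASCII counterpart.

    Hypothetical helper for illustration only. Characters that have no
    decimal value (letters, operators, superscripts, spaces) pass through.
    """
    out = []
    for ch in text:
        # unicodedata.decimal returns the digit value for decimal-digit
        # characters in any script, or the given default otherwise.
        value = unicodedata.decimal(ch, None)
        out.append(str(value) if value is not None else ch)
    return "".join(out)


# Arabic-Indic digits one, two, three followed by Devanagari four, five.
arabic_indic = "\u0661\u0662\u0663"
devanagari = "\u096a\u096b"
expression = arabic_indic + " + " + devanagari
print(to_ascii_digits(expression))
```

The same table could equally be written into a prompt as plain text; the code only makes the one-to-one correspondence between scripts concrete.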

Which Pieces Does Unigram Tokenization Really Need?

Dec 14, 2025

Abstract:The Unigram tokenization algorithm offers a probabilistic alternative to the greedy heuristics of Byte-Pair Encoding. Despite its theoretical elegance, its implementation in practice is complex, limiting its adoption to the SentencePiece package and adapters thereof. We bridge this gap between theory and practice by providing a clear guide to implementation and parameter choices. We also identify a simpler algorithm that accepts slightly higher training loss in exchange for improved compression.

Hebrew Diacritics Restoration using Visual Representation

Oct 30, 2025

Abstract:Diacritics restoration in Hebrew is a fundamental task for ensuring accurate word pronunciation and disambiguating textual meaning. Despite the language's high degree of ambiguity when unvocalized, recent machine learning approaches have significantly advanced performance on this task. In this work, we present DIVRIT, a novel system for Hebrew diacritization that frames the task as a zero-shot classification problem. Our approach operates at the word level, selecting the most appropriate diacritization pattern for each undiacritized word from a dynamically generated candidate set, conditioned on the surrounding textual context. A key innovation of DIVRIT is its use of a Hebrew Visual Language Model, which processes undiacritized text as an image, allowing diacritic information to be embedded directly within the input's vector representation. Through a comprehensive evaluation across various configurations, we demonstrate that the system effectively performs diacritization without relying on complex, explicit linguistic analysis. Notably, in an "oracle" setting where the correct diacritized form is guaranteed to be among the provided candidates, DIVRIT achieves a high level of accuracy. Furthermore, strategic architectural enhancements and optimized training methodologies yield significant improvements in the system's overall generalization capabilities. These findings highlight the promising potential of visual representations for accurate and automated Hebrew diacritization.

Probing Subphonemes in Morphology Models

May 16, 2025

Abstract:Transformers have achieved state-of-the-art performance in morphological inflection tasks, yet their ability to generalize across languages and morphological rules remains limited. One possible explanation for this behavior can be the degree to which these models are able to capture implicit phenomena at the phonological and subphonemic levels. We introduce a language-agnostic probing method to investigate phonological feature encoding in transformers trained directly on phonemes, and perform it across seven morphologically diverse languages. We show that phonological features which are local, such as final-obstruent devoicing in Turkish, are captured well in phoneme embeddings, whereas long-distance dependencies like vowel harmony are better represented in the transformer's encoder. Finally, we discuss how these findings inform empirical strategies for training morphological models, particularly regarding the role of subphonemic feature acquisition.

Boundless Byte Pair Encoding: Breaking the Pre-tokenization Barrier

Mar 31, 2025

Abstract:Pre-tokenization, the initial step in many modern tokenization pipelines, segments text into smaller units called pretokens, typically splitting on whitespace and punctuation. While this process encourages having full, individual words as tokens, it introduces a fundamental limitation in most tokenization algorithms such as Byte Pair Encoding (BPE). Specifically, pre-tokenization causes the distribution of tokens in a corpus to heavily skew towards common, full-length words. This skewed distribution limits the benefits of expanding to larger vocabularies, since the additional tokens appear with progressively lower counts. To overcome this barrier, we propose BoundlessBPE, a modified BPE algorithm that relaxes the pretoken boundary constraint. Our approach selectively merges two complete pretokens into a larger unit we term a superword. Superwords are not necessarily semantically cohesive. For example, the pretokens " of" and " the" might be combined to form the superword " of the". This merging strategy results in a substantially more uniform distribution of tokens across a corpus than standard BPE, and compresses text more effectively, with an approximate 20% increase in bytes per token.

Splintering Nonconcatenative Languages for Better Tokenization

Mar 18, 2025

Abstract:Common subword tokenization algorithms like BPE and UnigramLM assume that text can be split into meaningful units by concatenative measures alone. This is not true for languages such as Hebrew and Arabic, where morphology is encoded in root-template patterns, or Malay and Georgian, where split affixes are common. We present SPLINTER, a pre-processing step which rearranges text into a linear form that better represents such nonconcatenative morphologies, enabling meaningful contiguous segments to be found by the tokenizer. We demonstrate SPLINTER's merit using both intrinsic measures evaluating token vocabularies in Hebrew, Arabic, and Malay; as well as on downstream tasks using BERT-architecture models trained for Hebrew.

Token-Level Privacy in Large Language Models

Mar 05, 2025

Abstract:The use of language models as remote services requires transmitting private information to external providers, raising significant privacy concerns. This process not only risks exposing sensitive data to untrusted service providers but also leaves it vulnerable to interception by eavesdroppers. Existing privacy-preserving methods for natural language processing (NLP) interactions primarily rely on semantic similarity, overlooking the role of contextual information. In this work, we introduce $d_\chi$-stencil, a novel token-level privacy-preserving mechanism that integrates contextual and semantic information while ensuring strong privacy guarantees under the $d_\chi$ differential privacy framework, achieving $2\epsilon$-$d_\chi$-privacy. By incorporating both semantic and contextual nuances, $d_\chi$-stencil achieves a robust balance between privacy and utility. We evaluate $d_\chi$-stencil using state-of-the-art language models and diverse datasets, achieving comparable and even better trade-off between utility and privacy compared to existing methods. This work highlights the potential of $d_\chi$-stencil to set a new standard for privacy-preserving NLP in modern, high-risk applications.

How Much is Enough? The Diminishing Returns of Tokenization Training Data

Feb 27, 2025

Abstract:Tokenization, a crucial initial step in natural language processing, is often assumed to benefit from larger training datasets. This paper investigates the impact of tokenizer training data sizes ranging from 1GB to 900GB. Our findings reveal diminishing returns as the data size increases, highlighting a practical limit on how much further scaling the training data can improve tokenization quality. We analyze this phenomenon and attribute the saturation effect to the constraints imposed by the pre-tokenization stage of tokenization. These results offer valuable insights for optimizing the tokenization process and highlight potential avenues for future research in tokenization algorithms.

Information Types in Product Reviews

Feb 20, 2025

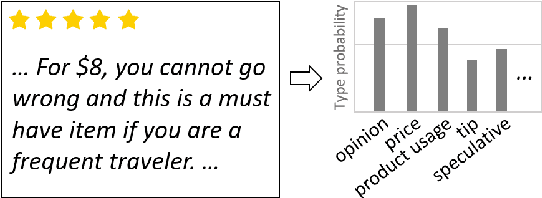

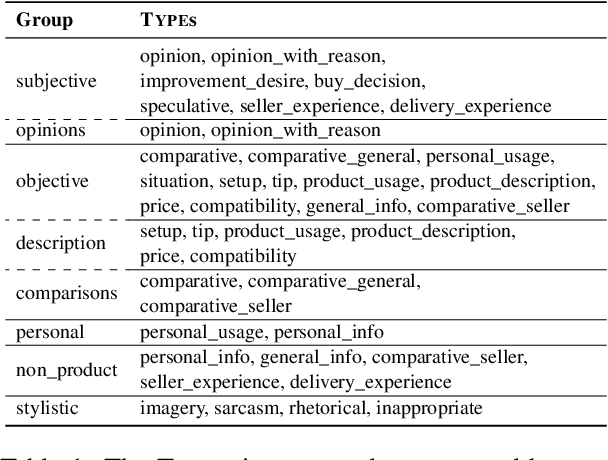

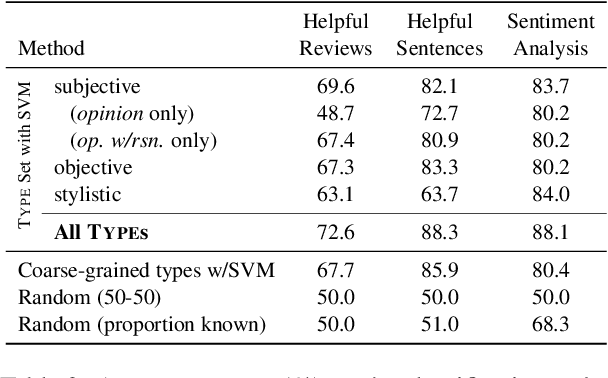

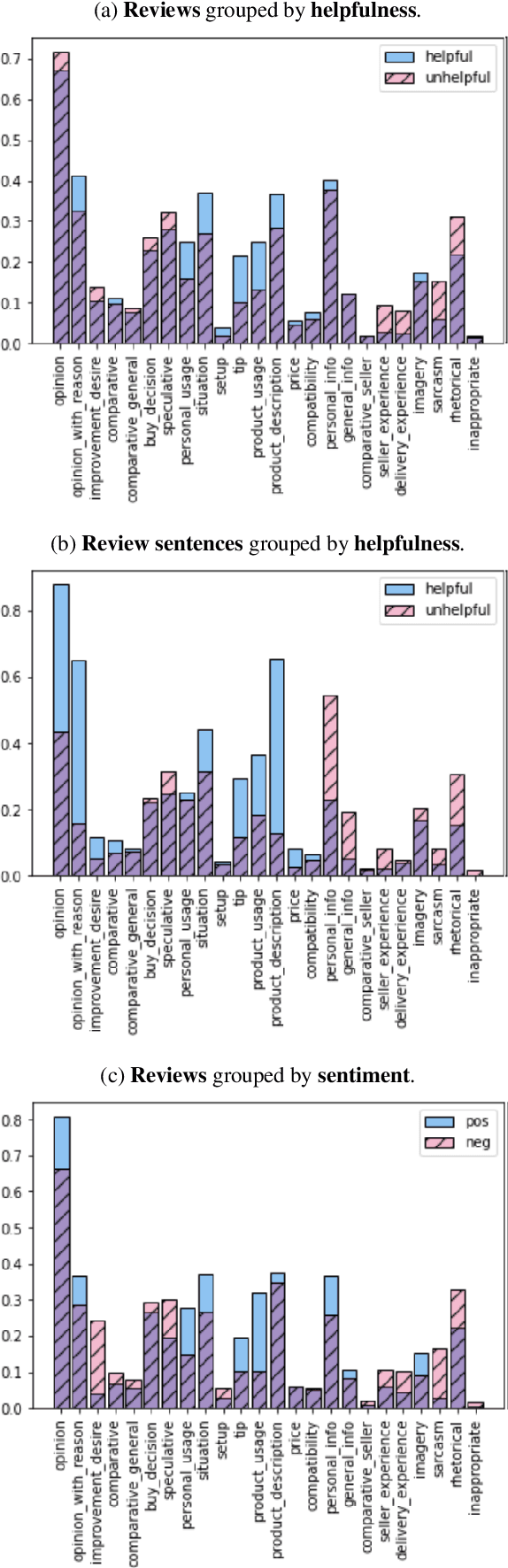

Abstract:Information in text is communicated in a way that supports a goal for its reader. Product reviews, for example, contain opinions, tips, product descriptions, and many other types of information that provide both direct insights, as well as unexpected signals for downstream applications. We devise a typology of 24 communicative goals in sentences from the product review domain, and employ a zero-shot multi-label classifier that facilitates large-scale analyses of review data. In our experiments, we find that the combination of classes in the typology forecasts helpfulness and sentiment of reviews, while supplying explanations for these decisions. In addition, our typology enables analysis of review intent, effectiveness and rhetorical structure. Characterizing the types of information in reviews unlocks many opportunities for more effective consumption of this genre.

Don't Touch My Diacritics

Oct 31, 2024

Abstract:The common practice of preprocessing text before feeding it into NLP models introduces many decision points which have unintended consequences on model performance. In this opinion piece, we focus on the handling of diacritics in texts originating in many languages and scripts. We demonstrate, through several case studies, the adverse effects of inconsistent encoding of diacritized characters and of removing diacritics altogether. We call on the community to adopt simple but necessary steps across all models and toolkits in order to improve handling of diacritized text and, by extension, increase equity in multilingual NLP.
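
To make the two issues named above concrete, here is a minimal sketch using Python's standard `unicodedata` module. It shows how the same diacritized character can have two different encodings, and how stripping combining marks loses information. The helper names `same_text` and `strip_diacritics` are hypothetical, and the example is not taken from the paper's case studies.

```python
import unicodedata

# The same visible character, encoded two ways.
composed = "\u00e9"     # single code point: e with acute accent
decomposed = "e\u0301"  # letter e followed by a combining acute accent


def same_text(a: str, b: str) -> bool:
    """Compare two strings after normalizing both to NFC (hypothetical helper)."""
    return unicodedata.normalize("NFC", a) == unicodedata.normalize("NFC", b)


def strip_diacritics(text: str) -> str:
    """Drop combining marks after NFD decomposition (hypothetical helper).

    Shown only to illustrate the lossy preprocessing step discussed above.
    """
    decomposed_text = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed_text if not unicodedata.combining(ch))


print(composed == decomposed)               # raw comparison of code points
print(same_text(composed, decomposed))      # comparison after NFC
print(strip_diacritics("r\u00e9sum\u00e9")) # accents removed
```

Without normalization, a model or tokenizer sees the two encodings as different inputs. After stripping, distinct words can collapse into one string.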
