Yuu Jinnai

Consensus Group Relative Policy Optimization for Text Generation

Feb 03, 2026

Abstract:Many strong decoding methods for text generation follow a sample-and-rerank paradigm: they draw multiple candidates, score each under a utility (reward) function using consensus across samples, and return the best one. Although effective, these methods incur high computational costs during inference due to repeated sampling and scoring. Prior attempts to amortize inference-time computation typically rely on gold references, teacher labels, or curated preference data, increasing dataset construction effort and the demand for high-fidelity reward models. We propose Consensus Group Relative Policy Optimization (C-GRPO), which distills Minimum Bayes Risk (MBR) decoding into training by formulating the consensus utility as a group-relative objective within GRPO. C-GRPO requires only a utility function and policy samples, without gold references or explicit preference labels. Under ideal conditions, we show that the objective function of C-GRPO is directionally aligned with the gradient of the expected-utility objective underlying MBR decoding, leading to a convergence guarantee. Experiments on machine translation (WMT 2024) and text summarization (XSum) demonstrate that C-GRPO successfully achieves performance comparable to MBR decoding without the associated inference-time overhead, while outperforming reference-free baseline methods.

MO-GRPO: Mitigating Reward Hacking of Group Relative Policy Optimization on Multi-Objective Problems

Sep 26, 2025

Abstract:Group Relative Policy Optimization (GRPO) has been shown to be an effective algorithm when an accurate reward model is available. However, such a highly reliable reward model is not available in many real-world tasks. In this paper, we particularly focus on multi-objective settings, in which we identify that GRPO is vulnerable to reward hacking, optimizing only one of the objectives at the cost of the others. To address this issue, we propose MO-GRPO, an extension of GRPO with a simple normalization method to reweight the reward functions automatically according to the variances of their values. We first show analytically that MO-GRPO ensures that all reward functions contribute evenly to the loss function while preserving the order of preferences, eliminating the need for manual tuning of the reward functions' scales. Then, we evaluate MO-GRPO experimentally in four domains: (i) the multi-armed bandits problem, (ii) a simulated control task (Mo-Gymnasium), (iii) machine translation tasks on the WMT benchmark (En-Ja, En-Zh), and (iv) an instruction-following task. MO-GRPO achieves stable learning by evenly distributing correlations among the components of rewards, outperforming GRPO, showing MO-GRPO to be a promising algorithm for multi-objective reinforcement learning problems.
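The variance-based reweighting described in this abstract can be illustrated with a small sketch. This is only one plausible reading of the abstract, not the paper's exact formulation: the function names, the `eps` constant, and the choice to standardize each reward component within the sampled group before summing are all assumptions made for illustration.

```python
import numpy as np

def summed_reward_advantages(rewards, eps=1e-8):
    """GRPO-style advantage on the plain sum of reward components.

    rewards: array of shape (group_size, num_objectives).
    """
    total = np.asarray(rewards, dtype=float).sum(axis=1)
    return (total - total.mean()) / (total.std() + eps)

def per_objective_advantages(rewards, eps=1e-8):
    """Hypothetical variance-normalized variant: standardize each reward
    component across the group first, then sum the standardized values."""
    rewards = np.asarray(rewards, dtype=float)
    z = (rewards - rewards.mean(axis=0)) / (rewards.std(axis=0) + eps)
    return z.sum(axis=1)

# Toy group of four samples with two objectives on very different scales.
group = np.array([
    [0.2, 10.0],
    [0.9, 12.0],
    [0.5, 80.0],
    [0.1, 40.0],
])
print(summed_reward_advantages(group))   # ordering follows the large-scale objective
print(per_objective_advantages(group))   # both objectives influence the result
```

In this toy group, the summed-reward advantage ranks samples exactly as the second (large-scale) objective does, whereas the per-objective version is unchanged when either column is rescaled by a positive constant.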

Document-Level Text Generation with Minimum Bayes Risk Decoding using Optimal Transport

May 29, 2025

Abstract:Document-level text generation tasks are known to be more difficult than sentence-level text generation tasks as they require the understanding of longer context to generate high-quality texts. In this paper, we investigate the adaptation of Minimum Bayes Risk (MBR) decoding for document-level text generation tasks. MBR decoding makes use of a utility function to estimate the output with the highest expected utility from a set of candidate outputs. Although MBR decoding is shown to be effective in a wide range of sentence-level text generation tasks, its performance on document-level text generation tasks is limited as many of the utility functions are designed for evaluating the utility of sentences. To this end, we propose MBR-OT, a variant of MBR decoding using Wasserstein distance to compute the utility of a document using a sentence-level utility function. The experimental results show that MBR-OT outperforms the standard MBR in document-level machine translation, text simplification, and dense image captioning tasks. Our code is available at https://github.com/jinnaiyuu/mbr-optimal-transport

Evaluation of Best-of-N Sampling Strategies for Language Model Alignment

Feb 18, 2025

Abstract:Best-of-N (BoN) sampling with a reward model has been shown to be an effective strategy for aligning Large Language Models (LLMs) with human preferences at the time of decoding. BoN sampling is susceptible to a problem known as reward hacking. Since the reward model is an imperfect proxy for the true objective, an excessive focus on optimizing its value can lead to a compromise of its performance on the true objective. Previous work proposes Regularized BoN sampling (RBoN), a BoN sampling with regularization to the objective, and shows empirically that it outperforms BoN sampling by mitigating reward hacking (Jinnai et al., 2024). However, Jinnai et al. (2024) introduce RBoN based on a heuristic and do not analyze why such a regularization strategy improves the performance of BoN sampling. The aim of this study is to analyze the effect of BoN sampling on regularization strategies. Using the regularization strategies corresponds to robust optimization, which maximizes the worst case over a set of possible perturbations in the proxy reward. Although the theoretical guarantees are not directly applicable to RBoN, RBoN corresponds to a practical implementation. This paper proposes an extension of the RBoN framework, called Stochastic RBoN sampling (SRBoN), which is a theoretically guaranteed approach to worst-case RBoN in proxy reward. We then perform an empirical evaluation using the AlpacaFarm and Anthropic's hh-rlhf datasets to evaluate which factors of the regularization strategies contribute to the improvement of the true proxy reward. In addition, we also propose another simple RBoN method, the Sentence Length Regularized BoN, which performs better in the experiments than the previous methods.

Theoretical Guarantees for Minimum Bayes Risk Decoding

Feb 18, 2025

Abstract:Minimum Bayes Risk (MBR) decoding optimizes output selection by maximizing the expected utility value of an underlying human distribution. While prior work has shown the effectiveness of MBR decoding through empirical evaluation, few studies have analytically investigated why the method is effective. As a result of our analysis, we show that, given the size $n$ of the reference hypothesis set used in computation, MBR decoding approaches the optimal solution with high probability at a rate of $O\left(n^{-\frac{1}{2}}\right)$, under certain assumptions, even though the language space $Y$ is significantly larger ($Y \gg n$). This result helps to theoretically explain the strong performance observed in several prior empirical studies on MBR decoding. In addition, we provide the performance gap for maximum-a-posteriori (MAP) decoding and compare it to MBR decoding. The result of this paper indicates that MBR decoding tends to converge to the optimal solution faster than MAP decoding in several cases.

Does Cross-Cultural Alignment Change the Commonsense Morality of Language Models?

Jun 24, 2024

Abstract:Alignment of the language model with human preferences is a common approach to making a language model useful to end users. However, most alignment work is done in English, and human preference datasets are dominated by English, reflecting only the preferences of English-speaking annotators. Nevertheless, it is common practice to use the English preference data, either directly or by translating it into the target language, when aligning a multilingual language model. The question is whether such an alignment strategy marginalizes the preference of non-English speaking users. To this end, we investigate the effect of aligning Japanese language models with (mostly) English resources. In particular, we focus on evaluating whether the commonsense morality of the resulting fine-tuned models is aligned with Japanese culture using the JCommonsenseMorality (JCM) and ETHICS datasets. The experimental results show that the fine-tuned model outperforms the SFT model. However, it does not demonstrate the same level of improvement as a model fine-tuned using the JCM, suggesting that while some aspects of commonsense morality are transferable, others may not be.

Annotation-Efficient Preference Optimization for Language Model Alignment

May 22, 2024

Abstract:Preference optimization is a standard approach to fine-tuning large language models to align with human preferences. The quality, diversity, and quantity of the preference dataset are critical to the effectiveness of preference optimization. However, obtaining a large amount of high-quality and diverse preference annotations is difficult in many applications. This raises the question of how to use the limited annotation budget to create an effective preference dataset. To this end, we propose Annotation-Efficient Preference Optimization (AEPO). Instead of exhaustively annotating preference over all available response texts, AEPO selects a subset of responses that maximizes quality and diversity from the available responses, and then annotates preference over the selected ones. In this way, AEPO focuses the annotation budget on labeling preference over a smaller subset of responses with diversity and of high quality. We evaluate the performance of Direct Preference Optimization (DPO) using AEPO and show that it outperforms models trained using a standard DPO with the same annotation budget. Our code is available at https://github.com/CyberAgentAILab/annotation-efficient-po

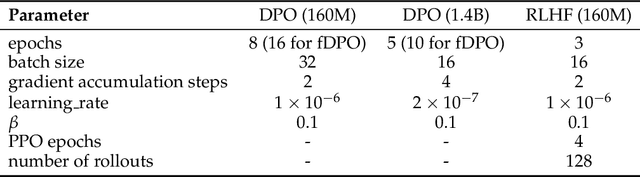

Filtered Direct Preference Optimization

Apr 23, 2024

Abstract:Reinforcement learning from human feedback (RLHF) plays a crucial role in aligning language models with human preferences. While the significance of dataset quality is generally recognized, explicit investigations into its impact within the RLHF framework, to our knowledge, have been limited. This paper addresses the issue of text quality within the preference dataset by focusing on Direct Preference Optimization (DPO), an increasingly adopted reward-model-free RLHF method. We confirm that text quality significantly influences the performance of models optimized with DPO more than those optimized with reward-model-based RLHF. Building on this new insight, we propose an extension of DPO, termed filtered direct preference optimization (fDPO). fDPO uses a trained reward model to monitor the quality of texts within the preference dataset during DPO training. Samples of lower quality are discarded based on comparisons with texts generated by the model being optimized, resulting in a more accurate dataset. Experimental results demonstrate that fDPO enhances the final model performance. Our code is available at https://github.com/CyberAgentAILab/filtered-dpo.

Regularized Best-of-N Sampling to Mitigate Reward Hacking for Language Model Alignment

Apr 05, 2024

Abstract:Best-of-N (BoN) sampling with a reward model has been shown to be an effective strategy for aligning Large Language Models (LLMs) to human preferences at the time of decoding. BoN sampling is susceptible to a problem known as reward hacking. Because the reward model is an imperfect proxy for the true objective, over-optimizing its value can compromise its performance on the true objective. A common solution to prevent reward hacking in preference learning techniques is to optimize a reward using proximity regularization (e.g., KL regularization), which ensures that the language model remains close to the reference model. In this research, we propose Regularized Best-of-N (RBoN), a variant of BoN that aims to mitigate reward hacking by incorporating a proximity term in response selection, similar to preference learning techniques. We evaluate two variants of RBoN on the AlpacaFarm dataset and find that they outperform BoN, especially when the proxy reward model has a low correlation with the true objective.
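To make the contrast between plain BoN and a proximity-regularized selection rule concrete, here is a minimal sketch. It assumes, purely for illustration, that the proximity term is the candidate's log-probability under the reference model, weighted by a hypothetical coefficient `beta`; the two RBoN variants evaluated in the paper may define the term differently, and the toy scores below are made up.

```python
from typing import Callable, Sequence

def best_of_n(candidates: Sequence[str], reward: Callable[[str], float]) -> str:
    """Plain BoN: return the candidate with the highest proxy reward."""
    return max(candidates, key=reward)

def regularized_best_of_n(
    candidates: Sequence[str],
    reward: Callable[[str], float],
    ref_logprob: Callable[[str], float],
    beta: float,
) -> str:
    """Illustrative regularized selection: proxy reward plus a proximity
    bonus (assumed here to be beta * log-probability under the reference)."""
    return max(candidates, key=lambda y: reward(y) + beta * ref_logprob(y))

# Toy scores (invented): the second response scores higher on the proxy
# reward but is very unlikely under the reference model.
proxy = {"plain answer": 1.0, "reward-hacked answer": 1.5}
logp = {"plain answer": -2.0, "reward-hacked answer": -12.0}
responses = list(proxy)

print(best_of_n(responses, proxy.get))
print(regularized_best_of_n(responses, proxy.get, logp.get, beta=0.1))
```

With `beta=0` the regularized rule reduces to plain BoN; larger values pull the selection toward responses the reference model considers likely.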

On the True Distribution Approximation of Minimum Bayes-Risk Decoding

Mar 31, 2024

Abstract:Minimum Bayes-risk (MBR) decoding has recently gained renewed attention in text generation. MBR decoding considers texts sampled from a model as pseudo-references and selects the text with the highest similarity to the others. Therefore, sampling is one of the key elements of MBR decoding, and previous studies reported that the performance varies by sampling methods. From a theoretical standpoint, this performance variation is likely tied to how closely the samples approximate the true distribution of references. However, this approximation has not been the subject of in-depth study. In this study, we propose using anomaly detection to measure the degree of approximation. We first closely examine the performance variation and then show that previous hypotheses about samples do not correlate well with the variation, but our introduced anomaly scores do. The results are the first to empirically support the link between the performance and the core assumption of MBR decoding.
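The selection rule described here (treat the samples as pseudo-references and pick the one most similar to the rest) can be sketched in a few lines. The word-set Jaccard similarity below is a stand-in utility chosen only to keep the example self-contained; MBR decoding in practice would typically use a task-specific metric, and the function names are hypothetical.

```python
def jaccard_utility(hypothesis: str, reference: str) -> float:
    """Toy utility: Jaccard overlap between the word sets of two texts."""
    h, r = set(hypothesis.split()), set(reference.split())
    if not h and not r:
        return 1.0
    return len(h & r) / len(h | r)

def mbr_decode(samples, utility=jaccard_utility):
    """Return the sample with the highest total utility against the other
    samples, which serve as pseudo-references."""
    best_text, best_score = None, float("-inf")
    for i, hyp in enumerate(samples):
        score = sum(utility(hyp, ref) for j, ref in enumerate(samples) if j != i)
        if score > best_score:
            best_text, best_score = hyp, score
    return best_text

samples = ["the cat sat", "the cat sat down", "the cat slept", "a dog ran"]
print(mbr_decode(samples))
```

Because every candidate is scored against every other sample, the cost grows quadratically with the number of samples, which is why the sampling strategy and sample count matter so much for this method.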
