Yuki Kadokawa

Prolonging Tool Life: Learning Skillful Use of General-purpose Tools through Lifespan-guided Reinforcement Learning

Jul 23, 2025Abstract:In inaccessible environments with uncertain task demands, robots often rely on general-purpose tools that lack predefined usage strategies. These tools are not tailored for particular operations, making their longevity highly sensitive to how they are used. This creates a fundamental challenge: how can a robot learn a tool-use policy that both completes the task and prolongs the tool's lifespan? In this work, we address this challenge by introducing a reinforcement learning (RL) framework that incorporates tool lifespan as a factor during policy optimization. Our framework leverages Finite Element Analysis (FEA) and Miner's Rule to estimate Remaining Useful Life (RUL) based on accumulated stress, and integrates the RUL into the RL reward to guide policy learning toward lifespan-guided behavior. To handle the fact that RUL can only be estimated after task execution, we introduce an Adaptive Reward Normalization (ARN) mechanism that dynamically adjusts reward scaling based on estimated RULs, ensuring stable learning signals. We validate our method across simulated and real-world tool use tasks, including Object-Moving and Door-Opening with multiple general-purpose tools. The learned policies consistently prolong tool lifespan (up to 8.01x in simulation) and transfer effectively to real-world settings, demonstrating the practical value of learning lifespan-guided tool use strategies.

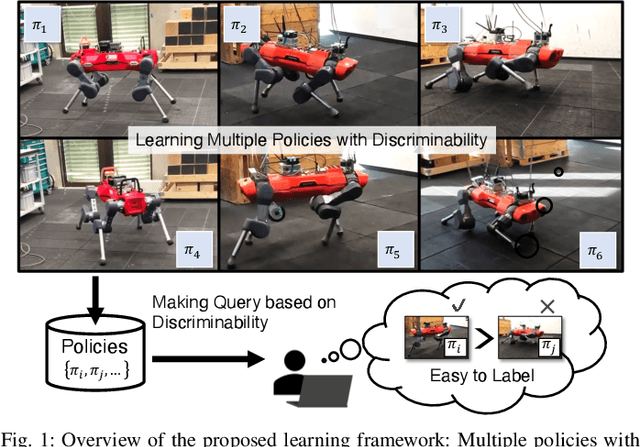

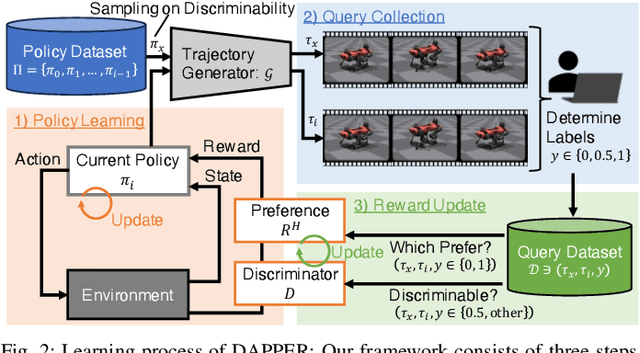

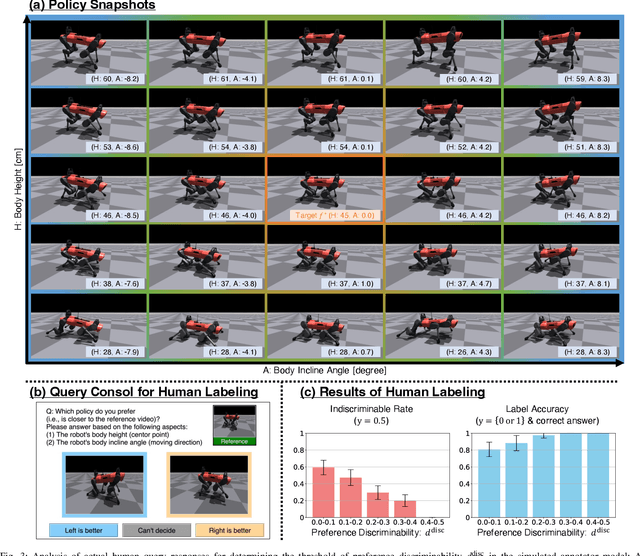

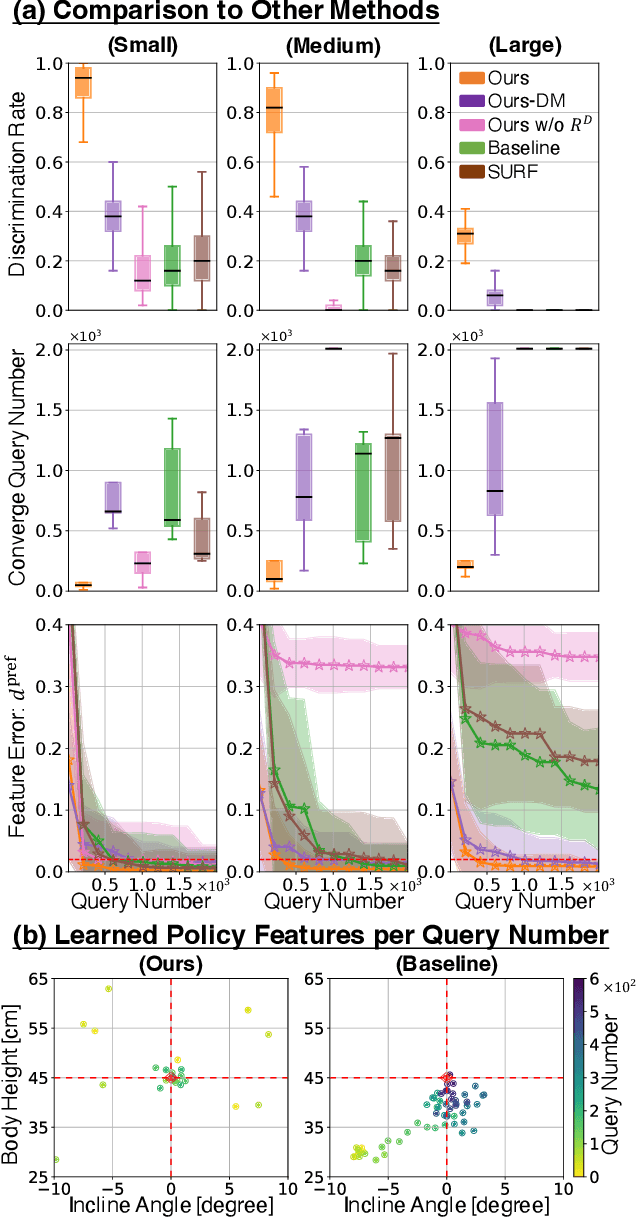

DAPPER: Discriminability-Aware Policy-to-Policy Preference-Based Reinforcement Learning for Query-Efficient Robot Skill Acquisition

May 09, 2025

Abstract:Preference-based Reinforcement Learning (PbRL) enables policy learning through simple queries comparing trajectories from a single policy. While human responses to these queries make it possible to learn policies aligned with human preferences, PbRL suffers from low query efficiency, as policy bias limits trajectory diversity and reduces the number of discriminable queries available for learning preferences. This paper identifies preference discriminability, which quantifies how easily a human can judge which trajectory is closer to their ideal behavior, as a key metric for improving query efficiency. To address this, we move beyond comparisons within a single policy and instead generate queries by comparing trajectories from multiple policies, as training them from scratch promotes diversity without policy bias. We propose Discriminability-Aware Policy-to-Policy Preference-Based Efficient Reinforcement Learning (DAPPER), which integrates preference discriminability with trajectory diversification achieved by multiple policies. DAPPER trains new policies from scratch after each reward update and employs a discriminator that learns to estimate preference discriminability, enabling the prioritized sampling of more discriminable queries. During training, it jointly maximizes the preference reward and preference discriminability score, encouraging the discovery of highly rewarding and easily distinguishable policies. Experiments in simulated and real-world legged robot environments demonstrate that DAPPER outperforms previous methods in query efficiency, particularly under challenging preference discriminability conditions.

Progressive-Resolution Policy Distillation: Leveraging Coarse-Resolution Simulation for Time-Efficient Fine-Resolution Policy Learning

Dec 10, 2024

Abstract:In earthwork and construction, excavators often encounter large rocks mixed with various soil conditions, requiring skilled operators. This paper presents a framework for achieving autonomous excavation using reinforcement learning (RL) through a rock excavation simulator. In the simulation, resolution can be defined by the particle size/number in the whole soil space. Fine-resolution simulations closely mimic real-world behavior but demand significant calculation time and challenging sample collection, while coarse-resolution simulations enable faster sample collection but deviate from real-world behavior. To combine the advantages of both resolutions, we explore using policies developed in coarse-resolution simulations for pre-training in fine-resolution simulations. To this end, we propose a novel policy learning framework called Progressive-Resolution Policy Distillation (PRPD), which progressively transfers policies through some middle-resolution simulations with conservative policy transfer to avoid domain gaps that could lead to policy transfer failure. Validation in a rock excavation simulator and nine real-world rock environments demonstrated that PRPD reduced sampling time to less than 1/7 while maintaining task success rates comparable to those achieved through policy learning in a fine-resolution simulation.

Robust Iterative Value Conversion: Deep Reinforcement Learning for Neurochip-driven Edge Robots

Aug 23, 2024

Abstract:A neurochip is a device that reproduces the signal processing mechanisms of brain neurons and calculates Spiking Neural Networks (SNNs) with low power consumption and at high speed. Thus, neurochips are attracting attention from edge robot applications, which suffer from limited battery capacity. This paper aims to achieve deep reinforcement learning (DRL) that acquires SNN policies suitable for neurochip implementation. Since DRL requires a complex function approximation, we focus on conversion techniques from Floating Point NN (FPNN) because it is one of the most feasible SNN techniques. However, DRL requires conversions to SNNs for every policy update to collect the learning samples for a DRL-learning cycle, which updates the FPNN policy and collects the SNN policy samples. Accumulative conversion errors can significantly degrade the performance of the SNN policies. We propose Robust Iterative Value Conversion (RIVC) as a DRL that incorporates conversion error reduction and robustness to conversion errors. To reduce them, FPNN is optimized with the same number of quantization bits as an SNN. The FPNN output is not significantly changed by quantization. To robustify the conversion error, an FPNN policy that is applied with quantization is updated to increase the gap between the probability of selecting the optimal action and other actions. This step prevents unexpected replacements of the policy's optimal actions. We verified RIVC's effectiveness on a neurochip-driven robot. The results showed that RIVC consumed 1/15 times less power and increased the calculation speed by five times more than an edge CPU (quad-core ARM Cortex-A72). The previous framework with no countermeasures against conversion errors failed to train the policies. Videos from our experiments are available: https://youtu.be/Q5Z0-BvK1Tc.

Cyclic Policy Distillation: Sample-Efficient Sim-to-Real Reinforcement Learning with Domain Randomization

Jul 29, 2022

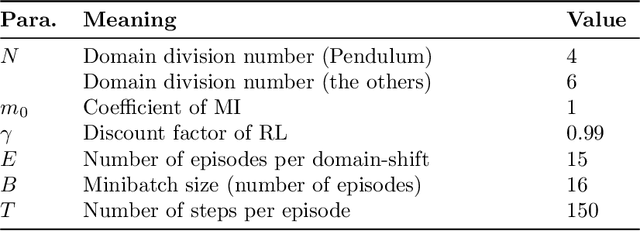

Abstract:Deep reinforcement learning with domain randomization learns a control policy in various simulations with randomized physical and sensor model parameters to become transferable to the real world in a zero-shot setting. However, a huge number of samples are often required to learn an effective policy when the range of randomized parameters is extensive due to the instability of policy updates. To alleviate this problem, we propose a sample-efficient method named Cyclic Policy Distillation (CPD). CPD divides the range of randomized parameters into several small sub-domains and assigns a local policy to each sub-domain. Then, the learning of local policies is performed while {\it cyclically} transitioning the target sub-domain to neighboring sub-domains and exploiting the learned values/policies of the neighbor sub-domains with a monotonic policy-improvement scheme. Finally, all of the learned local policies are distilled into a global policy for sim-to-real transfer. The effectiveness and sample efficiency of CPD are demonstrated through simulations with four tasks (Pendulum from OpenAIGym and Pusher, Swimmer, and HalfCheetah from Mujoco), and a real-robot ball-dispersal task.

Binarized P-Network: Deep Reinforcement Learning of Robot Control from Raw Images on FPGA

Sep 15, 2021Abstract:This paper explores a Deep Reinforcement Learning (DRL) approach for designing image-based control for edge robots to be implemented on Field Programmable Gate Arrays (FPGAs). Although FPGAs are more power-efficient than CPUs and GPUs, a typical DRL method cannot be applied since they are composed of many Logic Blocks (LBs) for high-speed logical operations but low-speed real-number operations. To cope with this problem, we propose a novel DRL algorithm called Binarized P-Network (BPN), which learns image-input control policies using Binarized Convolutional Neural Networks (BCNNs). To alleviate the instability of reinforcement learning caused by a BCNN with low function approximation accuracy, our BPN adopts a robust value update scheme called Conservative Value Iteration, which is tolerant of function approximation errors. We confirmed the BPN's effectiveness through applications to a visual tracking task in simulation and real-robot experiments with FPGA.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge