Youqian Zhang

Rainbow Artifacts from Electromagnetic Signal Injection Attacks on Image Sensors

Jul 10, 2025

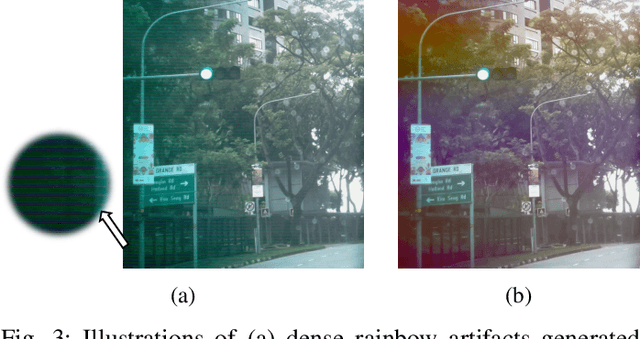

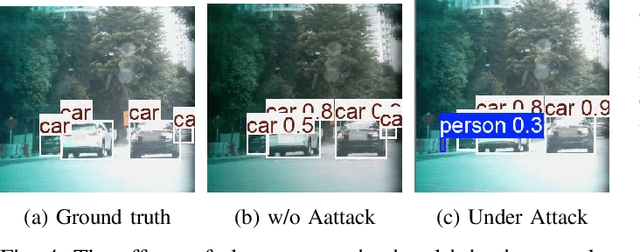

Abstract: Image sensors are integral to a wide range of safety- and security-critical systems, including surveillance infrastructure, autonomous vehicles, and industrial automation. These systems rely on the integrity of visual data to make decisions. In this work, we investigate a novel class of electromagnetic signal injection attacks that target the analog domain of image sensors, allowing adversaries to manipulate raw visual inputs without triggering conventional digital integrity checks. We uncover a previously undocumented attack phenomenon on CMOS image sensors: rainbow-like color artifacts induced in captured images through carefully tuned electromagnetic interference. We further evaluate the impact of these attacks on state-of-the-art object detection models, showing that the injected artifacts propagate through the image signal processing pipeline and lead to significant mispredictions. Our findings highlight a critical and underexplored vulnerability in the visual perception stack and underscore the need for more robust defenses against physical-layer attacks in such systems.

Is Your Autonomous Vehicle Safe? Understanding the Threat of Electromagnetic Signal Injection Attacks on Traffic Scene Perception

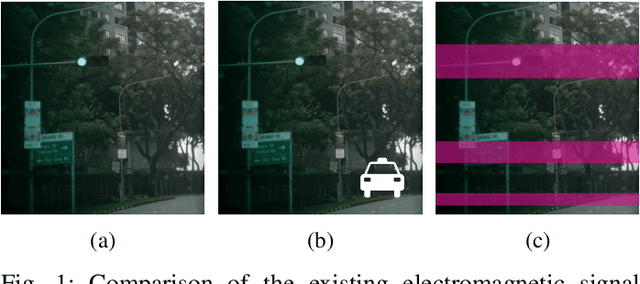

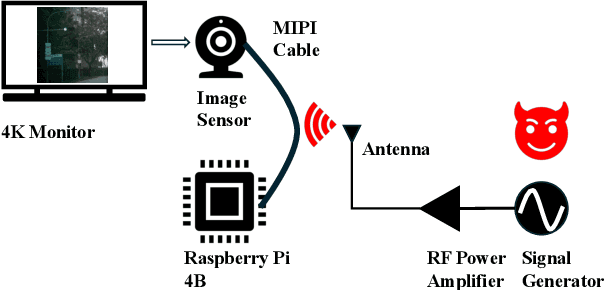

Jan 09, 2025

Abstract: Autonomous vehicles rely on camera-based perception systems to comprehend their driving environment and make crucial decisions so that they can steer safely. However, a significant threat known as Electromagnetic Signal Injection Attacks (ESIA) can distort the images captured by these cameras, leading to incorrect AI decisions and potentially compromising the safety of autonomous vehicles. Despite the serious implications of ESIA, there is limited understanding of its impact on the robustness of AI models across varied and complex driving scenarios. To address this gap, our research analyzes the performance of different models under ESIA, revealing their vulnerabilities to the attacks. Moreover, because real-world attack data is difficult to obtain, we develop a novel ESIA simulation method and generate a simulated attack dataset for different driving scenarios. Our research provides a comprehensive simulation and evaluation framework, aiming to support the development of more robust AI models and secure intelligent systems, ultimately contributing to safer and more reliable technology across various fields.

Combating Phone Scams with LLM-based Detection: Where Do We Stand?

Sep 18, 2024

Abstract: Phone scams pose a significant threat to individuals and communities, causing substantial financial losses and emotional distress. Despite ongoing efforts to combat these scams, scammers continue to adapt and refine their tactics, making it imperative to explore innovative countermeasures. This research explores the potential of large language models (LLMs) to detect fraudulent phone calls. By analyzing the conversational dynamics between scammers and victims, LLM-based detectors can identify potential scams as they occur, offering immediate protection to users. While such approaches demonstrate promising results, we also acknowledge the challenges of biased datasets, relatively low recall, and hallucinations that must be addressed for further advancement in this field.

Anti-ESIA: Analyzing and Mitigating Impacts of Electromagnetic Signal Injection Attacks

Sep 17, 2024

Abstract: Cameras are integral components of many critical intelligent systems. However, a growing threat known as Electromagnetic Signal Injection Attacks (ESIA) poses a significant risk to these systems: ESIA enables attackers to remotely manipulate images captured by cameras, potentially leading to malicious actions and catastrophic consequences. Despite the severity of this threat, the underlying reasons for ESIA's effectiveness remain poorly understood, and effective countermeasures are lacking. This paper aims to address these gaps by investigating ESIA from two distinct aspects: pixel loss and color strips. By analyzing these aspects separately on image classification tasks, we gain a deeper understanding of how ESIA can compromise intelligent systems. Additionally, we explore a lightweight solution to mitigate the effects of ESIA while acknowledging its limitations. Our findings provide valuable insights for future research and development in the field of camera security and intelligent systems.

Modeling Electromagnetic Signal Injection Attacks on Camera-based Smart Systems: Applications and Mitigation

Aug 09, 2024

Abstract: Numerous safety- or security-critical systems depend on cameras to perceive their surroundings, allowing artificial intelligence (AI) to analyze the captured images and make important decisions. However, a concerning attack vector has emerged, namely electromagnetic waves, which threaten the integrity of these systems. Such attacks enable attackers to manipulate the images remotely, leading to incorrect AI decisions, e.g., autonomous vehicles failing to detect obstacles ahead, resulting in collisions. The lack of understanding of how different systems react to such attacks poses a significant security risk. Furthermore, no effective solutions have been demonstrated to mitigate this threat. To address these gaps, we modeled the attacks and developed a simulation method for generating adversarial images. Through rigorous analysis, we confirmed that the effects of the simulated adversarial images are indistinguishable from those of real attacks. This method enables researchers and engineers to rapidly assess the susceptibility of various AI vision applications to these attacks without constructing complicated attack devices. In our experiments, most of the models demonstrated vulnerabilities to these attacks, emphasizing the need to enhance their robustness. Fortunately, our modeling and simulation method serves as a stepping stone toward developing more resilient models. We present a pilot study on adversarial training to improve model robustness against the attacks, and our results demonstrate a significant improvement by recovering up to 91% performance, offering a promising direction for mitigating this threat.

Understanding Impacts of Electromagnetic Signal Injection Attacks on Object Detection

Jul 23, 2024

Abstract: Object detection can localize and identify objects in images, and it is extensively employed in critical multimedia applications such as security surveillance and autonomous driving. Despite the success of existing object detection models, they are often evaluated in ideal scenarios where captured images are assumed to represent the scene accurately and completely. However, images captured by image sensors may be affected by different factors in real applications, including cyber-physical attacks. In particular, attackers can exploit hardware properties within the systems to inject electromagnetic interference so as to manipulate the images. Such attacks can introduce noisy or incomplete information about the captured scene, leading to incorrect detection results and potentially granting attackers malicious control over critical functions of the systems. This paper comprehensively quantifies and analyzes the impacts of such attacks on state-of-the-art object detection models in practice. It also sheds light on the underlying reasons for the incorrect detection outcomes.

Electromagnetic Signal Injection Attacks on Differential Signaling

Jul 31, 2022

Abstract: Differential signaling is a method of data transmission that uses two complementary electrical signals to encode information. This allows a receiver to reject any noise by looking at the difference between the two signals, assuming the noise affects both signals in the same way. Many protocols such as USB, Ethernet, and HDMI use differential signaling to achieve a robust communication channel in a noisy environment. This generally works well and has led many to believe that it is infeasible to remotely inject attacking signals into such a differential pair. In this paper we challenge this assumption and show that an adversary can in fact inject malicious signals from a distance, purely using common-mode injection, i.e., injecting into both wires at the same time. We show how this allows an attacker to inject bits or even arbitrary messages into a communication line. Such an attack is a significant threat to many applications, from home security and privacy to automotive systems, critical infrastructure, and implantable medical devices, in which incorrect data or unauthorized control could cause significant damage or even fatal accidents. We show in detail the principles of how an electromagnetic signal can bypass the noise rejection of differential signaling and eventually result in incorrect bits in the receiver. We show how an attacker can exploit this to achieve a successful injection of an arbitrary bit, and we analyze the success rate of injecting longer arbitrary messages. We demonstrate the attack on a real system and show that the success rate can reach as high as 90%. Finally, we present a case study where we wirelessly inject a message into a Controller Area Network (CAN) bus, which is a differential signaling bus protocol used in many critical applications, including the automotive and aviation sectors.
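The noise-rejection assumption described in this abstract can be illustrated with a small idealized sketch. It shows only the textbook behavior that the paper challenges, not the attack itself, since the abstract does not describe how the injected signal bypasses the rejection. The function names, voltage swing, and noise values are hypothetical choices made for illustration.

```python
# Idealized model of differential signaling (illustrative assumption,
# not the paper's experimental setup or attack model).

def encode_differential(bits, swing=1.0):
    """Map each bit to a complementary (d_plus, d_minus) voltage pair."""
    half = swing / 2
    return [(half, -half) if b else (-half, half) for b in bits]


def add_common_mode(pairs, noise):
    """Add the same noise voltage to both wires of each pair."""
    return [(p + n, m + n) for (p, m), n in zip(pairs, noise)]


def decode_differential(pairs):
    """Ideal receiver: a bit is 1 when d_plus exceeds d_minus."""
    return [1 if p - m > 0 else 0 for p, m in pairs]


bits = [1, 0, 1, 1, 0]
noise = [0.8, -1.5, 3.0, 0.0, -0.4]  # hypothetical common-mode noise, in volts
received = decode_differential(add_common_mode(encode_differential(bits), noise))
print(received == bits)
```

Because the same voltage is added to both wires, it cancels in the subtraction and the ideal receiver recovers every bit. The abstract's claim is that real systems deviate from this ideal enough for common-mode injection to flip bits.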
