Chunxi Yang

Modeling Electromagnetic Signal Injection Attacks on Camera-based Smart Systems: Applications and Mitigation

Aug 09, 2024

Abstract: Numerous safety- or security-critical systems depend on cameras to perceive their surroundings, allowing artificial intelligence (AI) to analyze the captured images and make important decisions. However, a concerning attack vector has emerged, namely electromagnetic waves, which threaten the integrity of these systems. Such attacks enable attackers to manipulate the images remotely, leading to incorrect AI decisions, e.g., autonomous vehicles failing to detect obstacles ahead, resulting in collisions. The lack of understanding of how different systems react to such attacks poses a significant security risk. Furthermore, no effective solutions have been demonstrated to mitigate this threat. To address these gaps, we modeled the attacks and developed a simulation method for generating adversarial images. Through rigorous analysis, we confirmed that the effects of the simulated adversarial images are indistinguishable from those of real attacks. This method enables researchers and engineers to rapidly assess the susceptibility of various AI vision applications to these attacks without constructing complicated attack devices. In our experiments, most of the models proved vulnerable to these attacks, emphasizing the need to enhance their robustness. Fortunately, our modeling and simulation method serves as a stepping stone toward developing more resilient models. We present a pilot study on adversarial training to improve model robustness against these attacks, and our results demonstrate a significant improvement, recovering up to 91% of performance and offering a promising direction for mitigating this threat.

Understanding Impacts of Electromagnetic Signal Injection Attacks on Object Detection

Jul 23, 2024

Abstract: Object detection localizes and identifies objects in images, and it is extensively employed in critical multimedia applications such as security surveillance and autonomous driving. Despite the success of existing object detection models, they are often evaluated in ideal scenarios where the captured images are guaranteed to represent the scenes accurately and completely. However, in real applications, images captured by image sensors may be affected by various factors, including cyber-physical attacks. In particular, attackers can exploit hardware properties within the systems to inject electromagnetic interference and thereby manipulate the images. Such attacks can make the information about the captured scene noisy or incomplete, leading to incorrect detection results and potentially granting attackers malicious control over critical functions of the systems. This paper comprehensively quantifies and analyzes the impacts of such attacks on state-of-the-art object detection models in practice. It also sheds light on the underlying reasons for the incorrect detection outcomes.

Spine Landmark Localization with combining of Heatmap Regression and Direct Coordinate Regression

Jul 10, 2020

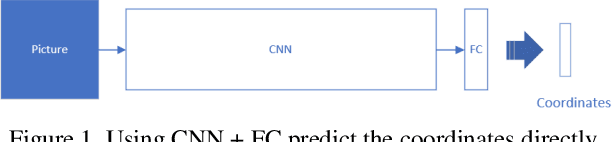

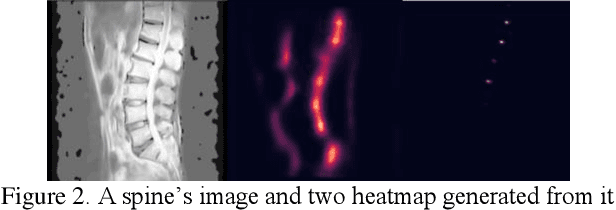

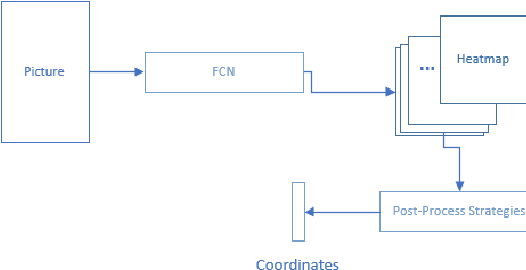

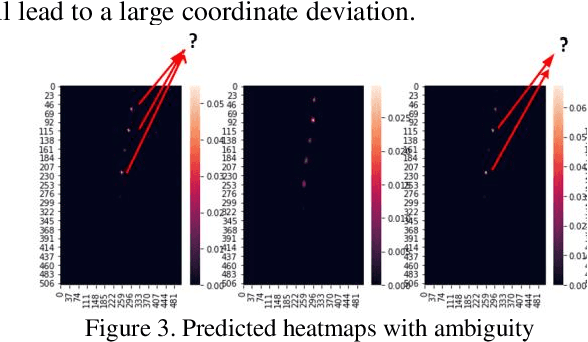

Abstract: Landmark localization plays a very important role in medical image processing and in disease identification. In the medical field, however, it is a challenging task because of the complexity of medical images and the high accuracy required for disease identification and treatment. There are two dominant ways to regress landmark coordinates. The first uses a fully convolutional network to regress landmark heatmaps, which is complex and requires heatmap post-processing strategies. The second regresses the coordinates directly with a CNN followed by a fully connected network, which is simple and faster to train but needs a larger dataset and a deeper model to achieve higher accuracy. Although data augmentation and a deeper network allow it to reach reasonable accuracy, that accuracy still falls short of the requirements of the medical field. In addition, a deeper network also means greater space consumption. To achieve higher accuracy, we devised a new landmark regression method that combines heatmap regression and direct coordinate regression, based on probability methods and system control theory.
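To make the "heatmap post-processing" step mentioned in the abstract concrete, the following is a minimal illustrative sketch in pure Python. It is not the paper's method: the function names `argmax_decode` and `soft_argmax_decode`, the `temperature` parameter, and the toy heatmap are all assumptions introduced here. It shows two common ways of turning a predicted heatmap into a landmark coordinate: taking the highest-scoring pixel, and taking a softmax-weighted mean of pixel positions (often called soft-argmax), which yields sub-pixel coordinates.

```python
import math


def argmax_decode(heatmap):
    """Return the (x, y) position of the highest-scoring pixel."""
    best_value = None
    best_xy = (0, 0)
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if best_value is None or value > best_value:
                best_value, best_xy = value, (x, y)
    return best_xy


def soft_argmax_decode(heatmap, temperature=1.0):
    """Return the softmax-weighted mean (x, y) position (sub-pixel).

    `temperature` is a hypothetical knob: smaller values push the
    result toward the plain argmax position.
    """
    # Subtract the maximum score before exponentiating, for stability.
    peak = max(value / temperature for row in heatmap for value in row)
    weights = [[math.exp(value / temperature - peak) for value in row]
               for row in heatmap]
    total = sum(sum(row) for row in weights)
    x = sum(w * xi for row in weights for xi, w in enumerate(row)) / total
    y = sum(w * yi for yi, row in enumerate(weights) for w in row) / total
    return x, y


# Toy 3x5 heatmap (an assumption for illustration), peaked at x=2, y=1.
toy_heatmap = [
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 5.0, 1.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
]
peak_xy = argmax_decode(toy_heatmap)
soft_xy = soft_argmax_decode(toy_heatmap)
```

Because the toy heatmap is symmetric around its peak, both decoders agree on the same location here; on asymmetric heatmaps the weighted mean shifts toward the heavier side, which is one reason decoding choices affect final landmark accuracy.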
