Yongsheng Ou

Explicit Second-order LiDAR Bundle Adjustment Algorithm Using Mean Squared Group Metric

Sep 03, 2024

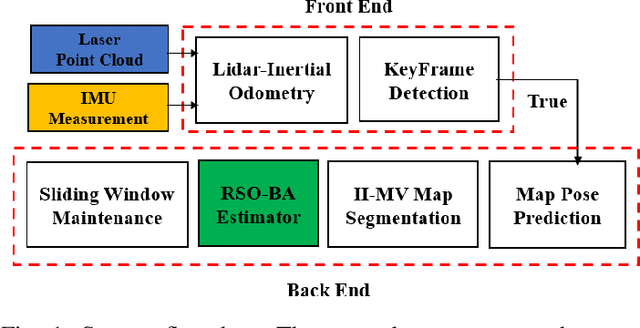

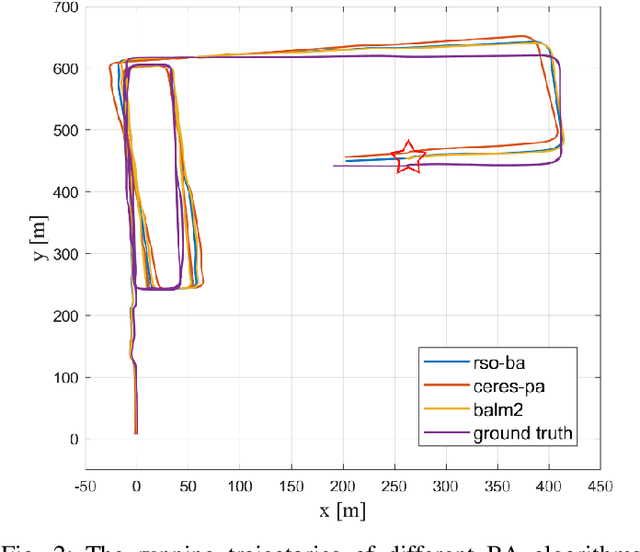

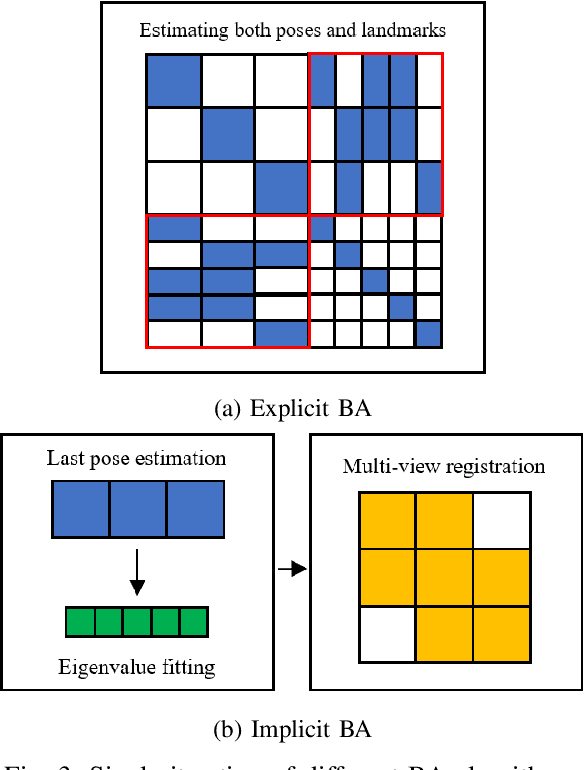

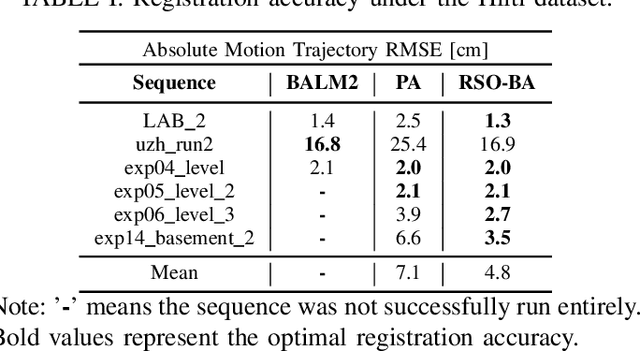

Abstract: The Bundle Adjustment (BA) algorithm is a widely used nonlinear optimization technique in the backend of Simultaneous Localization and Mapping (SLAM) systems. By leveraging the co-view relationships of landmarks from multiple perspectives, it constructs a joint estimation model for both poses and landmarks, enabling the system to generate refined maps and reduce front-end localization errors. However, applying BA to LiDAR data presents unique challenges: point clouds typically contain a large volume of 3D points, which makes robust and accurate model solving more complex. In this work, we propose a novel mean square group metric (MSGM). This metric applies a mean square transformation to uniformly process the measurements of plane landmarks from a single perspective. The transformed metric ensures scale interpretability while avoiding time-consuming point-by-point calculations. By integrating a robust kernel function, the metrics involved in the BA model are reweighted, enhancing the robustness of the solution process. On the basis of the proposed robust LiDAR BA model, we derive an explicit second-order estimator (RSO-BA). This estimator employs analytical formulas for Hessian and gradient calculations, ensuring the precision of the BA solution. We evaluate the proposed RSO-BA estimator against existing implicit second-order and explicit approximate second-order estimators on publicly available datasets. The experimental results demonstrate that the RSO-BA estimator outperforms its counterparts in registration accuracy and robustness, particularly in large-scale or complex unstructured environments.
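The abstract does not give the MSGM formula, so the following is only an illustrative sketch of one common way to evaluate a mean squared point-to-plane distance without looping over points at solve time: accumulate the first and second moments of a point group once, then evaluate the metric from those moments. The function names (`accumulate_moments`, `mean_squared_plane_distance`, `huber_weight`) and the choice of a Huber kernel are assumptions made for illustration, not the paper's definitions.

```python
import math

def accumulate_moments(points):
    """Return (count, sum of points, sum of outer products) for 3D points."""
    n = 0
    s = [0.0, 0.0, 0.0]
    S = [[0.0] * 3 for _ in range(3)]
    for p in points:
        n += 1
        for i in range(3):
            s[i] += p[i]
            for j in range(3):
                S[i][j] += p[i] * p[j]
    return n, s, S

def mean_squared_plane_distance(moments, normal, offset):
    """Mean of (normal . p + offset)^2 over the group, using only the moments.

    Assumes `normal` is a unit vector, so the value is a squared distance.
    """
    n, s, S = moments
    quad = sum(normal[i] * S[i][j] * normal[j]
               for i in range(3) for j in range(3))
    lin = sum(normal[i] * s[i] for i in range(3))
    return (quad + 2.0 * offset * lin + n * offset * offset) / n

def huber_weight(residual, delta):
    """Standard Huber reweighting factor (an assumed kernel choice)."""
    r = abs(residual)
    return 1.0 if r <= delta else delta / r

# Hypothetical group of points scattered around the plane z = 0.
points = [(0.0, 0.0, 0.1), (1.0, 0.0, -0.1), (0.0, 1.0, 0.1), (1.0, 1.0, -0.1)]
moments = accumulate_moments(points)
msd = mean_squared_plane_distance(moments, (0.0, 0.0, 1.0), 0.0)
rms = math.sqrt(msd)            # root mean square distance, in the units of the points
weight = huber_weight(rms, 0.05)
```

Because the moments are computed once per group, the cost of re-evaluating the metric for a new plane estimate does not depend on the number of points. The square root of the mean squared value is in the same units as the point coordinates, which is one way to read the "scale interpretability" the abstract mentions.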

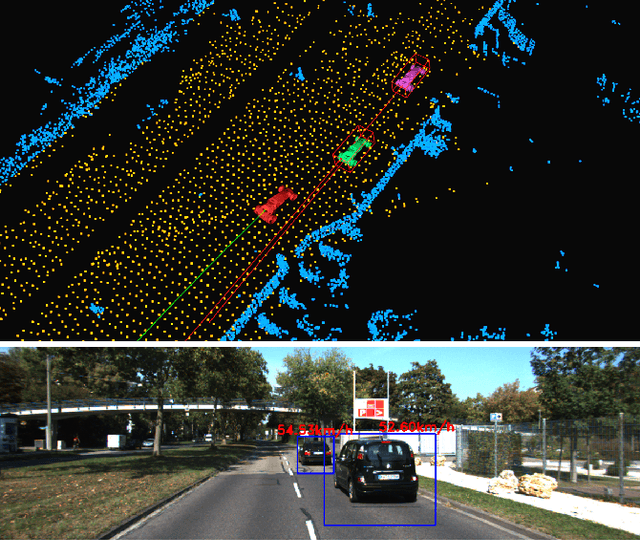

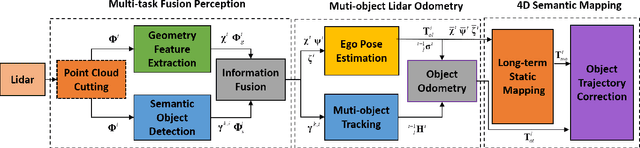

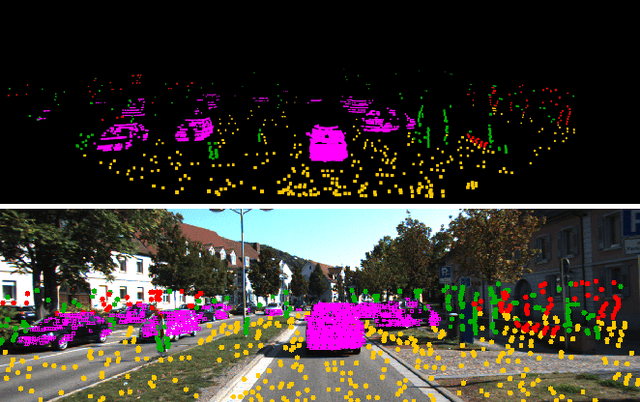

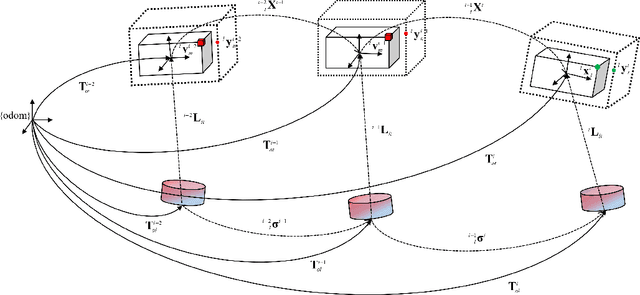

MLO: Multi-Object Tracking and Lidar Odometry in Dynamic Environment

Apr 29, 2022

Abstract: A SLAM system built on the static scene assumption will introduce significant estimation errors when a large number of moving objects appear in the field of view. Tracking and maintaining semantic objects helps in understanding the scene and provides rich decision information for planning and control modules. This paper introduces MLO, a multi-object Lidar odometry which tracks ego-motion and movable objects with only the lidar sensor. First, it extracts foreground movable objects, the road surface, and static background features using a geometry and object fusion perception module. While robustly estimating ego-motion, multi-object tracking is accomplished through a least-squares method that fuses 3D bounding boxes and geometric point clouds. Then, a continuous 4D semantic object map on the timeline can be created. Our approach is evaluated qualitatively and quantitatively under different scenarios on the public KITTI dataset. The experimental results show that the ego localization accuracy of MLO is better than that of the A-LOAM system in highly dynamic, unstructured, and unknown semantic scenes. Meanwhile, the multi-object tracking method with semantic-geometry fusion also has clear advantages in accuracy and tracking robustness compared with either single method.

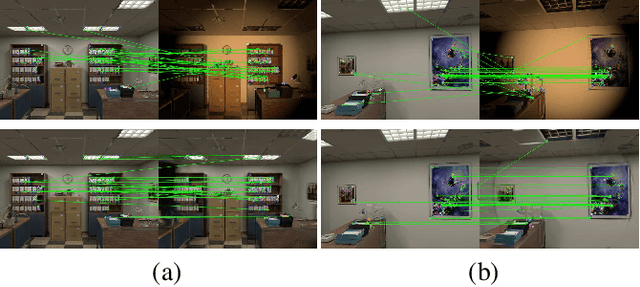

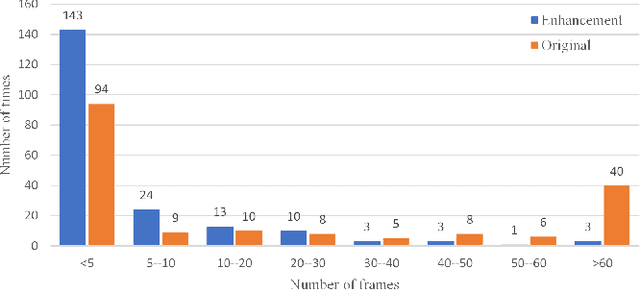

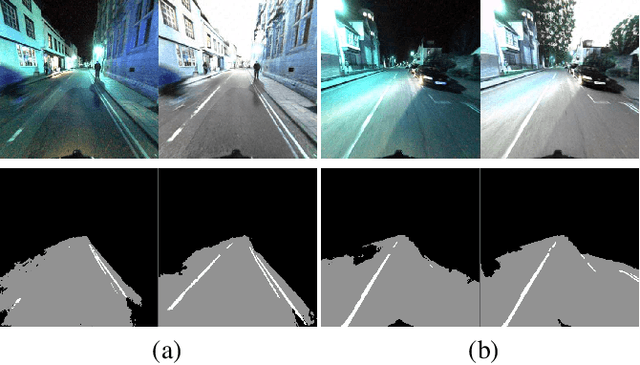

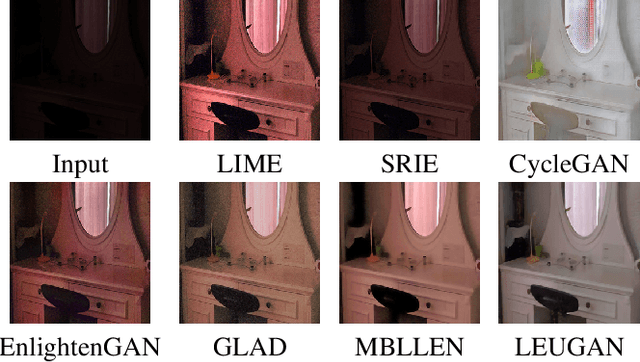

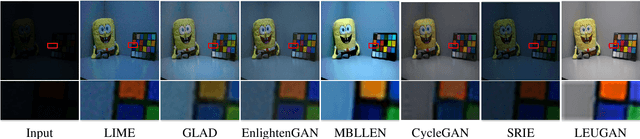

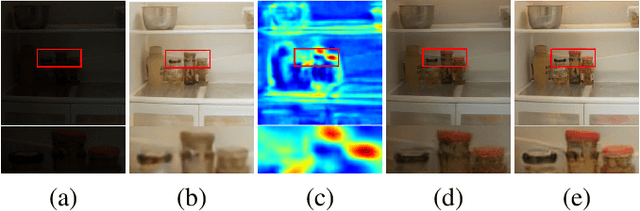

UMLE: Unsupervised Multi-discriminator Network for Low Light Enhancement

Dec 25, 2020

Abstract: Low-light image enhancement, such as recovering color and texture details from low-light images, is a complex and vital task. For automated driving, low-light scenarios have serious implications for vision-based applications. To address this problem, we propose a real-time unsupervised generative adversarial network (GAN) containing multiple discriminators, i.e., a multi-scale discriminator, a texture discriminator, and a color discriminator. These distinct discriminators allow the evaluation of images from different perspectives. Further, considering that different channel features contain different information and that the illumination is uneven in the image, we propose a feature fusion attention module. This module combines channel attention with pixel attention mechanisms to extract image features. Additionally, to reduce training time, we adopt a shared encoder for the generator and the discriminator. This makes the structure of the model more compact and the training more stable. Experiments indicate that our method is superior to the state-of-the-art methods in qualitative and quantitative evaluations, and significant improvements are achieved for both autopilot positioning and detection results.

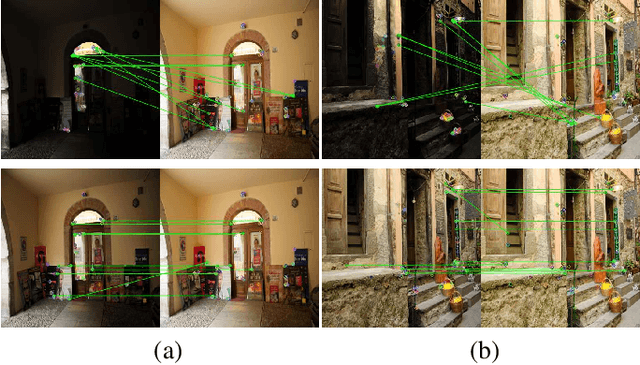

LEUGAN: Low-Light Image Enhancement by Unsupervised Generative Attentional Networks

Dec 24, 2020

Abstract: Restoring images from low-light data is a challenging problem. Most existing deep-network-based algorithms are designed to be trained with paired images. Due to the lack of real-world datasets, they usually generalize poorly in practice, losing image edge and color information. In this paper, we propose an unsupervised generation network with attention guidance to handle the low-light image enhancement task. Specifically, our network contains two parts: an edge auxiliary module that restores sharper edges and an attention guidance module that recovers more realistic colors. Moreover, we propose a novel loss function to make the edges of the generated images more visible. Experiments validate that our proposed algorithm performs favorably against state-of-the-art methods, especially for real-world images in terms of image clarity and noise control.

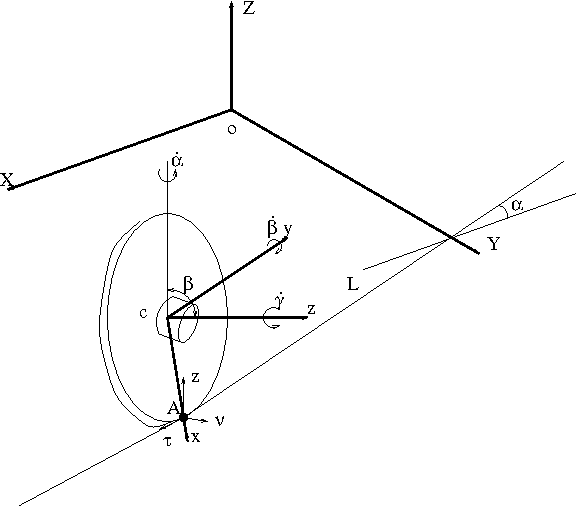

Gyroscopically Stabilized Robot: Balance and Tracking

Dec 11, 2004

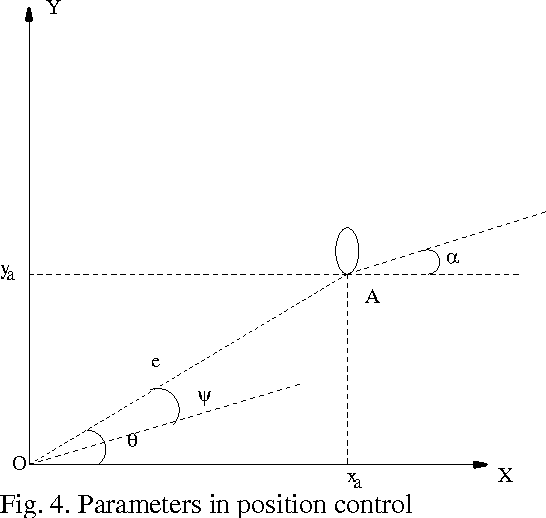

Abstract: The single-wheel, gyroscopically stabilized robot Gyrover is a dynamically stable but statically unstable, underactuated system. In this paper, based on the dynamic model of the robot, we investigate two classes of nonholonomic constraints associated with the system. Then, based on the backstepping technique, we propose a control law for balance control of Gyrover. Next, by transforming the system's states from Cartesian coordinates to polar coordinates, we provide control laws for point-to-point control and line tracking in Cartesian space.
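The abstract does not spell out the coordinate change, so the snippet below is only a minimal sketch of the generic planar Cartesian-to-polar transformation relative to a goal point, which is the usual starting point for point-to-point control laws. The function name `cartesian_to_polar` and its parameters are hypothetical; Gyrover's full state (lean angle, flywheel tilt, and so on) is not modeled here.

```python
import math

def cartesian_to_polar(x, y, goal_x=0.0, goal_y=0.0):
    """Return (rho, theta): distance to the goal and bearing of the
    position vector measured from the goal, in radians."""
    dx = x - goal_x
    dy = y - goal_y
    rho = math.hypot(dx, dy)      # Euclidean distance to the goal
    theta = math.atan2(dy, dx)    # angle in (-pi, pi]
    return rho, theta

# Hypothetical robot position (3, 4) with the goal at the origin.
rho, theta = cartesian_to_polar(3.0, 4.0)
```

In polar form, driving the robot to the goal reduces to regulating `rho` toward zero, which is why this change of variables is a common choice for point-to-point control.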
