UMLE: Unsupervised Multi-discriminator Network for Low Light Enhancement

Dec 25, 2020

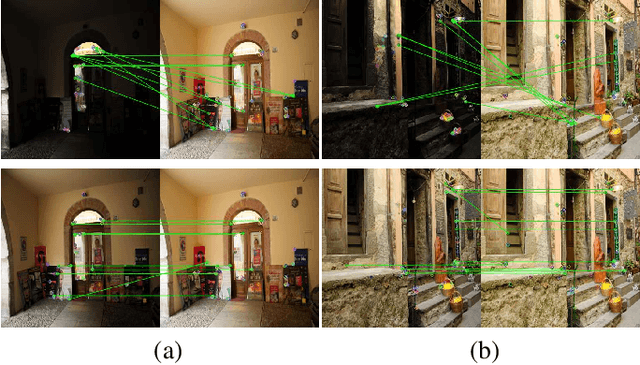

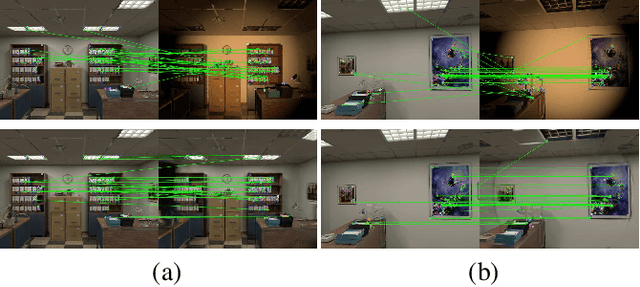

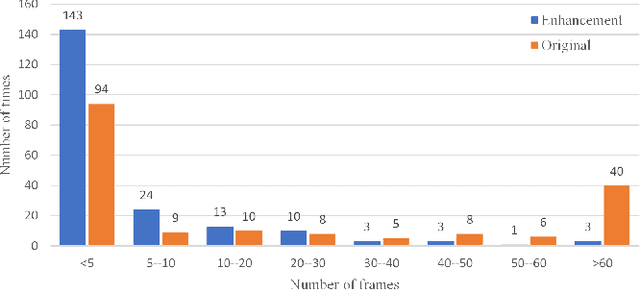

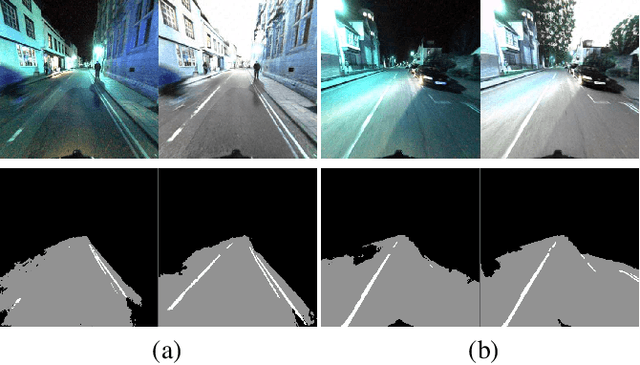

Low-light image enhancement, which involves recovering color and texture details from low-light images, is a complex and vital task. In automated driving, low-light scenes can seriously degrade vision-based applications. To address this problem, we propose a real-time unsupervised generative adversarial network (GAN) with multiple discriminators: a multi-scale discriminator, a texture discriminator, and a color discriminator. These distinct discriminators evaluate images from different perspectives. Further, because different channel features carry different information and illumination is uneven across the image, we propose a feature fusion attention module that combines channel attention with pixel attention to extract image features. Additionally, to reduce training time, we adopt a shared encoder for the generator and the discriminator, which makes the model more compact and training more stable. Experiments indicate that our method outperforms state-of-the-art methods in both qualitative and quantitative evaluations, and that it significantly improves both positioning and detection results for automated driving.
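
The abstract does not specify how the feature fusion attention module is built, so the following is only a minimal NumPy sketch of the general idea: channel attention reweights whole feature maps, and pixel attention reweights individual spatial locations. The function names, the bottleneck ratio, the random weights, and the choice to apply channel attention before pixel attention are all assumptions made for illustration, not the authors' design.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """Scale each channel of feat (C, H, W) by a weight in (0, 1)."""
    pooled = feat.mean(axis=(1, 2))            # global average pool -> (C,)
    hidden = np.maximum(w1 @ pooled, 0.0)      # bottleneck + ReLU -> (C // r,)
    weights = sigmoid(w2 @ hidden)             # one weight per channel -> (C,)
    return feat * weights[:, None, None]


def pixel_attention(feat, v1, v2):
    """Scale each spatial location of feat (C, H, W) by a weight in (0, 1)."""
    # A 1x1 convolution is a per-pixel linear map over the channel axis.
    hidden = np.maximum(np.einsum("kc,chw->khw", v1, feat), 0.0)  # (C // r, H, W)
    weights = sigmoid(np.einsum("ok,khw->ohw", v2, hidden))       # (1, H, W)
    return feat * weights


def feature_fusion_attention(feat, w1, w2, v1, v2):
    """Hypothetical fusion: channel attention followed by pixel attention."""
    return pixel_attention(channel_attention(feat, w1, w2), v1, v2)


# Illustrative run with random (untrained) weights; all sizes are assumptions.
rng = np.random.default_rng(0)
C, H, W, r = 8, 5, 5, 4
feat = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C))
w2 = rng.standard_normal((C, C // r))
v1 = rng.standard_normal((C // r, C))
v2 = rng.standard_normal((1, C // r))
out = feature_fusion_attention(feat, w1, w2, v1, v2)
```

Because both attention maps lie in (0, 1), the output keeps the input's shape and never exceeds it in magnitude. In a trained network, the weights would be learned so that informative channels and poorly lit regions receive larger weights.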
