Tingchen Ma

Explicit Second-order LiDAR Bundle Adjustment Algorithm Using Mean Squared Group Metric

Sep 03, 2024

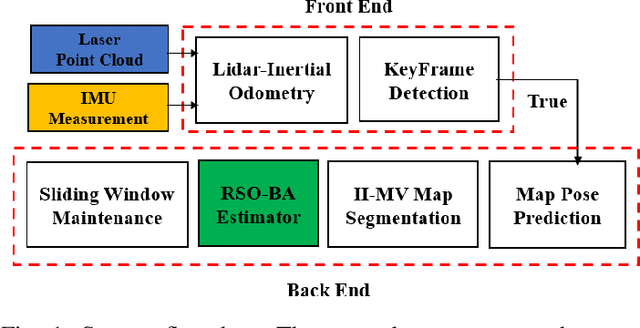

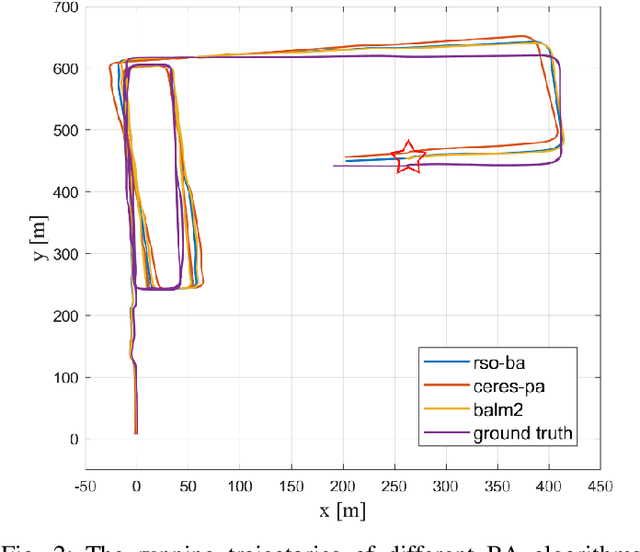

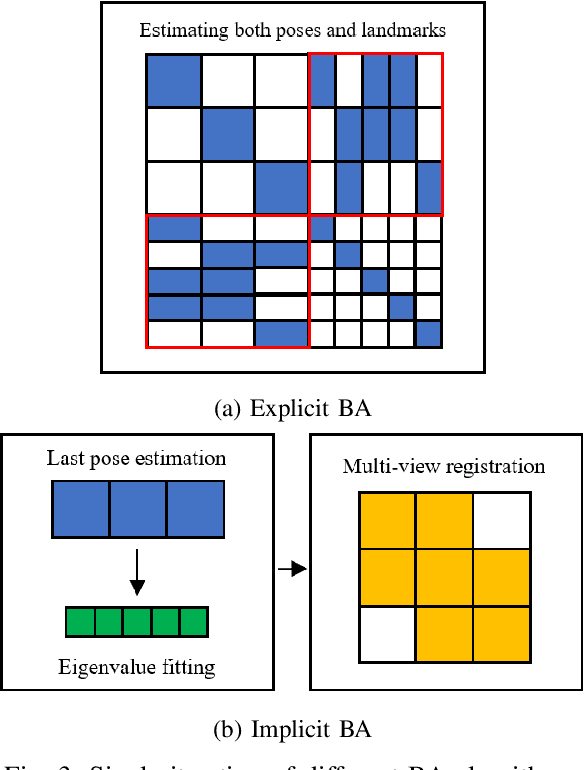

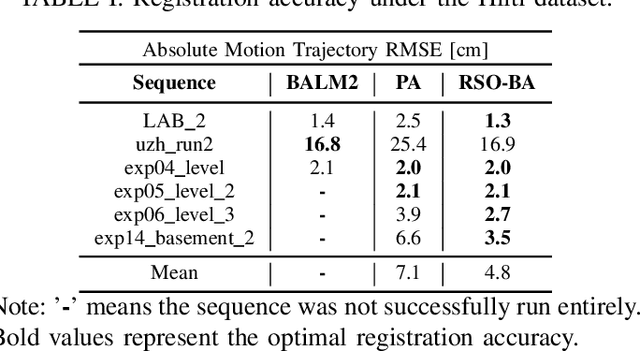

Abstract: The Bundle Adjustment (BA) algorithm is a widely used nonlinear optimization technique in the back end of Simultaneous Localization and Mapping (SLAM) systems. By leveraging the co-view relationships of landmarks from multiple perspectives, it constructs a joint estimation model for both poses and landmarks, enabling the system to generate refined maps and reduce front-end localization errors. However, applying BA to LiDAR data presents unique challenges: point clouds typically contain a large volume of 3D points, which makes robust and accurate model solving more complex. In this work, we propose a novel mean squared group metric (MSGM). This metric applies a mean square transformation to uniformly process the measurements of a plane landmark from a single perspective. The transformed metric ensures scale interpretability while avoiding time-consuming point-by-point calculations. By integrating a robust kernel function, the metrics involved in the BA model are reweighted, enhancing the robustness of the solution process. On the basis of the proposed robust LiDAR BA model, we derive an explicit second-order estimator (RSO-BA). This estimator employs analytical formulas for the Hessian and gradient calculations, ensuring the precision of the BA solution. We evaluate the proposed RSO-BA estimator against existing implicit second-order and explicit approximate second-order estimators using publicly available datasets. The experimental results demonstrate that the RSO-BA estimator outperforms its counterparts in registration accuracy and robustness, particularly in large-scale or complex unstructured environments.
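The idea of avoiding point-by-point calculations can be illustrated with a minimal sketch. It is not the paper's exact MSGM definition, only one plausible reading: for a plane with unit normal n and offset d, the mean of the squared point-to-plane distances (n·p + d)² over a group of points can be evaluated from three aggregated moments (the point count, the sum of the points, and the sum of their outer products), so the points themselves need to be visited only once. The names `PlaneGroup`, `accumulate`, `mean_squared_distance`, and `huber_weight` are hypothetical, the points are assumed to be already expressed in the plane's frame, and the Huber kernel is an assumed stand-in for the unspecified robust kernel.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PlaneGroup:
    """Aggregated moments of the points seen on one plane from one pose (hypothetical type)."""
    count: int
    sum_p: List[float]          # sum of p, length 3
    sum_ppt: List[List[float]]  # sum of p p^T, 3x3


def accumulate(points: Sequence[Vec3]) -> PlaneGroup:
    """Visit every point once and keep only the moments."""
    sum_p = [0.0, 0.0, 0.0]
    sum_ppt = [[0.0] * 3 for _ in range(3)]
    for p in points:
        for i in range(3):
            sum_p[i] += p[i]
            for j in range(3):
                sum_ppt[i][j] += p[i] * p[j]
    return PlaneGroup(len(points), sum_p, sum_ppt)


def mean_squared_distance(group: PlaneGroup, normal: Vec3, offset: float) -> float:
    """Mean of (n.p + d)^2 over the group, computed from the moments alone.

    Expanding the square gives (n^T S n + 2 d n^T s) / N + d^2,
    where S = sum of p p^T, s = sum of p, and N = point count.
    """
    quad = sum(
        normal[i] * group.sum_ppt[i][j] * normal[j]
        for i in range(3)
        for j in range(3)
    )
    lin = sum(normal[i] * group.sum_p[i] for i in range(3))
    return (quad + 2.0 * offset * lin) / group.count + offset * offset


def huber_weight(squared_residual: float, delta: float) -> float:
    """Reweighting factor of the Huber kernel (assumed kernel, delta > 0)."""
    r = squared_residual ** 0.5
    return 1.0 if r <= delta else delta / r


# Usage: four noisy points near the plane z = 0.
points = [(0.0, 0.0, 0.1), (1.0, 0.0, -0.1), (0.0, 1.0, 0.1), (1.0, 1.0, -0.1)]
group = accumulate(points)
msd = mean_squared_distance(group, (0.0, 0.0, 1.0), 0.0)
weight = huber_weight(msd, delta=0.05)
```

Because the mean, rather than the sum, of the squared distances is used, the value keeps the unit of a squared length regardless of how many points fall on the plane, which is one way to read the abstract's "scale interpretability".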

MLO: Multi-Object Tracking and Lidar Odometry in Dynamic Environment

Apr 29, 2022

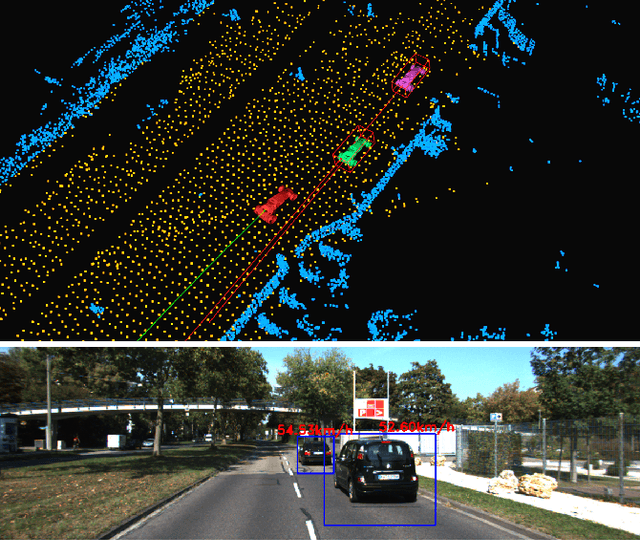

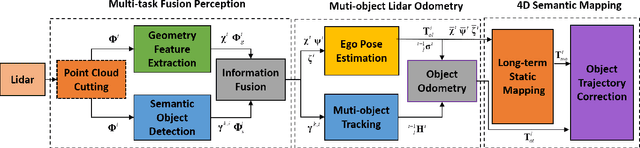

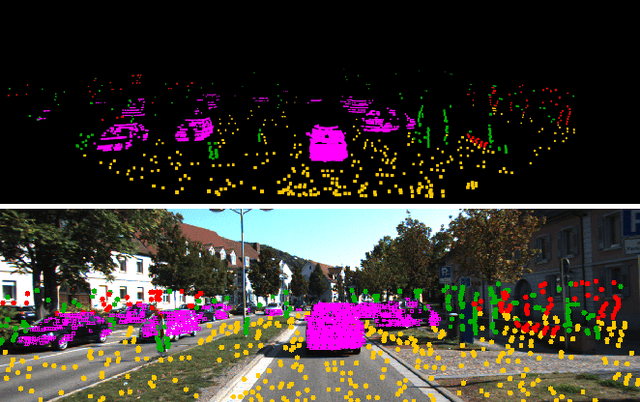

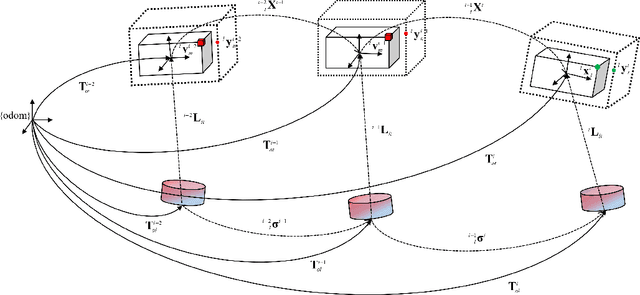

Abstract: A SLAM system built on the static scene assumption introduces significant estimation errors when a large number of moving objects appear in the field of view. Tracking and maintaining semantic objects helps in understanding the scene and provides rich decision information for planning and control modules. This paper introduces MLO, a multi-object lidar odometry that tracks ego-motion and movable objects using only the lidar sensor. First, it extracts foreground movable objects, the road surface, and static background features using a perception module that fuses geometry and object information. While robustly estimating ego-motion, multi-object tracking is accomplished through a least-squares method that fuses 3D bounding boxes and geometric point clouds. Then, a continuous 4D semantic object map on the timeline can be created. Our approach is evaluated qualitatively and quantitatively under different scenarios on the public KITTI dataset. The experimental results show that the ego localization accuracy of MLO is better than that of the A-LOAM system in highly dynamic, unstructured, and unknown semantic scenes. Meanwhile, the multi-object tracking method with semantic-geometry fusion also has clear advantages in accuracy and tracking robustness compared with either single method.
