Yizhu Gao

Can OpenAI o1 outperform humans in higher-order cognitive thinking?

Dec 07, 2024

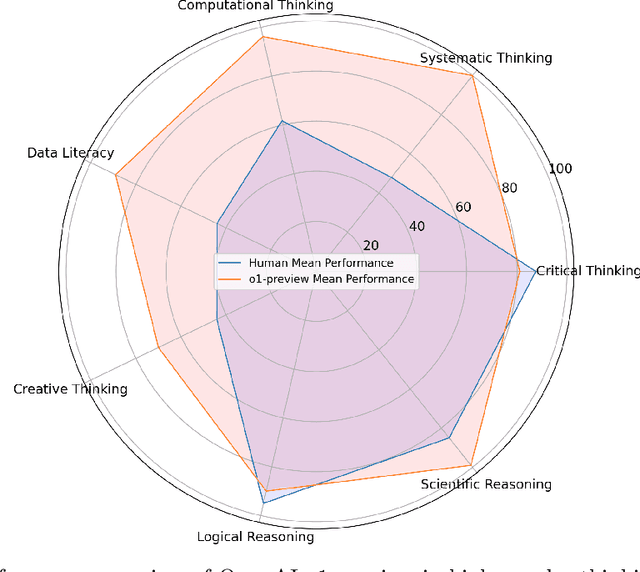

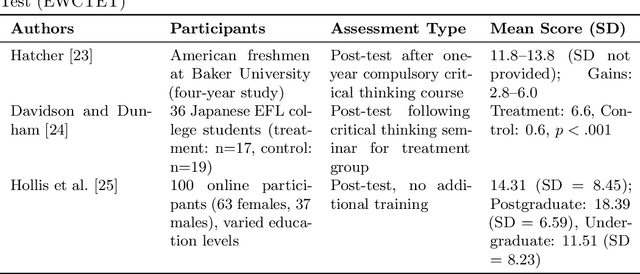

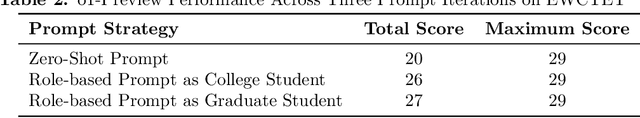

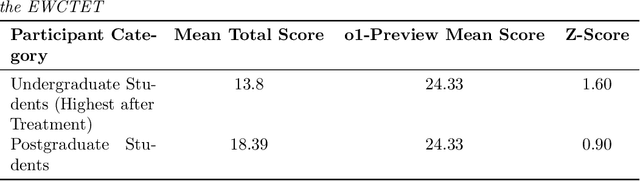

Abstract:This study evaluates the performance of OpenAI's o1-preview model in higher-order cognitive domains, including critical thinking, systematic thinking, computational thinking, data literacy, creative thinking, logical reasoning, and scientific reasoning. Using established benchmarks, we compared the o1-preview models's performance to human participants from diverse educational levels. o1-preview achieved a mean score of 24.33 on the Ennis-Weir Critical Thinking Essay Test (EWCTET), surpassing undergraduate (13.8) and postgraduate (18.39) participants (z = 1.60 and 0.90, respectively). In systematic thinking, it scored 46.1, SD = 4.12 on the Lake Urmia Vignette, significantly outperforming the human mean (20.08, SD = 8.13, z = 3.20). For data literacy, o1-preview scored 8.60, SD = 0.70 on Merk et al.'s "Use Data" dimension, compared to the human post-test mean of 4.17, SD = 2.02 (z = 2.19). On creative thinking tasks, the model achieved originality scores of 2.98, SD = 0.73, higher than the human mean of 1.74 (z = 0.71). In logical reasoning (LogiQA), it outperformed humans with average 90%, SD = 10% accuracy versus 86%, SD = 6.5% (z = 0.62). For scientific reasoning, it achieved near-perfect performance (mean = 0.99, SD = 0.12) on the TOSLS,, exceeding the highest human scores of 0.85, SD = 0.13 (z = 1.78). While o1-preview excelled in structured tasks, it showed limitations in problem-solving and adaptive reasoning. These results demonstrate the potential of AI to complement education in structured assessments but highlight the need for ethical oversight and refinement for broader applications.

Foundation Models for Low-Resource Language Education (Vision Paper)

Dec 06, 2024Abstract:Recent studies show that large language models (LLMs) are powerful tools for working with natural language, bringing advances in many areas of computational linguistics. However, these models face challenges when applied to low-resource languages due to limited training data and difficulty in understanding cultural nuances. Research is now focusing on multilingual models to improve LLM performance for these languages. Education in these languages also struggles with a lack of resources and qualified teachers, particularly in underdeveloped regions. Here, LLMs can be transformative, supporting innovative methods like community-driven learning and digital platforms. This paper discusses how LLMs could enhance education for low-resource languages, emphasizing practical applications and benefits.

Multimodality of AI for Education: Towards Artificial General Intelligence

Dec 12, 2023

Abstract:This paper presents a comprehensive examination of how multimodal artificial intelligence (AI) approaches are paving the way towards the realization of Artificial General Intelligence (AGI) in educational contexts. It scrutinizes the evolution and integration of AI in educational systems, emphasizing the crucial role of multimodality, which encompasses auditory, visual, kinesthetic, and linguistic modes of learning. This research delves deeply into the key facets of AGI, including cognitive frameworks, advanced knowledge representation, adaptive learning mechanisms, strategic planning, sophisticated language processing, and the integration of diverse multimodal data sources. It critically assesses AGI's transformative potential in reshaping educational paradigms, focusing on enhancing teaching and learning effectiveness, filling gaps in existing methodologies, and addressing ethical considerations and responsible usage of AGI in educational settings. The paper also discusses the implications of multimodal AI's role in education, offering insights into future directions and challenges in AGI development. This exploration aims to provide a nuanced understanding of the intersection between AI, multimodality, and education, setting a foundation for future research and development in AGI.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge