Yinheng Zhu

PANDA: A Gigapixel-level Human-centric Video Dataset

Mar 10, 2020

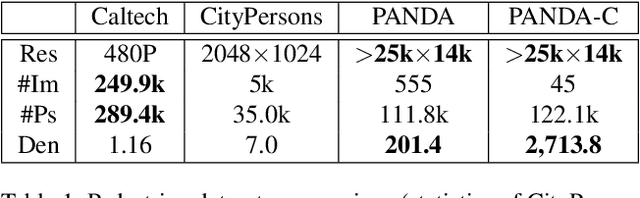

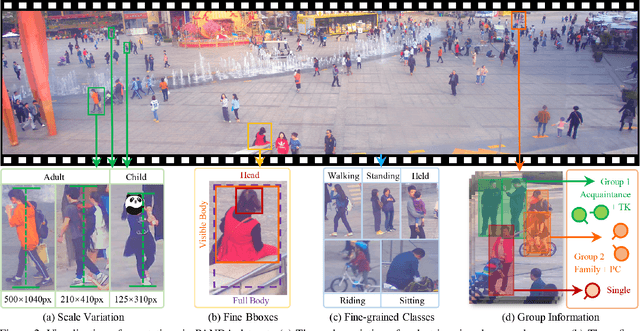

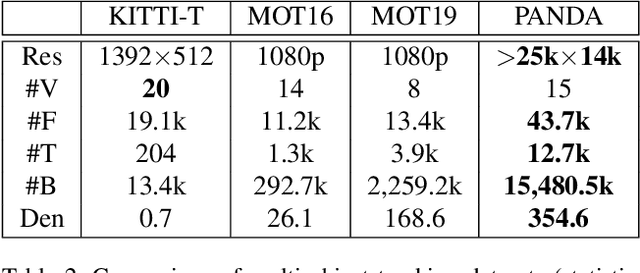

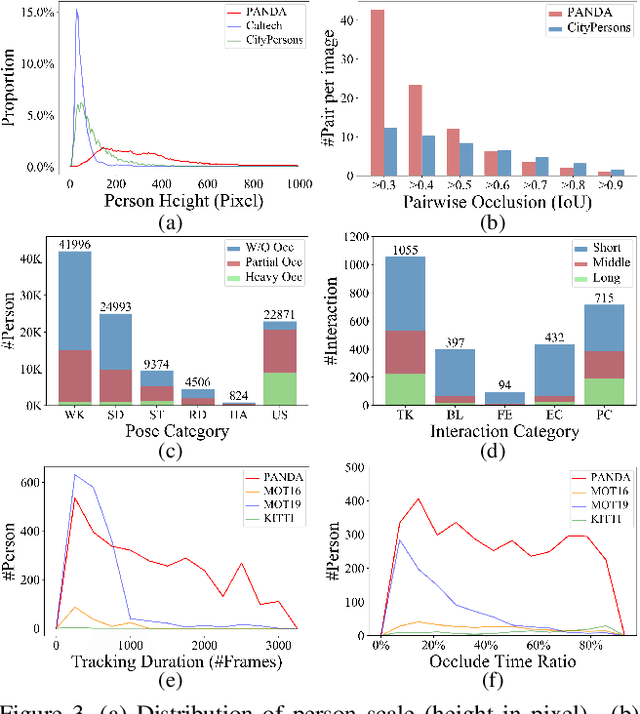

Abstract: We present PANDA, the first gigaPixel-level humAN-centric viDeo dAtaset, for large-scale, long-term, and multi-object visual analysis. The videos in PANDA were captured by a gigapixel camera and cover real-world scenes with both a wide field of view (~1 square kilometer area) and high-resolution details (~gigapixel-level/frame). The scenes may contain 4k head counts with over 100x scale variation. PANDA provides enriched and hierarchical ground-truth annotations, including 15,974.6k bounding boxes, 111.8k fine-grained attribute labels, 12.7k trajectories, 2.2k groups and 2.9k interactions. We benchmark the human detection and tracking tasks. Due to the vast variance of pedestrian pose, scale, occlusion and trajectory, existing approaches are challenged in both accuracy and efficiency. Given the uniqueness of PANDA with both wide FoV and high resolution, a new task of interaction-aware group detection is introduced. We design a 'global-to-local zoom-in' framework, where global trajectories and local interactions are simultaneously encoded, yielding promising results. We believe PANDA will contribute to the community of artificial intelligence and praxeology by understanding human behaviors and interactions in large-scale real-world scenes. PANDA Website: http://www.panda-dataset.com.

Head Mounted Pupil Tracking Using Convolutional Neural Network

Jul 17, 2018

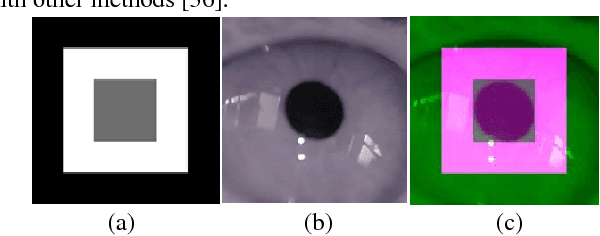

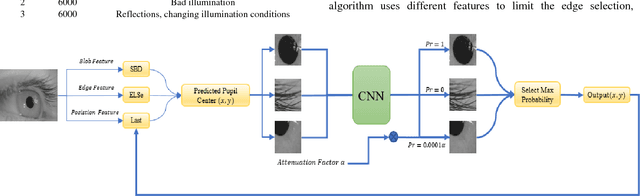

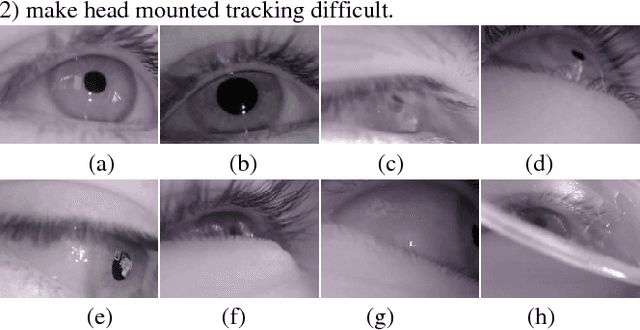

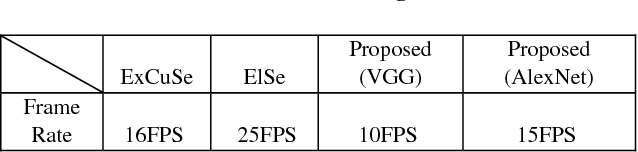

Abstract: Pupil tracking is an important branch of object tracking that requires high precision. We investigate head-mounted pupil tracking, which is often more convenient and precise than remote pupil tracking, but also more challenging. When pupil tracking suffers from noise such as bad illumination, detection precision decreases dramatically. With the arrival of head-mounted recording devices and public benchmark image datasets, head-mounted tracking algorithms have become easier to design and evaluate. In this paper, we propose a robust head-mounted pupil detection algorithm that uses a Convolutional Neural Network (CNN) to combine different features of the pupil. We consider three such features. First, we use three pupil feature-based algorithms to find the pupil center independently. Second, we use a CNN to evaluate the quality of each result. Finally, we select the best result as the output. The experimental results show that our proposed algorithm performs better than the current state of the art.
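The three steps in the abstract (independent candidate detection, quality scoring, selection of the best result) can be sketched roughly as follows. This is only an illustration of the selection logic, not the paper's implementation: the three detectors (`darkest_pixel`, `dark_centroid`, `image_center`) and the scorer `patch_darkness` are hypothetical stand-ins chosen to keep the example self-contained, and in the paper the quality score comes from a trained CNN rather than a hand-written function.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]         # (x, y) in pixel coordinates
Image = Sequence[Sequence[float]]   # grayscale rows, 0.0 = black, 1.0 = white
Detector = Callable[[Image], Point]
Scorer = Callable[[Image, Point], float]


def darkest_pixel(image: Image) -> Point:
    """Hypothetical detector 1: location of the single darkest pixel."""
    _, x, y = min((v, x, y) for y, row in enumerate(image) for x, v in enumerate(row))
    return (float(x), float(y))


def image_center(image: Image) -> Point:
    """Hypothetical detector 2: geometric center of the image."""
    return ((len(image[0]) - 1) / 2.0, (len(image) - 1) / 2.0)


def dark_centroid(image: Image, threshold: float = 0.5) -> Point:
    """Hypothetical detector 3: centroid of all pixels darker than `threshold`."""
    dark = [(x, y) for y, row in enumerate(image) for x, v in enumerate(row) if v < threshold]
    if not dark:
        return image_center(image)  # fall back when nothing is dark enough
    return (sum(x for x, _ in dark) / len(dark), sum(y for _, y in dark) / len(dark))


def patch_darkness(image: Image, center: Point) -> float:
    """Stand-in for the CNN quality score: darkness at the candidate location."""
    x = min(max(round(center[0]), 0), len(image[0]) - 1)
    y = min(max(round(center[1]), 0), len(image) - 1)
    return 1.0 - image[y][x]


def select_best_center(
    image: Image, detectors: List[Detector], score: Scorer
) -> Tuple[Point, float]:
    """Run every detector, score each candidate, and return the best one."""
    candidates = [detect(image) for detect in detectors]
    scores = [score(image, c) for c in candidates]
    best = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[best], scores[best]
```

To try it, pass a small grayscale image (a list of rows of floats) together with `[darkest_pixel, image_center, dark_centroid]` and `patch_darkness` to `select_best_center`. Replacing `patch_darkness` with a learned model that maps an image and a candidate center to a quality value would bring the sketch closer to the approach the abstract describes.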
