Yinglong Miao

Resolution Complete In-Place Object Retrieval given Known Object Models

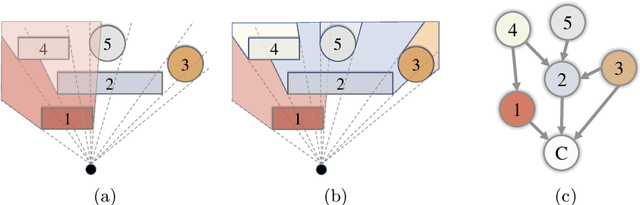

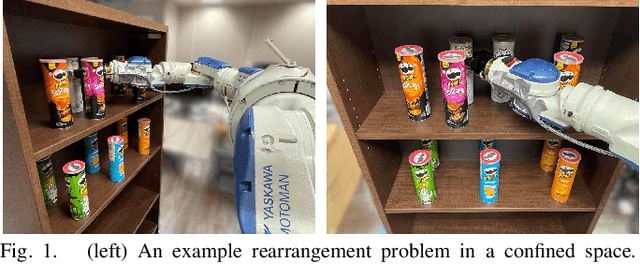

Mar 25, 2023

Abstract: This work proposes a robot task planning framework for retrieving a target object in a confined workspace among multiple stacked objects that obstruct the target. The robot can use prehensile picking and in-workspace placing actions. The method assumes access to 3D models for the visible objects in the scene. The key contribution is in achieving desirable properties, i.e., to provide (a) safety, by avoiding collisions with sensed obstacles, objects, and occluded regions, and (b) resolution completeness (RC) - or probabilistic completeness (PC) depending on implementation - which indicates that a solution will eventually be found (if one exists) as the resolution of algorithmic parameters increases. A heuristic variant of the basic RC algorithm is also proposed to solve the task more efficiently while retaining the desirable properties. Simulation results compare using random picking and placing operations against the basic RC algorithm that reasons about object dependency as well as its heuristic variant. The success rate is higher for the RC approaches given the same amount of time. The heuristic variant is able to solve the problem even more efficiently than the basic approach. The integration of the RC algorithm with perception, where an RGB-D sensor detects the objects as they are being moved, enables real robot demonstrations of safely retrieving target objects from a cluttered shelf.

Safe, Occlusion-Aware Manipulation for Online Object Reconstruction in Confined Spaces

May 24, 2022

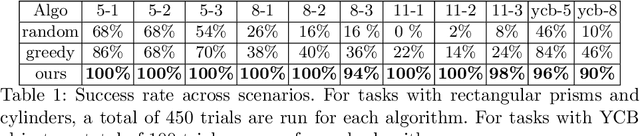

Abstract: Recent work in robotic manipulation focuses on object retrieval in cluttered space under occlusion. Nevertheless, the majority of efforts either lack an analysis of the conditions under which the approaches are complete, or apply only when objects can be removed from the workspace. This work formulates the general, occlusion-aware manipulation task, and focuses on safe object reconstruction in a confined space with in-place relocation. A framework that ensures safety with completeness guarantees is proposed. Furthermore, an algorithm, which is an instantiation of this framework for monotone instances, is developed and evaluated empirically by comparing against a random and a greedy baseline on randomly generated experiments in simulation. Even for cluttered scenes with realistic objects, the proposed algorithm significantly outperforms the baselines and maintains a high success rate across experimental conditions.

Efficient and High-quality Prehensile Rearrangement in Cluttered and Confined Spaces

Oct 06, 2021

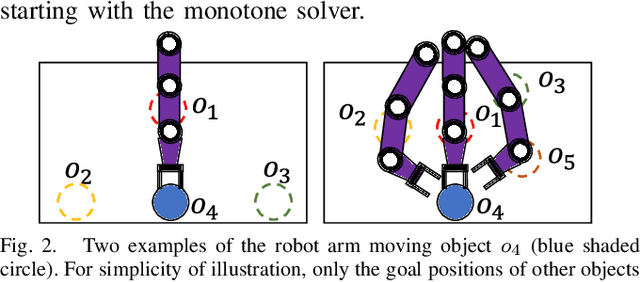

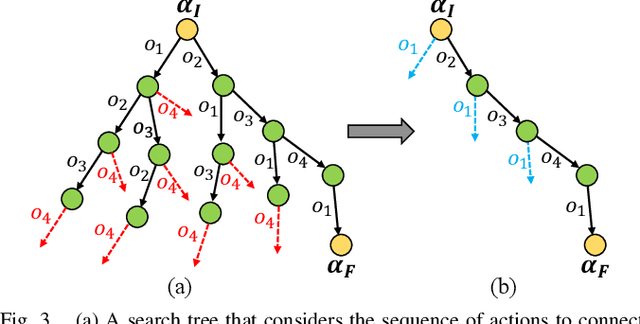

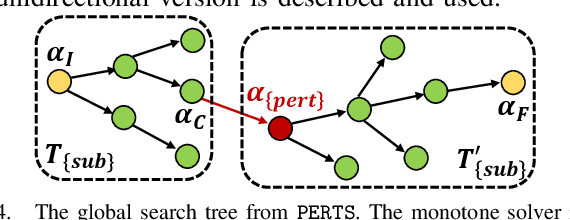

Abstract: Prehensile object rearrangement in cluttered and confined spaces has broad applications but is also challenging. For instance, rearranging products in a grocery or home shelf means that the robot cannot directly access all objects and has limited free space. This is harder than tabletop rearrangement, where objects are easily accessible with top-down grasps, which simplifies robot-object interactions. This work focuses on problems where such interactions are critical for completing tasks and extends state-of-the-art results in rearrangement planning. It proposes a new efficient and complete solver under general constraints for monotone instances, which can be solved by moving each object at most once. The monotone solver reasons about robot-object constraints and uses them to effectively prune the search space. The new monotone solver is integrated with a global planner to quickly solve non-monotone instances with high-quality solutions. Furthermore, this work contributes an effective pre-processing tool to speed up arm motion planning for rearrangement in confined spaces. The pre-processing tool provides significant speed-ups (49.1% faster on average) in online query resolution. Comparisons in simulations further demonstrate that the proposed monotone solver, equipped with the pre-processing tool, results in 57.3% faster computation and 3 times higher success rate than alternatives. Similarly, the resulting global planner is computationally more efficient and has a higher success rate given the more powerful monotone solver and the pre-processing tool, while producing high-quality solutions for non-monotone instances (i.e., only 1.3 buffers are needed on average). Videos demonstrating solutions on a real robotic system, along with code, can be found at https://github.com/Rui1223/uniform_object_rearrangement.
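To make the notion of a monotone instance concrete, the following is a minimal sketch of a search over object orderings in which each object is moved at most once. It is not the solver from the paper: the function `monotone_order`, the predicate `can_move`, and the toy `blockers` table are all hypothetical names introduced for illustration, and the real robot-object constraints are replaced by a simple "these objects must move first" check.

```python
from typing import Callable, FrozenSet, Hashable, Iterable, List, Optional


def monotone_order(
    objects: Iterable[Hashable],
    can_move: Callable[[Hashable, FrozenSet[Hashable]], bool],
) -> Optional[List[Hashable]]:
    """Return an order that moves every object exactly once, or None.

    can_move(obj, moved) is an assumed, user-supplied predicate that says
    whether obj can be moved to its goal once the objects in `moved`
    already sit at their goals.
    """
    objects = list(objects)
    dead = set()  # sets of moved objects already shown to lead nowhere

    def dfs(moved: FrozenSet[Hashable], order: List[Hashable]):
        if len(moved) == len(objects):
            return list(order)
        if moved in dead:
            return None  # prune: this arrangement state failed before
        for obj in objects:
            if obj in moved or not can_move(obj, moved):
                continue
            order.append(obj)
            result = dfs(moved | {obj}, order)
            if result is not None:
                return result
            order.pop()
        dead.add(moved)
        return None

    return dfs(frozenset(), [])


# Toy constraints (illustrative only): each object lists the objects
# that must reach their goals before it can be moved.
blockers = {"a": {"b"}, "b": set(), "c": {"a"}}
order = monotone_order(blockers, lambda obj, moved: blockers[obj] <= moved)
```

Memoizing failed sets of moved objects is what keeps the search from revisiting the same arrangement through different orderings. An instance with a cyclic dependency has no monotone solution, so the function returns `None`, which is where a global planner with buffer placements would take over.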

Online Object Model Reconstruction and Reuse for Lifelong Improvement of Robot Manipulation

Sep 28, 2021

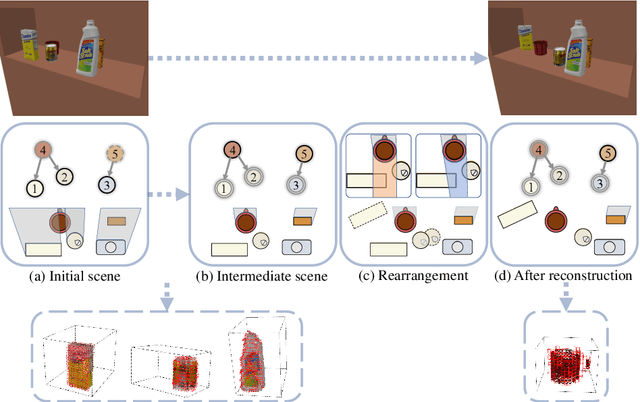

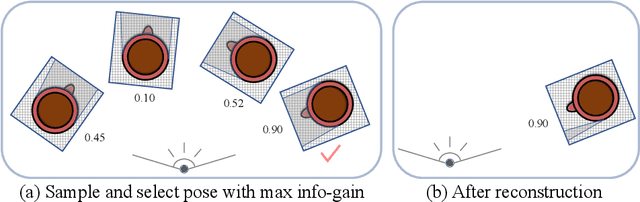

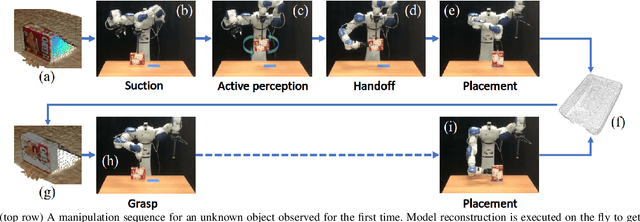

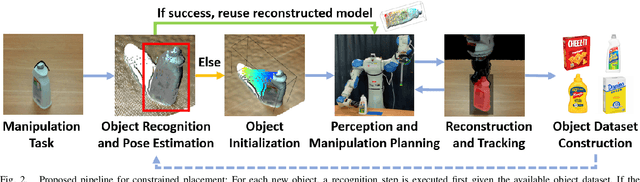

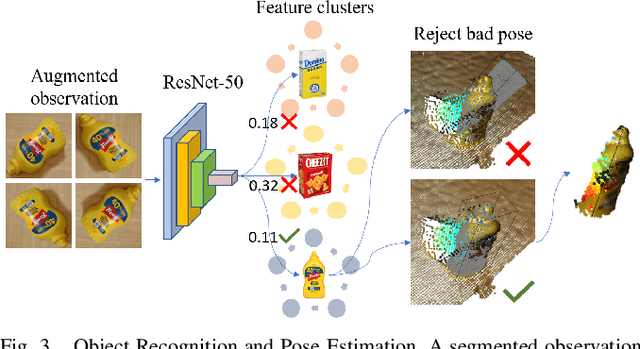

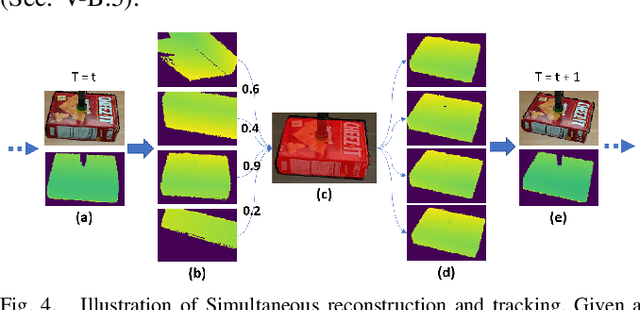

Abstract: This work proposes a robotic pipeline for picking and constrained placement of objects without geometric shape priors. Compared to recent efforts developed for similar tasks, where every object was assumed to be novel, the proposed system recognizes previously manipulated objects and performs online model reconstruction and reuse. Over a lifelong manipulation process, the system keeps learning features of objects it has interacted with and updates their reconstructed models. Whenever an instance of a previously manipulated object reappears, the system aims to first recognize it and then register its previously reconstructed model given the current observation. This step greatly reduces object shape uncertainty, allowing the system to reason even about parts of the objects that are currently not observable. This also results in better manipulation efficiency as it reduces the need for active perception of the target object during manipulation. To get a reusable reconstructed model, the proposed pipeline adopts i) TSDF for object representation, and ii) a variant of the standard particle filter algorithm for pose estimation and tracking of the partial object model. Furthermore, an effective way to construct and maintain a dataset of manipulated objects is presented. A sequence of real-world manipulation experiments is performed to show how future manipulation tasks become more effective and efficient by reusing reconstructed models of previously manipulated objects that were generated on the fly instead of treating objects as novel every time.
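As background on the TSDF representation mentioned above, here is a minimal sketch of the standard weighted running-average TSDF update, reduced to voxels along a single camera ray. It illustrates the general technique only and is not the pipeline's implementation; the function name `integrate_ray` and its parameters are assumptions made for this example.

```python
from typing import List


def integrate_ray(
    tsdf: List[float],
    weights: List[float],
    voxel_depths: List[float],
    measured_depth: float,
    trunc: float,
) -> None:
    """Fuse one depth measurement into the voxels along one ray, in place.

    Each voxel stores a truncated signed distance in [-1, 1] (positive in
    front of the surface) and the number of observations fused so far.
    """
    for i, z in enumerate(voxel_depths):
        sdf = measured_depth - z  # signed distance from voxel to surface
        if sdf < -trunc:
            continue  # far behind the surface: occluded, leave untouched
        d = min(1.0, sdf / trunc)  # truncate and normalize
        w = weights[i]
        tsdf[i] = (w * tsdf[i] + d) / (w + 1.0)  # running average
        weights[i] = w + 1.0


# Five voxels spaced 0.5 apart along a ray, one measurement at depth 1.0.
depths = [0.0, 0.5, 1.0, 1.5, 2.0]
tsdf = [0.0] * 5
weights = [0.0] * 5
integrate_ray(tsdf, weights, depths, measured_depth=1.0, trunc=0.5)
```

After this single update the surface lies at the zero crossing (the voxel at depth 1.0), and the last voxel keeps weight 0 because it is occluded. Unobserved voxels of this kind are exactly the shape uncertainty that a previously reconstructed model can help fill in.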

MPC-MPNet: Model-Predictive Motion Planning Networks for Fast, Near-Optimal Planning under Kinodynamic Constraints

Jan 17, 2021

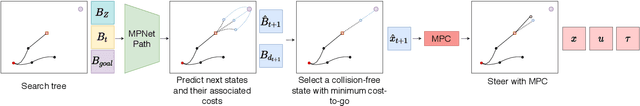

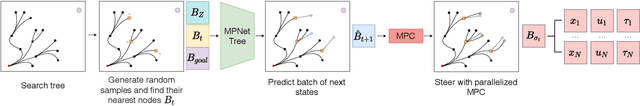

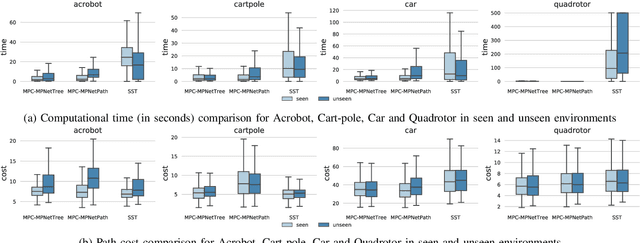

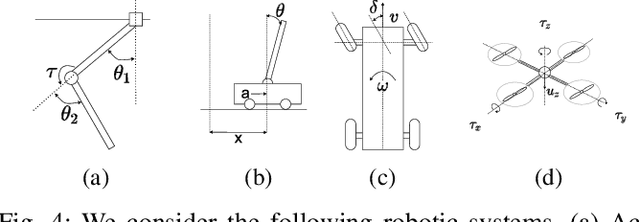

Abstract: Kinodynamic Motion Planning (KMP) seeks a robot motion subject to concurrent kinematic and dynamic constraints. To date, only a few methods solve KMP problems, and those that exist struggle to find near-optimal solutions and exhibit high computational complexity as the planning space dimensionality increases. To address these challenges, we present a scalable, imitation learning-based, Model-Predictive Motion Planning Networks framework that quickly finds near-optimal path solutions with worst-case theoretical guarantees under kinodynamic constraints for practical underactuated systems. Our framework introduces two algorithms built on a neural generator, discriminator, and a parallelizable Model Predictive Controller (MPC). The generator outputs various informed states towards the given target, and the discriminator selects the best possible subset from them for the extension. The MPC locally connects the selected informed states while satisfying the given constraints, leading to feasible, near-optimal solutions. We evaluate our algorithms on a range of cluttered, kinodynamically constrained, and underactuated planning problems, with results indicating significant improvements in computation times, path qualities, and success rates over existing methods.

Motion Planning Networks: Bridging the Gap Between Learning-based and Classical Motion Planners

Jul 13, 2019

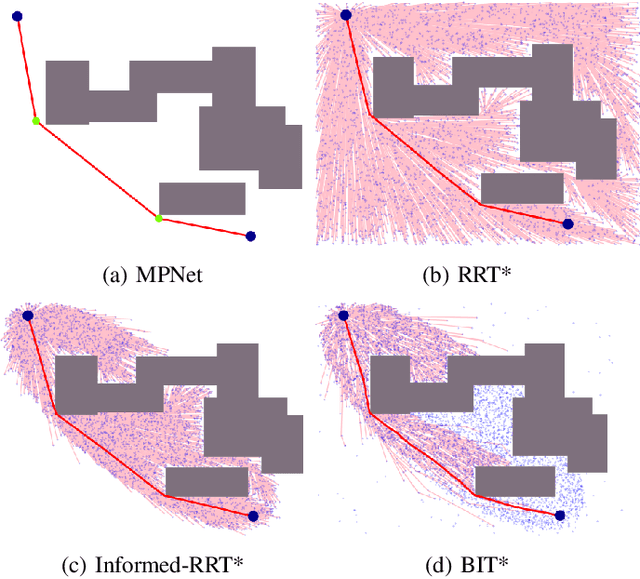

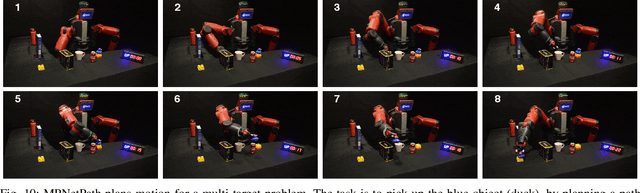

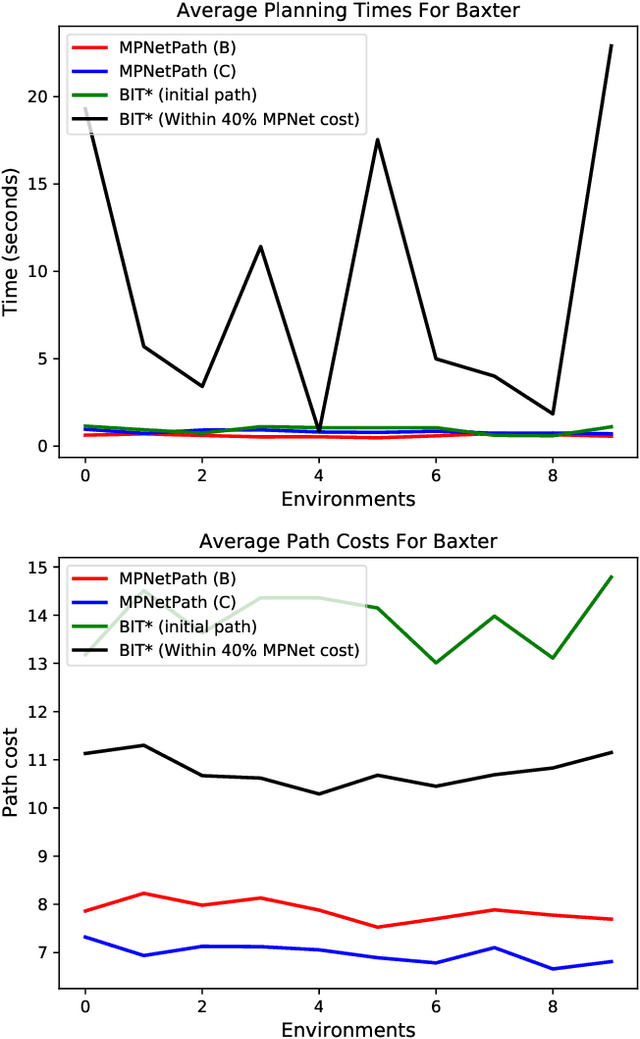

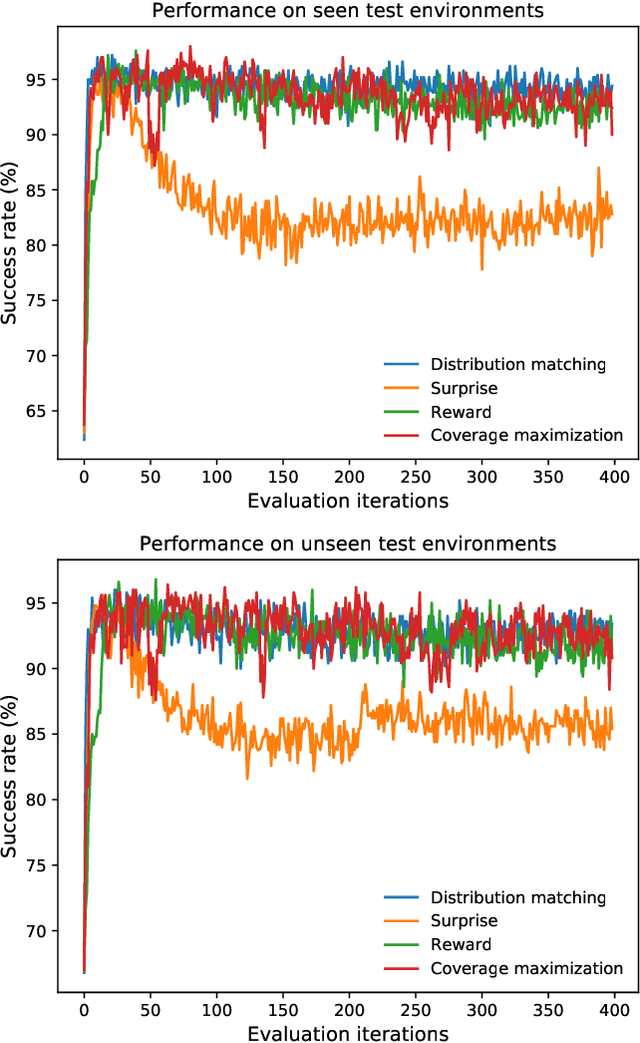

Abstract: This paper describes Motion Planning Networks (MPNet), a computationally efficient, learning-based neural planner for solving motion planning problems. MPNet uses neural networks to learn general near-optimal heuristics for path planning in seen and unseen environments. It receives environment information as point-clouds, as well as a robot's initial and desired goal configurations, and recursively calls itself to bidirectionally generate connectable paths. In addition to finding directly connectable and near-optimal paths in a single pass, we show that worst-case theoretical guarantees can be proven if we merge this neural network strategy with classical sampling-based planners in a hybrid approach while still retaining significant computational and optimality improvements. To learn the MPNet models, we present an active continual learning approach that enables MPNet to learn from streaming data and actively ask for expert demonstrations when needed, drastically reducing the amount of training data. We validate MPNet against gold-standard and state-of-the-art planning methods in a variety of problems from 2D to 7D robot configuration spaces in challenging and cluttered environments, with results showing significant and consistently stronger performance metrics, and motivating neural planning in general as a modern strategy for solving motion planning problems efficiently.
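The bidirectional generation described above can be illustrated with a small sketch, assuming a stand-in for the learned network. This is not MPNet's implementation: `bidirectional_plan`, `propose`, and `connectable` are hypothetical names, the loop is iterative rather than recursive, and the neural planner is replaced by a hand-written function that steps halfway toward its target on a 1D line.

```python
from typing import Callable, List, Optional

State = float  # 1D configuration, for illustration only


def bidirectional_plan(
    start: State,
    goal: State,
    propose: Callable[[State, State], State],
    connectable: Callable[[State, State], bool],
    max_steps: int = 50,
) -> Optional[List[State]]:
    """Grow one path from the start and one from the goal, alternating.

    propose(current, target) stands in for the learned planner: it returns
    the next state from `current` toward `target`. connectable(a, b) stands
    in for a local collision-checked connection test.
    """
    fwd, bwd = [start], [goal]
    grow_fwd = True
    for _ in range(max_steps):
        if connectable(fwd[-1], bwd[-1]):
            return fwd + bwd[::-1]  # stitch the two partial paths
        if grow_fwd:
            fwd.append(propose(fwd[-1], bwd[-1]))
        else:
            bwd.append(propose(bwd[-1], fwd[-1]))
        grow_fwd = not grow_fwd  # swap roles, as in bidirectional search
    return None  # a hybrid planner could fall back to a classical one here


path = bidirectional_plan(
    0.0,
    8.0,
    propose=lambda cur, tgt: cur + (tgt - cur) / 2,
    connectable=lambda a, b: abs(a - b) <= 1.0,
)
```

In this toy run the two partial paths meet after three proposals. The `None` branch marks the point where a hybrid approach would hand the query to a classical sampling-based planner in order to keep worst-case guarantees.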
