Yichen Jia

Fairy: Fast Parallelized Instruction-Guided Video-to-Video Synthesis

Dec 20, 2023

Abstract: In this paper, we introduce Fairy, a minimalist yet robust adaptation of image-editing diffusion models that extends them to video editing. Our approach centers on anchor-based cross-frame attention, a mechanism that implicitly propagates diffusion features across frames, ensuring superior temporal coherence and high-fidelity synthesis. Fairy not only addresses the memory and processing-speed limitations of previous models but also improves temporal consistency through a unique data augmentation strategy that renders the model equivariant to affine transformations in both source and target images. Fairy is remarkably efficient: it generates 120-frame 512x384 videos (4 seconds at 30 FPS) in just 14 seconds, outpacing prior works by at least 44x. A comprehensive user study involving 1000 generated samples confirms that our approach delivers superior quality, decisively outperforming established methods.
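The anchor-based cross-frame attention described above can be pictured with a small sketch. This is a simplified single-head illustration under our own assumptions, not Fairy's implementation. Queries, keys and values are taken as already-projected arrays of shape (frames, tokens, dim), learned projections and multi-head splitting are omitted, and the names `anchor_cross_frame_attention` and `anchor_idx` are hypothetical. Every frame's queries attend only to the pooled keys and values of a few anchor frames, so all frames draw features from the same shared set.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def anchor_cross_frame_attention(q, k, v, anchor_idx):
    """Hypothetical single-head sketch.

    q, k, v: arrays of shape (frames, tokens, dim), already projected.
    anchor_idx: indices of the anchor frames.
    Every frame's queries attend to the keys/values of the anchor frames only.
    """
    dim = q.shape[-1]
    # Pool the anchor frames' tokens into one shared key/value set.
    k_anchor = k[anchor_idx].reshape(-1, dim)  # (anchors * tokens, dim)
    v_anchor = v[anchor_idx].reshape(-1, dim)
    # Scaled dot-product scores: (frames, tokens, anchors * tokens).
    scores = q @ k_anchor.T / np.sqrt(dim)
    weights = softmax(scores, axis=-1)
    return weights @ v_anchor  # (frames, tokens, dim)


rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(4, 6, 8)) for _ in range(3))
out = anchor_cross_frame_attention(q, k, v, anchor_idx=[0, 2])
print(out.shape)  # (4, 6, 8)
```

Because the anchor keys and values are shared, they can be computed once and reused for every non-anchor frame, and frames other than the anchors have no influence on one another in this sketch.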

Censored Quantile Regression Neural Networks

May 26, 2022

Abstract: This paper considers quantile regression on censored data using neural networks (NNs). This adds to the survival analysis toolkit by allowing direct prediction of the target variable, along with a distribution-free characterisation of uncertainty, using a flexible function approximator. We begin by showing how an algorithm popular in linear models can be applied to NNs. However, the resulting procedure is inefficient, requiring sequential optimisation of an individual NN at each desired quantile. Our major contribution is a novel algorithm that simultaneously optimises a grid of quantiles output by a single NN. To offer theoretical insight into our algorithm, we show, first, that it can be interpreted as a form of expectation-maximisation and, second, that it exhibits a desirable 'self-correcting' property. Experimentally, the algorithm produces quantiles that are better calibrated than those of existing methods on 10 out of 12 real datasets.
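One way to picture optimising a grid of quantiles from a single network is a reweighted check (pinball) loss in the style of Portnoy's censored quantile regression. The sketch below is an assumption-laden illustration, not the paper's algorithm. The names `censored_grid_loss` and `y_large` are hypothetical, predicted quantiles are assumed sorted along the grid, and the censoring level `tau_c` is read off the model's own predicted quantile curve by linear interpolation. Each censored time c is split into a mass w at c and 1 - w at a large pseudo-value, with w = max(0, (tau - tau_c) / (1 - tau_c)).

```python
import numpy as np


def pinball(residual, tau):
    # Check (pinball) loss: rho_tau(r) = r * (tau - 1[r < 0]).
    return residual * (tau - (residual < 0).astype(float))


def censored_grid_loss(q_pred, y, censored, taus, y_large):
    """Hypothetical Portnoy-style loss for a grid of quantiles.

    q_pred:   (n, m) predicted quantiles, sorted along axis 1.
    y:        (n,) observed times (event or censoring time).
    censored: (n,) bool, True where the observation is right-censored.
    taus:     (m,) increasing quantile levels strictly inside (0, 1).
    y_large:  pseudo-value placed above every plausible time.
    """
    q_pred = np.asarray(q_pred, dtype=float)
    y = np.asarray(y, dtype=float)
    taus = np.asarray(taus, dtype=float)
    total = 0.0
    for i in range(len(y)):
        r = y[i] - q_pred[i]
        if not censored[i]:
            total += pinball(r, taus).sum()
            continue
        # Quantile level at which the censoring time sits, read off the
        # model's current predicted quantile curve (held fixed, E-step-like).
        tau_c = np.interp(y[i], q_pred[i], taus)
        # Once tau exceeds tau_c, put weight w at y[i] and 1 - w at y_large.
        w = np.clip((taus - tau_c) / (1.0 - tau_c), 0.0, 1.0)
        total += (w * pinball(r, taus)
                  + (1.0 - w) * pinball(y_large - q_pred[i], taus)).sum()
    return total / len(y)


taus = np.array([0.25, 0.5, 0.75])
q_pred = np.array([[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]])
loss = censored_grid_loss(q_pred, y=[2.0, 2.0], censored=[False, True],
                          taus=taus, y_large=100.0)
print(loss)  # 55.375
```

In this sketch, reading `tau_c` from the current predictions and then refitting the network against the resulting weights is what gives the procedure an expectation-maximisation flavour; the paper's exact weighting scheme may differ.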

DeepCENT: Prediction of Censored Event Time via Deep Learning

Feb 08, 2022

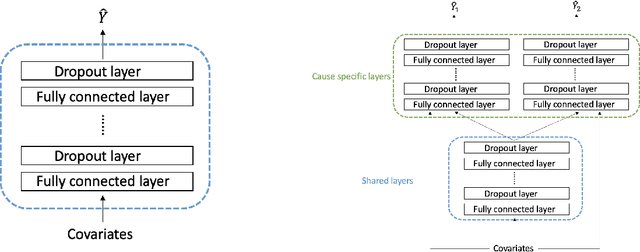

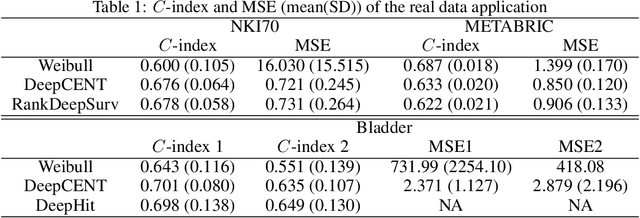

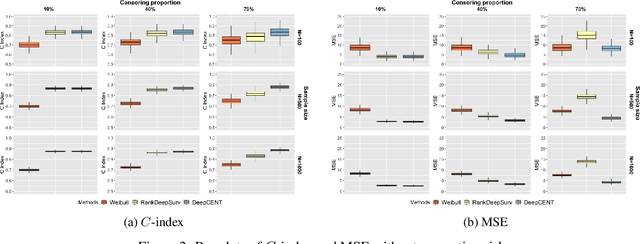

Abstract: With the rapid advances of deep learning, many computational methods have been developed to analyze nonlinear and complex right-censored data. However, most of these methods focus on predicting the survival function or hazard function rather than a single-valued time to an event. In this paper, we propose a novel method, DeepCENT, to directly predict the individual time to an event. It uses a deep learning framework with an innovative loss function that combines the mean squared error and the concordance index. Most importantly, DeepCENT can handle competing risks, where one type of event precludes the other types of events from being observed. The validity and advantages of DeepCENT were evaluated using simulation studies and illustrated with three publicly available cancer data sets.
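A loss that combines squared error with the concordance index, as described above, might look roughly like the following. This is a hypothetical sketch rather than DeepCENT's published loss. The function names, the weight `lam`, the sigmoid surrogate for the C-index with temperature `sigma`, and the one-sided penalty for censored subjects are our assumptions, and competing risks are not handled. Subtracting the smoothed C-index rewards correctly ordered predictions, while the squared-error terms keep predicted times on the right scale.

```python
import numpy as np


def smooth_cindex(pred, time, event, sigma=1.0):
    """Sigmoid surrogate of Harrell's C-index for predicted event times.

    A pair (i, j) is comparable when subject i had the event and
    time[i] < time[j]; it is concordant when pred[i] < pred[j].
    """
    num, den = 0.0, 0
    for i in range(len(time)):
        if not event[i]:
            continue
        for j in range(len(time)):
            if time[i] < time[j]:
                # Soft version of the indicator 1[pred[i] < pred[j]].
                num += 1.0 / (1.0 + np.exp(-(pred[j] - pred[i]) / sigma))
                den += 1
    return num / den if den else 0.0


def deepcent_like_loss(pred, time, event, lam=1.0, sigma=1.0):
    """Hypothetical loss: squared error minus lam * smoothed C-index."""
    pred = np.asarray(pred, dtype=float)
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=bool)
    err = pred - time
    # Observed events: ordinary squared error.
    sq_obs = np.sum(err[event] ** 2)
    # Censored: the true time is at least time[i], so only predictions
    # below the censoring time are penalised.
    sq_cens = np.sum(np.minimum(err[~event], 0.0) ** 2)
    mse = (sq_obs + sq_cens) / len(time)
    return mse - lam * smooth_cindex(pred, time, event, sigma)


t = [1.0, 2.0, 3.0]
good = deepcent_like_loss(t, t, [True, True, True])
bad = deepcent_like_loss([3.0, 2.0, 1.0], t, [True, True, True])
# The correctly ordered predictions give the lower loss.
print(good < bad)
```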

Deep Learning for Quantile Regression: DeepQuantreg

Jul 14, 2020

Abstract: Neural networks, or deep learning, have recently drawn much attention in statistics as well as in image recognition and natural language processing. In statistical applications to censored survival data in particular, the loss function used for optimization has mainly been based on the partial likelihood from Cox's model and its variations, so that existing neural network libraries such as Keras, which is built on the open-source TensorFlow library, can be used. This paper presents a novel application of neural networks to quantile regression for right-censored survival data, in which the check function is adjusted by the inverse of the estimated censoring distribution. The main purpose of this work is to show that the deep learning method is flexible enough to predict nonlinear patterns more accurately than the traditional method, even in low-dimensional data, emphasizing the practicality of the method for censored survival data. Simulation studies were performed to generate nonlinear censored survival data and to compare the deep learning method with the traditional quantile regression method in terms of prediction accuracy. The proposed method is illustrated with a publicly available breast cancer data set with gene signatures. The source code is freely available at https://github.com/yicjia/DeepQuantreg.
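The inverse-probability-of-censoring adjustment of the check function mentioned above can be sketched as follows. Each uncensored observation's check loss is divided by the Kaplan-Meier estimate of the censoring survival function G at its observed time, and censored observations contribute zero. This is a minimal NumPy illustration under our own assumptions (the function names, evaluating G at the left limit G(Y_i-), and working directly on the time scale rather than, say, log time), not the code in the DeepQuantreg repository; in practice `pred` would be the network's output.

```python
import numpy as np


def km_left(times, events, t):
    """Kaplan-Meier estimate of S(t-) = P(T >= t) from (times, events)."""
    s = 1.0
    for u in np.unique(times[events == 1]):  # sorted distinct event times
        if u >= t:
            break
        at_risk = np.sum(times >= u)
        d = np.sum((times == u) & (events == 1))
        s *= 1.0 - d / at_risk
    return s


def ipcw_check_loss(pred, time, delta, tau):
    """Check loss for uncensored points, each weighted by 1 / G(Y_i-),
    where G is the Kaplan-Meier survival function of the censoring time."""
    pred = np.asarray(pred, dtype=float)
    time = np.asarray(time, dtype=float)
    delta = np.asarray(delta, dtype=int)
    cens = 1 - delta  # censoring plays the role of the "event" for G
    total = 0.0
    for i in range(len(time)):
        if delta[i] == 1:
            g = km_left(time, cens, time[i])
            r = time[i] - pred[i]
            total += r * (tau - float(r < 0)) / g
    return total / len(time)


# Median (tau = 0.5) loss; the first subject is censored at time 1.
print(ipcw_check_loss(pred=[0, 0, 0], time=[1, 2, 3], delta=[0, 1, 1], tau=0.5))
```

With no censoring every weight is 1 and the loss reduces to the ordinary average check loss, which is a quick sanity check on the weighting.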