Yen-Hsi Richard Tsai

Optimizing Sensor Network Design for Multiple Coverage

May 15, 2024

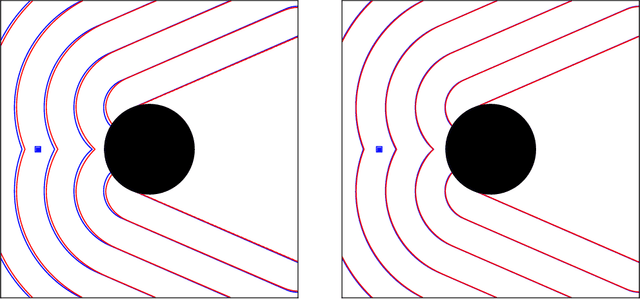

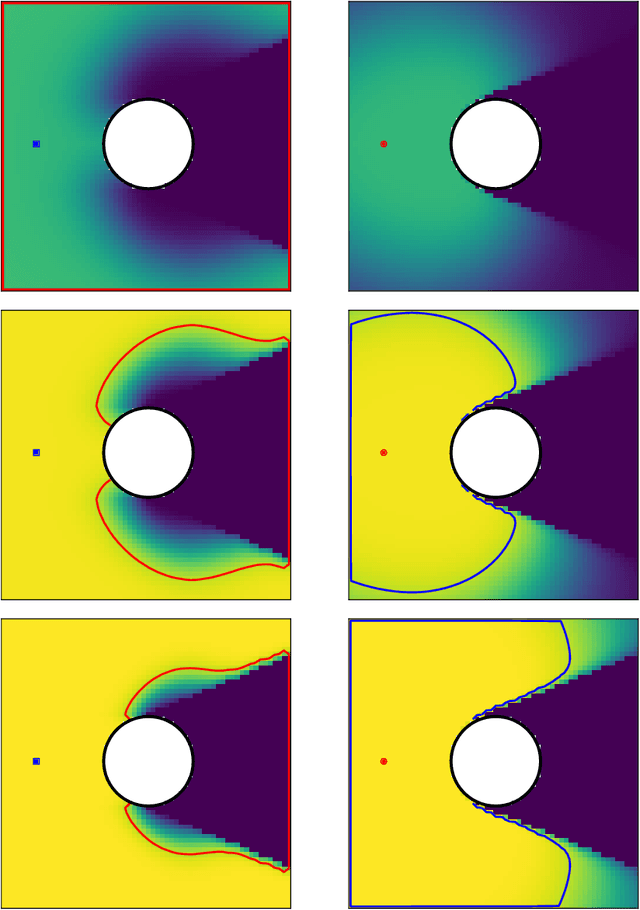

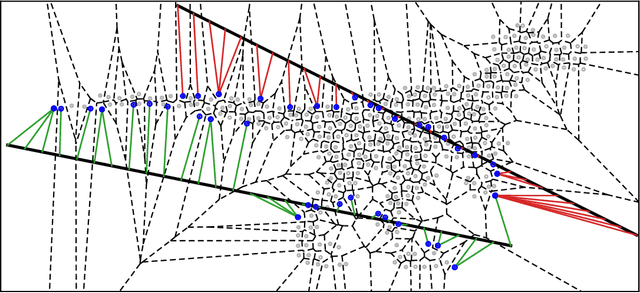

Abstract: Sensor placement optimization methods have been studied extensively. They can be applied to a wide range of applications, including surveillance of known environments, optimal locations for 5G towers, and placement of missile defense systems. However, few works explore the robustness and efficiency of the resulting sensor network with respect to sensor failure or adversarial attacks. This paper addresses this issue by minimizing the number of sensors needed so that every point of a non-simply connected domain is covered by a prescribed number of sensors. We introduce a new objective function for the greedy (next-best-view) algorithm to design efficient and robust sensor networks and derive theoretical bounds on the network's optimality. We further introduce a deep learning model to accelerate the algorithm for near real-time computations. The deep learning model requires the generation of training examples. Correspondingly, we show that understanding the geometric properties of the training data set provides important insights into the performance and training process of deep learning techniques. Finally, we demonstrate that a simple parallel version of the greedy approach using a simpler objective can be highly competitive.

Data-induced multiscale losses and efficient multirate gradient descent schemes

Feb 06, 2024

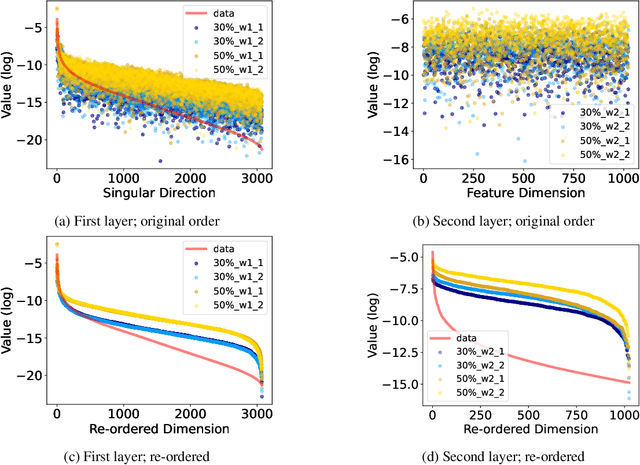

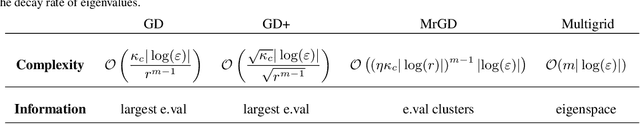

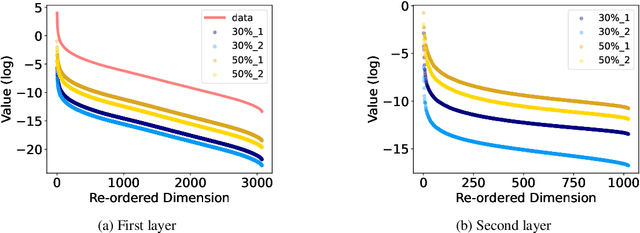

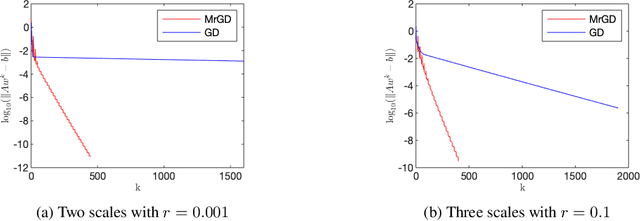

Abstract: This paper investigates the impact of multiscale data on machine learning algorithms, particularly in the context of deep learning. A dataset is multiscale if its distribution shows large variations in scale across different directions. This paper reveals multiscale structures in the loss landscape, including in its gradients and Hessians, that are inherited from the data. Correspondingly, it introduces a novel gradient descent approach, drawing inspiration from multiscale algorithms used in scientific computing. This approach seeks to transcend empirical learning rate selection, offering a more systematic, data-informed strategy to enhance training efficiency, especially in the later stages.
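To make the definition of multiscale data concrete, the following is a minimal sketch for the simplest possible case, a linear least-squares loss, where the Hessian is exactly the data covariance. It is not the paper's multirate scheme: the function names `hessian_spectrum` and `gd_error`, the two chosen scales, and the quadratic model are all assumptions made for illustration. It only shows how a scale gap in the data becomes a gap in the Hessian eigenvalues, and why a single learning rate then stalls in the small-scale direction.

```python
import numpy as np

def hessian_spectrum(scales, n=2000, seed=0):
    """Eigenvalues (ascending) of the Hessian of 0.5 * mean((X w - y)^2).

    The columns of X are Gaussian with the given standard deviations,
    so the data is multiscale when the entries of `scales` differ widely.
    """
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, len(scales))) * np.asarray(scales)
    H = X.T @ X / n  # Hessian of the least-squares loss, independent of y
    return np.linalg.eigvalsh(H)

def gd_error(eigs, lr, steps):
    """Remaining error per eigendirection after `steps` of gradient descent
    on a quadratic loss, starting from unit error in each direction."""
    return np.abs(1.0 - lr * eigs) ** steps

# Hypothetical data with a 100x scale gap between two directions.
eigs = hessian_spectrum([1.0, 0.01])
cond = eigs[-1] / eigs[0]                 # roughly the squared scale ratio
errors = gd_error(eigs, lr=1.0 / eigs[-1], steps=100)
print("condition number:", cond)
print("remaining error per direction:", errors)
```

With the learning rate tuned to the large-scale direction, that direction converges almost immediately while the small-scale direction has barely moved after 100 steps, which is the late-stage slowdown that a data-informed choice of rates is meant to address.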

Efficient and robust Sensor Placement in Complex Environments

Sep 15, 2023

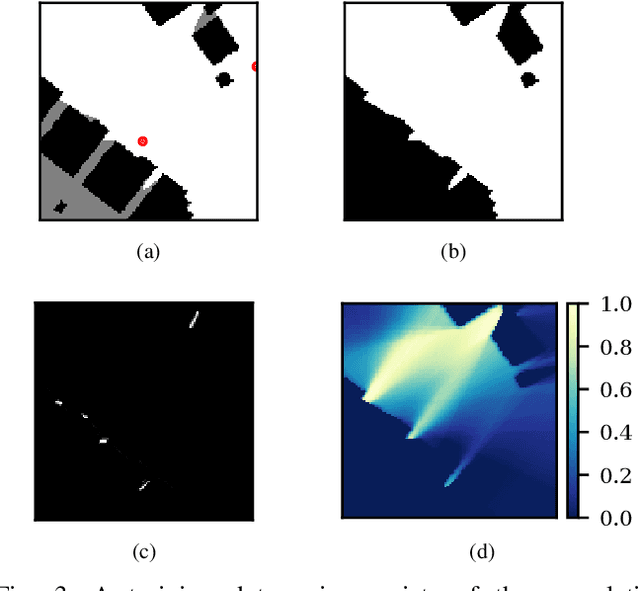

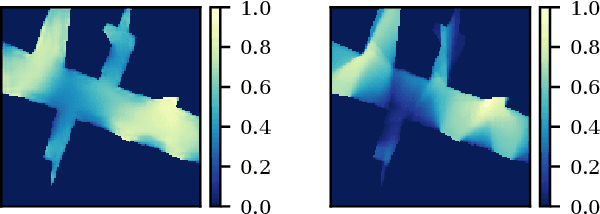

Abstract: We address the problem of efficient and unobstructed surveillance or communication in complex environments. On the one hand, one wishes to use a minimal number of sensors to cover the environment. On the other hand, it is often important to consider solutions that are robust against sensor failure or adversarial attacks. This paper addresses these challenges by designing minimal sensor sets that satisfy multi-coverage constraints: every point in the environment is covered by a prescribed number of sensors. We propose a greedy algorithm to achieve the objective. Further, we explore deep learning techniques to accelerate the evaluation of the objective function formulated in the greedy algorithm. The training of the neural network reveals that the geometric properties of the data significantly impact the network's performance, particularly at the end stage. By taking into account these properties, we discuss the differences in using greedy and $\epsilon$-greedy algorithms to generate data and their impact on the robustness of the network.
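The multi-coverage constraint can be illustrated on a discrete toy problem. The sketch below is an assumption-laden stand-in, not the paper's algorithm: the abstract does not state the objective function, so this version uses a plain truncated coverage count, and the visibility matrix `visible` and the function name `greedy_multicover` are hypothetical. Each greedy step picks the unused candidate sensor that sees the most points still covered fewer than `k` times.

```python
import numpy as np

def greedy_multicover(visible, k):
    """Greedily choose sensors until every point is seen by at least k of them.

    visible[s, p] is True when candidate sensor s sees point p.
    Returns the indices of the chosen sensors in the order they were picked.
    """
    visible = np.asarray(visible, dtype=bool)
    n_sensors, n_points = visible.shape
    count = np.zeros(n_points, dtype=int)       # current coverage of each point
    available = np.ones(n_sensors, dtype=bool)  # each sensor is used at most once
    chosen = []
    while np.any(count < k):
        deficit = count < k                     # points that still need coverage
        gain = (visible & deficit).sum(axis=1)  # deficient points each sensor would help
        gain[~available] = -1
        s = int(np.argmax(gain))
        if gain[s] <= 0:
            raise ValueError("k-coverage is infeasible with these candidates")
        chosen.append(s)
        available[s] = False
        count += visible[s]
    return chosen

# Hypothetical example: 5 candidate sensors, 4 points, double coverage.
visible = np.array([
    [1, 1, 1, 0],
    [0, 1, 1, 1],
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
], dtype=bool)
chosen = greedy_multicover(visible, k=2)
coverage = visible[chosen].sum(axis=0)
print(chosen, coverage)
```

In a real environment the rows of `visible` would come from a visibility computation that accounts for obstacles; here they are written by hand to keep the example self-contained.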

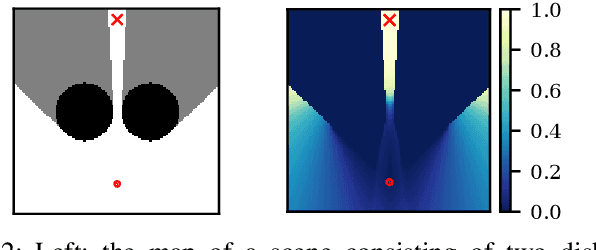

Visibility Optimization for Surveillance-Evasion Games

Oct 18, 2020

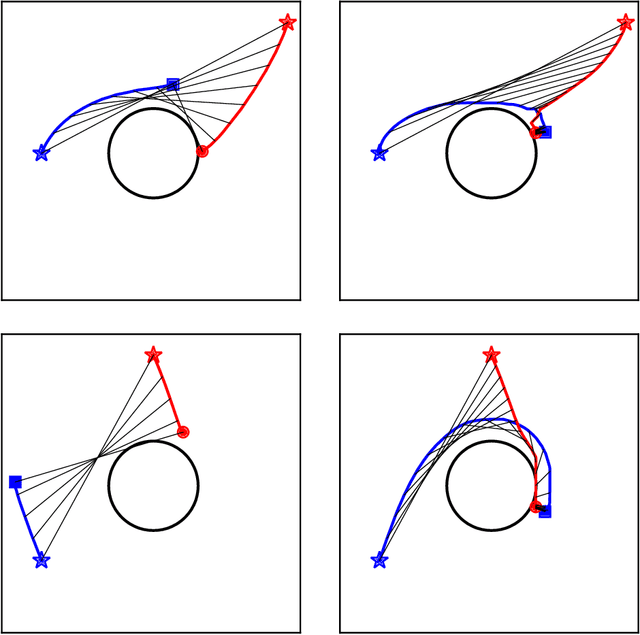

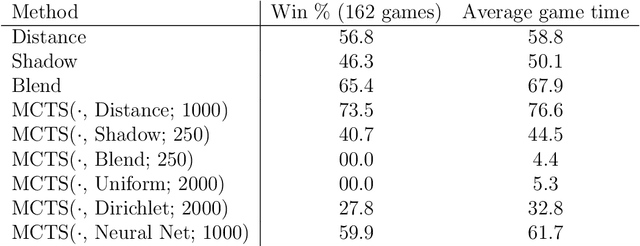

Abstract: We consider surveillance-evasion differential games, where a pursuer must try to constantly maintain visibility of a moving evader. The pursuer loses as soon as the evader becomes occluded. Optimal controls for the game can be formulated as a Hamilton-Jacobi-Isaacs equation. We use an upwind scheme to compute the feedback value function, corresponding to the end-game time of the differential game. Although the value function enables optimal controls, it is prohibitively expensive to compute, even for a single pursuer and single evader on a small grid. We consider a discrete variant of the surveillance-evasion game. We propose two locally optimal strategies based on the static value function for the surveillance-evasion game with multiple pursuers and evaders. We show that Monte Carlo tree search and self-play reinforcement learning can train a deep neural network to generate reasonable strategies for on-line game play. Given enough computational resources and offline training time, the proposed model can continue to improve its policies and efficiently scale to higher resolutions.

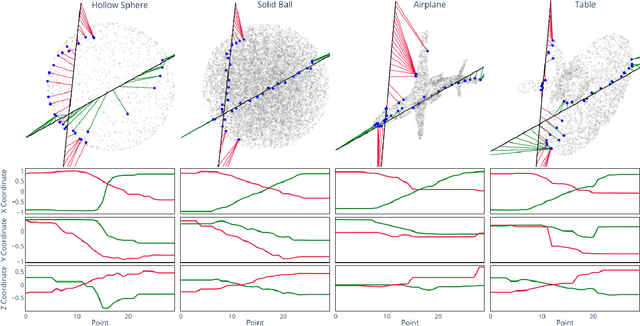

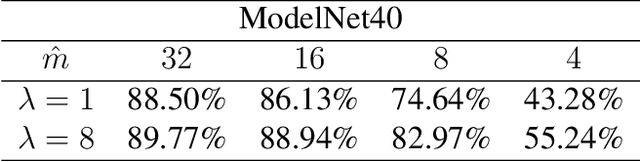

Nearest Neighbor Sampling of Point Sets using Random Rays

Nov 29, 2019

Abstract: We propose a new framework for the sampling, compression, and analysis of distributions of point sets and other geometric objects embedded in Euclidean spaces. A set of randomly selected rays is projected onto the closest points in the data set, forming the ray signature. From the signature, statistical information about the data set, as well as certain geometrical information, can be extracted, independent of the ray set. We present promising results from "RayNN", a neural network for the classification of point clouds based on ray signatures.
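A rough sketch of how a ray signature might be computed is given below. Several details are assumptions not stated in the abstract: each ray is taken to be a segment between two uniformly random points in the bounding box of the data, the ray is discretized into `n_samples` equally spaced points, and the nearest neighbor search is brute force. The function name `ray_signature` is hypothetical.

```python
import numpy as np

def ray_signature(points, n_rays=8, n_samples=16, seed=0):
    """Sample points along random rays and find each sample's nearest data point.

    Returns (samples, idx): samples has shape (n_rays, n_samples, d) and
    idx[r, j] is the index in `points` of the nearest neighbor of samples[r, j].
    """
    rng = np.random.default_rng(seed)
    points = np.asarray(points, dtype=float)
    d = points.shape[1]
    lo, hi = points.min(axis=0), points.max(axis=0)
    # Assumption: a ray is the segment between two random points in the bounding box.
    a = rng.uniform(lo, hi, size=(n_rays, d))
    b = rng.uniform(lo, hi, size=(n_rays, d))
    t = np.linspace(0.0, 1.0, n_samples)[None, :, None]
    samples = a[:, None, :] + t * (b - a)[:, None, :]
    # Brute-force squared distances from every sample to every data point.
    diff = samples[:, :, None, :] - points[None, None, :, :]
    idx = (diff ** 2).sum(axis=-1).argmin(axis=-1)
    return samples, idx

# Hypothetical point cloud: 200 Gaussian points in 3D.
cloud = np.random.default_rng(1).standard_normal((200, 3))
samples, idx = ray_signature(cloud)
signature = cloud[idx]  # the closest data points, one per ray sample
print(signature.shape)
```

The signature has a fixed shape determined by the number of rays and samples, regardless of how many points the cloud contains, which is what makes it convenient as input to a classifier. For large clouds a spatial index would replace the brute-force search.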

Autonomous Exploration, Reconstruction, and Surveillance of 3D Environments Aided by Deep Learning

Sep 17, 2018

Abstract: We study the problem of visibility-based exploration, reconstruction and surveillance in the context of supervised learning. Using a level set representation of data and information, we train a convolutional neural network to determine vantage points that maximize visibility. We show that this method drastically reduces the on-line computational cost and determines a small set of vantage points that solve the problem. This enables us to efficiently produce highly-resolved and topologically accurate maps of complex 3D environments. We present realistic simulations on 2D and 3D urban environments.

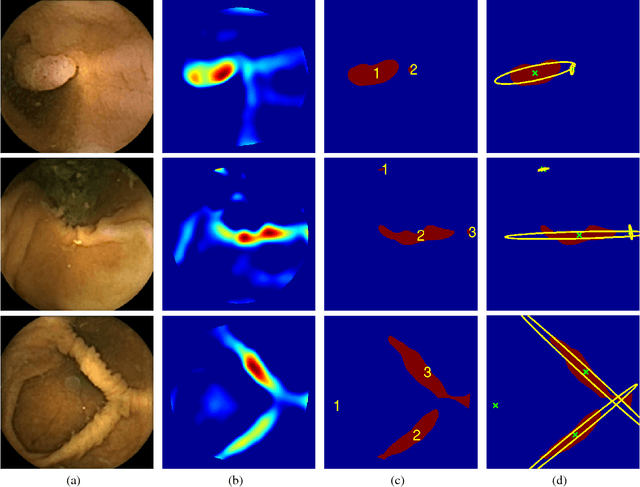

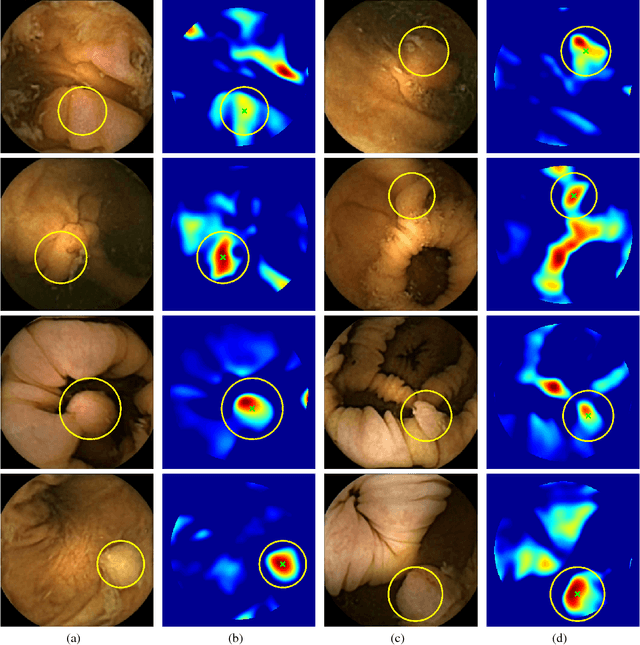

Automated polyp detection in colon capsule endoscopy

Mar 27, 2014

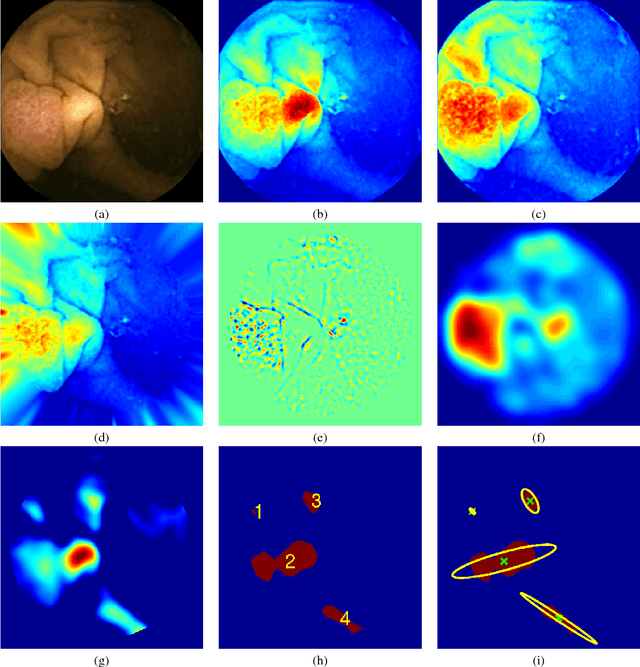

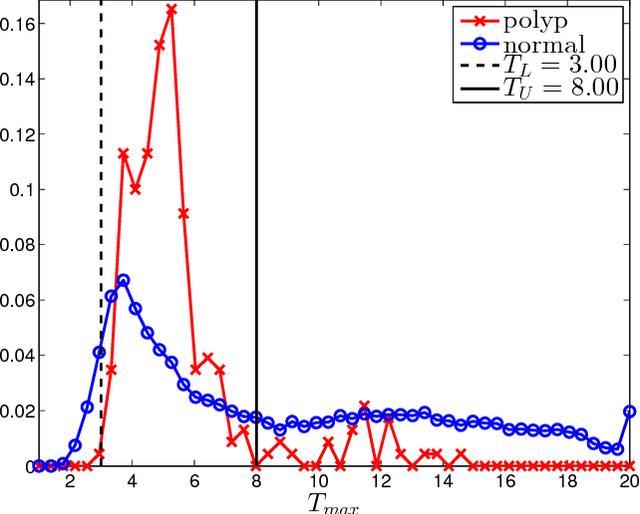

Abstract: Colorectal polyps are important precursors to colon cancer, a major health problem. Colon capsule endoscopy (CCE) is a safe and minimally invasive examination procedure, in which the images of the intestine are obtained via digital cameras on board a small capsule ingested by a patient. The video sequence is then analyzed for the presence of polyps. We propose an algorithm that relieves the labor of a human operator analyzing the frames in the video sequence. The algorithm acts as a binary classifier, which labels the frame as either containing polyps or not, based on the geometrical analysis and the texture content of the frame. The geometrical analysis is based on a segmentation of an image with the help of a mid-pass filter. The features extracted by the segmentation procedure are classified according to an assumption that the polyps are characterized as protrusions that are mostly round in shape. Thus, we use a best fit ball radius as a decision parameter of a binary classifier. We present a statistical study of the performance of our approach on a data set containing over 18,900 frames from the endoscopic video sequences of five adult patients. The algorithm demonstrates a solid performance, achieving 47% sensitivity per frame and over 81% sensitivity per polyp at a specificity level of 90%. On average, with a video sequence length of 3747 frames, only 367 false positive frames need to be inspected by a human operator.
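The last two figures in the abstract are consistent with each other, as a short check shows. The sketch below assumes that nearly all frames in a sequence are polyp-free, so that the false positive fraction over all frames is close to one minus the specificity; that assumption is not stated in the abstract, and the function name `specificity` is introduced only for illustration.

```python
def specificity(true_negatives, false_positives):
    """Fraction of polyp-free frames that are correctly labeled as polyp-free."""
    return true_negatives / (true_negatives + false_positives)

frames = 3747          # average sequence length quoted in the abstract
false_positives = 367  # false positive frames quoted in the abstract

# Share of all frames flagged in error.
fp_fraction = false_positives / frames

# Assumption: treat every frame as polyp-free to approximate the specificity.
approx_specificity = specificity(frames - false_positives, false_positives)
print(round(fp_fraction, 3), round(approx_specificity, 3))
```

Under that assumption roughly one frame in ten is flagged in error, which matches the quoted 90% specificity level.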

* 16 pages, 9 figures, 4 tables
