Ye Feng

LLaMA Pro: Progressive LLaMA with Block Expansion

Jan 04, 2024

Abstract: Humans generally acquire new skills without compromising the old; however, the opposite holds for Large Language Models (LLMs), e.g., from LLaMA to CodeLLaMA. To this end, we propose a new post-pretraining method for LLMs with an expansion of Transformer blocks. We tune the expanded blocks using only the new corpus, efficiently and effectively improving the model's knowledge without catastrophic forgetting. In this paper, we experiment on a corpus of code and math, yielding LLaMA Pro-8.3B, a versatile foundation model initialized from LLaMA2-7B that excels in general tasks, programming, and mathematics. LLaMA Pro and its instruction-following counterpart (LLaMA Pro-Instruct) achieve advanced performance across various benchmarks, demonstrating superiority over existing open models in the LLaMA family and immense potential for reasoning and addressing diverse tasks as an intelligent agent. Our findings provide valuable insights into integrating natural and programming languages, laying a solid foundation for developing advanced language agents that operate effectively in various environments.
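
The block-expansion idea in the abstract can be illustrated with a toy residual network. This is only a sketch under stated assumptions: the abstract does not say how the added blocks are initialized or where they are placed, so the code assumes each added block is a copy of the block before it with its output projection zeroed (so it starts as an identity mapping through the residual connection), and that one block is added after every `group_size` original blocks. The names `Block`, `expand_blocks`, and `group_size` are hypothetical, and the toy feed-forward block stands in for a full Transformer block.

```python
import copy
import random
from dataclasses import dataclass


@dataclass
class Block:
    """Toy residual block: x + W_out * relu(W_in * x)."""
    w_in: list            # hidden x dim weight matrix
    w_out: list           # dim x hidden weight matrix
    trainable: bool = True

    def __call__(self, x):
        hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in self.w_in]
        update = [sum(w * h for w, h in zip(row, hidden)) for row in self.w_out]
        return [xi + ui for xi, ui in zip(x, update)]


def make_block(dim, hidden, rng):
    """Create a block with random weights (stand-in for a pretrained block)."""
    w_in = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(hidden)]
    w_out = [[rng.uniform(-1, 1) for _ in range(hidden)] for _ in range(dim)]
    return Block(w_in, w_out)


def expand_blocks(blocks, group_size):
    """Freeze the original blocks and add one identity-initialized copy per group."""
    expanded = []
    for i, block in enumerate(blocks, start=1):
        frozen = copy.deepcopy(block)
        frozen.trainable = False          # original knowledge is left untouched
        expanded.append(frozen)
        if i % group_size == 0:
            added = copy.deepcopy(block)
            # Assumption: zero the output projection so the added block
            # contributes nothing at first and the model output is unchanged.
            added.w_out = [[0.0] * len(row) for row in added.w_out]
            added.trainable = True        # only added blocks would be tuned
            expanded.append(added)
    return expanded


def forward(blocks, x):
    """Run the input through every block in order."""
    for block in blocks:
        x = block(x)
    return x


rng = random.Random(0)
base = [make_block(dim=4, hidden=8, rng=rng) for _ in range(4)]
expanded = expand_blocks(base, group_size=2)
x = [0.5, -1.0, 2.0, 0.1]
same_output = forward(base, x) == forward(expanded, x)
```

Under these assumptions the expanded stack starts out computing exactly the same function as the original one, so any change in behavior comes only from tuning the added blocks on the new corpus.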

Sampling Clustering

Jun 21, 2018

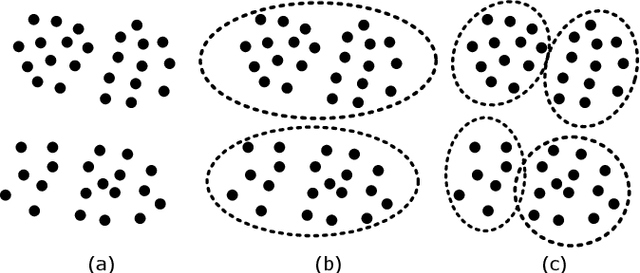

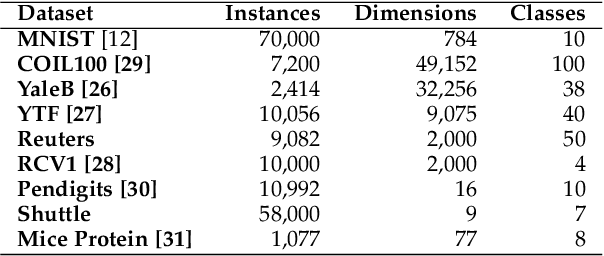

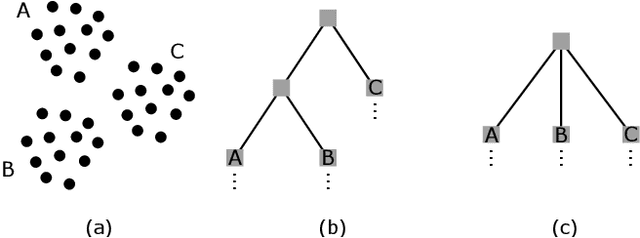

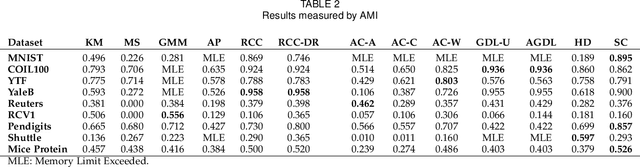

Abstract: We propose an efficient graph-based divisive cluster analysis approach called sampling clustering. It constructs a lightweight, informative dendrogram by recursively dividing a graph into subgraphs. In each recursive call, the graph is first sampled, removing a set of vertices to disconnect latent clusters, and then condensed, adding edges among the remaining vertices to avoid the graph fragmentation caused by vertex removal. We also present several sampling and condensing methods and discuss their effectiveness. Our implementations run in linear time and achieve outstanding performance on various types of datasets. Experimental results show that they outperform state-of-the-art clustering algorithms while requiring significantly fewer computing resources.
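
The recursive sample-then-condense loop described in the abstract can be sketched as follows. The abstract does not specify the sampling or condensing methods, so both rules here are hypothetical placeholders: sampling drops the vertices with the lowest local clustering coefficient, and condensing links every remaining vertex to its two-hop neighbors. The function names, `min_size`, and `drop_fraction` are likewise assumptions, and the sketch makes no attempt at the linear running time the abstract reports.

```python
from itertools import combinations


def make_graph(edges):
    """Build an undirected graph as {vertex: set of neighbors}."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def local_clustering(graph, v):
    """Fraction of neighbor pairs of v that are themselves connected."""
    nbrs = graph[v]
    if len(nbrs) < 2:
        return 0.0
    pairs = list(combinations(sorted(nbrs), 2))
    return sum(1 for a, b in pairs if b in graph[a]) / len(pairs)


def sample(graph, drop_fraction):
    """Hypothetical sampling rule: remove the least-clustered vertices."""
    ranked = sorted(graph, key=lambda v: (local_clustering(graph, v), v))
    dropped = set(ranked[:max(1, int(len(graph) * drop_fraction))])
    return {v: graph[v] - dropped for v in graph if v not in dropped}


def condense(graph):
    """Hypothetical condensing rule: connect each vertex to its two-hop neighbors."""
    condensed = {v: set(nbrs) for v, nbrs in graph.items()}
    for v, nbrs in graph.items():
        for u in nbrs:
            condensed[v] |= graph[u] - {v}
    return condensed


def components(graph):
    """Return the connected components as a list of vertex sets."""
    seen, parts = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, part = [start], set()
        while stack:
            v = stack.pop()
            if v not in part:
                part.add(v)
                stack.extend(graph[v] - part)
        seen |= part
        parts.append(part)
    return parts


def divide(graph, min_size=4, drop_fraction=0.2):
    """Recursively sample, condense, and split; returns a nested dendrogram."""
    node = {"vertices": sorted(graph), "children": []}
    if len(graph) <= min_size:
        return node
    reduced = condense(sample(graph, drop_fraction))
    for part in components(reduced):
        subgraph = {v: reduced[v] for v in part}
        node["children"].append(divide(subgraph, min_size, drop_fraction))
    return node


# Two 4-cliques joined through a single bridge vertex (4).
edges = (list(combinations(range(4), 2))
         + list(combinations(range(5, 9), 2))
         + [(3, 4), (4, 5)])
tree = divide(make_graph(edges))
clusters = [child["vertices"] for child in tree["children"]]
```

In this toy graph the bridge vertex is the one removed by the sampling step, which separates the two cliques into the two children of the root. Each call removes at least one vertex, so the recursion always terminates.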
