Ye Bi

Predicting Dairy Calf Body Weight from Depth Images Using Deep Learning (YOLOv8) and Threshold Segmentation with Cross-Validation and Longitudinal Analysis

Apr 24, 2025

Abstract:Monitoring calf body weight (BW) before weaning is essential for assessing growth, feed efficiency, health, and weaning readiness. However, labor, time, and facility constraints limit BW collection. Additionally, Holstein calf coat patterns complicate image-based BW estimation, and few studies have explored non-contact measurements taken at early time points for predicting later BW. The objectives of this study were to (1) develop deep learning-based segmentation models for extracting calf body metrics, (2) compare deep learning segmentation with threshold-based methods, and (3) evaluate BW prediction using single-time-point cross-validation with linear regression (LR) and extreme gradient boosting (XGBoost) and multiple-time-point cross-validation with LR, XGBoost, and a linear mixed model (LMM). Depth images from Holstein (n = 63) and Jersey (n = 5) pre-weaning calves were collected, with 20 Holstein calves being weighed manually. Results showed that You Only Look Once version 8 (YOLOv8) deep learning segmentation (intersection over union = 0.98) outperformed threshold-based methods (0.89). In single-time-point cross-validation, XGBoost achieved the best BW prediction (R^2 = 0.91, mean absolute percentage error (MAPE) = 4.37%), while LMM provided the most accurate longitudinal BW prediction (R^2 = 0.99, MAPE = 2.39%). These findings highlight the potential of deep learning for automated BW prediction, enhancing farm management.
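The two metrics reported in this abstract, intersection over union (for segmentation) and MAPE (for BW prediction), can be illustrated with a minimal sketch. It uses the standard textbook definitions; the function names, the nested-list mask format, and the sample values are hypothetical and are not taken from the study's code or data.

```python
def iou(pred_mask, true_mask):
    """Intersection over union of two binary masks (nested lists of 0/1).

    Assumption: both masks have the same shape. Returns 1.0 when both
    masks are empty, which is a convention chosen for this sketch.
    """
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    return intersection / union if union else 1.0


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Assumption: no actual value is zero (true for body weights).
    """
    errors = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(errors) / len(errors)


# Hypothetical toy inputs, for illustration only.
example_iou = iou([[1, 1], [0, 0]], [[1, 0], [0, 0]])
example_mape = mape([100.0, 200.0], [90.0, 220.0])
```

In the toy example, one of the two predicted pixels overlaps the ground truth, and both predictions are off by 10% of the measured weight.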

Depth video data-enabled predictions of longitudinal dairy cow body weight using thresholding and Mask R-CNN algorithms

Jul 03, 2023

Abstract:Monitoring cow body weight is crucial to support farm management decisions due to its direct relationship with the growth, nutritional status, and health of dairy cows. Cow body weight is a repeated trait; however, the majority of previous body weight prediction research used data collected at only a single point in time. Furthermore, the utility of deep learning-based segmentation for body weight prediction using videos remains unclear. Therefore, the objectives of this study were to predict cow body weight from repeatedly measured video data, to compare the performance of the thresholding and Mask R-CNN deep learning approaches, to evaluate the predictive ability of body weight regression models, and to promote open science in the animal science community by releasing the source code for video-based body weight prediction. A total of 40,405 depth images and depth map files were obtained from 10 lactating Holstein cows and 2 non-lactating Jersey cows. Three approaches were investigated to segment the cow's body from the background: single thresholding, adaptive thresholding, and Mask R-CNN. Four image-derived biometric features, namely dorsal length, abdominal width, height, and volume, were estimated from the segmented images. On average, the Mask R-CNN approach combined with a linear mixed model resulted in the best prediction coefficient of determination and mean absolute percentage error of 0.98 and 2.03%, respectively, in the forecasting cross-validation. The Mask R-CNN approach was also the best in the leave-three-cows-out cross-validation. In that setting, the prediction coefficient of determination and mean absolute percentage error of Mask R-CNN coupled with the linear mixed model were 0.90 and 4.70%, respectively. Our results suggest that deep learning-based segmentation improves the prediction performance of cow body weight from longitudinal depth video data.

A Multimodal Late Fusion Model for E-Commerce Product Classification

Aug 14, 2020

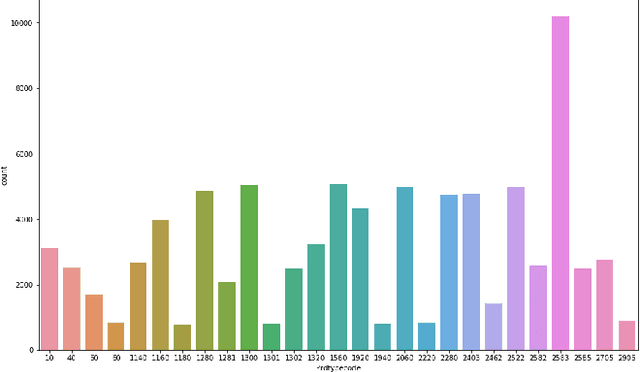

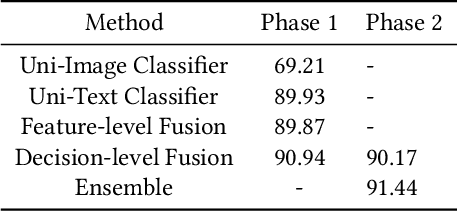

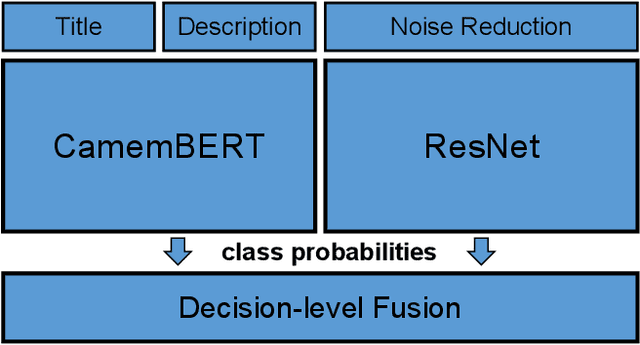

Abstract:The cataloging of product listings is a fundamental problem for most e-commerce platforms. Despite the promising results obtained by unimodal methods, their performance can be expected to improve further when multimodal product information is considered. In this study, we investigated a multimodal late fusion approach based on text and image modalities to categorize e-commerce products on Rakuten. Specifically, we developed modality-specific state-of-the-art deep neural networks for each input modality, and then fused them at the decision level. Experimental results on the Multimodal Product Classification Task of the SIGIR 2020 E-Commerce Workshop Data Challenge demonstrate the superiority and effectiveness of our proposed method compared with unimodal and other multimodal methods. Our team, named pa_curis, won 1st place with a macro-F1 of 0.9144 on the final leaderboard.
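Decision-level (late) fusion, as described in this abstract, combines the outputs of separately trained models rather than their internal features. The sketch below shows one common variant, a weighted average of per-class probabilities. The abstract does not state which fusion rule the authors used, so the averaging rule, the `text_weight` parameter, and the sample probabilities are all assumptions made for illustration.

```python
def late_fusion(text_probs, image_probs, text_weight=0.5):
    """Fuse two per-class probability vectors at the decision level.

    Assumption: weighted averaging is used as the fusion rule. This is
    one common choice, not necessarily the rule used in the paper.
    Returns (index of the predicted class, fused probabilities).
    """
    fused = [
        text_weight * t + (1.0 - text_weight) * i
        for t, i in zip(text_probs, image_probs)
    ]
    total = sum(fused)
    fused = [f / total for f in fused]  # renormalize so the scores sum to 1
    best_class = max(range(len(fused)), key=fused.__getitem__)
    return best_class, fused


# Hypothetical outputs from a text model and an image model over 3 classes.
text_probs = [0.6, 0.3, 0.1]
image_probs = [0.2, 0.7, 0.1]
equal_vote, _ = late_fusion(text_probs, image_probs)
text_heavy_vote, _ = late_fusion(text_probs, image_probs, text_weight=0.9)
```

With equal weights the image model's confident vote for class 1 wins; shifting most of the weight to the text model moves the decision to class 0, which shows how the fusion weight trades off the two modalities.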

A Hybrid BERT and LightGBM based Model for Predicting Emotion GIF Categories on Twitter

Aug 14, 2020

Abstract:Animated Graphics Interchange Format (GIF) images have been widely used on social media as an intuitive way of expressing emotion. Given their expressiveness, GIFs offer a more nuanced and precise way to convey emotions. In this paper, we present our solution for the EmotionGIF 2020 challenge, the shared task of SocialNLP 2020. To recommend GIF categories for unlabeled tweets, we treated the problem as a matching task and proposed a learning-to-rank framework based on Bidirectional Encoder Representations from Transformers (BERT) and LightGBM. Our team placed 4th with a Mean Average Precision @ 6 (MAP@6) score of 0.5394 on the round 1 leaderboard.
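For readers unfamiliar with the MAP@6 score mentioned above, the following sketch computes mean average precision at a cutoff `k` using one widely used definition. The challenge's official scorer may differ in details such as normalization, so this should be read as an illustration; the function names and the category labels in the example are hypothetical.

```python
def average_precision_at_k(predicted, relevant, k=6):
    """Average precision at k for one ranked list of predictions.

    Assumption: normalization by min(k, number of relevant items), a
    common convention that may not match the challenge's scorer.
    """
    if not relevant:
        return 0.0
    hits = 0
    score = 0.0
    seen = set()
    for rank, item in enumerate(predicted[:k], start=1):
        if item in relevant and item not in seen:
            seen.add(item)
            hits += 1
            score += hits / rank  # precision at this rank
    return score / min(k, len(relevant))


def map_at_k(all_predicted, all_relevant, k=6):
    """Mean of average_precision_at_k over all samples."""
    scores = [
        average_precision_at_k(p, r, k)
        for p, r in zip(all_predicted, all_relevant)
    ]
    return sum(scores) / len(scores)


# Hypothetical ranked category predictions for two tweets.
predictions = [["agree", "hug", "applause"], ["shrug", "yes"]]
gold = [{"agree", "applause"}, {"yes"}]
example_score = map_at_k(predictions, gold, k=6)
```

The metric rewards placing correct categories near the top of the ranked list, which is why a learning-to-rank formulation is a natural fit for this task.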

UBER-GNN: A User-Based Embeddings Recommendation based on Graph Neural Networks

Aug 06, 2020

Abstract:Session-based recommendation aims to predict users' next actions based on session histories. Previous methods model session histories as sequences and estimate user latent features with RNN and GNN methods to make recommendations. However, under massive-scale and complicated financial recommendation scenarios with both virtual and real commodities, such methods are not sufficient to represent accurate user latent features and neglect the long-term characteristics of users. To take long-term preferences and dynamic interests into account, we propose a novel method, User-Based Embeddings Recommendation with Graph Neural Network, or UBER-GNN for brevity. UBER-GNN takes advantage of structured data to generate long-term user preferences, and transforms session sequences into graphs to generate graph-based dynamic interests. The final user latent feature is then represented as the composition of the long-term preferences and the dynamic interests using an attention mechanism. Extensive experiments conducted on a real Ping An scenario show that UBER-GNN outperforms state-of-the-art session-based recommendation methods.
